Articles & case studies

6 Secrets to Supplier Selection Criteria

Good stewardship of supplier data is foundational to every Enterprise Resource Planning (ERP) transformation. Selection criteria are the set of rules that determine which records from your legacy ERP solution need to be converted into the new ERP system. Strategizing supplier selection will start your organization on the path to a successful ERP implementation. Keep reading to learn the secrets to robust supplier selection.

1. Balance Competing Methodologies

Competing methodologies will be present throughout your ERP transformation. Comparing the pros and cons of different methodologies is the first step to determining which approach is best for your organization.

Should selection criteria be wide or narrow?

- If selection is too wide, unneeded data could find its way to the new system. If selection is too narrow, last-minute manual entries may be needed to close gaps.

Will legacy data cleanup be automated or manual?

- Manual data cleanup can be time consuming, while automating it could result in an overengineered or hard-to-maintain conversion program.

Should development follow a Waterfall or Agile methodology?

- A Waterfall approach could be easier to plan than Agile, but Agile could account for a progressively better understanding of the data landscape.

No one-size-fits-all answer exists for the questions above, but asking them early in the project allows you to make informed decisions.

2. Question Legacy Data

Decades-old software solutions do not have the built-in validation that modern solutions have. Activity flags are notoriously inaccurate in legacy systems, and suppliers flagged as inactive may still appear on open transactions. Restrictive field widths in the legacy system might have led to truncated or abbreviated data, including supplier names. Evolving business requirements may have led to some legacy fields being repurposed, for example, storing email addresses in a fax field. Profiling and researching the legacy data will reveal these surprises early and allow time to devise appropriate workarounds.
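As a rough illustration of that profiling, the Python sketch below flags three of the surprises described above. It assumes the legacy supplier records have already been extracted into dictionaries. The field names (`supplier_id`, `status`, `name`, `fax`), the `INACTIVE` status code, and the 30-character name width are hypothetical placeholders for whatever your legacy system actually uses.

```python
import re

# Loose pattern used only to spot repurposed fields, not to validate email addresses.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def profile_suppliers(suppliers, open_txn_supplier_ids, name_width=30):
    """Return (supplier_id, finding) tuples that deserve research before conversion."""
    findings = []
    for s in suppliers:
        sid = s["supplier_id"]
        # Inactive flag contradicted by open transactions.
        if s["status"] == "INACTIVE" and sid in open_txn_supplier_ids:
            findings.append((sid, "inactive but on open transactions"))
        # Repurposed field: an email address sitting in the fax column.
        if EMAIL_PATTERN.match(s.get("fax", "").strip()):
            findings.append((sid, "email address stored in fax field"))
        # A name that fills the whole column may have been truncated.
        if len(s["name"]) >= name_width:
            findings.append((sid, "name fills field width; possibly truncated"))
    return findings


suppliers = [
    {"supplier_id": "S1", "status": "INACTIVE", "name": "ACME", "fax": "ap@acme.com"},
    {"supplier_id": "S2", "status": "ACTIVE", "name": "X" * 30, "fax": "555-1234"},
]
for finding in profile_suppliers(suppliers, open_txn_supplier_ids={"S1"}):
    print(finding)
```

Each finding is a prompt for research rather than an automatic fix. For example, a name that exactly fills the field may be perfectly legitimate.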

3. Consider Historical Data Requirements

Historical data requirements will significantly impact supplier selection. For example, if 2 years of historical Accounts Payable invoices are being converted, then every supplier associated with those invoices must also be converted as an active vendor, even if it is inactive in the legacy system. This could introduce significant manual work later in the project, when those vendors need to be flipped from active to inactive. Additionally, historical data requirements increase the volume and processing time of data conversion, which directly impacts the cutover window.

4. Prioritize Open Transactions

Open Purchase Orders and open Accounts Payable invoices provide an excellent starting point for supplier selection criteria. By considering master data and transaction data selection criteria together, you help ensure that your conversion approach is internally consistent. Understanding how these modules interact and depend on one another also encourages the business and developers to build reusable code and to document shared sets of rules in a single place. Considering open transactions has one more benefit: it helps shake out data exceptions, like orphaned records or old invoices that were incorrectly left open, so these issues can be addressed before moving to your new ERP system.
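A minimal sketch of selection criteria driven by open transactions might look like the following. It assumes a supplier is in scope if it meets any one of these conditions:

- it is active in the legacy system;
- it appears on an open PO or an open AP invoice;
- it appears on a historical invoice inside the conversion window discussed in section 3.

The record layouts and the `history_years` parameter are hypothetical, and the window uses 365-day years as an approximation. Suppliers referenced by transactions but missing from the supplier master are returned separately as orphans.

```python
from datetime import date, timedelta


def select_suppliers(suppliers, open_pos, open_invoices, invoices, as_of, history_years=2):
    """Return ({supplier_id: reason}, orphan_ids) under hypothetical selection rules."""
    # Approximate the history window with 365-day years.
    cutoff = as_of - timedelta(days=365 * history_years)
    selected = {}
    for s in suppliers:
        if s["status"] == "ACTIVE":
            selected[s["supplier_id"]] = "active in legacy"
    # setdefault keeps the first reason found for each supplier.
    for po in open_pos:
        selected.setdefault(po["supplier_id"], "open purchase order")
    for inv in open_invoices:
        selected.setdefault(inv["supplier_id"], "open AP invoice")
    for inv in invoices:
        if inv["invoice_date"] >= cutoff:
            selected.setdefault(inv["supplier_id"], "historical invoice in scope")
    # Suppliers referenced by transactions but absent from the master are orphans.
    known = {s["supplier_id"] for s in suppliers}
    orphans = sorted(set(selected) - known)
    return selected, orphans
```

Keeping the reason alongside each selected supplier makes it easier to explain why an inactive legacy supplier was converted.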

5. Understand Structural Changes

A firm understanding of the structural changes happening to Supplier data will aid reconciliation and final signoff of the converted data. No modern Supplier conversion results in data that has a one-to-one relationship to the legacy data: legacy solutions are rarely as normalized as modern ones, supplier sites may be used differently, and their corresponding contacts may be populated in a novel manner.

Even top-level supplier data will not correspond directly across the legacy and target systems, because value-adding supplier deduplication merges multiple legacy suppliers into a single supplier in the new system. Cross-references of the supplier data, including insights into how legacy records have been merged (as with deduplication) or split apart (as with site or contact information), will be critical to a thorough validation and reconciliation of converted data.
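To make that cross-reference concrete, here is a hedged sketch of a deduplication crosswalk. The match rule (tax ID plus a normalized name with common suffixes such as INC or LLC removed) and the `SUP-00001` numbering are hypothetical. Real match rules are usually fuzzier and are reviewed by the business. The output pairs every legacy ID with its surviving target supplier, which supports reconciliation counts in both directions.

```python
import re


def match_key(supplier):
    """Hypothetical match rule: tax ID plus a normalized supplier name."""
    name = re.sub(r"[^A-Z0-9 ]", "", supplier["name"].upper())  # drop punctuation
    name = re.sub(r"\b(INC|LLC|CORP|CO)\b", "", name)  # drop common legal suffixes
    name = " ".join(name.split())  # collapse whitespace
    return (supplier.get("tax_id", "").strip(), name)


def build_crosswalk(legacy_suppliers):
    """Return (legacy_id, target_supplier_number) pairs; duplicates share a target."""
    survivors = {}  # match key -> surviving target supplier number
    crosswalk = []
    for s in sorted(legacy_suppliers, key=lambda r: r["legacy_id"]):
        key = match_key(s)
        if key not in survivors:
            survivors[key] = f"SUP-{len(survivors) + 1:05d}"
        crosswalk.append((s["legacy_id"], survivors[key]))
    return crosswalk


legacy = [
    {"legacy_id": "L1", "name": "Acme, Inc.", "tax_id": "12-345"},
    {"legacy_id": "L2", "name": "ACME INC", "tax_id": "12-345"},
    {"legacy_id": "L3", "name": "Beta Co", "tax_id": "98-765"},
]
for legacy_id, target in build_crosswalk(legacy):
    print(legacy_id, "->", target)
```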

6. Employ a Cross-Functional Approach

In his book Conscious Capitalism, Whole Foods co-founder John Mackey emphasizes the importance of supplier relationships in creating a responsible and successful organization. Extending Mackey’s idea, realize that good supplier relationships start with good data. Supplier data will not reside in one place; it will be scattered across separate tables, modules, and databases, so a cross-functional approach is needed to bring it all together. Avoid gaps in your supplier conversion strategy by identifying and including the right stakeholders starting with the earliest design sessions. At the very least, involve both the Payables team and the Supply Chain team in building a holistic understanding of Supplier data. Depending on your company and industry, additional teams may be needed, such as Lease Accounting, Patient Financial Services, Maintenance, or something else. Major transformations may even employ multiple technologies and implementation teams, like Oracle Cloud for ERP and Ivalua for procurement. Collaborating across tracks will reveal countless ways to make Supplier data serve your business and your corporate mission better than ever before.

5 Dangers of Oracle’s Quarterly Release and How to Address Them

Oracle releases quarterly patches for Oracle Fusion Cloud ERP (Enterprise Resource Planning) that expand functionality, resolve bugs, and update security. The modules being updated, the nature of the changes, and the overall complexity of the patch vary each quarter, which makes it difficult for businesses to predict the impact on an Oracle Cloud implementation.

Impact on Data Conversion

Oracle patches impact data conversion because File-Based Data Import (FBDI) processes and templates evolve to meet expanding customer needs. Your implementation team must account for changes to the load process and templates. If these changes are ignored, project delays will ensue as unexpected load errors emerge, records fail to insert into Oracle, and data appears missing or misplaced in Oracle’s user interface (UI). We have identified 5 major risks posed by Oracle’s quarterly patch, along with 5 solutions that will drive your business forward and avoid data migration failure.

Risk 1: Implementing the patch requires system downtime

Solution: Plan your project schedule and data loads around Oracle patches

Before your project starts, review Oracle’s published schedule so you know when the quarterly patches will take place. Understand that Oracle staggers the application of the quarterly patch: it is applied to test environments first and to production later. Plan your implementation test cycles around these patch dates so that the patch will not interrupt test cycle loads. This is especially important for 24/7 schedules like those for User Acceptance Testing (UAT) and the dry run cutover. If the quarterly patch is unavoidably scheduled over your test cycle, plan loads ahead of time to ensure that all scheduled processes complete before the patch begins, and do not resume loads until your Oracle contact confirms that the patch is complete in the environment you are loading into.
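One way to sanity-check a test-cycle plan against the staggered patch calendar is a simple interval-overlap test per environment. In this sketch the environment names, load names, and patch windows are hypothetical. The real dates would come from Oracle’s published schedule for your environments.

```python
from datetime import datetime


def overlaps(a_start, a_end, b_start, b_end):
    """True when two [start, end) windows share any time."""
    return a_start < b_end and b_start < a_end


def conflicting_loads(loads, patch_windows):
    """Return names of loads that overlap the patch window of their own environment."""
    conflicts = []
    for load in loads:
        window = patch_windows.get(load["env"])
        if window and overlaps(load["start"], load["end"], *window):
            conflicts.append(load["name"])
    return conflicts


# Hypothetical dates: the patch reaches TEST two weeks before PROD.
patch_windows = {
    "TEST": (datetime(2024, 2, 2, 18), datetime(2024, 2, 4, 6)),
    "PROD": (datetime(2024, 2, 16, 18), datetime(2024, 2, 18, 6)),
}
loads = [
    {"name": "UAT suppliers", "env": "TEST", "start": datetime(2024, 2, 3, 8), "end": datetime(2024, 2, 3, 20)},
    {"name": "UAT invoices", "env": "TEST", "start": datetime(2024, 2, 5, 8), "end": datetime(2024, 2, 5, 20)},
]
print(conflicting_loads(loads, patch_windows))  # ['UAT suppliers']
```

Matching on environment matters because the same calendar dates can be safe in production while conflicting in test.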

Risk 2: Changes to File-Based Data Import Templates (FBDIs)

Solution: Review updates to the FBDI spreadsheets before the patch is applied.

Oracle releases the latest File-Based Data Import (FBDI) templates for each patch before the update takes place in the system. Most FBDIs will not change between patches, but for any that do, the new template must be incorporated into your conversion process before the patch is applied. FBDI changes include added columns, removed columns, and updated fields. If the correct FBDI template is not used after the patch is applied, data might be loaded into incorrect fields. As soon as Oracle releases the updated FBDI templates, compare them with the versions you are currently using and identify any changes. Automated tools to perform the quarterly FBDI comparisons are part of Definian’s data migration services.
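The comparison step can be sketched as a diff of column headers between the template version you use today and the newly released one. This sketch assumes you have already read each template’s header row into a list of unique column names (for example, with a spreadsheet library). The column names below are hypothetical. Position changes are reported too, because a conversion that writes columns by position would silently shift data into the wrong fields.

```python
def diff_template_columns(old_columns, new_columns):
    """Compare two header lists (assumed unique) and report added, removed, and moved columns."""
    old_pos = {c: i for i, c in enumerate(old_columns)}
    new_pos = {c: i for i, c in enumerate(new_columns)}
    return {
        "added": [c for c in new_columns if c not in old_pos],
        "removed": [c for c in old_columns if c not in new_pos],
        # (column, old position, new position) for columns whose position shifted.
        "moved": [
            (c, old_pos[c], new_pos[c])
            for c in new_columns
            if c in old_pos and old_pos[c] != new_pos[c]
        ],
    }


old = ["Supplier Name", "Supplier Number", "Tax Organization Type"]
new = ["Supplier Name", "Alternate Name", "Supplier Number", "Tax Organization Type"]
print(diff_template_columns(old, new))
```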

Risk 3: List of Values (LOV) Changes

Solution: Extract and review configuration prior to loading data

Many fields in Oracle are driven by a list of values (LOV). If you try to load a value into one of these fields that does not exactly match any of the configured values in the LOV, the record will fail to load and an invalid value error will be returned. An Oracle patch may change the expected list of values for one of these fields. The best way to prevent invalid LOV load errors is to query the backend Oracle tables using Oracle Business Intelligence Publisher (BI Publisher) before loading. Once you extract the list of acceptable values from the Oracle configuration tables, you can validate the values being passed through conversion against them. This exercise confirms that the values in each field are acceptable and will not cause records to fail to load.
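Assume the acceptable values have been exported (for example, from a BI Publisher report) into a mapping of field name to allowed values. The validation itself can then be a straightforward exact-match check. The field names and values below are hypothetical. Blank values are skipped on the assumption that required-field checks are handled separately. The comparison is case-sensitive to mirror the exact-match behavior described above.

```python
def validate_lov(records, allowed_values):
    """Return (row_index, field, value) for each value missing from the extracted LOV."""
    errors = []
    for i, rec in enumerate(records):
        for field, allowed in allowed_values.items():
            value = rec.get(field)
            # Blanks are left to separate required-field checks.
            if value in (None, ""):
                continue
            # Exact, case-sensitive match against the configured values.
            if value not in allowed:
                errors.append((i, field, value))
    return errors


allowed = {"PAYMENT_METHOD": {"CHECK", "EFT", "WIRE"}, "SUPPLIER_TYPE": {"SUPPLIER", "EMPLOYEE"}}
records = [
    {"PAYMENT_METHOD": "EFT", "SUPPLIER_TYPE": "SUPPLIER"},
    {"PAYMENT_METHOD": "Check", "SUPPLIER_TYPE": ""},
]
print(validate_lov(records, allowed))  # [(1, 'PAYMENT_METHOD', 'Check')]
```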

Risk 4: Changes to load process

Solution: Test load one record before initiating a full load

A less common, but highly impactful, update in an Oracle patch is a change to a load process. You should be informed of these kinds of updates before your next data load and have an updated load procedure ready.

In the past year, an Oracle patch eliminated the primary method for loading manufacturers. At that time, we were in the middle of a project, and the CSV import for manufacturers that we had used since the beginning was no longer an option. Luckily, we were able to load manufacturers into production early using the original loading method. Otherwise, we would have had to switch to a different manufacturer load template, which would have required changing the entire conversion program.

Updates like this one carry more risk for your next load. To avoid issues in the mass data load, test load one record before kicking off a full load of an entire conversion object. Make sure the record goes through the load process successfully, and then confirm that it looks as expected in the front end as well.

Risk 5: Added cutover risk

Solution: Do not load production data immediately following a new patch

Given all the risks outlined above, the biggest risk to your project would be having your system undergo an Oracle patch immediately before cutover without being able to test the update in a non-production environment. When planning your project, make sure that you can test the latest Oracle patch in your final test cycle environment. Do not make any updates to Oracle that you are unable to test in a different environment before performing the load in production. Fortunately, Oracle’s quarterly patch follows a predictable schedule, so project leadership will know in advance if the release coincides with go-live and can plan accordingly.

Benefits of Oracle’s Quarterly Patch

The handful of risks associated with Oracle's quarterly patches are far outweighed by the benefits they bring to your organization. Many patches include functionality that has been newly implemented or enhanced based on customer service requests, which could include changes your own organization has requested! The quarterly cadence keeps your organization running on the latest and greatest version of Oracle Fusion Cloud ERP, particularly around data security. The workplace is constantly evolving, and cyber threats are always lurking. Implementing Oracle Fusion Cloud ERP and taking advantage of its quarterly patches is one way to future-proof your organization.

Throw Some Data Stewards At It

"Gonna' throw some data stewards at it!"

I'm not exactly sure what that means, but that was a response I received regarding a $3B company's MDM plan. While data stewards are not the be-all and end-all of a data management plan, I applaud the organization for recognizing the data troubles it currently faces. It's a big initial step in thinking about the value that data brings to the organization. It's a recognition of how poor data standards have led to costly headaches as they modernize their application and data landscape. It is also the start of a cultural shift that I hope continues to evolve.

How does one start that cultural shift around data without first having to live through that pain?

There isn't an easy answer to the question, but many organizations of all sizes and stripes don't have defined data governance and data management functions. They let the applications do what they do, the people use them how they use them, and the data ends up how it ends up.

If your organization lacks that cultural emphasis on data and its value as an asset, here are a few ideas that may spark conversations and potentially a culture shift. If your company is thinking about implementing a new business application in the next 1 to 3 years, it's a golden opportunity to institute data governance operations.

Inform your company that data is the most cited reason for implementation delay, cost overrun, and project pain. Data has a high potential to increase implementation costs by millions. This is backed by Gartner, Forrester, or anyone that has lived through a major implementation.

Next, I would talk through how the firm can mitigate that risk and ease future pain by starting to implement data quality and data standards today. The clean-up work will need to be done regardless. With that head start, you can potentially light a spark that builds out the policies, mechanisms, and attitudes about the value of data across the organization.

Not only will you mitigate risks and lower the costs of the implementation, you will also dramatically improve current business operations. I read a McKinsey report that found organizations spend 30% of their time doing extra work because of data issues and data management inefficiencies.

Additionally, ask how the organization is going to keep its data from getting as bad as it is today after go-live. Depending on the type of data, it will degrade by between 4% and 30% each year.

Without clear data management in place, in 5 years the data will, in the best-case scenario, be only ~80% as clean as it needs to be. That degradation will have a costly impact similar to that of today's bad data and will start to negate the savings and process improvements that the new application is supposed to yield.
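The ~80% figure follows from compounding the low end of that range. The sketch below assumes the data starts fully clean at go-live and loses a constant share of its remaining clean records each year. Under that assumption, the clean share after a number of years is (1 - rate) raised to that number of years.

```python
def remaining_clean_share(annual_decay_rate, years):
    """Share of records still accurate after `years`, assuming the same
    annual decay rate is applied to whatever is still clean (compounding)."""
    return (1 - annual_decay_rate) ** years


for rate in (0.04, 0.30):
    print(f"{rate:.0%} per year -> {remaining_clean_share(rate, 5):.1%} clean after 5 years")
```

At 4% per year this gives about 81.5% after 5 years. At 30% per year, only about 16.8% of the data would still be clean.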

If your company is bumping along on its current systems with no plans to change course, guess what? It's also a golden time to spark the conversation.

Start to identify and talk through how many data quality issues and inefficiencies the team has to fight through on a daily basis. Identify the additional value and insights the organization could gain if its data were properly managed. That 30% stat from McKinsey is valid here. Be that data champion, and start small on one aspect of the data landscape. Supplier, customer, or address data might be an easy lift with high impact.

On one project, the client told us that cleansing and de-duplicating their supplier data would save them approximately 30% on their raw material costs. Holy Buckets! This was for a Fortune 500 organization, so for a few months of work, we'd conservatively be able to save this organization millions of dollars each year.

As for the organization at the beginning of this piece that is throwing some data stewards at its data, we'll see how it plays out over the coming months. They're open to learning more about data management and data governance, and that open mind is incredible and will, without a doubt, lead to great success in the years ahead.

PODCAST: Evolving an Employee-First AND Client-First Culture

On the For Love of Team podcast with Winston Faircloth, Premier International (now Definian) CEO Craig Wood provides a forthright account of the company's history, employee culture, overarching value system, and approach to handling unforeseen circumstances. The listener gets a unique lens into the tools company leadership has used to drive the organization’s growth and award-winning culture, such as the tenets of The Definian Way, radical candor, gratitude, and self-awareness.

In keeping with the company's foundation and guiding principles, Craig celebrates Premier International's founders for creating the company on an intentional ethical groundwork that set the precedent for today’s simultaneously employee-first and client-first culture.


Oracle Cloud Implementation Success for a Global Hospital System

One of the world’s largest medical centers aimed to consolidate their ERP systems across hospital branches onto a single, integrated data platform. The purpose was to improve patient care and to be equipped to handle higher patient volume by achieving the following: modernization and optimization of business operations, standardization of new acquisition onboarding, and the enhancement of analytical insight capability. They selected Oracle ERP Cloud as their new technological platform and engaged Definian to support the data conversion efforts. As one of the first healthcare systems to adopt the full Oracle ERP Cloud solution, the client faced many unique challenges; however, Definian’s methodology and Applaud® technology minimized the risk associated with data migration and paved the way for a successful implementation.

Freeing the Hospital to Focus on Validation

The client was initially responsible for loading data into Oracle Cloud, but did not know how to execute Oracle loads or how to analyze the accompanying load logs. Recognizing Definian’s experience in loading data to Oracle Cloud for other large organizations, the client asked Definian to perform data loads in addition to the other data conversion responsibilities. This lifted a significant cost burden from the client, allowing them to focus on data validation and testing in the Oracle Cloud environment.

High Data Volume

Conversion requirements stated that a large volume of historical data needed to be migrated for auditing purposes. Definian worked with the client to outline strategies to best address the large data volumes. From a conversion standpoint, we grouped and aggregated historical data by the month or the year using our Applaud® software, thereby decreasing data volume and easing validation efforts. For production loads, Definian worked with the client to create a schedule that ensured most of their data would be loaded prior to Cutover, allowing more time for urgent tasks closer to go-live. Definian also optimized the load processing times by tweaking Oracle’s standard settings, which improved load efficiency by up to 500%.
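Aggregating historical detail by month can be sketched as a group-by with a record count and control total per group. The grouping key (`account`) and the record layout are hypothetical, since the case study does not specify which fields were used. This is a generic Python illustration, not how Applaud performs the aggregation. `Decimal` is used so that monetary totals do not pick up floating-point rounding.

```python
from collections import defaultdict
from datetime import date
from decimal import Decimal


def summarize_by_month(transactions):
    """Collapse detail rows into {(account, 'YYYY-MM'): (row_count, total_amount)}."""
    totals = defaultdict(lambda: [0, Decimal("0")])
    for t in transactions:
        key = (t["account"], t["date"].strftime("%Y-%m"))
        totals[key][0] += 1
        totals[key][1] += t["amount"]
    return {key: (count, amount) for key, (count, amount) in sorted(totals.items())}


detail = [
    {"account": "5000", "date": date(2019, 1, 5), "amount": Decimal("10.00")},
    {"account": "5000", "date": date(2019, 1, 20), "amount": Decimal("2.50")},
    {"account": "5000", "date": date(2019, 2, 1), "amount": Decimal("1.00")},
]
summary = summarize_by_month(detail)
# Control total: aggregation must not change the overall amount.
assert sum(a for _, a in summary.values()) == sum(t["amount"] for t in detail)
```

Comparing summed totals against detail totals before loading is a quick way to confirm that aggregation did not drop or double-count anything.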

Master Data Management

Supplier and customer addresses from the client’s legacy systems were inconsistently formatted and contained user-input errors. Definian’s address standardization process normalized and validated addresses while providing audit trails, ensuring transparency every step of the way.
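A much-simplified, rule-based version of address standardization with an audit trail is sketched below. The suffix abbreviations, the US ZIP code pattern, and the audit tuple layout are hypothetical. Production standardization usually also validates against postal reference data, which this sketch does not do. Every change, and every address that fails validation, is appended to the audit log so the business can review exactly what was altered.

```python
import re

# Hypothetical subset of street-suffix abbreviations.
SUFFIXES = {"STREET": "ST", "AVENUE": "AVE", "ROAD": "RD", "BOULEVARD": "BLVD"}
US_ZIP = re.compile(r"^\d{5}(-\d{4})?$")


def standardize_address(record_id, address, audit):
    """Return a standardized copy of a US-style address; log changes and failures to `audit`."""
    result = dict(address)
    # Uppercase, drop periods, collapse whitespace, abbreviate known suffixes.
    tokens = address["line1"].upper().replace(".", "").split()
    result["line1"] = " ".join(SUFFIXES.get(t, t) for t in tokens)
    result["postal_code"] = address["postal_code"].strip()
    for field in ("line1", "postal_code"):
        if result[field] != address[field]:
            audit.append((record_id, field, address[field], result[field]))
    if not US_ZIP.match(result["postal_code"]):
        audit.append((record_id, "postal_code", result["postal_code"], "INVALID"))
    return result


audit = []
print(standardize_address("C1", {"line1": " 123 Main Street. ", "postal_code": "60601 "}, audit))
print(audit)
```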

All master data (Customers, Suppliers, Items, and more) was loaded into Production at least three weeks prior to their corresponding transactional data (Sales Orders, Purchase Orders, Invoices, and more). During this transition period, all changes in the legacy systems also needed to be made in Oracle ERP Cloud. Using Applaud®, Definian developed conversion programs that accounted for both newly created and modified master data to ensure all transactional data would be aligned with existing data in the Oracle Cloud Production environment.
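Capturing master data created or modified in the legacy system after the initial production load can be sketched as a snapshot comparison using a per-record hash. This assumes each snapshot is a dictionary keyed by legacy ID whose records contain only JSON-serializable values. The record layout is hypothetical, and legacy deletions are not handled here.

```python
import hashlib
import json


def record_hash(record):
    # Serialize with sorted keys so field order does not change the hash.
    return hashlib.sha256(json.dumps(record, sort_keys=True).encode("utf-8")).hexdigest()


def find_deltas(baseline, current):
    """Compare two {key: record} snapshots and return (new_keys, changed_keys)."""
    baseline_hashes = {k: record_hash(v) for k, v in baseline.items()}
    new, changed = [], []
    for key, record in sorted(current.items()):
        if key not in baseline_hashes:
            new.append(key)
        elif record_hash(record) != baseline_hashes[key]:
            changed.append(key)
    return new, changed


baseline = {"S1": {"name": "Acme", "city": "Chicago"}, "S2": {"name": "Beta", "city": "Dayton"}}
current = {
    "S1": {"city": "Chicago", "name": "Acme"},  # same data, different field order
    "S2": {"name": "Beta", "city": "Toledo"},   # modified after the initial load
    "S3": {"name": "Gamma", "city": "Akron"},   # created after the initial load
}
print(find_deltas(baseline, current))  # (['S3'], ['S2'])
```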

Project Risks and Complexities

Due to the COVID-19 pandemic, we conducted the Cutover data conversions virtually. Definian consultants continued to maintain regular communication with the client and we addressed all data-related issues as they arose.

The client determined that they needed to convert Purchase Order (PO) Receipts, despite recommendations from vendors to avoid this conversion due to validation complexity. Definian took on the challenge and minimized load errors by using Definian’s Oracle Cloud Critical to Quality (CTQ) pre-load validation checks, which reported validation issues before loads took place. Definian also optimized the PO Receipts conversion process by using inheritance rules to pull values from the corresponding Purchase Order, which minimized the risk of mismatched POs and receipts and significantly decreased the volume of load errors.
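Inheritance rules and pre-load checks of this kind can be illustrated with a short sketch. It is not Definian’s CTQ logic. Both checks and all field names are hypothetical:

- the receipt must match an existing PO line;
- the cumulative received quantity must not exceed the ordered quantity.

Receipts that pass take their supplier, item, and unit of measure from the PO line rather than from the legacy receipt.

```python
from collections import defaultdict


def build_receipts(receipts, po_lines):
    """Apply hypothetical pre-load checks, then inherit values from the matching PO line."""
    index = {(pl["po_number"], pl["line_number"]): pl for pl in po_lines}
    received = defaultdict(int)  # cumulative quantity accepted per PO line
    ready, issues = [], []
    for r in receipts:
        key = (r["po_number"], r["line_number"])
        line = index.get(key)
        if line is None:
            issues.append((r["receipt_id"], "no matching PO line"))
            continue
        if received[key] + r["quantity"] > line["quantity_ordered"]:
            issues.append((r["receipt_id"], "received quantity exceeds ordered quantity"))
            continue
        received[key] += r["quantity"]
        # Inherit supplier, item, and UOM from the PO so receipt and PO cannot disagree.
        ready.append({**r, "supplier": line["supplier"], "item": line["item"], "uom": line["uom"]})
    return ready, issues
```

A strict quantity rule like this may need a tolerance if the target configuration allows over-receipts.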

As a research hospital, the client uses the Oracle Cloud Grants module to manage the finances of research grants and awards. The client’s awards data requirements necessitated significant manual updates and intervention, so the awards were not handled through programmatic conversion like other data objects. Instead, we leveraged our technical support team to develop webservices that efficiently updated award records and ensured that the awards data met the client’s initially specified requirements.

Key Activities

- Identified and documented requirements for converting data to Oracle ERP Cloud.

- Identified, prevented, and resolved problems before they became project issues.

- Facilitated discussions between the business stakeholders, the functional team, and the load team to coordinate requirements and drive data issues to completion before each test cycle and Go Live.

- Quickly adapted to changing data conversion requirements and proactively identified and addressed downstream implications, keeping the project on schedule.

- Harmonized, cleansed, and restructured Supplier data through Definian’s deduplication process; only clean, unique records were converted to Oracle. Standardized and cleansed Customer and Supplier Addresses; additionally provided clear reporting to business stakeholders.

- Definian’s Oracle Cloud Critical to Quality (CTQ) pre-load validation checks identified load errors prior to executing Oracle data loads. This early-stage resolution enabled efficient data loads to fit into the tight Cutover window.

- Utilized robust data profiling and error reporting to assist business stakeholders with data cleansing, data enhancement and data enrichment prior to Go-Live.

- Fully automated all data transformation activities, removing error-prone manual steps from all processes; ensured consistent and predictable output.

- Tracked records throughout the data transformation process to assist with post-project audits.

- Loaded over 18 million data records into Oracle Cloud while meeting cutover deadlines and maintaining high data quality.

- Provided project management services to ensure data migration was completed on-time and within budget.

- Generated reports through Applaud® to aid in validation of finances and to ensure accounting tied out.

- Provided detailed tasks in project plan to ensure every step in cutover was accounted for.

- Developed webservices to efficiently update a large volume of awards and projects that otherwise would have required manual updates by the client.

Migrating Critical-to-Customer Data to Dynamics 365: How We Resolved Missing Legacy Data Challenges

A Project in Jeopardy

UAT is typically considered a dress rehearsal for Go-Live and should result in minimal change requests. But this was not your typical UAT. During the final weeks of the project a major gap in critical-to-customer data was discovered that jeopardized this steel mill’s entire Microsoft Dynamics 365 implementation.

User Acceptance

As the name suggests, a successful UAT depends on the end users accepting the results of the conversion test cycle. To guarantee a successful go-live, each subsequent test cycle includes more end users and experts than the one that preceded it. For this final round of testing, the expert resource on steel certifications discovered that many Customer Specifications were missing and could not be entered manually. This surprise finding altered the course of our Dynamics 365 implementation, and all resources resolved to find a solution.

Customer Specifications

In a nutshell, Customer Specifications (specs) include the chemical, physical, and mechanical properties that customers need displayed on the steel certification when they receive the product. Customer specifications are applied to materials that will eventually find their way into products where failure would be disastrous. For example, if a steel bar does not meet straightness requirements, then hydraulic cylinder applications (as with those on heavy construction equipment) would fail and endanger workers. We have faith in the structural integrity of skyscrapers and the reliability of cars and heavy equipment because manufacturers strive to meet strict industry standards (like ASTM) and additional customer specifications for critical applications.

Commitment to Customers

By meeting customer specifications, the steel mill demonstrates their commitment to customer needs and delivers on a promise to provide the highest quality steel needed for each application. When UAT began, we were already migrating 5,000 such specifications. Weeks after the UAT loads, our client, one of the largest steel manufacturers in the US, informed us that there was something odd with the data.

Piecewise Uncovering of New Information

This had become a recurring theme – a gradual, piecewise uncovering of new information revealing how their heavily customized legacy AS/400 (mainframe) system operates. The client’s homegrown system afforded countless operational benefits over several decades of use but created challenges for today’s implementation. The downside with non-standard systems like this is that data management is incredibly difficult. Executing a successful conversion required Definian’s project management methodology and the technical capability, data profiling, and rapid application development available through Applaud® software.

Enhanced Conversion Program Delivered

The total count of converted Customer Specifications jumped from 5,000 to over 25,000 just weeks before go-live. Given the short timeframe and quick turnaround, our herculean efforts to expand conversion scope were met with praise from both our client and our partners. A combination of end user due-diligence, expert project management, technical consulting, and the Applaud software streamlined development of the Customer Specification conversion program enhancements. We celebrated this victory and continued loads to Dynamics 365 for testing and validation. Little did we know that an even more daunting task awaited us.

Recreating Custom Backend Code

We were told, yet again, that certain specs were missing. This time, even after extensive data spelunking, no information about the specs could be retrieved in the presumed Customer Product Master table or any other master data tables in the mainframe database. We were at an impasse – how could customer specs exist while no information about them is present in the database? The client revealed that they implemented backend COBOL logic in the AS/400 mainframe that dynamically creates specs directly from sales orders. To close this gap Definian was asked to mimic the backend logic and create item-level specs from transactional data. Since this master data did not exist in the mainframe, we needed to dynamically create it through conversion code.

Rising to the Challenge

Because of Definian’s commitment to client success, the challenge of dynamically creating master data from transactions did not discourage us. It is exactly moments like this where we rise to the occasion and leverage the power of Applaud to make the impossible a reality. Definian’s experience in complex situations like this empowered us to gather the necessary requirements and turn around a new Customer Specs load file in a single week. The final record count skyrocketed to roughly 62,000, an increase of over 1,100% from the UAT record count.

Reflecting on Our Success

This is one of the many stories from this Microsoft Dynamics 365 implementation where Applaud enabled us to make complex conversion changes quickly and keep the project timeline on track. In ambitious projects like this, requirements are expected to evolve over time. But complex changes requested late in an implementation introduce risk into the conversion process. I credit our success on this implementation to Applaud and its unique ability to rise to even the most complex technical challenges and minimize data migration risk.

Transforming Supply Chain Data to Dynamics 365: Unravelling a Complex Legacy Solution

A Proudly Home-Grown Solution

Our steel manufacturer client proudly designed their own Supply Chain software solution decades ago. Everything involving requisitioning, manufacturing, and sales was handled in a custom solution they built on mainframe servers. Occasionally, I would stumble upon decades-old comments between lines of COBOL code and be reminded that this is a home-grown, one-of-a-kind system. For example, a happy anniversary message to my colleague's mom and dad 20 years ago was among the many lines of code I reviewed. These happy notes aside, this legacy database posed serious challenges to the transformation project because of how different it was from the target system, Microsoft Dynamics 365.

The Challenge of Missing Data

We suffered from a total absence of certain master data in the legacy system. The missing data posed challenges to the project and called into question the feasibility of data migration. How could this business operate without master data? They intentionally built their software solution around transactions. This design allowed steel products to be defined dynamically by customer orders. Additional specifications for finished products were derived from steel industry standards (ASTM) and physical, chemical, and mechanical properties. The programmers of this custom mainframe manufacturing software recognized that they could carry out business without maintaining a Product Master! Creatively, the legacy solution used attributes on work orders and sales orders to define the finished product.

Likewise, there was no master data for Formula, Routes, and Operations – these functional areas document the raw material and the processing steps needed to manufacture finished product for customer orders. The cold drawing, stress relieving, turning, grinding, and other cold finishing steps were driven by the deeply knowledgeable foremen on the plant floor and scheduled accordingly. Effectively, there was no 'single source of truth' for several data objects that were needed in Dynamics 365.

Developing a Product Master from Transactional Data

Before we could go live in Dynamics 365, we first needed to create a Product Master. Our conversion program considered 2 years’ worth of sales orders, work orders, and purchase orders. Dozens of fields across these tables were used to derive the physical and chemical attributes of each product. After extensive research and data profiling, we grouped transactions on matching attribute fields and could confidently define a Product Master record for each distinct combination of attributes. In all, 95,000 sales orders, work orders, and purchase orders boiled down to 5,000 distinct products successfully converted to Dynamics 365.
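The grouping step can be sketched as collapsing transactions onto a tuple of attribute fields and assigning a product number to each distinct combination. The attribute names, sample values, and `P00001` numbering are hypothetical. In practice the attribute values would need normalizing (for example, `1.000` versus `1`) before grouping.

```python
from collections import Counter

# Hypothetical attribute fields that together identify a finished product.
ATTRIBUTES = ("grade", "shape", "size_in", "finish")


def derive_products(transactions):
    """Map each distinct attribute combination to a generated product record."""
    combos = Counter(tuple(t[a] for a in ATTRIBUTES) for t in transactions)
    products = {}
    for i, combo in enumerate(sorted(combos), start=1):
        products[combo] = {
            "product_number": f"P{i:05d}",
            **dict(zip(ATTRIBUTES, combo)),
            "transaction_count": combos[combo],  # how many orders share this definition
        }
    return products


orders = [
    {"order": "SO1", "grade": "1018", "shape": "ROUND", "size_in": 1.0, "finish": "CD"},
    {"order": "WO7", "grade": "1018", "shape": "ROUND", "size_in": 1.0, "finish": "CD"},
    {"order": "PO3", "grade": "4140", "shape": "HEX", "size_in": 0.75, "finish": "TG"},
]
for product in derive_products(orders).values():
    print(product)
```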

Developing Formulas and Routes from Transactional Data

To standardize manufacturing processes, we were also tasked with creating master Formula and Route data for Dynamics 365. Surprisingly, most of the metalworking instructions we needed for conversion did not exist in the system, because only a few weeks' worth of scheduling data was retained at any given time. To overcome this gap, the business restored 2 years' worth of scheduling data from tape backups, and we used these historical backups to extrapolate the Formula, Route, and Operations data. In addition to historical scheduling data, we worked with manufacturing experts to understand undocumented complexities in their manufacturing process. For example, certain steps needed to be structured inline (such as when steel passed directly from a turning machine to a grinding machine), while others needed to be broken out discretely for capacity planning. Certain operations were also structured as alternates to give the business greater flexibility in the manufacturing process. As a result, the business now has a source of truth for the plant floor that does not rely on tribal knowledge.
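One way to mine routes from restored scheduling history works like this: treat each job's ordered operations as a candidate route, take the most frequent sequence for each product as its primary route, and keep less frequent sequences as alternates. The sketch below assumes a simplified row layout `(product_id, job_id, step_seq, operation)` and a hypothetical `min_share` cutoff for alternates. It does not capture the inline-versus-discrete structuring decisions, which came from the manufacturing experts.

```python
from collections import Counter, defaultdict


def derive_routes(schedule_rows, min_share=0.1):
    """Derive a primary route and alternates per product from scheduling history.

    schedule_rows: iterable of (product_id, job_id, step_seq, operation) tuples.
    min_share: hypothetical minimum share of jobs a sequence needs in order to
    be kept as an alternate route.
    """
    # Collect the steps each historical job went through.
    jobs = defaultdict(list)
    for product_id, job_id, step_seq, operation in schedule_rows:
        jobs[(product_id, job_id)].append((step_seq, operation))

    # Count how often each ordered operation sequence occurs per product.
    route_counts = defaultdict(Counter)
    for (product_id, _job_id), steps in jobs.items():
        route = tuple(operation for _seq, operation in sorted(steps))
        route_counts[product_id][route] += 1

    routes = {}
    for product_id, counts in route_counts.items():
        total = sum(counts.values())
        ranked = counts.most_common()  # most frequent sequence first
        routes[product_id] = {
            "primary": ranked[0][0],
            "alternates": [r for r, n in ranked[1:] if n / total >= min_share],
        }
    return routes
```

Sorting on `step_seq` before building the tuple means rows can arrive in any order from the restored backups.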

A Brilliant Legacy System Unraveled

Reflecting on the experience, I recognize that the legacy database and software were the result of over 20 years of ongoing development by loyal, lifelong employees. The clever and unconventional software this group created contributed to the cold finishing of millions of pounds of steel. Very likely, I have driven in a car, been in a skyscraper, or flown in an airplane made with steel that was at some point a data point on this AS/400 mainframe server. I am humbled to have been called to help this business maintain its leadership position in the global supply chain. Together we unraveled this steel mill’s one-of-a-kind solution and migrated it onto a system that will carry the business forward for the next 20 years.

Building Data Clarity and Trust through Improved Metadata Management and Data Governance

Client: $17B Privately Held Diversified Automotive Company

After implementing the Workday HR solution, our client had difficulty tracking the usage of Workday reports and managing inbound and outbound HR-related datasets. As a result, there was little insight into user adoption, poor visibility into potential data misuse, and a continued lack of data oversight. To address these issues, our team set out to streamline their data management processes and enhance data governance.

Assess and Improve Data Governance Operations: Definian's Data Governance-in-a-Box approach assessed current data governance processes, operational model, and requirements. The assessment created a roadmap to quickly meet the immediate needs and set the groundwork to more easily incorporate future requirements.

Integrated Workday, Collibra, and Purview Metadata: Leveraging Definian's MetaMesh technology, we designed and integrated the metadata across Workday, Collibra, and Purview. This enabled unified management of Tableau reports, Workday reports, diverse datasets, and other data products across the enterprise, driving clarity and trust in our Client's data.

Workflow Design and Implementation: Our team designed and implemented robust workflows aimed at governing the report certification process. These workflows were pivotal in ensuring that data shared both internally and externally adhered to stringent data-sharing agreements.
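At its core, a report certification workflow is a small state machine plus a sharing rule. The sketch below is hypothetical. The states, the allowed transitions, and the `can_share` check are illustrative assumptions. They are not the workflows built for this client, nor any Collibra or Workday API.

```python
from enum import Enum


class Status(Enum):
    DRAFT = "draft"
    IN_REVIEW = "in_review"
    CERTIFIED = "certified"
    REJECTED = "rejected"


# Hypothetical allowed transitions; editing a certified report sends it
# back to draft so that it must be recertified.
TRANSITIONS = {
    Status.DRAFT: {Status.IN_REVIEW},
    Status.IN_REVIEW: {Status.CERTIFIED, Status.REJECTED},
    Status.REJECTED: {Status.DRAFT},
    Status.CERTIFIED: {Status.DRAFT},
}


class Report:
    def __init__(self, name, owner):
        self.name = name
        self.owner = owner
        self.status = Status.DRAFT

    def move_to(self, new_status):
        """Advance the report, rejecting transitions the workflow does not allow."""
        if new_status not in TRANSITIONS[self.status]:
            raise ValueError(f"{self.status.value} -> {new_status.value} is not allowed")
        self.status = new_status


def can_share(report, has_sharing_agreement):
    """Share a report only if it is certified and a data-sharing agreement
    covers the recipient."""
    return report.status is Status.CERTIFIED and has_sharing_agreement
```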

One of the largest technical hurdles that we worked to overcome was how Workday limited the extraction of metadata necessary to meet our Client’s requirements. To overcome those constraints, we strategically extended the functionality of native Workday reporting. This extension enabled MetaMesh to extract the essential metadata required for effective tracking and governance of their reports and datasets.

Through integrating Collibra, Workday, and Purview, designing governance workflows, and innovatively extending the capabilities of Workday reporting, we were able to build clarity and trust around the HR reporting functions for our Client. Our solution not only facilitated efficient tracking but also bolstered their data governance framework, ensuring adherence to regulations and optimizing data utilization.

This initiative signifies our commitment to crafting tailored solutions that overcome system limitations and propel organizations towards effective data governance and management. At every step, we remain dedicated to empowering businesses to harness the full potential of their data assets.

Moving to the Cloud... Now What?

Cloud implementations continue to rise in popularity and are projected to make on-premise, database-driven business solutions obsolete. The current pandemic and its global impact have accelerated this trend. Before COVID-19, experts projected that the worldwide public cloud services market would grow by 17% in 2020, reaching $266.4 billion. At no other point in history has there been such a need for instantly available IT resources and remote collaboration. In response to the pandemic, more than twice as many IT leaders plan to accelerate their cloud adoption as plan to put it on hold. Whatever you and your organization choose, do not make this decision lightly.

While moving to the cloud has obvious benefits and can be very cost-efficient, it can also spiral out of control without careful planning and a data-driven approach. Cloud computing reduces or eliminates the need to purchase equipment and to build out and operate data centers. This yields significant savings on the hardware, facilities, utilities, and other expenses required for on-premise computing. As an additional incentive, some cloud applications, including ERP and CRM solutions, use a subscription-based model. This allows businesses to scale up or down according to their needs and budget, and it eliminates the need for major upfront capital expenditures.

In stark contrast to the benefits and promised cost reductions of moving to the cloud are the risks and costs of a delayed, over-budget, or unsuccessful implementation. According to Gartner, “83% of all data migration projects either fail outright or cause significant cost overruns and/or implementation delays”. Getting your data loaded into the cloud is only half the picture; the data also needs to be correct, both literally and for its intended business purpose. You can find out more about how data migration can fail and the downstream consequences of that failure.

One of the most significant risks in any implementation is data. Data migration often gets underestimated because most think of it as “moving data from point A to point B”, but it is much more complicated. Gartner has recognized the risk inherent to data migration. “Analysis of data migration projects over the years has shown that they meet with mixed results. While mission-critical to the success of the business initiatives they are meant to facilitate, lack of planning structure and attention to risks causes many data migration efforts to fail.” Poorly understood/documented legacy data, dirty data, and constant requirement changes are only some of the reasons migrations often fail.

Lack of preparation is often the leading cause of delayed and over budget projects. No matter the target system, the first part of any successful migration/implementation is a data assessment. Definian’s Enterprise Data Modernization is a short, focused effort that helps organizations prepare for their upcoming initiatives. The list below can be used as a template of questions that must be asked and answered as part of a data assessment effort.

Identify

- Analyze the relevant data across your application landscape

- Generate detailed data statistics and facts for every data element in every relevant legacy table/file

- Report invalid data scenarios

- Summarize unique data patterns in key data elements

- Identify additional data-related anomalies

Assess

- Understand redundant data within an individual application and across multiple disparate applications

- Review and quantify missing, erroneous, and inconsistent data

- Gather metrics summarizing identified data errors

- Compare legacy data against data governance standards

Prepare

- Review detailed findings with the project team and issue recommendations

- Define a data quality strategy that outlines cleansing recommendations for each distinct error/warning

- Provide guidance regarding historical data

- Brief project leadership regarding potential project risks
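Several of the Identify and Assess items above can be approximated with a small profiling routine: per-element statistics, counts of missing data, and unique data patterns. This minimal Python sketch assumes that legacy values arrive as strings or `None`. The pattern rule (digits become `9`, letters become `A`) is a common profiling convention and not a feature of any specific tool.

```python
import re
from collections import Counter


def value_pattern(value):
    """Reduce a value to its shape: digits become 9, letters become A."""
    return re.sub(r"[A-Za-z]", "A", re.sub(r"[0-9]", "9", value))


def profile_column(values, top_n=3):
    """Basic facts for one legacy data element (values are strings or None)."""
    present = [v.strip() for v in values if v is not None and v.strip()]
    patterns = Counter(value_pattern(v) for v in present)
    return {
        "rows": len(values),
        "missing": len(values) - len(present),  # None or blank
        "distinct": len(set(present)),
        "top_patterns": patterns.most_common(top_n),
    }


def profile_table(rows):
    """Profile every column that appears in any row (rows are dicts)."""
    columns = sorted({column for row in rows for column in row})
    return {c: profile_column([row.get(c) for row in rows]) for c in columns}
```

In a ZIP code column, for example, a value such as `6O601` (a letter O typed in place of a zero) surfaces as the outlier pattern `9A999`. That is exactly the kind of invalid data scenario an assessment should report.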

The only way to plan effectively and create accurate business requirements is to base them on data facts, not assumptions. These facts come from a thorough data assessment. Research from IDG found that 41% of IT directors delayed or abandoned some of their 2019 IT modernization initiatives, often due to competing priorities or the lack of a clear strategy.

A current client implementing Oracle was able to meet its originally planned System Integration Testing (SIT) cycle deadlines and conversion metrics. It did so despite not leaving enough time between the first 3 test cycles to address its complex, dirty, and heavily customized legacy data. However, the project bore the heavy additional cost of several months of overtime work. That cost would have been unnecessary had a data assessment been performed before the project timeline was created, because the complexity of the legacy data would have been uncovered and the milestones adjusted accordingly. Other implementation efforts are not so lucky; Target Canada is one example. By starting with and committing to a data-driven methodology alongside industry experts, your organization will be on the right path to successful cloud implementations and systems for years to come.

If you want to ensure your cloud implementation is a success, have questions, or just want to discuss cloud migration, feel free to contact us.

Evaluation of Tableau Catalog

Tableau Catalog is a feature within Tableau that provides a comprehensive and unified view of your organization's data assets. It helps users discover and understand the data available to them, promoting better data governance and collaboration. Here are some key aspects of Tableau Catalog:

- Metadata Management: Tableau Catalog allows you to capture metadata about your data sources, such as data definitions, lineage, and usage information. This metadata is crucial for understanding the context and reliability of the data.

- Data Discovery: Users can easily search and discover relevant data sources within the organization. This promotes self-service analytics and reduces the time spent on searching for the right data.

- Impact Analysis: Tableau Catalog provides impact analysis capabilities, allowing users to understand how changes to a particular data source may affect downstream reports, dashboards, or analyses.

- Data Lineage: Understanding the lineage of data is essential for ensuring data quality and making informed decisions. Tableau Catalog visually represents the flow of data from its source to its usage, helping users trace the origin of the data.

- Usage Metrics: It provides insights into how frequently data sources are used, which can help organizations optimize their data infrastructure based on actual usage patterns.

- Collaboration: Users can add comments and annotations to data sources, fostering collaboration and knowledge-sharing among team members.

- Security and Governance: Tableau Catalog supports data governance by allowing administrators to define and enforce data security policies. This ensures that sensitive data is accessed only by authorized users.

- Integration with Tableau Server and Tableau Online: Tableau Catalog is integrated with Tableau Server and Tableau Online, providing a seamless experience for users working within the Tableau ecosystem.
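Impact analysis boils down to walking a lineage graph downstream from the asset that changes. The sketch below shows the idea with a breadth-first search over a hand-built, hypothetical lineage dictionary. It is not Tableau's implementation. In practice, the lineage would come from Tableau Catalog itself (for example, through Tableau's GraphQL-based Metadata API) rather than being typed in.

```python
from collections import deque


def downstream_impact(lineage, changed_asset):
    """Return every asset downstream of changed_asset, nearest first.

    lineage maps each asset to the assets that consume it directly.
    """
    impacted = []
    seen = {changed_asset}
    queue = deque([changed_asset])
    while queue:
        asset = queue.popleft()
        for consumer in lineage.get(asset, ()):
            if consumer not in seen:  # shared consumers are reported once
                seen.add(consumer)
                impacted.append(consumer)
                queue.append(consumer)
    return impacted


# Hypothetical lineage: database -> table -> flow/data source -> workbook -> sheets
lineage = {
    "db:sales": ["table:orders"],
    "table:orders": ["datasource:Orders", "flow:CleanOrders"],
    "flow:CleanOrders": ["datasource:Orders"],
    "datasource:Orders": ["workbook:Revenue"],
    "workbook:Revenue": ["sheet:Monthly", "sheet:Regional"],
}
```

The `seen` set keeps an asset that is reachable by two paths (here, `datasource:Orders`) from being reported twice.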

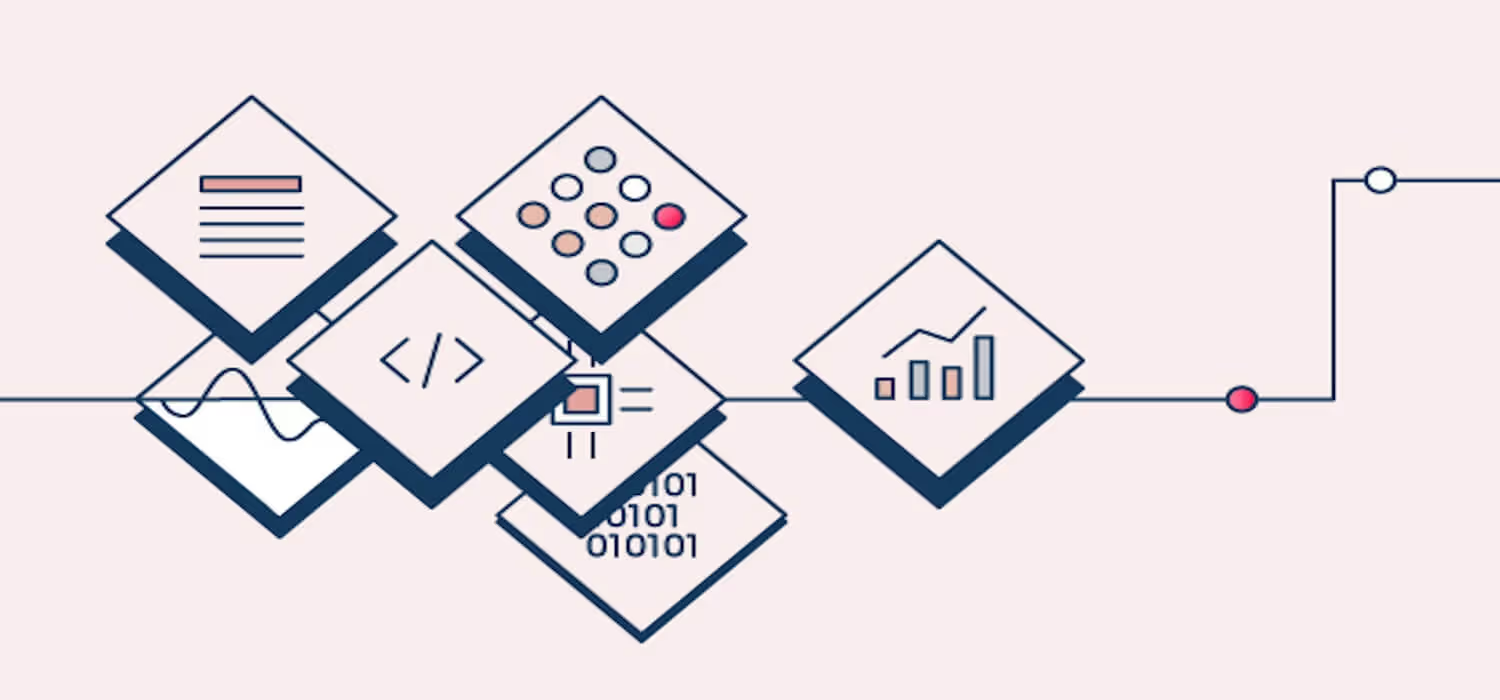

Tableau Catalog integrates features like lineage and impact analysis, data discovery, data quality warnings, and search into Tableau applications (Figure 1).

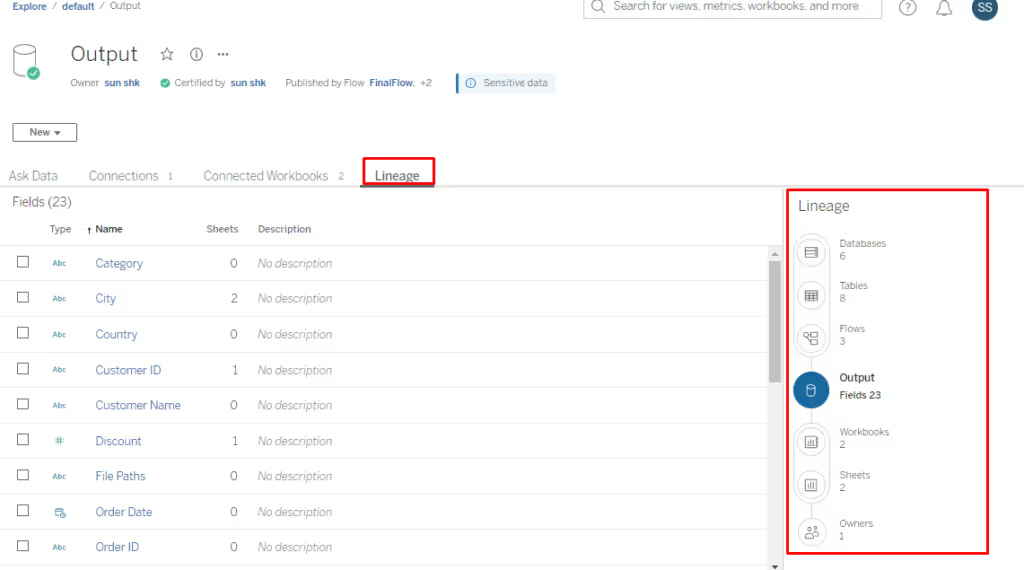

Tableau Catalog supports data lineage including databases, tables, flows, workbooks, sheets and owners (Figure 2).

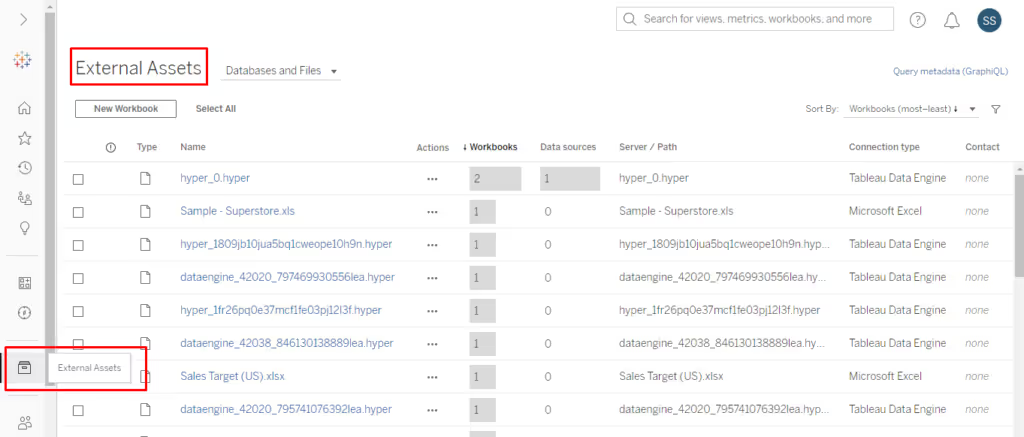

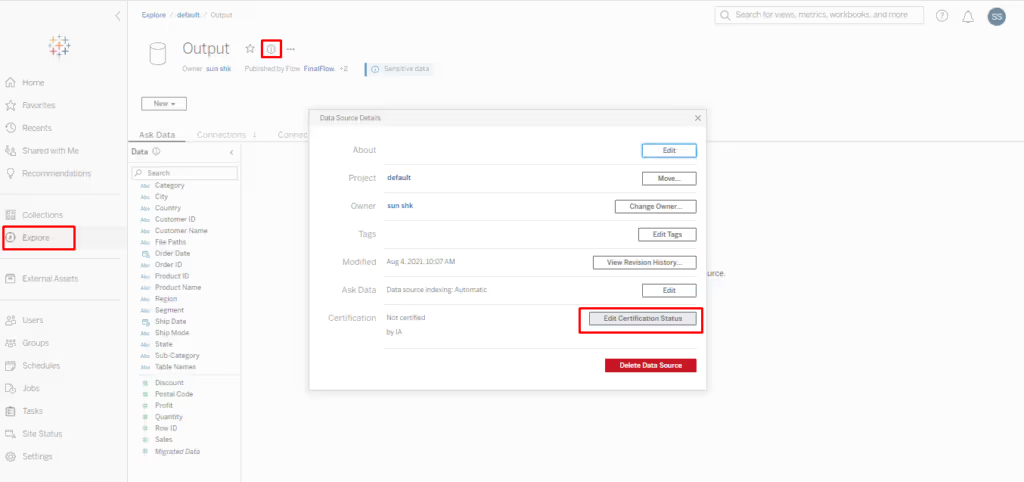

Tableau Catalog also supports data certification. After data owners complete the certification process, the data source gets a green check mark on its icon. Certified data sources rank higher in search results and are added to recommended data sources (Figure 3).

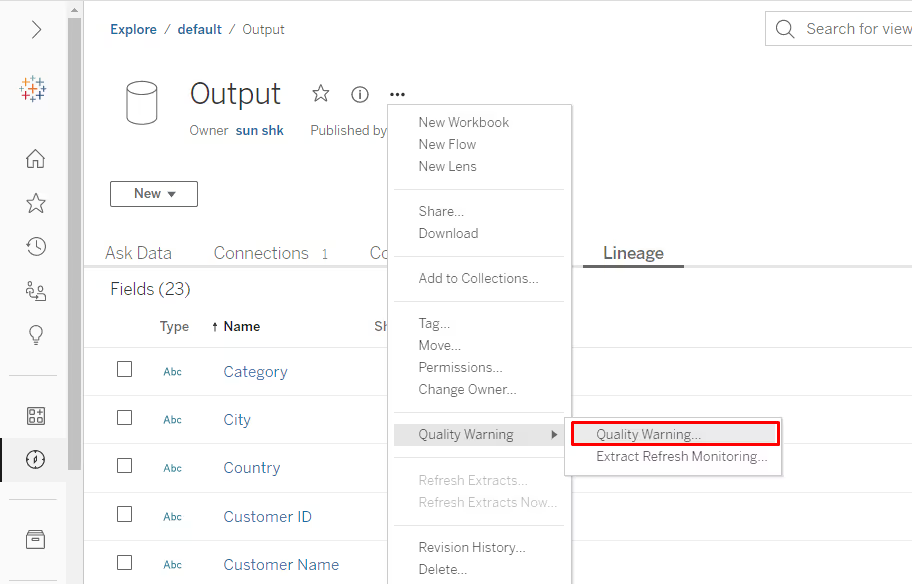

Users can also set Quality Warning messages on data assets such as data sources, databases, flows, and tables. Quality Warnings include Deprecated, Stale Data, Under Maintenance, and Sensitive Data (Figure 4).

Overall, Tableau Catalog combined with Tableau Prep Conductor forms part of Tableau Data Management, which is fit-for-purpose for Tableau customers.

Ounce of Prevention Worth a Pound of Cure

An ounce of prevention is worth a pound of cure. This is true both as Grandma logic and in the world of data management. Organizations spend millions implementing new ERP and business application solutions but frequently fail to plan for master data management after the transformation occurs.

Implementation partners are the best in the world at implementing new solutions, whether Oracle Cloud, SAP, Workday, D365, or another platform. Implementation partners and consultants know their stuff. I've worked with tons of them. They're whip smart and driven to make the solution work. However, their focus is on making the solution work for you, not on improving and sustaining your data's value and cleanliness.

I'm going to spend a minute today outlining how to start a conversation about data quality and data management. Done well, that conversation can keep your data from backsliding and costing a fortune to fix in a few years, and it can invigorate your organization's data governance.

A good way to start that conversation is to talk through how data quality will be maintained on an ongoing basis, and to stress that if data quality is not measured, it will degrade.

- Work with the stakeholders to rough out the key data areas that have significant business impact, and what that impact would be if the data degrades

- Create a rudimentary scorecard by outlining the acceptable level for each issue

- Collaborate with the team to identify who owns these metrics and whether mechanisms are in place to capture them
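The rudimentary scorecard described above can start as little more than a list of metrics, each with an owner and an acceptable level. In this sketch, the metric names, owners, thresholds, and supplier records are invented for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class QualityMetric:
    data_area: str
    issue: str
    owner: str
    threshold: float                # minimum acceptable pass rate (0 to 1)
    passes: Callable[[dict], bool]  # True when a record meets the rule


def scorecard(metrics, records):
    """Score each metric against the records and flag those below target."""
    rows = []
    for m in metrics:
        passed = sum(1 for record in records if m.passes(record))
        rate = passed / len(records) if records else 1.0
        rows.append({
            "data_area": m.data_area,
            "issue": m.issue,
            "owner": m.owner,
            "pass_rate": round(rate, 3),
            "status": "OK" if rate >= m.threshold else "BELOW TARGET",
        })
    return rows


# Invented example: two supplier metrics with hypothetical owners.
metrics = [
    QualityMetric("Supplier", "Missing tax ID", "AP Manager", 0.95,
                  lambda r: bool(r.get("tax_id"))),
    QualityMetric("Supplier", "Missing remit-to email", "Procurement Lead", 0.70,
                  lambda r: "@" in (r.get("email") or "")),
]
suppliers = [
    {"tax_id": "12-3456789", "email": "ar@acme.example"},
    {"tax_id": "", "email": "billing@northwind.example"},
    {"tax_id": "98-7654321", "email": ""},
    {"tax_id": "11-2223333", "email": "ap@contoso.example"},
]
card = scorecard(metrics, suppliers)
```

Rerunning the same scorecard on a schedule is what turns a one-time check into the ongoing measurement that keeps data from degrading unnoticed.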

Once the basic process is in place and the seed has been planted about measuring data quality and how it affects the organization, start to dive deep into the types of data quality issues that affect the business.

Think about cross-platform data flows and how and where data can go south as it moves across the landscape. Once the data conversation has started, there is a lot more the team can do, but the important part is that everyone is starting to recognize the value and impact of data.

This small mindset shift will pay dividends in efficiency and insights as a data governance and master data management culture begins to take shape.

If you have questions about data management, data quality, or data migration, reach out. I'd like to learn about them and work with you to figure out solutions.

4 Steps in your Ivalua and Oracle Cloud Journey

Implementing Oracle Cloud ERP together with Ivalua can improve your organization’s supplier onboarding management, sourcing and RFx, and contract redlining, and it can provide an intuitive marketplace for procurement. However, that functionality brings significant complexity and many dependencies, as we experienced while executing the data migration for both Oracle Cloud and Ivalua at one of the nation’s premier hospital systems.

If you plan on implementing Oracle Cloud ERP and Ivalua, the following best practices will help guide your journey:

1. Recognize that not all cloud infrastructures are the same

Like Oracle Cloud, Ivalua rests on a cloud infrastructure. However, Ivalua’s cloud model differs from Oracle’s. Ivalua is highly configurable and more closely resembles a typical on-prem structure, with the ability to customize client-specific data migration load templates.

For example, this hospital system converted legacy contracts to Ivalua, which were then migrated to Oracle Cloud via a Blanket Purchase Agreement (BPA) interface. One of the hospital’s unique business requirements was that contract priority be converted to Ivalua. Even though Ivalua does not have such a field out of the box, we were able to quickly create a custom field, add it to the Ivalua load template, and integrate it with an existing Oracle Cloud field.

2. Adapt your load approach for each system

As an all-encompassing ERP, Oracle Cloud enforces data integrity validations and structural cross-checks that prevent invalid data from loading, which in turn lengthens load times. In contrast, Ivalua’s focus on specific modules enables faster data loads.

For this hospital’s implementation, a new Ivalua scope requirement came up unexpectedly during the second System Integration Testing (SIT2) cycle. It required the team to purge previously loaded data and reload contracts according to the new requirements. The entire contract purge and reload process was completed within 48 hours (the load itself took less than 1 hour).

3. Understand interdependencies

When implementing Ivalua alongside Oracle Cloud, consider the conversion elements the two data transformations have in common. For example, when converting procurement elements into Ivalua, it is critical to maintain a focus on the Suppliers, Items, and Manufacturers that are also being converted to Oracle Cloud. A consistent scope and deduplication strategy should be implemented in both Ivalua and Oracle Cloud, and common keys will need to be referenced in both systems. One final area worth exploring is whether your existing Oracle Cloud to Ivalua integration can replace some of the Ivalua migration work.

To help us handle these complex interdependencies, we used a data conversion tool, Applaud, to maintain a shared data repository across these data sets. This made it possible to report against the entire data landscape and keep key values synchronized between Oracle Cloud and Ivalua. Applaud also accelerated deduplication of supplier data in both Oracle Cloud and Ivalua. This improved business outcomes after go-live by providing a single view of suppliers, total spend, and corresponding volume discounts. Finally, we were able to rely on existing integrations between Oracle Cloud and Ivalua to replace several supplier conversions, saving the hospital tens of thousands of dollars in the process.
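The shared-key idea can be illustrated with a simple match-and-cross-reference step. Matching legacy suppliers are grouped, and every legacy ID is mapped to one common key that both the Oracle Cloud and Ivalua loads reference. This sketch does not reflect how Applaud performs deduplication. The match rules (tax ID first, otherwise a normalized name with legal suffixes removed), the suffix list, and the `SUP-000001` key format are all hypothetical.

```python
import re
from collections import defaultdict

# Hypothetical legal-entity suffixes ignored when comparing names.
SUFFIXES = {"INC", "LLC", "CORP", "CORPORATION", "CO", "LTD", "COMPANY"}


def match_key(supplier):
    """Match on tax ID when present, else on a normalized name."""
    tax_id = re.sub(r"\D", "", supplier.get("tax_id") or "")
    if tax_id:
        return ("TAX", tax_id)
    words = re.sub(r"[^A-Z0-9 ]", " ", supplier["name"].upper()).split()
    return ("NAME", " ".join(w for w in words if w not in SUFFIXES))


def assign_common_keys(suppliers):
    """Map each legacy supplier ID to one common key shared by both target loads."""
    groups = defaultdict(list)
    for supplier in suppliers:
        groups[match_key(supplier)].append(supplier["legacy_id"])
    xref = {}
    for number, key in enumerate(sorted(groups), start=1):
        for legacy_id in groups[key]:
            xref[legacy_id] = f"SUP-{number:06d}"
    return xref
```

Real supplier matching usually needs more rules, such as address and bank detail comparison, fuzzy name matching, and a human review step. The sketch is deliberately simple. Its point is only that a single cross-reference drives both systems, so the two loads cannot drift apart.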

4. Establish a program-level plan encompassing both Oracle Cloud and Ivalua

It is important to view things at a program level, not just at the individual Oracle Cloud and Ivalua project levels. For example, on this hospital’s implementation, supplier data was initially reviewed, loaded, and validated by separate teams for Oracle Cloud and Ivalua, which caused inconsistent data to be loaded into the two systems. Moving to a common team performing a common sign-off vastly improved data quality and reduced rework.

Additionally, Oracle Cloud load failures can have a major impact on Ivalua data conversion. Even a handful of suppliers falling out of the Oracle Cloud loads can significantly impact downstream Ivalua loads. For example, during the SIT2 cycle, one of the suppliers that fell out of the Oracle Cloud loads corresponded to dozens of contracts and thousands of contract lines. Without a delta load of this supplier to Oracle Cloud and Ivalua, thousands of item prices would have been missing from Ivalua.
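Planning a delta load starts with finding everything downstream of the suppliers that fell out. The sketch assumes a simplified contract structure with `contract_id`, `supplier_id`, and a list of `lines`. These field names are hypothetical and are not Oracle Cloud or Ivalua load template columns.

```python
def plan_delta_load(failed_supplier_ids, contracts):
    """List the contracts and contract lines blocked by suppliers that fell
    out of the Oracle Cloud load, so that they can be reloaded to Ivalua once
    each supplier is fixed and delta-loaded.

    contracts: list of dicts with 'contract_id', 'supplier_id', and 'lines'.
    """
    failed = set(failed_supplier_ids)
    blocked = [c for c in contracts if c["supplier_id"] in failed]
    return {
        "suppliers": sorted(failed),
        "contracts": [c["contract_id"] for c in blocked],
        "contract_lines": sum(len(c["lines"]) for c in blocked),
    }
```

Running a check like this right after each Oracle Cloud supplier load makes the size of the downstream gap visible before the Ivalua loads begin.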

The steps outlined above give you a framework to understand the Ivalua and Oracle Cloud systems relative to one another and accordingly plan your conversion effort. Having a common team with a common data migration solution to work on both Oracle Cloud and Ivalua gives you visibility into all the inter-dependencies mentioned above and potential breakdowns.

IT transformations that involve multiple technologies can be daunting, but the payoff is a best-in-class solution that addresses your organization's unique needs. I wish you luck on your implementation, and if you have any questions about data conversions for Oracle Cloud and Ivalua, please contact me at luke.sherer@definian.com.