Articles & case studies

4 Questions to Ask When Implementing Oracle Cloud Projects and Grants

We are at the beginning of our Cloud journey for a globally recognized research hospital. This institution has thousands of beds and conducts 2,500 ongoing research projects. When Definian was brought onto the project, our goal was to help establish a high-level process flow for the Oracle Project Portfolio Management and Grants. The insights we gained over the last several months will fuel this institution’s ERP transformation as it continues to grow and acquire additional institutions.

If you plan on implementing Oracle Cloud PPM and Grants, you will have to face the questions I pose in the 4 critical areas listed below:

1. What is the financial design of Projects and Grants?

The Project Portfolio Management (PPM) module organizes activities as projects and provides a framework to organize finances. Depending on the financial requirements, there are various ways to set up projects. For example, billable projects require a project contract, which identifies billing methods and procedures. Any institution receiving external grant funding will likely have to implement the Grants module. This module can be seen as an extension of Projects, where Awards are created to organize and control Grant-related finances in a unified platform. Note that a contract is automatically created when an award is created, but updates may need to be made manually or through a web service.

Each design decision has implications for downstream conversions, business users, and even other modules. However, these decisions are rarely finalized in the early days of a project. For this hospital, we sketched out multiple approaches, loaded data for a few options as a proof-of-concept, and iteratively zeroed in on the right solution. This iterative approach enabled the Client to see their data structured in different ways early in the project and saved the implementation team time by figuring out the right design outside an official integration test.

2. How are personnel configured?

A project must be assigned a project manager, and an award must be assigned a principal investigator (PI). Before employees can be assigned to these roles, they must fulfill certain configuration requirements, which might require several rounds of testing. This setup is especially difficult if you are not using Oracle Cloud as your HCM solution since the interface itself may need to be updated. Project Managers must be assigned a job and department and have an active assignment, whereas PIs must be loaded as a PI in Awards Personnel and be configured as resources.

These are all separate configurations, and the complex interplay between them needs to be thought through and vetted. Even after the vetting, these configurations can be difficult to maintain. One technique we’ve developed that has been critical to the success of the project is an automated process that checks for missing and mismatched personnel configurations within Oracle Cloud prior to every conversion cycle.
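A minimal sketch of what such a pre-conversion check might look like is shown here. It is illustrative only: the `Person` fields and role names are hypothetical stand-ins for setup data extracted from Oracle Cloud, not actual Oracle object names.

```python
from dataclasses import dataclass


@dataclass
class Person:
    # All field names are hypothetical stand-ins for extracted setup data.
    person_number: str
    has_job: bool = False
    has_department: bool = False
    has_active_assignment: bool = False
    in_awards_personnel_as_pi: bool = False
    is_resource: bool = False


def missing_setup(person: Person, role: str) -> list[str]:
    """Return the configuration gaps that would block assigning `role`."""
    if role == "PROJECT_MANAGER":
        required = {
            "job": person.has_job,
            "department": person.has_department,
            "active assignment": person.has_active_assignment,
        }
    elif role == "PRINCIPAL_INVESTIGATOR":
        required = {
            "Awards Personnel PI record": person.in_awards_personnel_as_pi,
            "resource setup": person.is_resource,
        }
    else:
        raise ValueError(f"unknown role: {role}")
    return [name for name, ok in required.items() if not ok]


# Example: a project manager without an active assignment, and a fully set up PI.
pm = Person("1001", has_job=True, has_department=True)
pi = Person("2002", in_awards_personnel_as_pi=True, is_resource=True)

pm_gaps = missing_setup(pm, "PROJECT_MANAGER")
pi_gaps = missing_setup(pi, "PRINCIPAL_INVESTIGATOR")
print(pm_gaps)
print(pi_gaps)
```

Running a check like this before each conversion cycle surfaces the gaps as a list to fix, rather than as load errors on projects and awards.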

3. How should budgets and costs be designed?

Depending on business requirements, there are several ways that budgets and costs can be organized. If the projects are budgetary controlled, the total cost for a project must not exceed the total budget for the project – if the legacy system did not enforce these controls, then you should expect load errors and data cleanup. Another consideration that is critical to auditors and compliance officers is how historical cost data will be handled. If the business requires historical data, you may want to summarize/aggregate costs by month or year to address the high volume and streamline the loading and review process.

This summarization is exactly what we did to ensure that our Client meets audit requirements. Our conversion approach is to aggregate historical costs by expenditure type, month, and year so that this data can be easily reviewed in Oracle Cloud for auditing purposes. Then, as part of this process, we validate that total costs on the project do not exceed the total budget. This approach prevents data discrepancies and avoids data fallout during the load process.
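The aggregation and budget check described above can be sketched as follows. This is a simplified illustration, not the actual conversion program: the row layout, project numbers, and budget figures are invented for the example.

```python
from collections import defaultdict
from decimal import Decimal

# Hypothetical legacy cost rows: (project, expenditure type, ISO date, amount).
legacy_costs = [
    ("P-100", "Labor", "2019-03-04", Decimal("1200.00")),
    ("P-100", "Labor", "2019-03-18", Decimal("800.00")),
    ("P-100", "Supplies", "2019-04-02", Decimal("150.50")),
    ("P-200", "Labor", "2019-03-09", Decimal("5000.00")),
]
# Hypothetical total budgets per project.
budgets = {"P-100": Decimal("2500.00"), "P-200": Decimal("4000.00")}


def summarize(rows):
    """Aggregate costs by project, expenditure type, year, and month."""
    totals = defaultdict(Decimal)
    for project, exp_type, date, amount in rows:
        year, month = date[:4], date[5:7]
        totals[(project, exp_type, year, month)] += amount
    return dict(totals)


def over_budget(summary, budgets):
    """Return projects whose total summarized cost exceeds the total budget."""
    per_project = defaultdict(Decimal)
    for (project, _exp_type, _year, _month), amount in summary.items():
        per_project[project] += amount
    return {p: t for p, t in per_project.items() if t > budgets.get(p, Decimal("0"))}


summary = summarize(legacy_costs)
exceptions = over_budget(summary, budgets)
print(len(summary), exceptions)
```

Here the four detail rows collapse into three summarized rows, and project P-200 is flagged for cleanup before the load instead of failing during it.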

4. Can the data be loaded and processed within the cutover window?

The cutover window is a necessary part of go-live where the legacy system is completely shut down, data is migrated to the target system, and the new system is turned on. During cutover, business cannot be conducted as usual and, for healthcare, there is clinical impact. All data migration activities must fit into this window so that business functions are not impeded. Note that loading and processing times can be different depending on the configuration of your Oracle Cloud pod, so it is critical to monitor performance in preparation for cutover.

For this project, we found that even after aggregating cost data by month and expenditure type, over 1.5 million Project Cost records would still need to be converted to Oracle Cloud. We learned from test cycles that it would take 24 hours to load this volume of data. This posed a serious risk to go-live and left little time for running conversions, loading upstream objects, post-load processing, and validating data during the cutover window. To reduce risk, we worked with the hospital on a plan that migrates the vast majority of the data weeks prior to go-live and shortens the cutover window.
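To make the trade-off concrete, here is a rough back-of-the-envelope estimate. It assumes load time scales roughly linearly with record count, which real pods may not do, and the 95% pre-load share is purely illustrative rather than a figure from the project.

```python
RECORDS = 1_500_000   # Project Cost records after aggregation (from the text)
FULL_LOAD_HOURS = 24  # load time observed in test cycles (from the text)

# Assumption: throughput is roughly constant across the load.
records_per_hour = RECORDS / FULL_LOAD_HOURS


def cutover_load_hours(preloaded_share: float) -> float:
    """Estimate load hours left for cutover if a share of records is loaded early."""
    remaining = RECORDS * (1 - preloaded_share)
    return remaining / records_per_hour


# Illustrative scenario: 95% of the records are migrated weeks before go-live.
hours_if_95_percent_preloaded = cutover_load_hours(0.95)
print(records_per_hour, round(hours_if_95_percent_preloaded, 2))
```

Under these assumptions the load runs at about 62,500 records per hour, so pre-loading most of the history reduces the cutover load from a full day to a little over an hour. Actual timings should come from monitoring your own pod during test cycles.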

Whether you represent a research hospital, a university, a non-profit, or any other enterprise that relies on Project and Grants management, these same questions will be relevant to you as you begin your Cloud journey.

For a hospital like this, projects range from grant-funded research in everything from cancer to coronavirus to internally funded initiatives and even construction. By upgrading from their aging Lawson solution to Oracle Cloud, the institution will be able to track projects from inception to close and have access to comprehensive reporting. This will make meeting budgetary, statutory, and regulatory requirements easier, and the institution will become a better steward of donor and sponsor money.

This implementation also positions the hospital for continued growth. The questions detailed above are an important part of the discovery and requirements gathering process for implementing Oracle Cloud PPM and Grants for each acquisition and are part of our best practices approach.

I wish you luck on your implementation and if you have any questions about data conversions for Oracle Cloud Project and Grants modules, please contact me at dennis.gray@definian.com.

COVID-19 Won’t Stop Our Hospital’s ERP Implementation

A few months ago, while working on an ERP implementation for a large hospital, one of the directors on the supply chain team was abruptly pulled away. A key supplier admitted that one of their contractors was manufacturing in a facility not registered with the FDA and surgical gowns they manufactured may not be sterile. Considering this emergency, I was ready to postpone the meeting. But the team quickly agreed that we needed to complete this requirement gathering session. We needed to move forward and make progress on this project. After all, this supply chain disruption was exactly the type of challenge that our new solution would be able to handle more swiftly and accurately than their aging system could.

Due to the complexity of hospitals’ supply chains, clinically impactful disruptions are more common than most people expect. In addition to having multiple locations, departments, and business units to account for, hospital supply chains are further complicated by the range and types of products involved and the different requirements for handling them. For example, pharmacy products are highly regulated and therefore require accurate end-to-end tracking and management to ensure compliance. Countless products are subject to safety recalls – like the one that pulled our supply chain director away. Mismanagement of these supply chain complexities can result in situations in which doctors and nurses are unable to carry out their mission as caregivers.

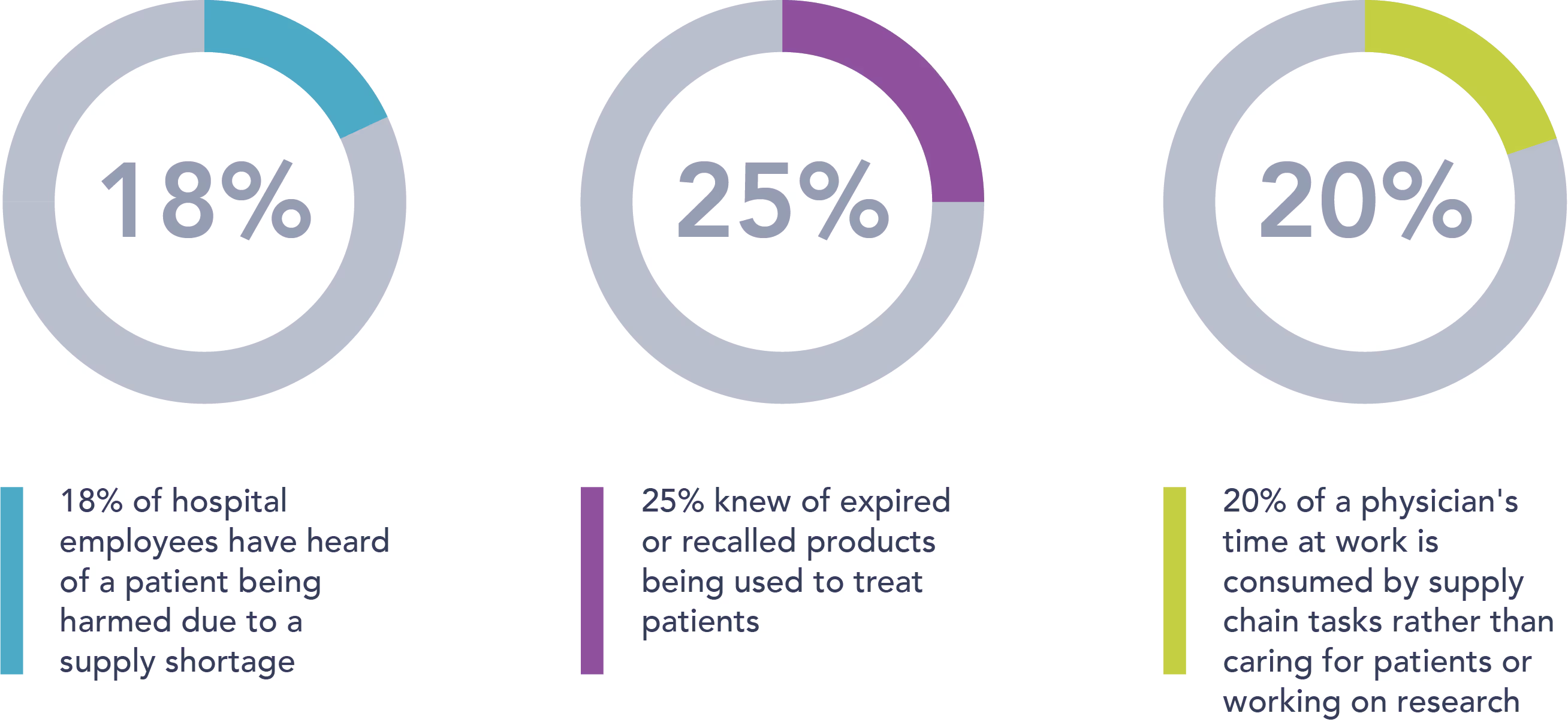

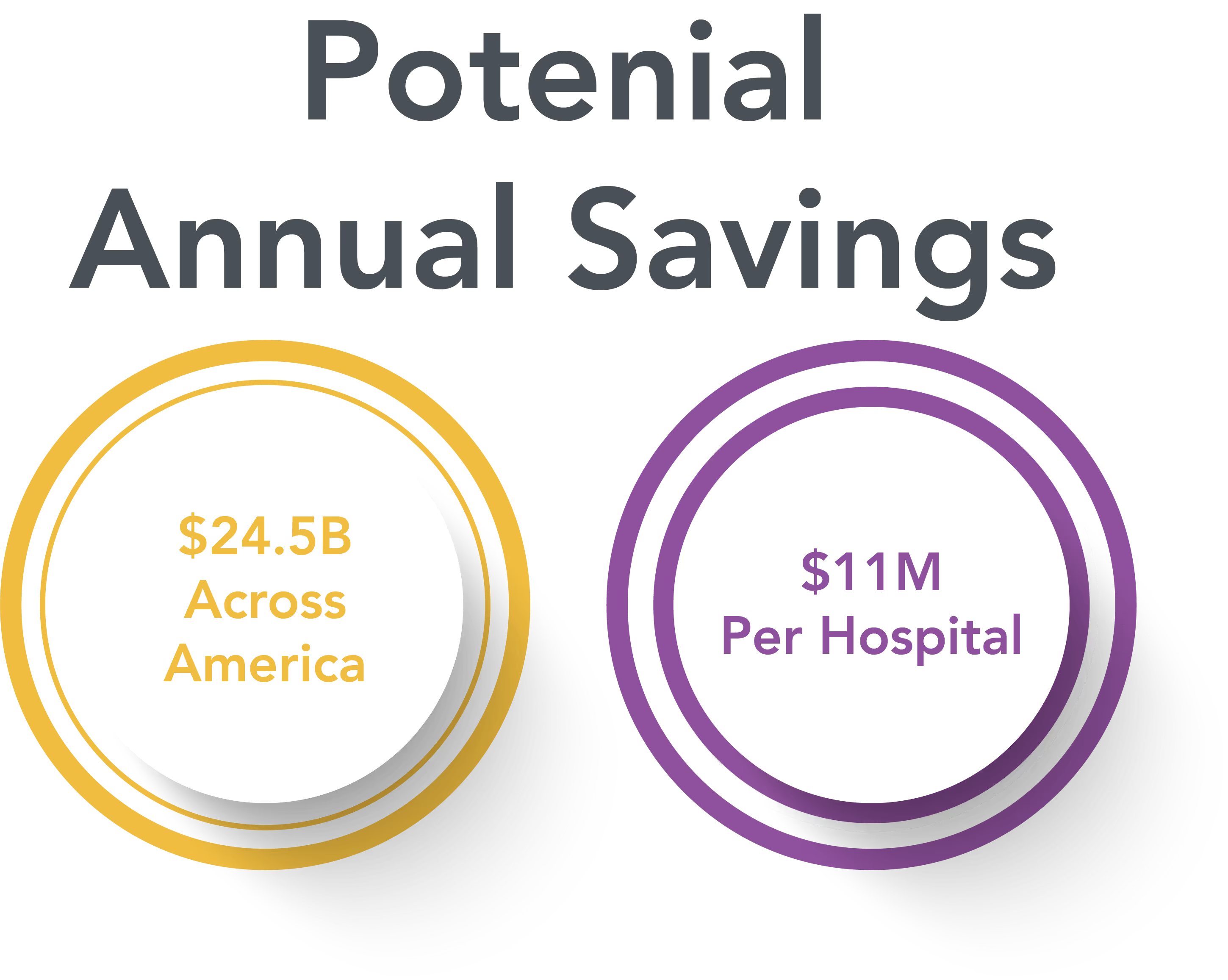

According to a Cardinal Health survey from 2017, 18% of hospital employees have heard of a patient being harmed due to a supply shortage. Also, 25% knew of expired or recalled products being used to treat patients. Inefficiencies and functional gaps in aging supply chain management systems are causing patients to receive less than desirable care. The same Cardinal Health survey showed that 20% of a physician's time at work is consumed by supply chain tasks rather than caring for patients or working on research. Hospitals in America could save $25.4 billion annually or approximately $11 million per hospital each year by eliminating supply chain inefficiencies. To generate these estimates, hospitals with high performing supply chains were compared with all others, which suggests that these savings are realistic and attainable without sacrificing overall hospital performance.

Every day, Supply Chain Management errors hinder doctors’ and nurses’ ability to effectively treat patients. With these issues occurring with such frequency even during normal operations, it is not hard to imagine the widespread Supply Chain challenges that would occur during a crisis, especially a global one. Today, amid a pandemic, hospitals must function as efficiently as possible. Doctors and nurses want to focus their attention on patient care rather than the availability of supplies, but this is not always possible.

The COVID-19 pandemic dramatically disrupted the medical supply chain. Demand for masks, antibiotics, and other supplies skyrocketed as medical staff, first responders, and average citizens all attempted to protect themselves from exposure to the virus. At the same time, the supply of these items plummeted. China, the world’s largest supplier of masks, was forced to close factories to prevent the virus’s spread. These factory closings in China limited the supply of antibiotic components to India, which is the world’s primary producer of antibiotics. As Indian government officials attempted to handle the pandemic in their own country, they began to restrict the quantity of antibiotics that could be exported. To make up for the lack of supplies available to be imported, American companies stepped up, put their normal business operations on hold, and began making ventilators and masks using any available supplies and equipment. Medical Supply Chain Management systems must be able to adapt to these unusual circumstances to procure the appropriate amount of supplies based on the changing demand and sourcing from new or unusual vendors. While the supply shortages caused by COVID-19 have grabbed media attention recently, the reality is that supply chain management issues existed in healthcare long before the pandemic forced them into the spotlight.

Hospitals must be able to overcome challenges and respond quickly and effectively during a crisis to provide the best care for those affected. People are getting dangerously ill and dying from coronavirus. Caring for patients and saving lives must be the priority for doctors and nurses. But they cannot devote their full attention to patient care unless they first have confidence in the integrity of the supply chain, certainty about the availability of drugs, PPE and other equipment, and confidence in the accuracy of the data that manages it all. The supply chain must be managed effectively by a Supply Chain Management System to allow doctors and nurses to focus on patient care with assurance that they will have the necessary and proper supplies to do so.

We continue to move forward with the hospital ERP implementation, even amid this pandemic. Virtually, I am still working alongside my colleagues in the hospital system, testing new scenarios, and working through evolving requirements. I am lucky to be on a team like this, uniquely positioned to follow the stay-at-home orders while still contributing to the future of healthcare. Together, we are transforming the enterprise and putting a supply chain solution in place that will prepare the hospitals for the next challenge. This work will ensure that at the go-live date doctors and nurses will be able to trust that the supply chain is being managed effectively, reducing the risk of mishaps and accelerating the response to health emergencies. This current pandemic shows just how significant the work we are doing is and why it is essential for us to continue working on the project. When the new Oracle Cloud implementation goes live, the hospital will find itself not only with streamlined reporting, supply and demand predictions, and supplier regulations but also with reduced operating costs.

Hospital supply chain management systems must be able to handle complexities, whether those complexities come from a global pandemic or regular daily activities. Definian migrates the data that drives these systems and ensures that they will function as efficiently and accurately as possible. With these systems in place, doctors and nurses can provide the high standard of care that we all hope for with the peace of mind that they will have the supplies needed to do so. While the recent pandemic highlighted the challenges facing healthcare supply chain management systems, these challenges existed all along. For hospitals to provide the best possible patient care, they must have an effective supply chain management system. Understanding the clinical impact of an SCM solution continues to motivate my team and me to keep working hard, especially through the COVID-19 pandemic. We know that when the project is complete it will make a real impact by alleviating stress and reducing extra work for doctors and nurses. At the same time, patients will receive better care because of streamlined operations. Because of our efforts to migrate to Oracle Cloud, the hospital will be in a better position to manage the next supply chain disruption. Our Definian team is proud to work on a project that will have such a long-lasting, positive impact.

Austin, Rob. “Hospitals' Supply Chain Savings Opportunity Jumps to $25.4 Billion a Year.” Guidehouse, 18 Oct. 2018, guidehouse.com/insights/healthcare/2018/supply-chain-analysis?utm_source=pr&utm_campaign=sc18.

Calculating Oracle Cloud Data Migration Effort

Oracle Cloud Data Migration Effort

If you're implementing Oracle ERP, SCM, Finance, HCM, Sales, or any combination of Oracle modules, it's critically important to review every activity and its corresponding effort well in advance of the start of the implementation.

When planning and estimating the data migration, there are 5 main phases that should occur during the course of an implementation: data assessment, data quality, data transformation, data load, and data validation. These phases, plus timeline, expertise, and several other factors, make up an accurate data migration estimate and help keep the project on track. Depending on your landscape and retention requirements, a sixth phase, systems retirement, might also be required.

Building A Data Mesh With Starburst

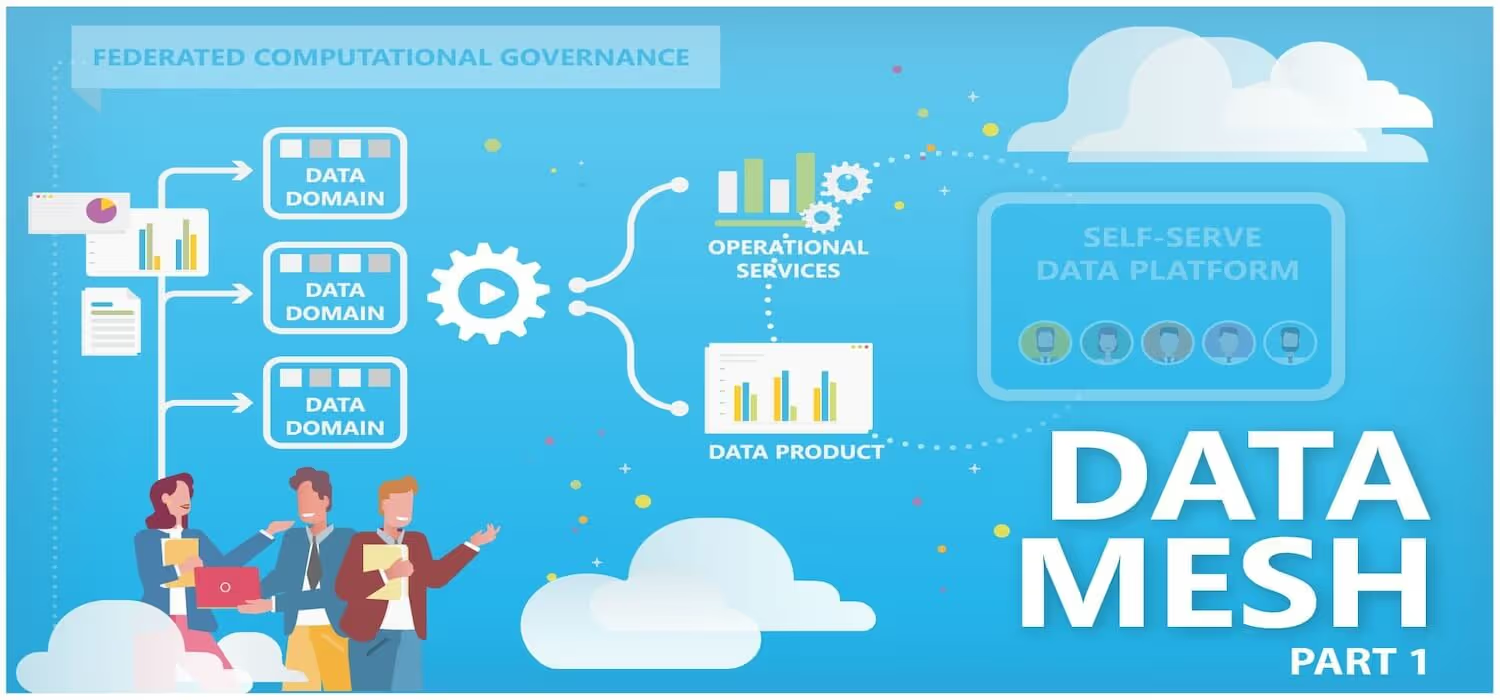

Data mesh is a new, decentralized approach to data that allows end-users to easily access data where it lives without a data lake or data warehouse. Domain-specific teams manage and serve data as a product to be consumed by others. Its objective is to allow for data products to be created from virtually any data source while minimizing intervention from data engineers.

Data mesh has four principles to achieve this objective. The principles are:

- Domain-Oriented Ownership

- Data as a Product

- Self-Service Data Infrastructure

- Federated Computational Governance

Starburst can be used to achieve a data mesh. The following sections outline how Starburst can be used in alignment with each of the four principles of data mesh.

Domain-Oriented Ownership

In a data mesh, data teams are organized by domain, which is another word for the subject area. Teams publish data products that other teams can access and use to derive their own new data products. Starburst’s goal is to allow teams to focus less on building infrastructure and data pipelines around serving data products and more on using familiar tools such as SQL to prepare data products for end-users.

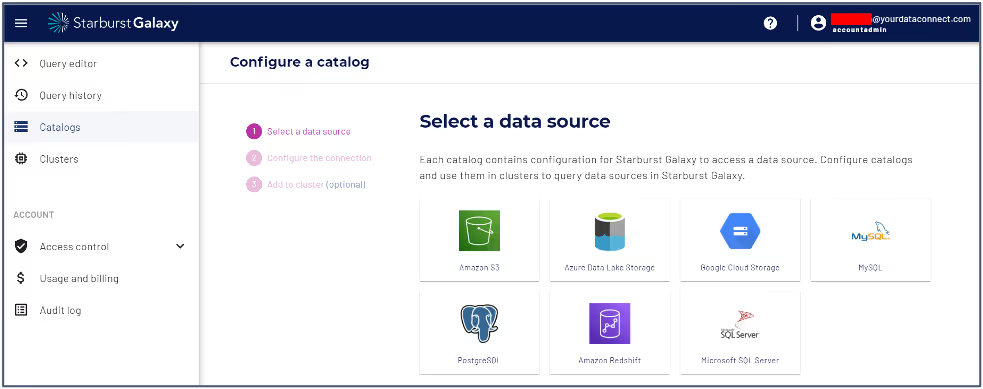

To achieve this, Starburst provides a large set of connectors that allows each domain to connect to data wherever and in whatever format it may live using a SQL query interface.

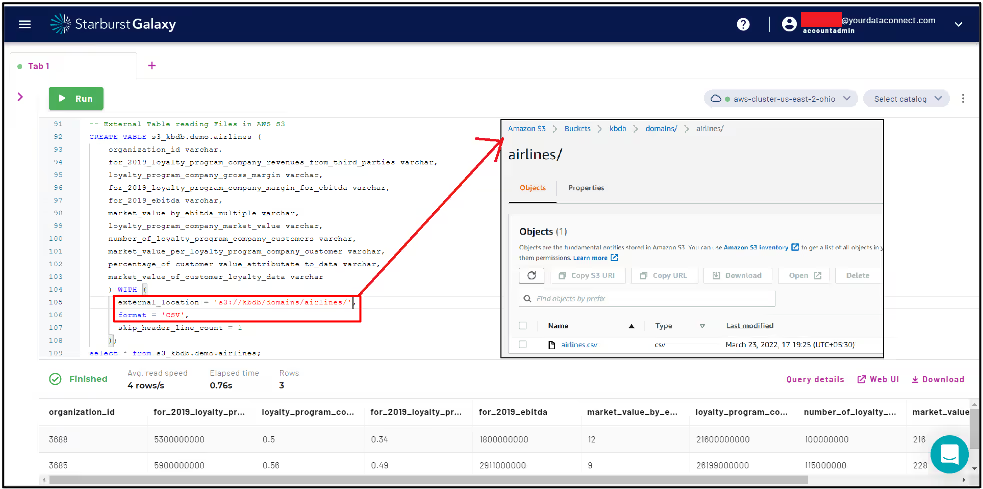

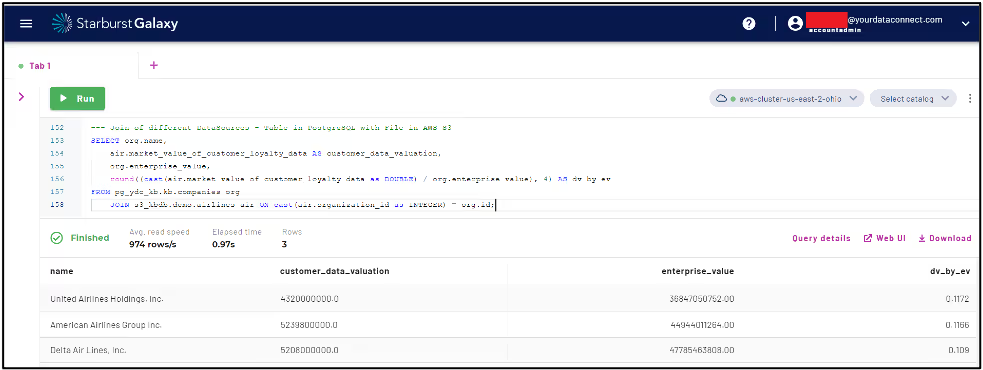

Figure 1 shows various example data sources that can be accessed from Starburst.

Data as a Product

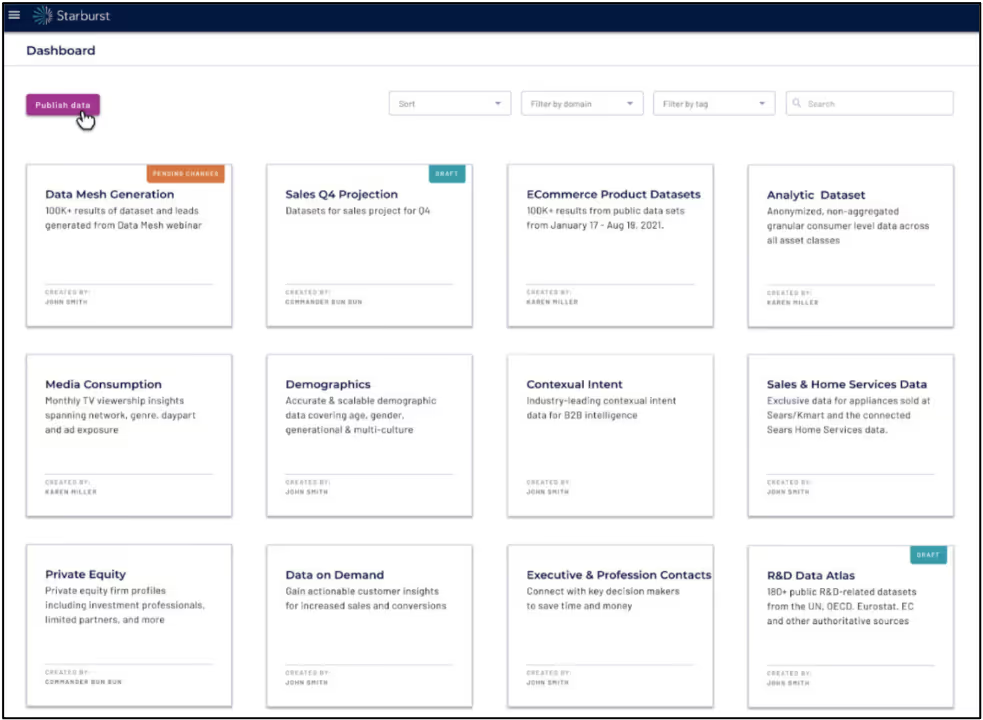

After connecting to a data source in Starburst, Starburst allows you to curate data products from it for other users to access.

Users can browse the published data products as shown in Figure 2.

Self-Service Data Infrastructure

Starburst’s SQL query interface allows users to discover, understand, and evaluate the trustworthiness of data products. Figure 3 shows an example of using the SQL query interface to query an Amazon S3-based data product.

Using the SQL query interface, you can also join data products from different technologies together. For example, Figure 4 shows an example of joining together a PostgreSQL-based data product with an Amazon S3-based data product on a common field. The result of this join can be considered a new, derived data product that can also be registered in Starburst.
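The idea of a cross-source join can be illustrated with a small stand-in. The sketch below does not use Starburst at all: two attached in-memory SQLite databases play the role of a PostgreSQL catalog and an Amazon S3 catalog, and every catalog, table, and column name is hypothetical. It only shows the shape of a single SQL statement that joins two separately stored datasets on a common field.

```python
import sqlite3

# Stand-in only: two attached SQLite databases act as two separate catalogs.
conn = sqlite3.connect(":memory:")
conn.execute("ATTACH DATABASE ':memory:' AS postgres_cat")
conn.execute("ATTACH DATABASE ':memory:' AS s3_cat")

# Hypothetical data products, one per "catalog".
conn.execute("CREATE TABLE postgres_cat.customers (customer_id INTEGER, name TEXT)")
conn.execute(
    "CREATE TABLE s3_cat.orders (order_id INTEGER, customer_id INTEGER, total REAL)"
)
conn.executemany(
    "INSERT INTO postgres_cat.customers VALUES (?, ?)",
    [(1, "Acme"), (2, "Globex")],
)
conn.executemany(
    "INSERT INTO s3_cat.orders VALUES (?, ?, ?)",
    [(10, 1, 250.0), (11, 1, 100.0), (12, 2, 75.0)],
)

# One query joins both sources on the shared customer_id field.
derived = conn.execute(
    """
    SELECT c.name, SUM(o.total) AS total_spend
    FROM postgres_cat.customers AS c
    JOIN s3_cat.orders AS o ON o.customer_id = c.customer_id
    GROUP BY c.name
    ORDER BY c.name
    """
).fetchall()
print(derived)
```

The result set is the kind of output that could be treated as a new, derived data product. In a real deployment the query engine, not the analyst, would handle reaching into each underlying system.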

Federated Computational Governance

Data mesh proposes a federated model for data governance that focuses on shared responsibility between the domains and the central IT organization in order to adhere to governance, risk, and compliance concerns while allowing adequate autonomy for the domains.

Starburst provides connectors and access to various data governance and data catalog tools such as Collibra and Alation to help users discover, understand, and evaluate the trustworthiness of data products.

Starburst also significantly reduces the need to create copies of data between systems as Starburst’s query engine can read across data sources and can replace or reduce a traditional ETL/ELT pipeline. Copying data also requires reapplying entitlements, which can result in potential opportunities for a data breach; with Starburst that risk is minimized simply because fewer copies of the data will exist since data is mostly queried at the source. This concept, known as data minimization, means data privacy, security, and governance are more achievable goals in organizations that embrace Starburst together with data mesh.

Sources

https://www.starburst.io/resources/starburst-data-products/

https://blog.starburst.io/data-mesh-and-starburst-domain-oriented-ownership-architecture

https://blog.starburst.io/data-mesh-and-starburst-data-as-a-product

https://blog.starburst.io/data-mesh-starburst-self-service-data-infrastructure

https://blog.starburst.io/data-mesh-federated-computational-governance

Data Monetization Blog - Insurance Industry Perspective

Every organization has areas ripe for driving monetization from internal data and information. Take the Insurance Industry as an example.

The success of an insurance company lies in its ability to assume and diversify risk. This means being good at underwriting, pricing, and managing risks and claims which will effectively drive market share and maximize returns on reserves. But let’s look at some common claims related data issues that may be impeding these initiatives.

- Mismatches of Losses against Written Premiums: Losses may be paid where the corresponding premium isn’t being collected, or perhaps for business that agents are not licensed to write (e.g., because of state limitations). This can impact the adequacy of existing rates and future ratemaking. It can also lead to inaccurate DOI reporting.

- Incorrect Cause of Loss and Coverage Combinations: Claims adjusters may be picking invalid combinations (e.g., Coverage Type is Bodily Injury, Cause of Loss is Hail). These types of issues create inaccurate loss reporting, which directly impacts loss reserves and loss ratios. Furthermore, the accumulation of incorrect data results in flawed development patterns used in actuarial models.

- Overpayment of Losses on Policy Limits: The company may be paying out too much in losses because improper governance allows losses to be matched to incorrect sub-limits for bundled policies. Again, this can lead to some of the same issues noted above.
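Two of the issues above, invalid coverage and cause of loss combinations and payments above a sub-limit, lend themselves to simple rule checks. The following is a minimal sketch under invented assumptions: the reference table of valid causes, the claim fields, and the amounts are all hypothetical.

```python
# Hypothetical reference data: causes of loss permitted for each coverage type.
VALID_CAUSES = {
    "Bodily Injury": {"Collision", "Slip and Fall"},
    "Property Damage": {"Hail", "Fire", "Collision"},
}

# Hypothetical claim rows.
claims = [
    {"claim_id": "C1", "coverage": "Bodily Injury", "cause": "Hail",
     "paid": 5_000, "sub_limit": 10_000},
    {"claim_id": "C2", "coverage": "Property Damage", "cause": "Hail",
     "paid": 12_000, "sub_limit": 10_000},
    {"claim_id": "C3", "coverage": "Property Damage", "cause": "Fire",
     "paid": 3_000, "sub_limit": 10_000},
]


def audit(claim):
    """Return the list of data issues found on a single claim."""
    issues = []
    if claim["cause"] not in VALID_CAUSES.get(claim["coverage"], set()):
        issues.append("invalid coverage/cause combination")
    if claim["paid"] > claim["sub_limit"]:
        issues.append("paid amount exceeds sub-limit")
    return issues


# Keep only the claims that have at least one issue.
findings = {}
for claim in claims:
    issues = audit(claim)
    if issues:
        findings[claim["claim_id"]] = issues
print(findings)
```

Counting and pricing findings like these is one way to put numbers behind a business case.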

Working with your business we can help you build and substantiate business cases to support your existing priorities, or help you identify areas of opportunity. Examples might include increasing revenue per policy holder, accurately recording the average cost per claim, maximizing return on surplus, or lowering loss ratios.

Here is our 5-step Data Monetization process to identify and drive your value-added opportunities:

- Identify the relevant stakeholders to gather input and insight

- Review existing or help build out new use cases to grow revenues, reduce costs, or manage risk

- Develop business cases by identifying root causes and critical data drivers, establishing assumptions and quantifying financial impacts

- Execute each business case, by implementing data governance and data management activities

- Track benefits realized from executable data monetization activities against planned assumptions

Let us help you reach your potential!

Data Conversion Lessons Learned at the State of Iowa

The State of Iowa and Workday in conjunction with governing.com shared the State’s journey to the cloud over the past several years.

During the presentation, Matthew Rensch reviewed the State’s Workday implementations at the DOT, ISU, and the State of Iowa Government and shared lessons learned over the course of the projects. One of the three most important lessons learned was regarding data conversion. This is what he had to say:

“Data Conversion is another piece. It was probably the single largest headache for us at the DOT.

Understanding what our legacy data was, how it was used, what it was defined as, and how it fits into Workday is not something you want to do on your own. It is not something you want to brute force through.

Your IT shops will definitely tell you, ‘Oh yeah, we know where our data is. We know what our data is.’ Once you start to dig into it, you will quickly find that it’s not that simple and it’s not that easy. Again, this is another partner that we partner with. We’re partnering with a company called Definian out of Chicago.

And I’ll tell you, on the DOT implementation, the single point of headache and problem we had was with the data conversion efforts. I have spent 1/10th of the time on data conversion efforts for the entire state than I did for one agency at the DOT.

The data conversion piece of it has been the easiest thing so far because Definian came in, they worked our staff both business and IT. They went through the workbooks, they understood what our current data was, and they did the work to figure out how the data fit into Workday.

They did all of the technical work and what they would pass it back to us are those things that come back as errors for us to fix. So, something that didn’t map, we go out and look to see and go out and fix those. Before we were doing it all. Now, we’re only doing small piece and this part of it has been huge.”

While we’re honored by this unsolicited feedback on our services, the data difficulties at the DOT that Matthew discussed are the same difficulties most organizations encounter during implementations, and they should be a focus when planning one.

Contact us for more information about Definian's Workday data migration services.

Project Snapshot: Workday Data Migration for a State Government

Client: State Government with Antiquated Legacy Data Landscape

Background

The State runs nine disjointed, antiquated ERP applications for HCM and finance across 70+ agencies. Faced with evolving business requirements, increased maintenance costs, loss of technical mainframe knowledge, and difficulty hiring and keeping staff willing to use these old systems, the State cannot run its business effectively. To improve its operations and address these issues, the State embarked on a transformation initiative that will consolidate its business processes and data into a single instance of Workday.

Project Challenges

- Mainframe with missing and inaccurate COBOL copybooks

- Lack of technical resources that understand mainframe data structures

- Data issues from lack of data validations and field re-purposing over time

- Data integrity issues across legacy Benefits and HR applications

- Compressed timelines caused by budget constraints

Key Solutions Summary

- Data migration software that automatically accesses raw EBCDIC data without programming

- Automated processes that identify copybook and EBCDIC data mismatches

- Automated profiling provides data statistics about field usage

- Data migration experts guide business resources and maintain focus to meet the tight schedule

- CTQ (Critical-to-Quality) validations ensure the data is Workday ready prior to each tenant build
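To give a sense of what reading raw EBCDIC against a copybook-style layout involves, here is a minimal sketch. It is not Definian's software: the field layout is hypothetical, code page 037 is an assumption (mainframes use several EBCDIC code pages), and only plain text fields are handled, not packed decimal or binary fields.

```python
# Hypothetical fixed-width layout, as a COBOL copybook might describe it:
# (field name, offset, length)
LAYOUT = [("EMP_ID", 0, 6), ("LAST_NAME", 6, 10), ("DEPT", 16, 4)]
RECORD_LENGTH = 20


def decode_record(raw: bytes) -> dict[str, str]:
    """Decode one EBCDIC record (code page 037 assumed) into named text fields."""
    if len(raw) != RECORD_LENGTH:
        raise ValueError(f"expected {RECORD_LENGTH} bytes, got {len(raw)}")
    # cp037 is a single-byte code page, so character offsets equal byte offsets.
    text = raw.decode("cp037")
    return {name: text[start:start + length].rstrip() for name, start, length in LAYOUT}


def layout_mismatches(record: dict[str, str]) -> list[str]:
    """Flag fields whose content contradicts what the layout says they hold."""
    problems = []
    if not record["EMP_ID"].isdigit():
        problems.append("EMP_ID is not numeric")
    return problems


# Build sample EBCDIC bytes from text purely for demonstration.
good = decode_record("004217SMITH     HR01".encode("cp037"))
bad = decode_record("X04217SMITH     HR01".encode("cp037"))
print(good, layout_mismatches(good), layout_mismatches(bad))
```

A field that fails a check like this across many records is a hint that the copybook and the data have drifted apart, or that the field has been repurposed over time.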

Data Migration Check List for Oracle ERP Cloud Implementations

If you’re implementing Oracle Cloud ERP, data can be a serious pain point unless the right people, process, and technology are in place to extract, transform, cleanse, enrich, and load the data. The following checklist draws on Definian's experience with dozens of Oracle projects and can help your organization prepare for its upcoming Oracle Cloud ERP implementation.

Assess the Landscape

Start planning the data effort by assessing what you have today and where you are going tomorrow. While frequently glossed over, spending time assessing the landscape provides three prime benefits to the program. The first benefit is that it gets the business thinking about the possibilities of what can be achieved within their data, by outlining what data they have today and what data they would like to have in the future. Secondly, this exercise gets the organization engaged on the importance of data and instills an ongoing master data management and data governance mindset. The third benefit is that it enables the team to start to seamlessly address the major issues that programs encounter when assessment is glossed over.

- Create legacy and future state data diagrams that capture data sources, business owners, functions, and high-level data lineages.

- Outline datasets that don’t exist today that would be beneficial to have in Oracle Cloud.

- Ensure the future state landscape aligns with data governance vision and roadmap.

- Reconcile legacy and future state data landscapes to ensure there are no major unexpected gaps.

- Capture the type, volume, and load method for the different types of attachment/document data that will be required to reside in Oracle.

- Perform initial evaluation of what can be left behind/archived by data area and legacy source.

- Perform initial evaluation of what needs to be brought into the new Oracle based landscape and reason for its inclusion.

- Profile the legacy data landscape to identify values, gaps, duplicates, and high-level data quality issues.

- Interview business and technical owners to uncover additional issues and unauthorized data sources.
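As an illustration of the profiling step in the checklist above, the sketch below computes a few basic statistics per column: how many values are missing, how many distinct values exist, and which values repeat. The supplier rows and column names are hypothetical, and real profiling would run against full legacy extracts.

```python
from collections import Counter

# Hypothetical legacy supplier rows; None or "" represents a missing value.
rows = [
    {"supplier_id": "S1", "name": "Acme Corp", "tax_id": "11-111"},
    {"supplier_id": "S2", "name": "Acme Corp", "tax_id": ""},
    {"supplier_id": "S3", "name": "Globex", "tax_id": None},
    {"supplier_id": "S3", "name": "Globex", "tax_id": "33-333"},
]


def profile(rows, column):
    """Summarize row count, missing values, distinct values, and repeats for a column."""
    values = [r.get(column) for r in rows]
    populated = [v for v in values if v not in (None, "")]
    counts = Counter(populated)
    return {
        "rows": len(values),
        "missing": len(values) - len(populated),
        "distinct": len(counts),
        "duplicated_values": sorted(v for v, n in counts.items() if n > 1),
    }


report = {col: profile(rows, col) for col in ("supplier_id", "name", "tax_id")}
for col, stats in report.items():
    print(col, stats)
```

Even this small sample surfaces a repeated key (`S3`), a likely duplicate supplier name, and half of the tax IDs missing, which are the kinds of findings that feed the data quality strategy.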

Create the Communication Process

Communication is a critical component of every implementation. Breaking down the silos enables every team member to understand what the issues are, the impact of specification changes, and data readiness. (Read about the impact of siloed communication.)

- Create migration readiness scorecard/status reporting templates that outline both overall and module specific data readiness.

- Create data quality strategy that captures each data issue, criticality, owner, number of issues, clean up rate, and mechanism for cleanup.

- Establish data quality meeting cadence.

- Establish a cadenced meeting with Oracle Support to discuss critical Service Requests (SRs) and ensure any Oracle-related roadblocks will be resolved in a timely manner.

- Define detailed specification approval, change approval process, and management procedures.

- Collect the data mapping templates that will be used to document the conversion rules from legacy to target values.

- Define high-level data requirements of what should be loaded for each Pod. We recommend getting as much data loaded as early as possible, even if bare bones at first.

- Define status communication and issue escalation processes.

- Define process for managing RAID log.

- Define data/business continuity process to handle vacation, sick time, competing priorities, etc.

- Host meeting that outlines the process, team roles, expectations, and schedule.

Capture the Detailed Requirements

Everyone realizes the importance of up-to-date and comprehensive documentation, but many hate maintaining it. Documentation can make or break a project. It heads off unnecessary rehash meetings and brings clarity to what should occur versus what is occurring.

- Document detailed legacy to Oracle Cloud data mapping specifications for each conversion and incorporate additional cleansing and enrichment areas into data quality strategy.

- Incorporate the timing of Oracle’s quarterly patches for each Pod into the project schedule so that any updates to FBDI/HDL templates are identified and reflected in the mapping specs, conversion programs, etc.

- Be cautious when scheduling test cycles or go-live around quarterly patches.

- Document system retirement/legacy data archival plan and historical data reporting requirements.

Build the Quality, Transformation and Validation Processes

With the initial version of the requirements in hand, it's time for the team to build the components that will perform the transformation, automate cleansing, create quality analysis reporting, and validate and reconcile converted data. To reduce risk on these components, it's helpful to have a centralized team and a dedicated data repository that all data and features can access.

- Create data analysis reporting process that assists with the resolution of data quality issues.

- Build data conversion programs that will put the data into the Oracle Cloud specific format (FBDI, ADFdi, HDL, etc.).

- If necessary, review Oracle documentation or submit service requests for any unexpected findings when working with Oracle delivered formats or load programs.

- Incorporate validation of the conversion against Oracle configuration from within the transformation programs.

- Enable pre-validation reporting process that captures and tracks issues outside of the Oracle load programs.

- Define data reconciliation and data validation requirements and corresponding reports.

- Build query-based reporting that extracts raw migrated data out of Oracle Cloud to streamline validation and reconciliation process.

- Verify data quality issues are getting incorporated into the data quality strategy.

- Confirm any delta or catch-up data requirements are accommodated within the transformation programs.
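The reconciliation reporting mentioned in the list above often starts with control totals. The sketch below compares record counts and summed amounts per data area between a legacy extract and an extract of migrated data from Oracle Cloud. The data areas and figures are invented for illustration, and a real reconciliation would go on to drill into the individual records behind each difference.

```python
from decimal import Decimal

# Hypothetical control totals: (record count, summed amount) per data area.
legacy = {
    "Suppliers": (1200, Decimal("0")),
    "Invoices": (8450, Decimal("912345.67")),
}
loaded = {
    "Suppliers": (1200, Decimal("0")),
    "Invoices": (8447, Decimal("911900.17")),
}


def reconcile(legacy, loaded):
    """Return per-area differences between the legacy and migrated control totals."""
    diffs = {}
    for area in sorted(set(legacy) | set(loaded)):
        l_count, l_amount = legacy.get(area, (0, Decimal("0")))
        o_count, o_amount = loaded.get(area, (0, Decimal("0")))
        if (l_count, l_amount) != (o_count, o_amount):
            diffs[area] = {
                "count_diff": l_count - o_count,
                "amount_diff": l_amount - o_amount,
            }
    return diffs


differences = reconcile(legacy, loaded)
print(differences)
```

Areas that tie out drop off the report, so reviewers can spend their time on the three missing invoices rather than on rechecking suppliers.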

Execute the Transformation and Quality Strategy

While often treated separately, data quality and the transformation execution really go hand-in-hand. The transformation can't occur if the data quality is bad and additional quality issues are identified during the transformation.

- Ensure that communication plans are being carried out.

- Capture all data related activities to create your conversion runbook/cutover plan while processes are being built/executed.

- Create and obtain approval on each Oracle load file.

- Run converted data through the Oracle load process.

Validate and Reconcile the Data

In addition to validating Oracle functionality and workflows, the business needs to spend a portion of time validating and reconciling the converted data to make sure that it is both technically correct and fit for purpose. The extra attention to validating the data and confirming solution functionality could mean the difference between a successful go-live and an implementation failure or costly operational issues down the road.

- Execute data validation and reconciliation process.

- Execute specification change approval process per validation/testing results.

- Obtain sign-off on each converted data set.
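
One common form of reconciliation is comparing record counts and amount totals between the legacy extract and the data pulled back out of the target. The sketch below assumes both sides are available as lists of dictionaries with amounts held as strings; the field names `BU` and `AMOUNT` are hypothetical.

```python
from collections import defaultdict
from decimal import Decimal


def summarize(records, group_field, amount_field):
    """Return {group: (record_count, total_amount)} for a list of dicts."""
    counts = defaultdict(int)
    totals = defaultdict(Decimal)
    for record in records:
        group = record[group_field]
        counts[group] += 1
        totals[group] += Decimal(record[amount_field])
    return {group: (counts[group], totals[group]) for group in counts}


def reconcile(legacy, converted, group_field, amount_field):
    """List groups whose count or total differs between the two data sets."""
    legacy_summary = summarize(legacy, group_field, amount_field)
    converted_summary = summarize(converted, group_field, amount_field)
    differences = []
    for group in sorted(set(legacy_summary) | set(converted_summary)):
        before = legacy_summary.get(group, (0, Decimal("0")))
        after = converted_summary.get(group, (0, Decimal("0")))
        if before != after:
            differences.append((group, before, after))
    return differences


# Hypothetical data: one UK1 record never made it into the target.
legacy = [
    {"BU": "US1", "AMOUNT": "100.00"},
    {"BU": "US1", "AMOUNT": "250.50"},
    {"BU": "UK1", "AMOUNT": "75.00"},
]
converted = [
    {"BU": "US1", "AMOUNT": "100.00"},
    {"BU": "US1", "AMOUNT": "250.50"},
]
differences = reconcile(legacy, converted, "BU", "AMOUNT")
for group, before, after in differences:
    print(group, "legacy:", before, "converted:", after)
```

Using `Decimal` rather than floating point keeps the totals exact, which matters when sign-off depends on amounts tying out.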

Retire the Legacy Data Sources

Depending on industry and regulatory requirements, system retirement could be of vital importance. Just because it is last on this checklist doesn't mean system retirement should be an afterthought or be addressed only at the end. Building on the high-level requirements captured during the assessment, the retirement plan should be fleshed out and implemented throughout the course of the project.

- Create the necessary system retirement processes and reports.

- Execute the system retirement plan.

Following this checklist can minimize your chance of failure or rescue your at-risk Oracle ERP Cloud implementation. While this list seems daunting, rest assured that what you get out of your Oracle implementation will mirror what you put into it. Time, effort, resources, and – most of all – quality data will enable your strategic investment in Oracle Cloud to live up to its promises.

Workday Data Migration Services for Government

How will you ensure your data is Workday ready?

Workday’s functionality will transform and accelerate your finance and HCM operations. However, the implementation will only be successful when your data is Workday ready. Data is a strategic asset that drives organizational performance and the implementation’s critical path. Data issues will delay your implementation and reduce the effectiveness of your Workday solution. To maximize the efficacy of Workday, ensure the implementation timeline holds true, and make each tenant build successful, data needs to be delivered through a predictable, repeatable, and highly automated process.

- What is your data migration methodology?

- Do you have resources to document, build, maintain, and execute the data migration?

- Are your data preparedness tools and templates built to get you ahead of data issues?

- How will you know that your data is both functionally and technically correct prior to loading into Workday?

Why do governments choose Definian to ensure their data is Workday ready?

Definian's Workday data migration specialists, methodology, and Applaud® data migration software seamlessly fit into Workday’s implementation standards

- Enable SMEs to focus on improving the organization and the efficacy of the Workday solution

- Comprehensive reporting ensures data audit, cleansing, and reconciliation metrics are captured at department and project levels

- Hundreds of pre-configured Workday specific validations accelerate data timelines and get the team ahead of data issues

- Robust payroll parallel analysis ensures that Workday payroll processes match legacy processing to the penny

- Access mainframe EBCDIC data without having to program a single line of code

Contact us for more information.

Top 7 Oracle Cloud Data Migration Challenges

To realize the power of Oracle's Cloud applications, it is critical that the underlying data is clean and correct. Whether implementing ERP, HCM, SCM, Financials, Sales, or any other Oracle Cloud module, the data migration into those modules is a risky process. Data migration is the leading cause of delay, budget overrun, and overall pain on most implementations, so understanding the top challenges before you start will be critical to the project's success.

This article and presentation share real world experiences that organizations encounter while migrating into Oracle Cloud. Use this information to prepare for your implementation or contact us to discuss how Definian can address them for you.

1. Oracle’s In Control

The biggest challenge we’ve seen customers struggle with: In the Cloud, Oracle’s in control.

This starts with a paradigm shift in how you think about requirements gathering. Previously, you might have customized your solution to fit your business practice – going into Oracle Cloud, you should be looking for ways to adapt to Oracle Best Practice and utilize configuration and personalization to make it your own.

As part of your transition, you need to ensure that you are reflecting these same changes and adaptations in your Data Conversion requirements, which should be driven by these business decisions.

Next, Oracle controls your pods (or environments). Pod refreshes are executed by Oracle and must be scheduled weeks in advance, pending Oracle’s schedule. Refreshes can take anywhere from 24 to 48 hours, depending on the volume of data in the pod. The more data, the longer it takes.

If you need to change a scheduled pod refresh, you have to give Oracle advance notice and you may not be able to simply push it back a day – the next slot might be a week or two later than you had planned.

One of the major limitations of converting data to Oracle Cloud is the Pod refresh schedule. In a traditional on-prem scenario, you might ask your DBA to back up your target environment right before doing a data conversion – or even to back up a specific table “just in case”. Then, if something goes wrong during conversion, you can quickly restore the backup and try again without losing too much time.

In Cloud – you can’t do that. If something goes wrong, you’re stuck with it until your next refresh is scheduled and configured, with the lead time that entails. Because of this, it’s imperative that as much validation as possible is done on the data prior to load, to really get the most out of your refresh cycles.

One of the key benefits of Oracle Cloud is that it is constantly evolving, with monthly and quarterly software patches. Your schedule needs to take into account when these patches are scheduled, as you won’t be able to use your system during that period. Additionally, these patches can introduce changes which impact the data migration process. These changes are not always communicated in the patch notes – sometimes FBDI templates may have additional fields added (sometimes in the middle of the file rather than at the end) or the actual interface load processes may change. You may not find this out until you review your converted data and notice something has changed.

This is getting better – earlier releases of Cloud had these kinds of changes more frequently, and it is much more stable now. That said, you need to be aware that anything could change at any time.

Finally, as we mentioned before, once your data is in – it’s in. You can’t back it out, you can’t use SQL to mass update it as easily as you could in an on-prem world, and there may or may not be an ability to mass-upload changes depending on the area.

When setting your schedule, try not to have UAT right before a quarterly patch comes through – any unexpected changes caused by that patch won’t be reflected in your conversions before going into PROD.

2. Square Pegs, Round Hole

One of the first challenges you’ll face when migrating data to Oracle Cloud is actually rooted in your current data landscape. Every company has a unique data landscape - how well do you really understand yours? Where are all the places data resides today? Do you know about the custom database the sales department built to manage a specific task? What about that department who decided to “innovate” and re-use fields for something other than their intended purpose? Is that documented anywhere? Have you acquired companies who might be running on different platforms than your main enterprise, which now need to be combined?

If you can’t answer these questions, you’re not alone! These may seem straightforward, but they’re often anything but. I’ve lost count of the number of companies we’ve talked with who uncovered critical data sources – or even complete sets of data! – days after they turned on their new system and “went live”.

At the extreme end, we had a client who had to bring an ex-employee back out of retirement to support a new ERP implementation, as this employee was the only one who knew anything about how their very old legacy system worked. Absolutely nothing was documented, and the legacy system was significantly customized – meaning there were no references publicly available to support the transformation exercise!

A slightly less extreme example we ran into more recently involved a client running on an antiquated mainframe system, where the one employee who knew the system was nearing retirement. It was a race against the clock to get out of the system before that happened, as their 500-page user manual consisted of illegible handwritten notes.

Many companies aren’t running just one system today - if you grew through acquisition (or even if you didn’t), you may have a different ERP for each company. There are probably Access databases or Excel files in play across the business. What about third party add-ons or workarounds bolted on to your primary data source? All of these need to be wrangled into a single set of data as part of the migration. This might require merging records across sources, harmonizing duplicates or combining individual fields.

The sooner you start understanding your specific landscape, the better prepared you’ll be when you find those blind spots.

Locking down the data sources is only the first step. Once you know where your data is, you need to work out how it’s being used. Every legacy system structures its data differently and is often customizable.

On top of that, unless you’re going through an upgrade of Oracle EBS to Oracle Cloud, there is generally a major structural disconnect between what you have today, and where you’re going tomorrow. Even between EBS and Cloud there are areas which have been reworked / redesigned or restructured, and that has to be taken into account as part of the migration. If you have multiple data sources, that’s multiple sets of restructuring.

To take a simple example – let’s consider Customer data. Each ERP system structures Customer data in a different way. SAP and PeopleSoft customers are stored and maintained in a completely different manner than Oracle Cloud customers. Every other platform you can consider will also have its own way of structuring this data. As part of the migration, you need to transform what you have today into what you need tomorrow.

A lot of companies we work with are doing their first major implementation in years, meaning they are running on systems which have been around for a long time. In an on-prem world, this often means they’ve been customizing these systems for a long time. All of this has to be unraveled when it’s time to move to the Cloud.

We have a long-term client we’ve been working with for years, as they’ve deployed a single instance of Oracle to their locations around the world. Over the years, we’ve combined more than 58 legacy sources in 39 sites over 17 countries. With so many sources, a lot of work went into ensuring that the migration for each site consolidated and structured the disparate legacy data into the same format – regardless of what it looked like in legacy. Even when sites were running on the same legacy platform, there was a lot of variability in their conversion processes. Four of the 39 sites were running MFGPro, but each of them was using it differently from the others and had a ton of unique customizations. Aligning them into Oracle took four completely separate processes to avoid unexpected results.

3. Stringent Load Criteria

With Cloud, Oracle does provide tools to try and make the loading of data more ‘user friendly’, with the aim of allowing organizations to handle this task on their own. If only it was that simple. There are two main load utilities to leverage – FBDI (File Based Data Import) and ADFdi (ADF Desktop Integration). The templates outline what to do once populated – the trick is getting legacy data to match the format, content and structure expected by Oracle Cloud.

Both FBDI and ADFdi enforce basic data integrity validation and structural cross-checks to prevent invalid data scenarios. If these rules are violated, the data will not load – leaving you with a sometimes cryptic error report to decipher and correct for. If you’re used to fixing things “live” – or pushing the data in with the plan to deal with data quality issues later – you may find yourself with load files which won’t load.

Even if you have data which is perfectly clean, you may still encounter issues with your FBDIs. Any configuration which doesn’t match your file will result in a load failure (trailing spaces in configuration are fun to track down!). Required fields which aren’t populated on the file will result in a load failure, even if they are something you haven’t used in the past. Files with too many records sometimes cause loads to fail or timeout (though this differs from file to file). There can also be diminishing performance returns if an FBDI has too many rows.
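
Two of the failure modes above lend themselves to simple checks before a file is built: values that differ from configuration only by stray whitespace, and files that carry too many rows. The sketch below is illustrative only. The payment terms values are made up, and the batch size is a placeholder, since workable row limits differ from file to file.

```python
def find_whitespace_mismatches(file_values, configured_values):
    """Return (file_value, configured_value) pairs that differ only by
    leading or trailing whitespace."""
    by_stripped = {value.strip(): value for value in configured_values}
    mismatches = []
    for value in file_values:
        if value in configured_values:
            continue  # exact match, nothing to report
        candidate = by_stripped.get(value.strip())
        if candidate is not None:
            mismatches.append((value, candidate))
    return mismatches


def split_into_batches(rows, max_rows):
    """Split rows into consecutive batches of at most max_rows each."""
    if max_rows < 1:
        raise ValueError("max_rows must be at least 1")
    return [rows[i:i + max_rows] for i in range(0, len(rows), max_rows)]


# Hypothetical configuration where one value was keyed with a trailing space.
configured = {"NET30 ", "NET60"}
mismatches = find_whitespace_mismatches(["NET30", "NET60", "NET90"], configured)
print(mismatches)

# Placeholder batch size of 3 rows, purely for demonstration.
batches = split_into_batches(list(range(7)), max_rows=3)
print([len(batch) for batch in batches])
```

A value such as `NET90`, which matches nothing even after trimming, is a genuine configuration gap rather than a whitespace problem, so it is deliberately left out of the mismatch list.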

FBDIs may seem like they make data migration a no brainer – but if you’ve not done the work in advance to make sure that the data in the templates is clean and consistent, you can end up going through multiple iterations of this process to get a file that will load successfully. It is extremely easy to burn a ton of hours caught in the load process, fixing issue after issue.

4. Incomplete Functionality

At some point as you’re starting to plan and prepare for your implementation, you’ll find yourself face to face with challenge number 4: Missing (or Incomplete) functionality. Oracle Cloud is very much a work in progress.

New features and functionality are constantly being released as part of Oracle’s quarterly patches. While the constant enhancements help keep the solution fresh, it does pose a challenge when something you need isn’t yet available. We’re not going to talk about every feature which might not exist yet – but we are going to highlight a few things which impact data migration.

While many areas are supported by FBDI loads, others are not. For example, Price Lists can only be loaded 1 at a time, making it very difficult for clients with large volumes of price lists, especially those with lots of configured items. If you have attachments (be they item, order or invoice attachments), you will likely find the process to load them involved and complicated. If you’re implementing Oracle Cloud PLM, you may find that while there is a Change Order FBDI process, it doesn’t work as intended without changes to the embedded FBDI macros.

There are also areas where the functionality may seem to exist – but isn’t actually functional. An example here is Item Revision attributes, that is, attributes defined at the Item Revision level. While the FBDI makes it seem like this is possible during conversion, they don’t actually do anything! Our client ended up having to assign these attributes at the master item level, not the revision level as they had wanted.

Another client ran into a challenge trying to migrate their serialized items. The Oracle FBDI only allows you to load a range of sequential serial numbers – and not serial numbers which are not sequential.
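
One way to work within that kind of constraint is to collapse a list of serial numbers into runs of sequential values, so each run can be supplied as its own range. The sketch below is not the approach used on that project. It assumes serials consist of a fixed prefix followed by a numeric suffix, and that a run of one serial can be expressed as a range whose start and end are the same.

```python
import re


def split_serial(serial):
    """Split 'SN0012' into ('SN', 12, 4): prefix, number, digit width."""
    match = re.fullmatch(r"(.*?)(\d+)", serial)
    if match is None:
        raise ValueError(f"serial {serial!r} has no numeric suffix")
    prefix, digits = match.groups()
    return prefix, int(digits), len(digits)


def to_ranges(serials):
    """Collapse serials into (from_serial, to_serial) sequential ranges."""
    keyed = []
    for serial in set(serials):  # ignore duplicates
        prefix, number, width = split_serial(serial)
        keyed.append((prefix, width, number, serial))
    keyed.sort()

    ranges = []  # each entry: (key of last serial, first serial, last serial)
    for prefix, width, number, serial in keyed:
        if ranges and ranges[-1][0] == (prefix, width, number - 1):
            # Extends the current run by exactly one.
            ranges[-1] = ((prefix, width, number), ranges[-1][1], serial)
        else:
            ranges.append(((prefix, width, number), serial, serial))
    return [(first, last) for _, first, last in ranges]


# Hypothetical serial numbers with gaps between runs.
serials = ["SN0007", "SN0001", "SN0003", "SN0002", "SN0020", "SN0008"]
ranges = to_ranges(serials)
for first, last in ranges:
    print(first, "to", last)
```

Serials with different prefixes or different digit widths are never merged into the same run, which avoids treating `SN9` and `SN10` as neighbors when the source system considers them different formats.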

Finally, and maybe weirdest of all, is a challenge we’ve encountered recently, where the customer load process stopped working one day. Checking the load files, we confirmed everything was as it should be and reached out to Oracle to understand what was causing the failure. Oracle didn’t have a definitive answer as to what we were seeing, and suggested a reboot of the Oracle server. This worked – temporarily. When we ran into the same issue on another pod, a reboot didn’t resolve the issue. We’re still not sure what the underlying issue was, or if the recent quarterly patch might have addressed it.

As we said, Oracle Cloud is a work in progress, and as with any software of this magnitude there are going to be issues and kinks to work out. Service Requests (SRs) are key – working with Oracle to troubleshoot and determine how to address the issues you encounter. If you’re loading inventory and the load results in errors, an SR is the only way you’re going to get the interface table purged to try the load again.

Some of the FBDIs are more ‘solid’ than others. In general, the Financials and HR FBDIs are currently more stable than the manufacturing and project based templates. As more companies start implementing those modules, those FBDIs should evolve and start to become more stable. That said, even ‘stable’ processes have been known to have issues.

5. Cryptic Error Messages

The first time you set out to load your data to Oracle Cloud, you will very likely have some (or all) of your records fail, and find yourself faced with an Oracle error report. Depending on what type of data you’re loading, the error reports can look very different both in format and content. Some error reports are very straightforward and easy to read and understand. Others…not so much.

At times, Oracle error reports contain cryptic error messages that are hard, if not impossible, to understand and resolve. We’ve even run into cases where the import shows the load was successful, but no data was actually loaded. It turns out there are some load files that have ‘silent’ errors – showstopping load errors which are never reported or communicated, but which stop the loads in their tracks.

One of our favorite error messages, which we ran into during a customer load, was “Error encountered. Unable to determine cause of error.” That one was super fun to troubleshoot!

Also fun are misleading error messages, that is, error messages that are completely unrelated to the actual problem. We had a purchase order conversion failing, which the error report attributed to a currency conversion error. This was a bit perplexing as all the POs and the financial ledgers were in USD – there shouldn’t have been any currency to convert! We reached out to Oracle for some guidance, and they had no idea what the issue was. Eventually, we determined that the issue was caused by the external FX loader, which was being used to load currency conversion rates. Once that was paused, the POs loaded without a hitch. The problem wasn’t with the PO load – the problem was caused by the FX loader conflict.

Even when errors are reported more clearly, they don’t always point to the true underlying issue in the most direct way. For instance, when loading projects, you may run into issues if the project manager was not active on the HCM side as of the project start date. If the current project manager was assigned after the inception of the project, this is always going to lead to a load failure. The message you’ll see reported is “The project manager is not valid”. If you know to check the dates, you might get to the bottom of the issue quickly. If you don’t, and see that the project manager exists and IS valid today, it may take you a bit to realize that the issue is truly that they weren’t valid as of the project start date.
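
A date-effective check like this can be run before the load so the real cause is visible up front. The following is a minimal sketch, assuming you have an extract of HCM assignments as (person ID, start date, end date) tuples; the data shapes and identifiers are hypothetical.

```python
from datetime import date


def manager_active_on(assignments, person_id, as_of):
    """True if person_id has an assignment covering the as_of date.

    assignments: list of (person_id, start_date, end_date) tuples;
    end_date is None for an open-ended assignment.
    """
    for pid, start, end in assignments:
        if pid == person_id and start <= as_of and (end is None or as_of <= end):
            return True
    return False


def check_projects(projects, assignments):
    """Return project numbers whose manager was not active on the start date."""
    failures = []
    for project in projects:
        if not manager_active_on(
            assignments, project["manager_id"], project["start_date"]
        ):
            failures.append(project["number"])
    return failures


# Hypothetical data: the manager joined after project P-1 began.
assignments = [("E100", date(2019, 6, 1), None)]
projects = [
    {"number": "P-1", "manager_id": "E100", "start_date": date(2018, 1, 15)},
    {"number": "P-2", "manager_id": "E100", "start_date": date(2020, 3, 1)},
]
failures = check_projects(projects, assignments)
print(failures)
```

Here the manager is valid today, yet `P-1` is flagged because the check is made as of the project start date, which is the distinction the error message hides.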

6. Cloud Database Access

As you’re working through the errors, you’re likely going to run into this next challenge: accessing the Oracle Cloud database. If you are coming from a background of on-prem data migration, this might be a shock as you can’t directly SQL query the database to quickly look into specific data concerns, to mass update records, to check record counts, etc.

There are ways around this, primarily through leveraging custom BI Publisher reports to look at specific query conditions. Another option is PVOs, or Public View Objects, which are pre-built view queries for key areas. However, they don’t pull all rows and fields from the underlying base tables, so if you need something other than what’s pre-defined, you might not be able to find it without building a custom report, and even that may not show you the full picture.

If you’re working through error reports to address load failures, it’s very difficult and almost impossible to update, correct or back out data once it’s loaded. Some areas do have the ability to be updated via FBDI, but not all do. To get around this challenge, our company built hundreds of pre-validation checks to run on the data before attempting to load and a utility that can query the Cloud outside the application. This ensures the load will be successful with minimal errors, and no need to back out or mass-update data.

7. Scope Creep

Once you think you’re done and all your data is loaded into your test POD, the business will start to test and work with the data. As the business starts to discover the power of Oracle Cloud applications, new business requirements are discovered that impact the original specifications and scope.

For example, one of our clients had a multi-country tax reporting requirement for a single legal entity. They designed a solution to handle this scenario but through their testing, they determined a more efficient way in which to structure their tax information. This resulted in changes not just to how their customer data was structured, moving attributes from the account level to the site level, but also to all their downstream conversions, including Customer Item Relationships, Open AR and Receipts, and Sales Orders and Contracts, just to name a few.

This small change had a big impact and is just one example.

There are additional complexities to consider when multiple Oracle Cloud applications are being implemented. On one engagement, there were multiple but related initiatives happening on separate schedules – Oracle Sales Cloud and Oracle ERP. The Sales Cloud Prospects were implemented without considering the ERP parties. When ERP users logged into the system for the first time, thousands of duplicated parties existed due to these Sales Cloud prospects. Once schedules aligned, complex cross-team deduplication processes had to be designed late in the project lifecycle, increasing the data team’s workload.

Sometimes scope is impacted by areas beyond the core Oracle Cloud solution. Third party interfaces can come with their own requirements based on what they consume from your Oracle data hub.

At one client, we converted additional items to support an Oracle Cloud to Wavemark integration. However, the initial integration failed. It was determined that Oracle needed to store intraclass UoM data for the Wavemark integration to be functional. This entirely new conversion object was not part of the Client’s original data migration scope.

These three examples are just the tip of the iceberg, and every project presents its own unique challenges. You don’t always know what you don’t know.

Oracle Cloud ERP Implementation for a Global Aerospace and Defense Company

Project Summary

A division of a multi-billion dollar global aerospace and defense company needed to consolidate their 14 different business data sources onto a single integrated platform. The purpose was to help modernize and optimize their business operations, streamline the on-boarding of new acquisitions, and improve analytical insights. They selected Oracle ERP Cloud as their solution and engaged Definian to support the data conversion requirements as data cleansing and harmonization were a critical concern.

“Your ‘can do‘ attitude really helped keep things moving and your ability to organize 35 simultaneous conversions was pretty amazing!” – PTM Lead

Project Risks and Mitigation Strategy

- The client was utilizing a homegrown ERP system to manage their manufacturing data. Without client technical resources, Definian worked with the business to compare the front-end with the back-end data structure and to determine the data mapping required for Oracle ERP Cloud.

- A large portion of legacy data was maintained across numerous spreadsheets. The manual nature of the legacy data resulted in mistyped, incomplete and incorrect information. The invalid data scenarios were identified as part of Applaud’s comprehensive error reporting, providing the Client data confidence well before Go Live.

- Supplier and customer data was duplicated both within and across the 14 data sources. Additionally, the existing data did not meet Oracle’s data requirements or structure. Definian deduplicated and restructured both suppliers and customers as part of the automated conversion process, ensuring only unique suppliers and customers were brought forward into the new Oracle solution.

- Existing supplier and customer addresses consisted of unverified free text with no internal consistency. Definian’s address standardization process standardized and validated addresses while providing audit trails, ensuring transparency every step of the way.

- A major source of duplicated customer data was driven by complex and inconsistent legacy contract pricing business processes. Definian’s de-duplication engine identified, consolidated and harmonized these inconsistencies to allow the client to gain insights into the real overall customer spend.

- The existing general ledger and Oracle ERP Cloud ledger store transactions at different precision levels. Definian created detailed reports to help the business quickly isolate and address the resulting imbalances within each accounting period.

- Business requirements for On Hand Inventory serial numbers varied greatly between the legacy and Oracle solutions. Definian developed the conversion such that all On Hand Inventory was split into individual quantities associated with each serial number to bridge the gap without losing sight of the assigned serial numbers.

- All master data (customers, suppliers, etc) was loaded to production four weeks prior to transactional data (sales orders, purchase orders, etc). During this transition period, all changes in the legacy systems needed to also be made in Oracle ERP Cloud. Definian’s delta processing reported both newly created and changed master data to ensure Oracle Cloud remained up to date in the interim.
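
To illustrate the ledger precision item in the list above: when journal lines that balance at a finer precision are rounded individually, a period can end up out of balance by a small amount. The sketch below assumes the legacy ledger carries more decimal places than the target and that half-up rounding applies. The case study does not state either detail, and this is not Definian's report logic.

```python
from collections import defaultdict
from decimal import Decimal, ROUND_HALF_UP


def rounding_imbalances(journal_lines, places=2):
    """Round each line to `places` decimals and report unbalanced periods.

    journal_lines: iterable of (period, debit, credit), amounts as strings.
    Returns {period: rounded_debits - rounded_credits} where non-zero.
    """
    quantum = Decimal(10) ** -places  # Decimal("0.01") for 2 places
    net = defaultdict(Decimal)
    for period, debit, credit in journal_lines:
        rounded_debit = Decimal(debit).quantize(quantum, rounding=ROUND_HALF_UP)
        rounded_credit = Decimal(credit).quantize(quantum, rounding=ROUND_HALF_UP)
        net[period] += rounded_debit - rounded_credit
    return {period: diff for period, diff in net.items() if diff != 0}


# Hypothetical lines that balance at three decimals in each period.
lines = [
    ("JAN-24", "10.005", "0"),
    ("JAN-24", "10.005", "0"),
    ("JAN-24", "0", "20.010"),
    ("FEB-24", "5.25", "0"),
    ("FEB-24", "0", "5.25"),
]
imbalances = rounding_imbalances(lines)
print(imbalances)
```

In this example the two January debits each round up to 10.01 while the single credit stays at 20.01, leaving January out of balance by 0.01 even though the source data balanced.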

Key Activities

- Identified and documented requirements for converting data from up to fourteen legacy sources, including unsupported homegrown solutions, to Oracle ERP Cloud.

- Identified, prevented, and resolved problems before they became project issues.

- Facilitated discussions between the business, the functional team, and the load team to coordinate requirements and drive data issues to completion before each test cycle and Go Live.

- Quickly adapted to changing requirements and proactively identified and addressed downstream implications, keeping the project on schedule.

- Harmonized, cleansed, and restructured customer and supplier data through Definian’s flexible de-duplication process such that only clean, unique records were converted.

- Standardized and cleansed addresses while providing transparency to the business.

- Utilized robust data profiling and error reporting to assist the business with data cleansing, data enhancement and data enrichment prior to Go Live.

- Fully automated all data transformation activities, removing error prone manual steps from all processes and ensuring consistent and predictable results.

- Tracked records throughout the data transformation process to aid with post-project auditing. Every record was traceable each step of the way from legacy to target.

- Identified changes made in legacy during the cutover period, enabling the business to account for these changes prior to Go Live and eliminating dual maintenance concerns.
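
The change identification described in the last item can be pictured as a comparison of two snapshots of the same legacy data, taken before and during the cutover window. This is a minimal sketch under that assumption, with hypothetical customer records keyed by ID. It does not represent how Applaud implements delta processing.

```python
def detect_deltas(previous, current):
    """Compare two snapshots keyed by record ID.

    previous, current: dict mapping record ID -> dict of field values.
    Returns (created_ids, changed), where changed maps a record ID to
    {field: (old_value, new_value)} for each field that differs.
    """
    created = sorted(set(current) - set(previous))
    changed = {}
    for record_id in sorted(set(current) & set(previous)):
        old, new = previous[record_id], current[record_id]
        diffs = {
            field: (old.get(field), new.get(field))
            for field in sorted(set(old) | set(new))
            if old.get(field) != new.get(field)
        }
        if diffs:
            changed[record_id] = diffs
    return created, changed


# Hypothetical snapshots of legacy customer master data.
previous = {
    "C001": {"name": "Acme", "city": "Dayton"},
    "C002": {"name": "Globex", "city": "Austin"},
}
current = {
    "C001": {"name": "Acme", "city": "Columbus"},
    "C002": {"name": "Globex", "city": "Austin"},
    "C003": {"name": "Initech", "city": "Tulsa"},
}
created, changed = detect_deltas(previous, current)
print("created:", created)
print("changed:", changed)
```

Reporting changes at the field level, rather than just flagging the record, tells the business exactly what needs to be keyed into the new system during the interim period.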

The Results

From unraveling a homegrown ERP system to cleansing duplicate data, Definian proactively identified and resolved all data issues well in advance of go-live, supporting a smooth transition to the Cloud. Definian improved overall data quality with an early focus on data and through a combination of data harmonization, address standardization, and pre-validation error handling. Definian’s detailed record tracking provided the business with everything they needed to support post-project audits and ensured everyone knew exactly how every scenario was handled. Definian’s comparison reports eliminated the concerns of dual maintenance during the four-week cutover period, providing peace of mind that everything was captured, and nothing was missed.

By engaging Definian’s data migration services, the client was able to achieve a smooth and successful Go Live on time and on budget. With 100% of converted data successfully loaded and verified, the business could begin leveraging their new Oracle ERP Cloud solution from Day One.

The Applaud® Advantage

To help overcome the expected data migration challenges, the organization engaged Definian International’s Applaud® data migration services.

Three key components of Definian International’s Applaud solution helped the client navigate their data migration:

- Definian’s data migration consultants: Definian’s services group averages more than six years of experience working with Applaud, exclusively on data migration projects.

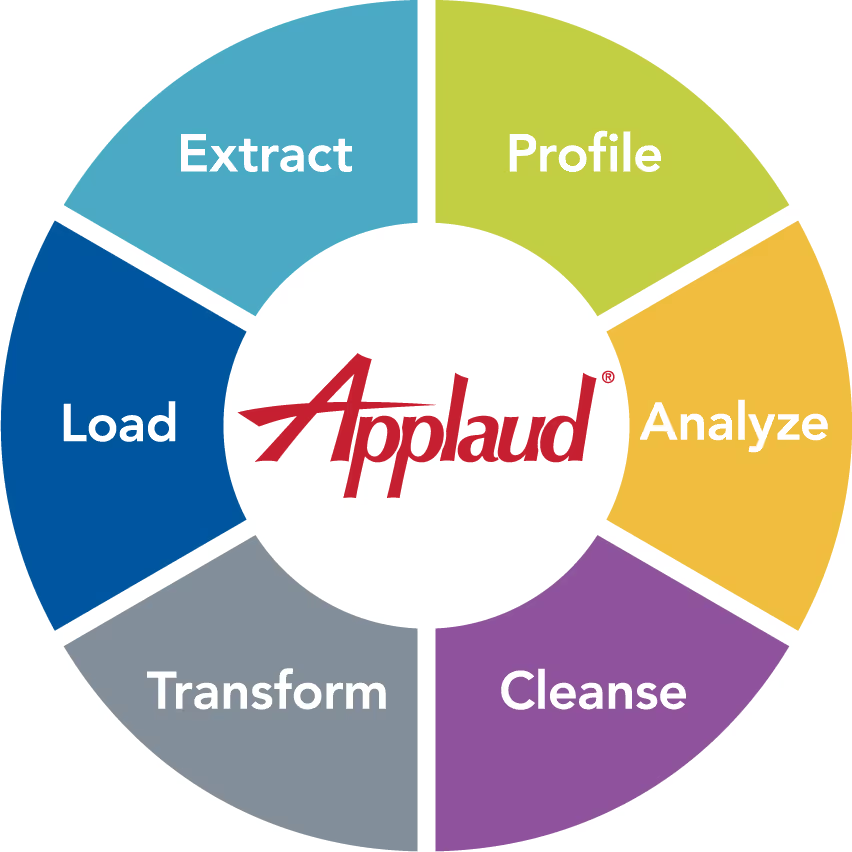

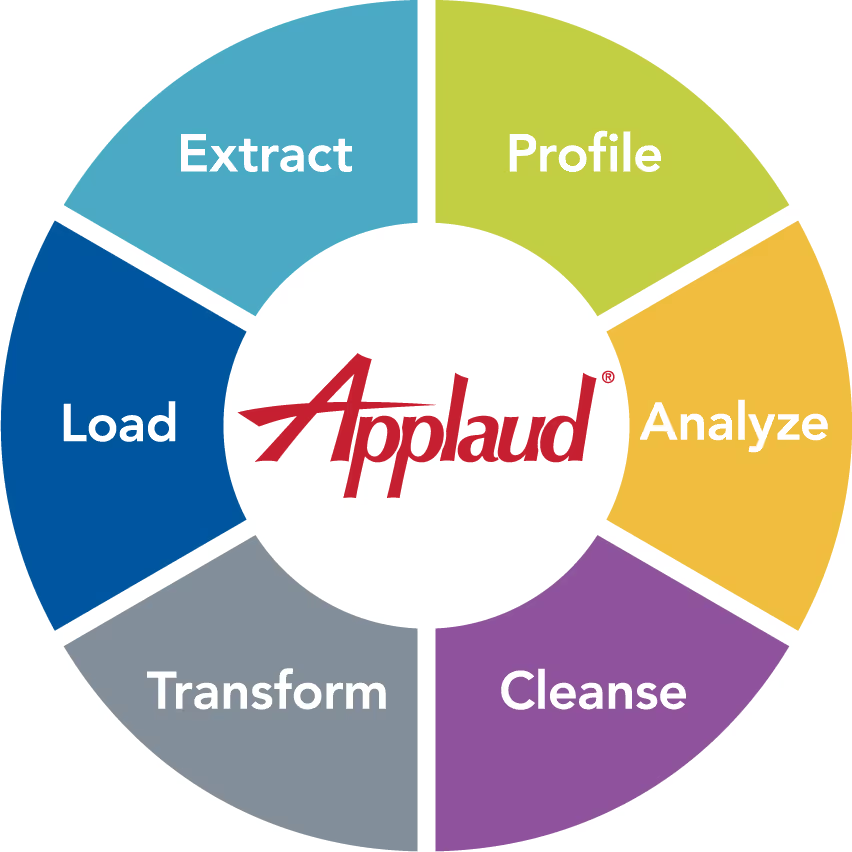

- Definian’s methodology: Definian’s EPACTL approach to data migration projects is different than traditional ETL approaches and helps ensure the project stays on track. This methodology decreases overall implementation time and reduces the risk of the migration.

- Definian’s data migration software, Applaud®: Applaud has been optimized to address the challenges that occur on data migration projects, allowing the team to accomplish all data needs using one integrated product.

Gaining a Competitive Edge Through a Comprehensive Data Approach

It has become increasingly difficult for Implementation Partners to distinguish themselves from their competition as Cloud solutions have eliminated many of the previously available customizations.

System Integrators’ web pages have interchangeable information and buzzwords, forcing individual partners within these firms to find additional ways to distinguish their services from competitors on every unique pursuit. Clients continually struggle with data. Definian’s Implementation Partners find including a proven, predictable, repeatable, and highly automated data migration process as part of the implementation can differentiate them.

On Cloud implementations, the struggle with data is amplified by the shorter time frame, stricter data quality requirements, lack of environment control, and immature application load programs. Our partners tell us that the increased tensions and staff burnout strain the Client relationship, delay subsequent phases in the roll-out, and decrease the opportunity for future collaboration. Once Implementation Partners see that data does not need to be painful, they bring us in on each engagement because organizations are more than willing to pay for a better overall implementation experience and improved data quality.

In their own words, partners tell us that our services “keep things moving,” “improve the team’s quality of life,” “shorten the length of data conversion,” “make it look easy and fluid,” and “make it so I don’t have to worry about data anymore.”

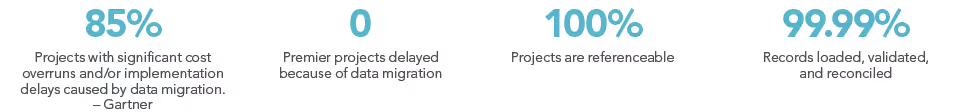

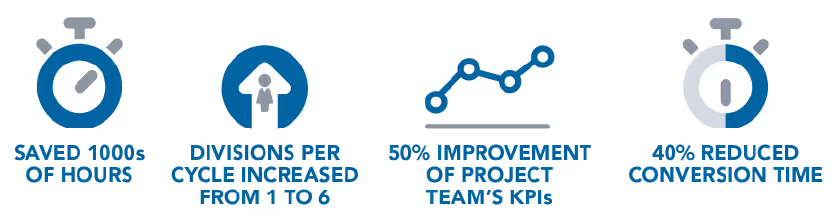

Concrete, measurable benefits are found in situations where Definian was brought midway into struggling projects. Compared to leaving the data cleansing and transformation activities to the Client, Definian’s services realized the following measured benefits to our Clients and Implementation Partners.

Definian joined an implementation for a Fortune 500 industrial manufacturer that had repeated delays, severe data quality issues, and an incredibly complex legacy data landscape. Throughout the implementation, Definian replaced approximately 30 Client resources with 6 consultants and increased the entire implementation team’s productivity by 50%, bringing them their first successful go-live after a year and a half. The end result was that Definian was able to cleanse, transform, and consolidate 58 legacy applications, across 39 sites, in 17 countries.

After suffering several project delays, the leading manufacturer of US steel was having difficulty scaling their data conversion process to meet project timelines. To ensure that the project was delivered on time, they brought in Definian to perform the data migration. Definian increased the number of divisions per cycle from 1 to 6 and enabled the time between go-lives to be compressed from 6 months to 3 months. Definian’s four-consultant team reduced the Client’s data migration team by 7 FTEs and saved thousands of hours across the approximately 100-person project team.

After two failed go-live attempts, Definian was brought in to bring consistency and predictability to a project for a Fortune 500 auto parts manufacturer. Definian reduced conversion test cycle time by 1 week (40%), improved the overall data quality, and ensured that the third go-live attempt was a success.

The Bottom Line

These results are achieved by combining specialized consultants, a data approach, and proprietary software in the following activities:

- Ensuring the business requirements and transformation documentation are properly created and maintained

- Converting data early in the project, allowing the Client to see real-world data in the new solution

- Defining and managing a data quality strategy that focuses the Client on making business decisions and not updating thousands of records in legacy data

- Delivering on the 5 C’s of data migration - Clean, Consistent, Configured, Complete, and Correct

- Implementing and managing frequent and numerous conversion specification changes that occur throughout the project

“Data is always an issue, but Definian makes it so I don’t have to worry about data anymore, so I bring them in every time.”

– Partner, Big 4 consulting firm

The Applaud® Advantage

To help overcome the expected data migration challenges, the organization engaged Definian’s Applaud® data migration services. Three key components of Definian International’s Applaud solution helped the client navigate their data migration:

- Definian’s data migration consultants: Definian’s services group averages more than six years of experience working with Applaud, exclusively on data migration projects.

- Definian’s methodology: Definian’s EPACTL approach to data migration projects differs from traditional ETL approaches and helps ensure the project stays on track. This methodology decreases overall implementation time and reduces the risk of the migration.

- Definian’s data migration software, Applaud®: Applaud has been optimized to address the challenges that occur on data migration projects, allowing the team to accomplish all data needs using one integrated product.