Articles & case studies

Back to School: Data Migration for a Texas-Based Higher Education Institution

Launching a Workday Implementation Amid COVID-19

This college system’s data modernization initiative began in April 2020, about one month after COVID-19 restrictions went into effect nationwide. Accordingly, we quickly adapted to the changing nature of work; college leadership recognized that COVID-19 increased the need for ERP (Enterprise Resource Planning) transformation and immediately embraced the benefits of the remote work model. We conducted requirements-gathering sessions virtually, bringing an intense focus to each meeting with minimal downtime between sessions. We collectively understood that the future of education and other critical industries will depend on cloud technology; we were already witnessing this shift with the rapid rise of remote learning. An ambitious effort today would benefit college students and employees for years to come.

Informed by Personal Experience

As this Workday implementation rapidly ramped up, I realized that I had more intimate knowledge of this institution’s data challenges than I originally anticipated. Like hundreds of colleges and universities, this client was still operating with outdated software for Human Resources, Payroll, and Student Services. BannerWeb was the legacy system at this college, the same system that I navigated as a college student and campus employee to pay tuition, update personal information, retrieve tax documents, and log hours worked. I had dealt with the limitations of the legacy system firsthand: frequent glitches with time entry, difficulty navigating to the desired web page, and the antiquated appearance of the system itself. As a result, I understood that a successful transformation to Workday would mean greater functionality and better usability for faculty, staff, and students. My personal experience with the Banner front-end allowed me to better understand how to profile the data and ultimately execute data conversion objects on our accelerated project schedule.

Solving Administrative Challenges in Higher Education

During the data assessment period, we discovered anomalies and business processes that had to be addressed as part of the transition to Workday. For example, college faculty have frequent position changes that coincide with changing academic terms. Although repeated position changes are standard in higher education, they pose technical challenges for a software implementation. Workday requires that an employee be hired into an active Primary Position before their benefits, direct deposit information, personal information, and education/trainings can be loaded. Many faculty no longer held the position that they were initially hired into; as a result, we had to use a combination of placeholder positions and carefully sequenced data loads to ensure that all associated employee information was accurately converted from Banner to Workday.

Other data issues emerged through our data profiling and analysis, which we addressed using our Applaud® software and keen knowledge of Workday. Our team identified many cases where employees had positions starting before their hire date. Based on how the Banner system was being utilized, the employee’s hire date would be updated after a position change at the college. This business process resulted in certain employees with position start dates that predated their hire date. To address these cases, we dynamically identified these individuals and pushed the Workday Position Start Date to be on or after the worker’s Most Recent Hire Date. Additionally, old benefit enrollment records for retired employees were still flagged as active in the legacy system. This poses an issue if an employee returns to the college after their initial retirement; these workers are called Active Retirees. Accordingly, we made sure not to convert benefit records associated with their prior tenure at the college.
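The date adjustment described above can be sketched in a few lines. This is a minimal illustration rather than the Applaud® logic used on the project: the field names `position_start` and `hire_date`, the sample workers, and the choice to move an early start date exactly onto the hire date are all assumptions.

```python
from datetime import date

def align_position_start(position_start: date, most_recent_hire: date) -> date:
    """Return a position start date that is never earlier than the hire date."""
    return max(position_start, most_recent_hire)

# Hypothetical sample records; real extracts would come from the legacy system.
workers = [
    {"id": "E001", "position_start": date(2015, 8, 24), "hire_date": date(2018, 1, 16)},
    {"id": "E002", "position_start": date(2019, 6, 1), "hire_date": date(2019, 6, 1)},
]

# Workers whose position start predates their hire date are the ones to adjust.
flagged = [w["id"] for w in workers if w["position_start"] < w["hire_date"]]

adjusted = {
    w["id"]: align_position_start(w["position_start"], w["hire_date"])
    for w in workers
}
```

Keeping the list of flagged workers alongside the adjusted dates makes it easier to report back which records were changed and why.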

Definian used hundreds of Critical-to-Quality checks gathered from previous Workday implementations to ensure that data were ready for loading. These validations examine relationships between benefit enrollments and an employee’s active position, report addresses that do not meet Workday standards, and verify effective dates for logical consistency. These automated data checks accelerated the project by identifying data issues early, before they could derail the project schedule.

Accelerating the Project Schedule

From our initial onboarding, we were able to deliver Workday-ready files in less than five weeks. We breezed through the Foundation build with high load percentages thanks to our Critical-to-Quality validation checks and amassed Workday expertise. We completed the Foundation build one week ahead of schedule, saving time and money for our higher education client. Across the End-to-End, Parallel, and Gold builds, we averaged a 98.49% load success rate. This accuracy in data conversion also saved the college time in the validation process, as they had high confidence that their existing data were correctly reflected in Workday.

Preparing for the Future of Higher Education

Many colleges have not upgraded their data architecture in decades, leaving them to operate on outdated mainframe systems. Moving to the Workday cloud solution positions the college well to scale as they add new campuses, employees, and students. The end-user experience will also be enhanced, alleviating the issues that I noticed in my undergraduate days. Ultimately, performing this data cleanup exercise while transitioning to Workday will allow the college to have a more robust, maintainable data repository in the years to come.

Workday Student: The Next Phase

In addition to moving the college’s HR (HCM) and financial data to Workday, the next wave of the college system’s data transformation initiative involves implementing Workday Student. This upgrade will vastly benefit the more than 50,000 students who attend courses at the college annually. The college system will be prepared to handle the modern challenges associated with maintaining and effectively using employee and student data. We look forward to the continued success of our client as they tackle new technological challenges and serve their community.

How Inaccurate UOM Data Can Break Your Supply Chain

Unit of Measure (UOM) is an attribute that describes how an item is counted. When we consider sundry items like a gallon of milk, a pair of gloves, or a case of beer, the concept of UOM is deceptively simple. However, for manufacturers, hospitals, and retailers, Units of Measure can make or break their supply chain solution. Inconsistencies in UOM data across functional areas or gaps in how units are converted can create data exceptions in procurement, inventory, and other operations. This could lead to incorrect inventory counts, delayed orders, and storeroom confusion.

Why would a single item have multiple Units of Measure anyway?

An individual item can often be represented using different units of measure based on the context. Medical exam gloves provide an intuitive example: they are procured in bulk by the case, stocked by the box, and used by the pair. A single item can have multiple units of measure because employees in diverse job functions – like procurement, storeroom, or nursing – interact with the same item in distinct ways.

Intraclass UOM Conversion explained

Converting across units of measure is a necessary part of supply chain management. Continuing the example with gloves, let us say the storeroom managers in the hospital calculate they need 12 more boxes of gloves. Since gloves are only sold in cases, a conversion from boxes to cases is necessary: 1 case of gloves equals 10 boxes of gloves. Because partial cases cannot be ordered, 2 cases of gloves are needed to fill the need for 12 boxes of gloves.

Converting between cases and boxes is an example of an intraclass UOM relationship. The intraclass moniker means that the separate units of measure being considered are of the same class; in this case, the class is quantity.
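The box-to-case arithmetic can be written as a short sketch. The function name `cases_to_order` is hypothetical, and the 10-boxes-per-case ratio is taken from the gloves example; the key point is that the result is rounded up to whole cases.

```python
BOXES_PER_CASE = 10  # from the gloves example: 1 case = 10 boxes

def cases_to_order(boxes_needed: int, boxes_per_case: int = BOXES_PER_CASE) -> int:
    """Convert a need expressed in boxes into whole cases, rounding up."""
    if boxes_needed < 0 or boxes_per_case <= 0:
        raise ValueError("quantities must be non-negative and the ratio positive")
    # Integer ceiling division: any leftover boxes require one more full case.
    return (boxes_needed + boxes_per_case - 1) // boxes_per_case

print(cases_to_order(12))  # 2
```

Ordering 2 cases yields 20 boxes, so 8 boxes go into stock beyond the immediate need.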

Interclass UOM Conversion explained

Additionally, some items have an interclass UOM relationship to convert between different classes of measure, such as quantity and volume. For example, consider vials of medicine. For certain types of medicine, the stocking unit of measure is ‘vial’, and this makes perfect sense as a stocking unit considering how vials of medicine are individually stored in trays and racks. However, for clinical purposes the unit of measure is often milliliter. For the system to work with both units, an interclass UOM relationship needs to be set up to determine how many milliliters equate to each vial. This ratio will be different from item to item, so a unique interclass UOM relationship is set up for each item that requires it.
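Because the ratio is item-specific, an interclass conversion is naturally modeled as a lookup keyed by item. The sketch below is illustrative only: the item IDs and milliliter-per-vial ratios are made up, and `vials_to_ml` is a hypothetical helper rather than a function of any particular supply chain system.

```python
# Hypothetical per-item ratios: milliliters contained in one vial.
ML_PER_VIAL = {
    "MED-001": 10.0,
    "MED-002": 2.5,
}

def vials_to_ml(item_id: str, vials: float) -> float:
    """Convert a stocked quantity in vials to a clinical quantity in milliliters."""
    if item_id not in ML_PER_VIAL:
        # No interclass relationship has been set up for this item.
        raise ValueError(f"No vial-to-mL conversion defined for item {item_id}")
    return vials * ML_PER_VIAL[item_id]
```

Raising an error for an item without a defined ratio, rather than assuming a default, surfaces the missing setup instead of silently producing a wrong volume.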

Criticality of accurate UOM data

It is impossible to order, receive, or stock items in a unit of measure that has not been assigned to the item. For example, if a purchase order is for a ‘pack’ of masks, but the item is only set up in the system with the ‘each’ unit, then the purchase order will fail or go on hold. Inventory and procurement data is often fed to boundary systems (Epic, Ivalua, WaveMark, and others) that manage inventory or contract information. The unit of measure used needs to remain consistent across these interfaces or have clearly defined rules for how the units are converted.
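A pre-load check for this situation can be as simple as comparing each purchase order line against the units assigned to its item. The following is a sketch under assumed data: the item IDs, the `ITEM_UOMS` mapping, and the "ok"/"hold" statuses are hypothetical and do not reflect any specific ERP's behavior.

```python
# Hypothetical item master: the units of measure assigned to each item.
ITEM_UOMS = {
    "MASK-01": {"each"},
    "GLOVE-01": {"case", "box", "pair"},
}

def po_line_status(item_id: str, uom: str) -> str:
    """Return 'ok' if the unit is assigned to the item, otherwise 'hold'."""
    allowed = ITEM_UOMS.get(item_id, set())
    return "ok" if uom in allowed else "hold"

# The mask example from the text: ordered by 'pack', set up only as 'each'.
status = po_line_status("MASK-01", "pack")
```

Running a check like this across open purchase orders before a cutover helps find unit mismatches while there is still time to correct the item setup.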

Inaccurate UOM relationship data can cause supply chain snafus. Consider what might happen if the ‘case’ you order contains 10 ‘boxes’ but you expected it to contain 100! Inaccuracies like this could throw off operations and require expensive rush deliveries to resolve. Whether your business is implementing a new supply chain software solution or implementing a master data management strategy, having an approach to identify and solve UOM data issues is critical to your success.

5 Project Management Mistakes in ERP Implementations (And How to Fix Them)

1. Not Compromising on Management Approach

There are 2 major project management approaches in software implementations: the waterfall model and the agile methodology. Willingness to compromise on an approach that balances waterfall and agile techniques will likely give you and your project the best chance for success.

The waterfall model is linear—all requirements are gathered at the start of the project and the rest of the project continues sequentially. Agile, however, assumes that requirements will change over the course of the project without necessarily committing to concrete deadlines. A rudimentary waterfall approach would ignore much of what is learned through the progressive evolution of the project. Without course correction, a strict waterfall approach results in an end design that fits requirements as they were initially understood but fails to meet true business use cases. Taken to the extreme, an agile approach could be just as disastrous, leading to a continual stream of change requests and never-ending development. Get the best of both worlds by adopting a waterfall approach for project kickoff and the initial test cycle before transitioning to an agile approach.

2. Trying to Satisfy Everyone

The goal of your organization's ERP implementation could be to reduce operational software and hardware costs, position your systems for streamlined mergers and acquisitions, or to gain functionality. Regardless of the strategic goal, keeping end users and other stakeholders happy is a valid objective. However, trying to satisfy everyone will lead to project delays. Key project decisions may result in one team's desired outcome, while another team may not agree with the approach. When project leadership acknowledges sacrifices, reiterates the project mission, and displays confidence, the entire project team will feel like they are making progress—even if they disagree with individual decisions.

3. Inadequate Resourcing

The managers, analysts, and subject matter experts most valuable to the ERP implementation are the same people who carry the most day-to-day operational responsibilities. Inevitably, project teams work overtime supporting both the implementation and business operations. To avoid burnout, project management needs to assign and train backup resources wherever possible. Furthermore, project test cycles should be scheduled outside of known busy times, such as month-end close. People on the ground floor are often the best judges of adequate vs. inadequate resourcing. By providing an avenue for expressing resource concerns, project management will be able to identify and resolve issues before they become delays.

4. Succumbing to Analysis Paralysis

Analysis paralysis occurs when so many options are presented that it delays decision making; this is famously known as the paradox of choice. Open-ended questions and discussions are valuable tools for initial discovery and design, but they should progressively become more focused as the project develops. When necessary, leadership can course correct by encouraging discourse to determine the best-suited outcome for the organization. If individual decision-makers are frequently getting stuck, try tailoring messages to succinctly present the available options as a numbered list; this enables the decision to be made with a single word response. For technical or configuration issues, software vendors and system integrators often have the subject matter experience to guide decisions towards the best solution. Management should set an expectation with their vendors that they will speak with authority and lead the discussion to workable solutions.

5. Letting Morale Plummet

Your project team will work long hours and individual contributors will not always get what they want. Additionally, there are stressful go-no-go decisions, accountability, and a 24-hour go-live schedule to consider. Many users have the hurdle of learning new software while simultaneously helping implement it and still carrying out their “regular” job responsibilities. For these reasons, your entire team will feel stretched thin, and there is a significant risk that morale and work quality will plummet. Recognizing team members for their accomplishments, fostering collective ownership, and encouraging open communication will power you through project difficulties. Additionally, leadership can share and encourage safe, clinically proven, non-pharmaceutical interventions that optimize performance and help people cope. No-cost interventions include breathing exercises and power poses.

What this Means for the C-Suite and Technology Leaders

Your organization’s IT (Information Technology) transformation does not solely depend on technical resources. Flexible methodologies, an understanding of psychology, high morale, smart resourcing, and effective leadership are needed to make complex projects successful. IT leaders who care about their team, optimize team performance using the latest findings in psychology, and think critically about the human-technology interface have the power to transform their organization’s operations for years to come.

Podcast: The People Place

Premier International (now Definian) CEO Craig Wood was recently featured on the People Place Podcast with Karen Kimsey-Sward, a production from Dale Carnegie Chicago in which Chicagoland business leaders share knowledge gained over their amassed experiences. Craig imparts insights gathered on his own journey, including the importance of relationship building, candid communication, and empathetic leadership.

Discover the decisions made 36 years ago that are still having a positive impact on employee retention, culture, and performance at Premier International (now Definian). Listen until the end for a frank discussion weighing the challenges and opportunities all companies face around inclusivity, COVID-19, differing leadership styles, and much more.

Building the Connectors for Leading Data Platforms

Client: A Leading Data Intelligence Platform Technology Company

In a rapidly evolving tech landscape, one leading data technology firm faced a pressing issue: the need to increase its flagship software product's compatibility with diverse data sources. While they had put together a connector framework, it was immature, and help was needed to get integrations in place while they focused on the base framework.

As Definian engaged, our task revolved around several key activities aimed at streamlining connectivity solutions:

Connector Development: Leveraging our MetaMesh technology, we focused on crafting connectors for high-priority customer requests. Our goal was to address demands swiftly and effectively.

Continuous Improvement: Alongside connector development, we identified gaps and areas for enhancement within the framework, helping the Client’s product development team create a clear path for ongoing refinement and optimization.

Support Model Establishment: To increase operational efficiency, we established and managed a support system that ensures seamless functionality and user satisfaction.

The enhanced compatibility this client’s data intelligence platform now has against diverse data sources has vastly increased the number of end clients they can serve. Since this platform can now work with more varied and complex data landscapes than before, it has increased the number of opportunities available to the client to drive revenue growth.

As a result of this initiative, we have not only solidified our position as a strategic partner but also fostered a robust culture of knowledge exchange between organizations. Together, we synchronized our efforts to improve integrations with forthcoming Artificial Intelligence and analytics engines and are actively discussing additional ways to facilitate data exchange and future-proof our client's platform.

Model Governance: Aligning Information Governance With Model Risk Management

As organizations continue to use data to drive value, the need to understand, manage, and protect data assets has become increasingly critical. Many organizations have instituted data governance programs to manage their data more collaboratively and transparently. Simultaneously, it seems, data has gotten more complex as machine learning algorithms drive insight at scale, introducing new data risks, particularly in the financial industry. Regulators responded by imposing controls designed to instill a more disciplined approach to model risk management.

The goals of information governance and model risk management are closely related—to deliver consistent, trustworthy, and reliable data for improved business intelligence. Unfortunately, at many organizations these two data management approaches are often misaligned: roles and responsibilities often conflict, leaving stakeholders across the organization confused about how to apply required governance and model management standards.

Today, when a global pandemic has called into question the models financial institutions have traditionally relied on, bringing these two disciplines into alignment is imperative for institutions that want to verify that their high-risk models are performing correctly and in accordance with enterprise-wide data governance standards.

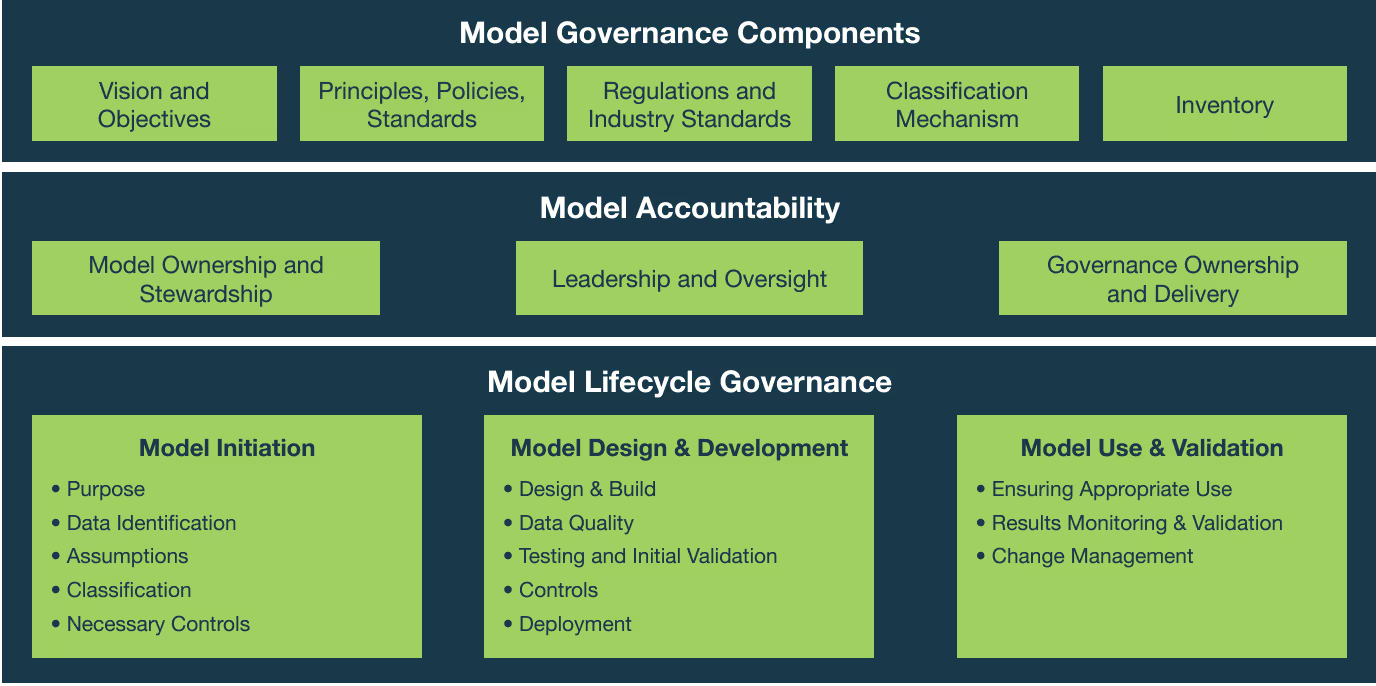

The Model Governance Playbook

At Definian we provide a proven, systematic approach for reducing model risk through more transparent and collaborative model governance.

Our Model Governance Playbook helps organizations align enterprise data governance standards with model risk management guidelines. We engage stakeholders across your organization to:

- Collect and verify principles, policies and standards for managing model risk

- Develop an enterprise inventory along with the metadata you will need to maintain it

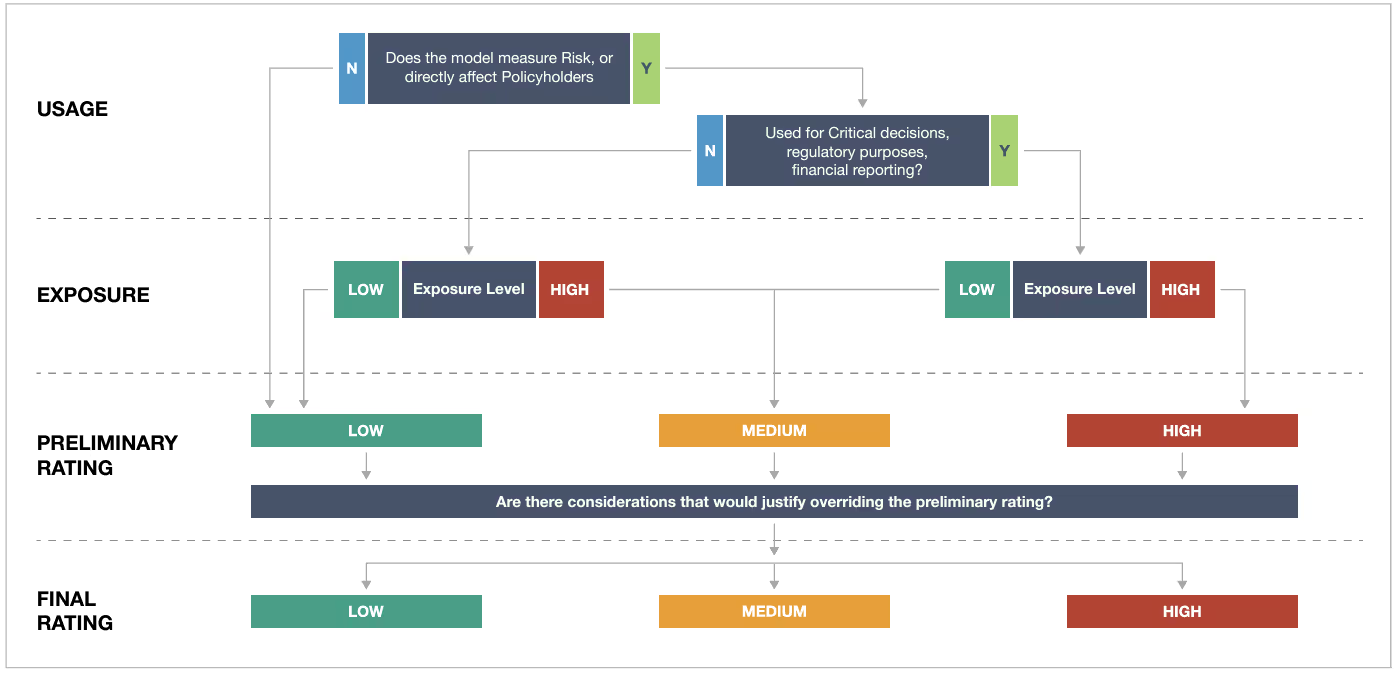

- Design a risk classification mechanism to determine the level of model risk

- Create an operating model for managing risk across the model lifecycle

- Manage risk more reliably at every stage, including model initiation, development, use, and validation.

Benefits

At a time when financial institutions are being called on to adjust their data and methodologies to reflect a post-COVID-19 world, aligning enterprise-wide data governance policies with model risk management will deliver:

- Improved compliance with both information and model governance standards

- Better alignment of roles and responsibilities

- Enhanced compliance with regulatory policy

For more information about how your organization can begin aligning its governance initiatives to deliver more compliant, accurate, and trustworthy data to stakeholders, call us today.

Data Products Configuration in Collibra Data Intelligence Cloud

In the context of data mesh, "data products" refer to self-serve, domain-oriented data assets that encapsulate a specific business capability. The concept of data mesh, popularized by Zhamak Dehghani, emphasizes decentralizing data ownership and treating data as a product.

By adopting the principles of data mesh, organizations aim to overcome challenges related to data silos, centralization, and scalability, ultimately improving the agility and effectiveness of their data capabilities.

5 steps to configure Data Products in Collibra

- Define Data Products

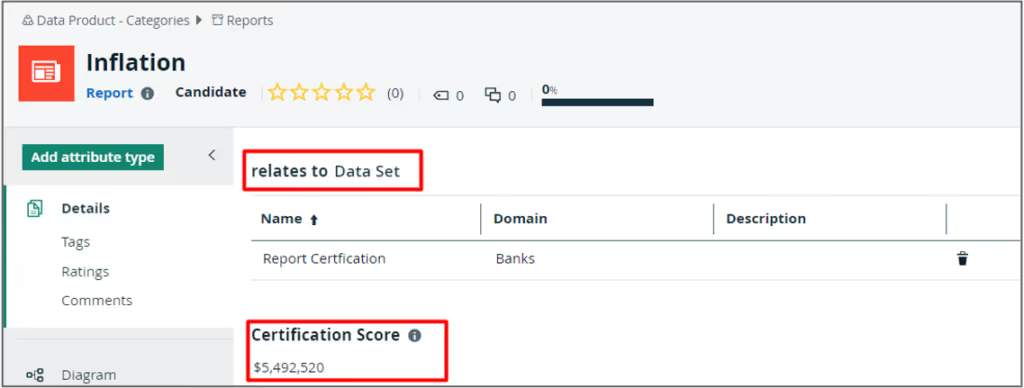

A business intelligence report may be classified as a data product. Figure 1 shows a PowerBI-based Inflation report in Collibra. The report also includes a custom attribute for the value that has been assigned to the report.

- Develop Derived Data Products

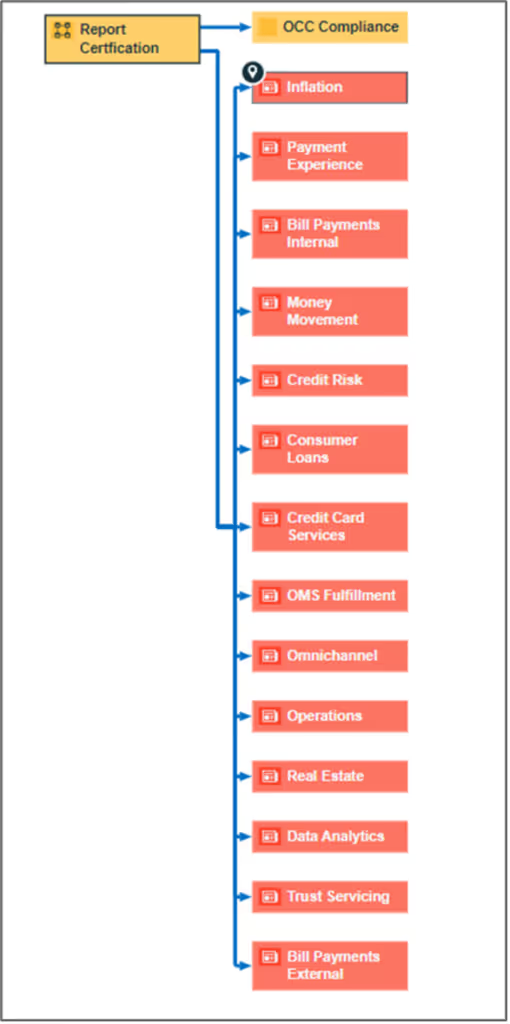

Derived data products are based on one or more data products. For example, a bank has an Office of the Comptroller of the Currency (OCC) Compliance data product, which is derived from the Report Certification data product, which is derived from several report data products including Inflation (see Figure 2).

- Establish Data Quality Scores for Data Products

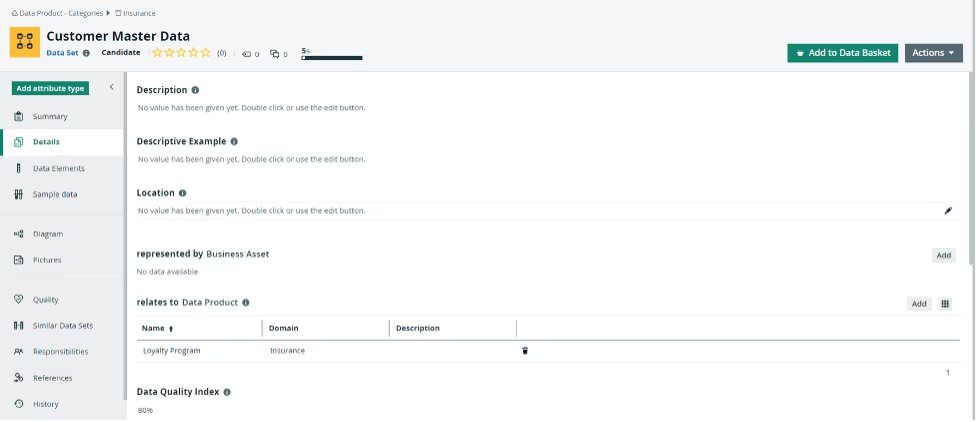

The data quality score from Collibra OwlDQ may be appended as a custom attribute to the data product. For example, the data quality index for the Customer Master data product is 80 percent (see Figure 3).

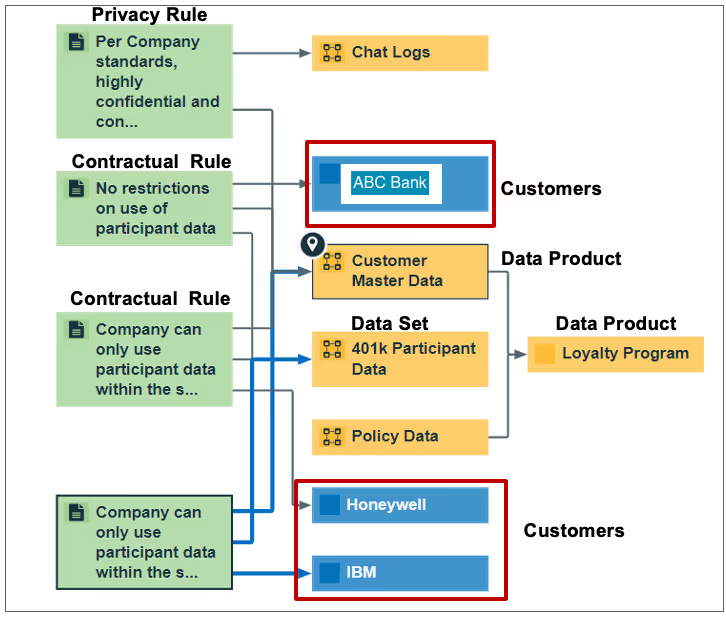

- Agree on Data Privacy Rules for Data Products

Data products need to be constrained by data privacy rules. For example, the Chat Log data product is constrained by a rule that highly confidential data such as Social Security Number must be masked before usage for analytics (see Figure 4).

- Create Customer-Specific Contractual Rules for Data Products

Data products also need to be constrained by contractual rules that are specific to customers. For example, an investment manager may have customer contracts that permit differing levels of data usage. The investment manager’s contracts with IBM and Honeywell only permit usage of 401(k) participant data within the app. However, the contract with Information Asset does not have any such usage restrictions (see Figure 5).
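The masking rule described for the Chat Log data product can be illustrated outside of Collibra with a small sketch. This is not Collibra configuration or a Collibra API: the `mask_ssn` helper, the dashed `NNN-NN-NNNN` format it looks for, and the replacement string are all assumptions chosen for illustration.

```python
import re

# Assumes Social Security Numbers appear in the dashed form NNN-NN-NNNN.
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")

def mask_ssn(text: str) -> str:
    """Replace any dashed SSN in the text with a fixed mask."""
    return SSN_PATTERN.sub("***-**-****", text)

masked = mask_ssn("Customer said their SSN is 123-45-6789.")
```

A production rule would also need to handle other formats, such as nine digits without dashes, which this sketch deliberately leaves out.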

Rescuing a Workday Implementation - Securing Benefits for First Responders

The City of Dallas embarked on its Workday implementation in early 2018. Before Definian was contacted, the city had already struggled through 3 test cycles on their own, averaging 10 weeks on each and completing only two. Delay after delay caused frustration within the city. The project to modernize Dallas’s Payroll and Human Resources was floundering. The situation became dire when first responders felt the impact of the city’s antiquated systems.

Data Issues Make Headline News

Data issues and inconsistencies within Dallas’ legacy Lawson system were the main cause of the delays. However, project delays were a minor issue compared to the litany of problems brought to public attention by the president of the firefighters association in February of 2019. The president reported to local news outlets that Dallas firefighters were not being paid the proper amounts, merit raises were not being paid on time, and health insurance was not being administered correctly.

Gathering Political Will

City administrators knew that project direction had to change quickly, as the livelihood of thousands of fire department personnel depended on solving this systemic issue. The help they needed came when the Workday system integrator referred the city to Definian. Citing years of successful collaboration, the system integrator knew that Definian could address Dallas’ data issues and steer the project back on course. Next came days and nights of planning, after-hours text messages between the Dallas CIO and Definian leadership, and accelerated negotiations to figure out the best path forward. City administrators cut through red tape with true Texan determination and successfully added contracting with Definian to the city council agenda in March 2019.

The Cleanup Begins

Definian spearheaded the clean-up of Dallas’ legacy benefits systems and took responsibility for generating the workbooks needed for the Workday data migration. The SQL queries and COBOL programs previously used by the city’s IT (Information Technology) team were converted to Definian’s standard mapping specifications and rebuilt in the Applaud® software within the first month. Definian leveraged its repository of Critical-to-Quality (CTQ) checks specific to Workday, amassed from prior successful Workday HCM (Human Capital Management) Go-Lives, to find inconsistencies and shortcomings in the data. These pre-validation checks ensured data integrity moving into Workday and enabled the city to accelerate data cleanup.

Analyzing Employee Payroll and Benefits

Applaud made it possible to gather detailed statistics on the data set and zero in on root problems that made headline news the prior month. By comparing employee benefit plans, types, and number of dependents, Definian ensured that providers had the right information to serve city employees. This effort was critical to restoring the payroll and benefits that were promised to firefighters, police officers, and thousands of other city workers. Prior to the Workday go-live, Definian used their proprietary Gross-To-Net (G2N) tool to compare payroll runs in Workday against Dallas’ current payroll system over several pay periods. This robust and flexible reconciliation gave Dallas the confidence they needed in their new payroll system. However, additional challenges awaited the project team on their path to Workday.

Legacy System Gaps

When Dallas began populating Workday withholding orders, they discovered that data dumps out of their legacy Lawson system could never meet Workday technical requirements. The Workday tenant needed more data than what was captured in Lawson. In response, the Dallas payroll team manually entered withholding data into the Workday load workbook. Data entry for employee withholding orders was difficult since an employee could have multiple orders and these orders could be updated over time. These complications, along with the fact that multiple people were doing data entry, made the effort rife with errors, and Dallas needed a systematic way to audit employee withholdings.

Audit Employee Withholdings

To audit withholding data, Definian partnered with the Dallas payroll team to automate comparisons between Lawson and the manually created files. This effort revealed gaps in the manual process, programmatically derived critical information, and resulted in withholding data that could be confidently loaded to Workday.
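An automated comparison of this kind can be sketched as a keyed reconciliation between two data sets. The example below is a simplified illustration, not the process Definian built: the key of employee ID plus order ID, the single compared amount field, and the sample values are all assumptions.

```python
from decimal import Decimal

def audit_withholdings(legacy, manual):
    """Compare two {(employee_id, order_id): amount} mappings and list discrepancies."""
    findings = []
    for key in sorted(legacy.keys() | manual.keys()):
        if key not in manual:
            findings.append((key, "missing from manual workbook"))
        elif key not in legacy:
            findings.append((key, "not in legacy extract"))
        elif legacy[key] != manual[key]:
            findings.append((key, f"amount differs: {legacy[key]} vs {manual[key]}"))
    return findings

# Hypothetical sample data standing in for the legacy extract and the keyed-in file.
legacy = {
    ("E100", "W1"): Decimal("250.00"),
    ("E100", "W2"): Decimal("75.00"),
    ("E200", "W1"): Decimal("120.00"),
}
manual = {
    ("E100", "W1"): Decimal("250.00"),
    ("E100", "W2"): Decimal("57.00"),  # transposed digits during data entry
    ("E300", "W1"): Decimal("90.00"),
}

findings = audit_withholdings(legacy, manual)
```

Using `Decimal` rather than floating-point numbers keeps currency comparisons exact, so only genuine differences are reported.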

The Future of City Administration

The City of Dallas realized that the technical challenges they faced were outside their ability. Through careful self-reflection, resourceful problem solving, and contracting with Definian, the city worked towards a solution that would correct mistakes in their payroll and benefits administration. Today, the City of Dallas is running on Workday with greater efficiency, more security, and better administration than ever before. Dallas’ strategic investment will serve them, their employees, and the entire city for many years to come.

Solve Data Failures and Go-Live with Workday

A Growing Company at Risk

Prior to this global transportation/logistics company’s Workday implementation, revenues increased from $500 million to $800 million in a ten-year window. Amid their expansion, a key part of the business fell by the wayside. Significant gaps in data governance and master data management posed the greatest risk to their long-term IT (Information Technology) transformation project.

Discovering Data Governance Gaps

The first priority of their IT project timeline was to decommission their CODA (Unit4) system, the organization’s decades-old financial data storage platform. They elected the Workday Launch approach for this migration, which greatly condenses the project length compared to the traditional Workday framework. Their legacy CODA system did not scale with the rest of the organization; missing functionality and limited data validation in the legacy software led to workarounds and band-aid fixes. The IT department consisted of only 2 employees – virtually the same staffing since the company’s inception – and there were no roles in the organization to ensure data governance. Further complicating matters, a corporate culture that promoted independence and creative problem solving led to unique and undocumented workarounds throughout the data landscape. The organization needed quick and accurate guidance in order to adhere to the tight Workday Launch timeline.

Instilling a Data Mindset

Recognizing their inability to provide clean extracts for their Workday test loads, the organization contacted Definian for help on their in-flight implementation. Company leadership required Definian to quickly generate data in the right format for Workday. CODA’s largely free-form column table structure led to two challenges: establishing how the legacy data was used and determining the mappings necessary to move the existing data into Workday. Definian encouraged the organization to first understand their existing data landscape, which would then allow them to make more informed strategic decisions about how Workday would be configured and ultimately used. After much collaboration through extensive mapping sessions, the organization understood their own data better than ever before. Now the hard work could begin.

Undoing Years of Lax Data Governance

The supplier master conversion posed the greatest risk to the project, as the CODA legacy system did not have the functionality to set up separate remit-to supplier sites for a single top-level supplier. For years and years, the organization re-entered the same supplier master records whenever a new address was encountered (oftentimes the same address with a minor adjustment). It was even possible to have conflicting information like contact information, payment terms, and freight terms across these duplicate supplier records. Workday would not be able to support this structure; thus, consolidation of these supplier records was required. Definian built data conversion programs in Applaud® to dynamically merge legacy supplier records. Definian leveraged its repository of Workday Critical-to-Quality (CTQ) checks, amassed from prior successful Workday Financials implementations, to identify data inconsistencies and shortcomings that would inhibit records from loading to Workday. These pre-validation checks were instrumental in ensuring data integrity in Workday while also allowing the client time for cleanup.
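The consolidation idea can be sketched as grouping duplicate records under one top-level supplier, keeping each distinct address as a remit-to site, and surfacing conflicting attributes for review. This is a simplified illustration and not the Applaud® conversion program: the matching on a normalized name, the field names, and the sample records are assumptions.

```python
from collections import defaultdict

def normalize(value: str) -> str:
    """Uppercase, drop periods and commas, and collapse whitespace."""
    return " ".join(value.upper().replace(".", "").replace(",", "").split())

def consolidate(records):
    """Group records by normalized supplier name; collect sites and payment terms."""
    suppliers = defaultdict(lambda: {"sites": [], "payment_terms": set()})
    for rec in records:
        entry = suppliers[normalize(rec["name"])]
        address = normalize(rec["address"])
        if address not in entry["sites"]:
            entry["sites"].append(address)  # each distinct address becomes a site
        entry["payment_terms"].add(rec["payment_terms"])
    return dict(suppliers)

# Hypothetical legacy records: the same supplier entered three times.
records = [
    {"name": "Acme Freight, Inc.", "address": "100 Main St.", "payment_terms": "NET30"},
    {"name": "ACME FREIGHT INC", "address": "100 Main St", "payment_terms": "NET45"},
    {"name": "Acme Freight Inc.", "address": "PO Box 12", "payment_terms": "NET30"},
]

merged = consolidate(records)
# More than one payment term on a merged supplier signals a conflict to resolve.
conflicts = [name for name, s in merged.items() if len(s["payment_terms"]) > 1]
```

Real supplier matching usually needs more than name normalization, for example tax identifiers or fuzzy address matching, so the conflicts list is best treated as input for business review rather than an automatic resolution.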

Correcting Problem Data

Supplier Contracts were also a source of great risk to the project timeline. These contracts were managed in a separate SaaS (Software as a Service) system as well as in several manual spreadsheets. The different sources of Supplier Contracts were largely unchecked and not in sync. Definian’s data profiling and analysis reports revealed where start and end dates mismatched the terms of the lease contract, which greatly plagued their initial load to Workday. The client was able to clean up their legacy data with the help of Definian’s reports; subsequent loads had substantially fewer errors, which positioned them for success leading up to the Gold Build (Production).

Going Live

In just 7 months’ time, Definian spearheaded data conversion for 3 test cycles, which resulted in a successful Workday Financials go-live. The aggressive timeline and quick turnaround were possible because of Definian’s lengthy experience managing data risk and our ability to accelerate projects with our proprietary Applaud® software. Shortly after the go-live onto Workday Financials, the organization acquired another logistics company. Because of careful data migration, the IT infrastructure of this organization is positioned for their strategic goals and continued growth.

Introduction to Data Migration Services for Ivalua

We Take the Risk Out of Data Migration

Whether it is Source-to-Pay, Strategic Sourcing, or Procure-to-Pay, the implementation of an Ivalua system is a major undertaking and data migration is a critical and risky component.

- Data from multiple legacy sources needs to be extracted, consolidated, cleansed, de-duplicated, and transformed before it can be loaded into Ivalua.

- The data migration process must be repeatable, predictable, and executable within a specific timeframe.

- Issues with data migration can delay the entire implementation or prevent Ivalua from functioning properly.

- If the converted data is not cleansed and standardized, the full functionality and potential Ivalua has to offer will not be realized.

Data Migration Challenges

Many obstacles are encountered during an Ivalua implementation, each of which increases the risk of project overrun and delay. Typical challenges include:

- High levels of data duplication across disparate legacy systems.

- Unsupported and/or misunderstood legacy systems, both in their functionality and underlying technologies.

- Legacy data quality issues – including missing, inconsistent, and invalid data – which must be identified and corrected prior to loading to Ivalua.

- Legacy data structures and business requirements which differ drastically from Ivalua’s.

- Frequent specification changes which need to be accounted for within a short timeframe.

The Definian Difference

Definian’s Applaud® Data Migration Services help overcome these challenges. Three key components contribute to Definian’s success:

- People: Our services team focuses exclusively on data migration, honing their expertise over years of experience with Applaud and our solutions. They are client-focused and experts in the field.

- Software: Our data migration software, Applaud, has been optimized to address the challenges that occur on data migration projects, allowing one team using one integrated product to accomplish all data objectives.

- Approach: Our RapidTrak methodology helps ensure that the project stays on track. Definian’s approach to data migration differs from traditional approaches, decreasing implementation time and reducing the risk of the migration process.

Applaud Eliminates Data Migration Risk

Applaud’s features have been designed to save time and improve data quality at every step of an implementation project. Definian’s proven approach to data migration includes the following:

- Experienced data migration consultants identify, prevent and resolve issues before they become problems.

- Extraction capabilities can incorporate raw data from many disparate legacy systems, including mainframe, into Applaud’s data repository. New data sources can be quickly extracted as they are identified.

- Automated profiling on every column in every key legacy table assists with the creation of the data conversion requirements based on facts rather than assumptions.

- Powerful data matching engine that quickly identifies duplicate information across any data area including supplier, item, contract, and manufacturer records across the data landscape.

- Integrated analytics/reporting tools allow deeper legacy data analysis to identify potential data anomalies before they become problems.

- Cleansing features identify and monitor data issues, allowing development and support of an overarching data quality strategy.

- Development accelerators facilitate the building of load files and support Ivalua’s nimble solution.

- Process that ensures the data conversion is predictable, repeatable, and highly automated throughout the implementation, from the first test cycle through go-live.

- Stringent compliance with data security needs ensures PII remains secure.

- Our RapidTrak methodology provides a truly integrated approach that decreases the overall data migration effort and reduces project risk.

Data Risk Factors and Monetary Impact When Resourcing Your Implementation

Accounting for both the benefits and risks while defining the project team is critical to the project’s overall success. Implementation Partners or System Integrators often take a limited role in the data component of enterprise software implementations. While ‘data conversion’ might appear in the Statement of Work for a partner or integrator, they often narrowly define it as the ingestion of data into the new solution. This definition does not include any of the necessary, risk-abating activities that support a robust data conversion strategy.

An Implementation Partner’s typical data conversion activities neglect the extraction, analysis, cleansing, enrichment, harmonization, governance, transformation, and reconciliation activities that must take place. These additional activities are the riskiest and most difficult portion of the data work on an implementation and are notoriously hard to scope and estimate. These difficulties – both in scoping and execution – are the top reasons why many firms shy away from offering a complete data conversion strategy.

After considering the limited data activities included in the Implementation Partner’s bid, it is critical to quantify the capabilities, risks, and benefits while planning how to resource your data team. To help calculate the impact of data on the program budget’s bottom line, the following formulas can be used:

- Delayed Test Cycles: Weekly Project Burn Rate (not just data team burn rate) x Number of Weeks delay

- Delayed Go Live: Weekly Project Burn Rate (not just data team burn rate) x Number of Weeks delay

- Overtime Required to Prevent Project Delays: +20% of data migration budget

- Increased Conversion Load Times Caused by Pre-Validation Gaps: +10-25% of data migration budget

- Decreased User Acceptance Due to Data Quality Issues: +5-10% of project budget

- Decreased Effectiveness of Solution Due to Data Quality Issues: +5-10% of project budget

- Shared Resource Impact to Other Initiatives: +5-10% of budget of impacted initiatives

- Increased Staff Turnover Caused by Additional Work Responsibilities: +10-15% of data migration budget
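As a worked illustration of the formulas above, the following Python sketch applies them to made-up numbers. The burn rate, delay, and budget figures are hypothetical and chosen only to show the arithmetic; the percentages are the ones listed above.

```python
def delay_cost(weekly_project_burn_rate, weeks_delayed):
    """Delayed test cycle or go-live: whole-project weekly burn rate x weeks of delay."""
    return weekly_project_burn_rate * weeks_delayed

def percentage_range(base_budget, low_pct, high_pct):
    """Return the (low, high) cost for a risk expressed as a percentage range of a budget."""
    return (base_budget * low_pct / 100, base_budget * high_pct / 100)

# Hypothetical program figures (assumptions for illustration only).
weekly_project_burn_rate = 250_000   # entire project team, not just the data team
data_migration_budget = 1_000_000
project_budget = 10_000_000

delayed_go_live = delay_cost(weekly_project_burn_rate, weeks_delayed=3)
overtime = data_migration_budget * 20 / 100
slow_loads = percentage_range(data_migration_budget, 10, 25)
low_user_acceptance = percentage_range(project_budget, 5, 10)

print(f"3-week go-live delay:      {delayed_go_live:,.0f}")
print(f"Overtime to avoid delays:  {overtime:,.0f}")
print(f"Pre-validation gaps:       {slow_loads[0]:,.0f} to {slow_loads[1]:,.0f}")
print(f"Decreased user acceptance: {low_user_acceptance[0]:,.0f} to {low_user_acceptance[1]:,.0f}")
```

Even with these modest assumed figures, a three-week go-live delay costs more than the upper bound of most of the percentage-based risks, which is why the burn rate of the whole project, not just the data team, belongs in the calculation.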

In addition to these quantitative risk factors, colleague and industry expert, Steve Novak, documented qualitative data risk factors in an insightful article at https://www.definian.com/articles/the-impact-of-a-failed-data-migration.

Considering the above risk factors, finding the right resources for the team can have a big impact and open new possibilities for taking the new solution beyond its original goals. When considering the mix of internal and external people that handle the data activities, several questions should be taken into consideration:

- What activities can only be handled by someone internal to the organization? (These usually revolve around business decisions and explaining business processes)

- What is the impact on other priorities of assigning internal resources to the project?

- When was the last time the resource worked on a similar project?

- Are the resources familiar with data migration best practices?

- Does the organization have the right tools to help carry out the profiling, extraction, cleansing, transformation, pre-validation, etc., or will those tools need to be licensed?

To help determine the types of resources that are needed, I authored the following guide that outlines the data resources and this RACI (Responsible, Accountable, Consulted, Informed) matrix regarding data, https://www.definian.com/articles/whos-responsible-for-what-on-complex-data-migrations.

Benefits and Monetary Impact of the Right People, Process, and Technology

The team with the right People, Process, and Technology always opens opportunities for the project. Here are some of the quantitative benefits we’ve identified on projects that we’ve delivered over the years.

- Risk Reduction of at least 50% across all risk factors

- Doubled the KPIs across the 100-person project team with a targeted data team of 8-12

- Enabled a client's data team of 30 people to focus on improving the solution with a dedicated team of 6 consultants

- Shortened cutover time by 25%, increased data quality by 50%

- Simultaneously reduced go-live time between phases by 50% while increasing the number of divisions per go-live from 1 to 3

- Repeatedly saved approximately 320 hours per data area for medium to large organizations through comprehensive pre-validation processing

- Routinely surpassed Implementation Partner benchmarks by +60% during the initial loads

While actual results and value vary from project to project, these results show the power of an effective data team.

In addition to these high-level bullet points, there is a lot more that goes into getting the resource mix right for the data team on an implementation. Let’s connect and work through the questions specific to your project.

Definian is a data partner that can work with you to scope, staff, and manage data from pre-planning through post go-live data sustainment.

7 Lessons Learned Migrating 1.6 Million Invoices to Oracle Cloud

Working for a world-leading medical center meant taking on complex challenges with Accounts Payable. In addition to complexities with patient refunds, legal settlements, and escheatment, a full year of historical invoices was required. This ambitious scope would minimize the risk of errant payments post-cutover, satisfy 1099 reporting requirements, and streamline operations. Continue reading for 7 lessons that you can apply to your project today.

1. Take Only What You Need

Requirements gathering led us to 1 full year of historical invoices. This met important tax reporting needs for the business and protected the hospital from issuing duplicate payments. Because this data was converted for tax reporting purposes and to create warnings about possible duplicate invoices post-cutover, we were also able to determine which pieces of information were not needed on the invoice and remove those complexities from the conversion. For example, we did not need the invoice to match against a Purchase Order in Oracle, nor did we need requester information. Excluding these nonessential fields meant the invoice could more easily pass Oracle's validations and we could avoid unnecessary configuration.

2. Optimize the Load Process

Loading millions of records into Oracle Cloud is impossible without optimization. Consider what batch size to use when grouping invoices for loading. For our pod sizing and scope, groups of 100K invoices worked nicely, and each step of the load process - loading to interface, loading to base tables, validating invoices, and paying invoices - could be executed in a few hours or less. For the Import Payables Invoices step, it was important to increase the number of Parallel Processes that Oracle would issue. Increasing this parameter above 1 meant that Oracle would multithread the load process on the back end, using 2, 3, or even 10 engines to load data instead of only 1. It was also critical to keep the interface table tidy: if a few hundred thousand invoices were left in the interface table and not purged, the next load suffered a performance drop or outright failure.
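The batching step can be sketched in a few lines of Python. This is only an illustration of splitting a large invoice population into fixed-size groups; the `batches` helper and the stand-in invoice IDs are assumptions, and the Oracle load steps themselves (interface load, import, validation, payment) are not modelled here.

```python
from itertools import islice

def batches(records, batch_size=100_000):
    """Yield consecutive lists of at most batch_size records from any iterable."""
    iterator = iter(records)
    while True:
        chunk = list(islice(iterator, batch_size))
        if not chunk:
            return
        yield chunk

# Stand-in for 1.6 million converted invoices (hypothetical IDs).
invoice_ids = range(1_600_000)
batch_sizes = [len(batch) for batch in batches(invoice_ids)]
print(len(batch_sizes), "batches; largest:", max(batch_sizes))
```

The right batch size depends on pod sizing and scope, so `batch_size` is left as a parameter rather than fixed at the 100K that worked on this project.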

3. Build Exception Handling into the Conversion Program

With a large enough population of data, anything is possible. Converting a full year of Accounts Payable (AP) history meant we encountered exceptional data issues: conflicting information around check versus electronic payment method, invoices incorrectly left in open status for 10 years, check dates 1000 years in the future, disagreements in header versus line amounts, and invoices associated with uncleared checks issued 10 years ago. Error handling and reports must be robust enough to identify invalid data scenarios. These scenarios must be redirected to the business experts to plan next steps, agree on overrides, or apply manual workarounds.
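A few of the scenarios above can be expressed as simple checks. The Python sketch below is illustrative only: the field names, the sample invoices, and the `MAX_OPEN_DAYS` threshold are assumptions, not the rules used on the project. Flagged invoices land in an exception report for business review instead of being dropped or loaded as-is.

```python
from datetime import date
from decimal import Decimal

MAX_OPEN_DAYS = 730  # hypothetical threshold for "open suspiciously long"

def find_exceptions(invoice, as_of):
    """Return a list of human-readable issues found on one invoice."""
    issues = []
    check_date = invoice.get("check_date")
    if check_date is not None and check_date > as_of:
        issues.append("check date is in the future")
    line_total = sum(invoice["line_amounts"], Decimal("0"))
    if line_total != invoice["header_amount"]:
        issues.append("header amount differs from sum of line amounts")
    age_days = (as_of - invoice["invoice_date"]).days
    if invoice["status"] == "OPEN" and age_days > MAX_OPEN_DAYS:
        issues.append("invoice open longer than threshold")
    return issues

# Hypothetical sample data.
invoices = [
    {"invoice_num": "INV-1", "status": "OPEN", "invoice_date": date(2011, 1, 15),
     "check_date": None, "header_amount": Decimal("100.00"),
     "line_amounts": [Decimal("60.00"), Decimal("40.00")]},
    {"invoice_num": "INV-2", "status": "PAID", "invoice_date": date(2021, 2, 1),
     "check_date": date(3021, 3, 1), "header_amount": Decimal("250.00"),
     "line_amounts": [Decimal("200.00"), Decimal("45.00")]},
    {"invoice_num": "INV-3", "status": "PAID", "invoice_date": date(2021, 3, 10),
     "check_date": date(2021, 3, 20), "header_amount": Decimal("75.50"),
     "line_amounts": [Decimal("75.50")]},
]

as_of = date(2021, 6, 30)
exception_report = {}
for inv in invoices:
    issues = find_exceptions(inv, as_of)
    if issues:
        exception_report[inv["invoice_num"]] = issues
```

Amounts are held as `Decimal` so that the header-versus-line comparison is not thrown off by floating-point rounding.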

4. Convert Master Data Ahead of Time

Converting millions of AP transactions during a narrow cutover window is needlessly risky. Even for an on-premise solution, converting historical data during cutover is difficult; for a cloud solution, it is a non-starter. Loading a large volume of historical data requires converting the data early, outside of the cutover window. The benefit of converting high-volume data early is that the cutover window does not need to be extended to fit in the hours (or days) of processing time for millions of records. However, this also requires converting supplier data earlier, since AP Invoices would be meaningless and fail to load without supporting Supplier data.

5. Your Relationship with Oracle Support is an Asset

Oracle wants your implementation to be successful. In fact, Oracle leads the Cloud SaaS (Software as a Service) industry in making technical information about its solution available. Oracle's level of transparency and the availability of tools and technical resources make it possible to solve most problems. As with any complex system, however, there will be issues. Learning how to place Service Requests with Oracle, and what content and screenshots to include in them, will save dozens of hours over the course of the project.

6. Review Data Before Attempting to Load

Reviewing data prior to load will ensure your organization can test and eventually go live with the cleanest possible data. This first level of review ought to confirm expected record counts, corrections from prior loads, basic configuration, and required fields. For large-scale projects, automated pre-validation can mimic thousands of checks that normally would not occur until the data is loaded into Oracle staging tables or passed to the base tables. By carrying out these checks beforehand, it is possible to keep the Oracle environment pristine until the data and configuration are ready for a clean load with minimal errors.
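Two of the first-level checks mentioned above, expected record counts and required fields, can be sketched as follows. This is a minimal Python illustration with hypothetical field names and sample rows; it does not reproduce Oracle's own validations, which cover far more than these two rules.

```python
def prevalidate(rows, required_fields, expected_count):
    """Return a list of error messages found before any load is attempted."""
    errors = []
    if len(rows) != expected_count:
        errors.append(f"expected {expected_count} records, found {len(rows)}")
    for index, row in enumerate(rows, start=1):
        for field in required_fields:
            value = row.get(field)
            if value is None or str(value).strip() == "":
                errors.append(f"row {index}: missing required field '{field}'")
    return errors

# Hypothetical load file rows.
rows = [
    {"invoice_num": "INV-1", "supplier_number": "1001", "amount": "100.00"},
    {"invoice_num": "INV-2", "supplier_number": "", "amount": "250.00"},
    {"invoice_num": "INV-3", "supplier_number": "1003", "amount": "75.50"},
]
required = ["invoice_num", "supplier_number", "amount"]

errors = prevalidate(rows, required, expected_count=3)
for message in errors:
    print(message)
```

Running checks like these against the load file means the target environment only sees data that has already passed the basics.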

7. Take Advantage of Descriptive Flex Fields

Descriptive Flex Fields (DFFs) can accomplish countless things that Oracle Cloud ERP (Enterprise Resource Planning) might not handle out of the box. DFFs extend the functionality of the Oracle solution by providing generic fields for your organization to customize. Additionally, they can be used to retain legacy information for converted records. For example, DFFs can be defined to store legacy key information, which will be useful for users who may have this information committed to memory. For historical AP data, DFFs were repurposed to store the associated legacy Purchase Order number. This made it possible to retain relevant Purchase Order information without the need to recreate the Purchase Order in Oracle.

Reflecting on our Success

The standard approach for most Cloud ERP (Enterprise Resource Planning) implementations is to carry forward as little historical data as possible. The decision to bring vast quantities of historical data into Oracle Fusion Cloud ERP came after months of discussions and cost-benefit analysis. Our effort to load 1.6 million invoices to Oracle Cloud was successful because of the dedication and commitment of subject matter experts from our client, Applaud data migration software, and the unwavering support of Oracle Corporation and their technical support engineers.