Articles & case studies

Definian Named One of the Fastest Growing Private Companies in America

CHICAGO, August 17, 2021 — Inc. magazine today revealed that Definian is No. 2577 on its annual Inc. 5000 list, the most prestigious ranking of the nation’s fastest-growing private companies. The list represents a unique look at the most successful companies within the American economy’s most dynamic segment—its independent small businesses. Intuit, Zappos, Under Armour, Microsoft, Patagonia, and many other well-known names gained their first national exposure as honorees on the Inc. 5000.

Not only have the companies on the 2021 Inc. 5000 been very competitive within their markets, but this year’s list also proved especially resilient and flexible given 2020’s unprecedented challenges. Among the 5,000, the average median three-year growth rate soared to 543 percent, and median revenue reached $11.1 million. Together, those companies added more than 610,000 jobs over the past three years.

"We are thrilled to be ranked on the prestigious Inc. 5000 list this year," says Craig Wood, CEO of Definian. "Over the past few years, we have received awards recognizing our company culture and now it's exciting to see our company performance celebrated as well. That's a credit to our incredible team at Definian and their commitment to our clients, our organization and each other."

Complete results of the Inc. 5000, including company profiles and an interactive database that can be sorted by industry, region, and other criteria, can be found at inc.com/inc5000. The top 500 companies are featured in the September issue of Inc., which will be available on newsstands on August 20.

“The 2021 Inc. 5000 list feels like one of the most important rosters of companies ever compiled,” says Scott Omelianuk, editor-in-chief of Inc. “Building one of the fastest-growing companies in America in any year is a remarkable achievement. Building one in the crisis we’ve lived through is just plain amazing. This kind of accomplishment comes with hard work, smart pivots, great leadership, and the help of a whole lot of people.”

Inc. 5000 Methodology

Companies on the 2021 Inc. 5000 are ranked according to percentage revenue growth from 2017 to 2020. To qualify, companies must have been founded and generating revenue by March 31, 2017. They must be U.S.-based, privately held, for-profit, and independent—not subsidiaries or divisions of other companies—as of December 31, 2020. (Since then, some on the list may have gone public or been acquired.) The minimum revenue required for 2017 is $100,000; the minimum for 2020 is $2 million. As always, Inc. reserves the right to decline applicants for subjective reasons. Growth rates used to determine company rankings were calculated to three decimal places. There was one tie on this year’s Inc. 5000. Companies on the Inc. 500 are featured in Inc.’s September issue. They represent the top tier of the Inc. 5000.
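The ranking rule comes down to a simple percentage-growth calculation. The sketch below is illustrative only. It assumes growth is measured as (2020 revenue - 2017 revenue) / 2017 revenue × 100, applies the two revenue minimums stated above, and, as an assumption, gives identical growth rates a shared rank. The company names and figures are hypothetical. The other eligibility tests (founding date, independence, Inc.'s subjective review) are not modeled.

```python
from dataclasses import dataclass

# Revenue minimums stated in the methodology
MIN_REVENUE_2017 = 100_000
MIN_REVENUE_2020 = 2_000_000


@dataclass
class Applicant:
    name: str  # hypothetical applicant data
    revenue_2017: float
    revenue_2020: float


def growth_percent(a: Applicant) -> float:
    """Percentage revenue growth from 2017 to 2020, rounded to three decimal places."""
    return round((a.revenue_2020 - a.revenue_2017) / a.revenue_2017 * 100, 3)


def rank(applicants: list[Applicant]) -> list[tuple[int, str, float]]:
    """Drop applicants below the revenue minimums, then rank by growth, highest first.

    Assumption: applicants with identical rounded growth share the same rank.
    """
    eligible = [
        a for a in applicants
        if a.revenue_2017 >= MIN_REVENUE_2017 and a.revenue_2020 >= MIN_REVENUE_2020
    ]
    scored = sorted(((growth_percent(a), a.name) for a in eligible), reverse=True)
    ranked: list[tuple[int, str, float]] = []
    for i, (growth, name) in enumerate(scored):
        tied = i > 0 and growth == scored[i - 1][0]
        position = ranked[-1][0] if tied else i + 1
        ranked.append((position, name, growth))
    return ranked


if __name__ == "__main__":
    sample = [
        Applicant("Alpha Co", 500_000, 3_000_000),    # 500% growth
        Applicant("Beta LLC", 1_000_000, 2_500_000),  # 150% growth
        Applicant("Gamma Inc", 50_000, 5_000_000),    # excluded: 2017 revenue below minimum
    ]
    print(rank(sample))
```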

About Definian

Definian is a technology consulting firm based in Chicago, Illinois, specializing in data migration. The company's innovative services and Applaud® software ensure projects remain on track and reduce overall risk in even the most complex environments. With over three decades of successful execution, our solutions have a proven track record across a wide array of industries and applications. We have the knowledge and understanding to provide our clients with tailored solutions that address their specific data migration requirements.

About Inc. Media

The world’s most trusted business-media brand, Inc. offers entrepreneurs the knowledge, tools, connections, and community to build great companies. Its award-winning multiplatform content reaches more than 50 million people each month across a variety of channels including web sites, newsletters, social media, podcasts, and print. Its prestigious Inc. 5000 list, produced every year since 1982, analyzes company data to recognize the fastest-growing privately held businesses in the United States. The global recognition that comes with inclusion in the 5000 gives the founders of the best businesses an opportunity to engage with an exclusive community of their peers, and the credibility that helps them drive sales and recruit talent. The associated Inc. 5000 Vision Conference is part of a highly acclaimed portfolio of bespoke events produced by Inc. For more information, visit www.inc.com.

For more information on the Inc. 5000 Vision Conference, visit conference.inc.com.

Operationalizing Compliance Controls

At Definian, we understand the challenges associated with the ever-changing compliance environment. Whether compliance is driven by external regulation or by internal corporate strategy, the state of compliance can sometimes consist of unknowns and best guesses.

Organizations may have a clear understanding of what they need to comply with, and the supporting policies and standards may already have been developed. The challenge organizations often face is ensuring that these policies and standards are actually being adhered to and that the scope of adherence is comprehensive. This is where controls play a key role.

Developing and using controls to oversee the implementation of policies and standards is nothing new. However, merely authoring controls and mandating their use does not ensure they are being followed. How do we know the controls are being adhered to? How do we know the controls are still current? How do we know that controls are aligned with the appropriate subject content?

Recently, Definian partnered with a multinational financial services organization that needed to ensure compliance with financial regulations and internal policies. Their challenge was to ensure the correct controls were overseeing the correct content in the correct procedural context.

Our approach was to look at the various procedural components as distinct business constructs. This included:

- The policies and standards that inform the control;

- The resulting controls;

- The content which is subject to control;

- The processes / procedures that generate or consume the respective content.

We then represented these components as distinct business objects in an appropriate tool, thereby enabling active management and governance including:

- Lifecycle management – The use of workflows to govern the creation, vetting and approval of the business objects;

- Ownership and accountability – The assignment of business object ownership to respective role players;

- Lineage – The mapping of relationships between the policies and standards and the controls that enforce them, between the controls and the content they oversee, and between the content and the processes/procedures in which it is involved.

Once management and governance were in place, the opportunity to measure and monitor compliance presented itself. A given control could be viewed in multiple contexts: what policy or standard it was monitoring, what content it was overseeing, and what processes/procedures were involved. Similarly, a given process/procedure could be assessed for what content it generates or consumes and what controls oversee that content.
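The lineage described above can be modeled as a small graph. Controls link to policies and to content, and processes link to content. The sketch below is a hypothetical in-memory stand-in for whatever governance tool holds these business objects, and the class, method, and object names are illustrative. It shows how one control can be viewed in each context and how a process can be traced to the controls that oversee its content.

```python
class LineageGraph:
    """Minimal in-memory model of control lineage (hypothetical; in practice this lives in a governance tool)."""

    def __init__(self) -> None:
        self.enforces: dict[str, set[str]] = {}  # control -> policies/standards it enforces
        self.oversees: dict[str, set[str]] = {}  # control -> content it oversees
        self.touches: dict[str, set[str]] = {}   # process -> content it generates or consumes

    @staticmethod
    def _link(mapping: dict[str, set[str]], source: str, target: str) -> None:
        mapping.setdefault(source, set()).add(target)

    def link_policy(self, control: str, policy: str) -> None:
        self._link(self.enforces, control, policy)

    def link_content(self, control: str, content: str) -> None:
        self._link(self.oversees, control, content)

    def link_process(self, process: str, content: str) -> None:
        self._link(self.touches, process, content)

    def control_context(self, control: str) -> dict[str, set[str]]:
        """A control seen from three angles: policies, overseen content, and processes touching that content."""
        content = self.oversees.get(control, set())
        processes = {p for p, items in self.touches.items() if items & content}
        return {
            "policies": set(self.enforces.get(control, set())),
            "content": set(content),
            "processes": processes,
        }

    def process_context(self, process: str) -> dict[str, set[str]]:
        """A process seen through the content it touches and the controls overseeing that content."""
        content = self.touches.get(process, set())
        controls = {c for c, items in self.oversees.items() if items & content}
        return {"content": set(content), "controls": controls}


if __name__ == "__main__":
    g = LineageGraph()
    g.link_policy("CTL-01", "Record Retention Standard")
    g.link_content("CTL-01", "Loan Ledger")
    g.link_process("Month-End Close", "Loan Ledger")
    print(g.control_context("CTL-01"))
    print(g.process_context("Month-End Close"))
```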

As noted, the implementation of workflows allowed for the management of business object lifecycles. Workflows were also leveraged to ensure continuous monitoring of the control framework. The recertification of controls was imposed at set intervals and the addition of new content was subject to review to ensure the appropriate controls were associated. Dashboards were created to measure control coverage and identify relevant content not subject to control.
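On a dashboard, control coverage typically reduces to a set difference: in-scope content minus content linked to at least one control. Recertification tracking is a date comparison. This is a minimal sketch with hypothetical content and control IDs and an illustrative 365-day recertification interval. The actual interval used on the engagement is not stated above.

```python
from datetime import date, timedelta


def control_coverage(in_scope: set[str], oversees: dict[str, set[str]]) -> tuple[float, list[str]]:
    """Share of in-scope content overseen by at least one control, plus the uncovered items."""
    covered = set().union(*oversees.values())
    uncovered = sorted(in_scope - covered)
    ratio = 1 - len(uncovered) / len(in_scope) if in_scope else 1.0
    return ratio, uncovered


def overdue_for_recertification(last_certified: dict[str, date], today: date,
                                interval_days: int = 365) -> list[str]:
    """Controls whose last recertification is older than the (assumed) interval."""
    cutoff = today - timedelta(days=interval_days)
    return sorted(c for c, certified in last_certified.items() if certified < cutoff)


if __name__ == "__main__":
    content = {"Loan Ledger", "Trade Blotter", "Customer KYC File", "FX Rates"}
    links = {"CTL-01": {"Loan Ledger", "Trade Blotter"}, "CTL-02": {"Trade Blotter", "Customer KYC File"}}
    print(control_coverage(content, links))
    print(overdue_for_recertification(
        {"CTL-01": date(2020, 1, 1), "CTL-02": date(2021, 6, 1)}, today=date(2021, 8, 17)))
```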

Definian can help your organization develop a control framework, including identifying new controls, codifying existing controls, and building a comprehensive control lineage. Reach out to us today!

How a Go-Live Dress Rehearsal Ensures Cutover Success

Go-live cutover activities are the most important part of an Enterprise Resource Planning (ERP) implementation, right? Cutover is the final push of critical data into production, so surely it must matter the most? That was the opinion I held early in my career, and as a result, I felt that go-live week deserved more of my attention than any other week of the year. Today, however, I emphasize the final test cycle: the dress rehearsal for go-live.

The final test cycle before cutover deserves as much or even more attention than cutover itself. Years of successful data migration projects and countless test cycles have influenced my opinion today. As you continue your own implementation, I hope that you and your team elevate the importance of the final test cycle. I invite you to reflect on my insights below as you lead your project team to the final phases of implementation.

Why do we treat it like a dress rehearsal?

Every successful implementation project has a schedule with numerous test cycles for configuration, data migration, validation, loading and system & integration testing. The last test cycle, the one before the final flurry of go-live activities, is the dress rehearsal. Every action that needs to take place during go-live is replicated during the dress rehearsal. Timing and sequencing are key. Aligning resources and understanding expectations are critical to designing a go-live schedule that minimizes business disruption and maximizes the likelihood of smooth operations post-cutover. If a go-live cutover task is expected to happen at 3 AM on a Saturday, that same activity needs to have resources at the ready to execute the plan at 3 AM on dress rehearsal Saturday.

Most project teams put a lot of focus on the final push for go-live (and rightfully so!). From a project management and data migration perspective, I put just as much—if not more—emphasis on the dress rehearsal. As dress rehearsal approaches, the “big picture” finally starts to settle in. After months of test cycles and “more leeway” with timing (think of activities planned over 4 weeks instead of 2 weeks), all our work is locked into a very specific, high-visibility timeline. Dress rehearsal is when we finally learn if our setups, runtime documentation, data dependencies and workflows are truly synchronized.

What does this mean for Project Managers?

As a project manager, I find that dress rehearsal is when my team truly understands the sense of urgency and pinpoint accuracy needed for go-live. The last test cycle is when teammates learn how to “flip a switch” and approach their tasks with focus and intentionality. As we prepare for specific data migration activities, timing is everything. We go over our checklists: are configurations set up; have we received the necessary supplemental files; can we run ETL processes (and support pre-load validations in parallel!) in the allotted time?
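Whether the work fits the allotted time is essentially a critical-path question. If tasks run in parallel wherever dependencies allow, the plan's length is the latest earliest-finish time. This hedged sketch uses Python's standard `graphlib` module (3.9+). The task names, durations, and 48-hour window are hypothetical. The sketch also assumes unlimited people and machines for parallel work, which real cutovers rarely have.

```python
from graphlib import TopologicalSorter


def earliest_finish(tasks: dict[str, tuple[float, list[str]]]) -> dict[str, float]:
    """Earliest finish time (hours from cutover start) for each task.

    `tasks` maps a task name to (duration_hours, prerequisite task names). A task
    starts as soon as all its prerequisites finish (unlimited parallelism assumed).
    """
    graph = {name: deps for name, (_, deps) in tasks.items()}
    finish: dict[str, float] = {}
    # static_order() yields every task after all of its prerequisites
    for name in TopologicalSorter(graph).static_order():
        duration, deps = tasks[name]
        start = max((finish[d] for d in deps), default=0.0)
        finish[name] = start + duration
    return finish


# Hypothetical cutover tasks and durations (hours)
TASKS = {
    "legacy_extract":       (6, []),
    "etl_suppliers":        (4, ["legacy_extract"]),
    "etl_items":            (10, ["legacy_extract"]),
    "validate_suppliers":   (5, ["etl_suppliers"]),  # overlaps with etl_items
    "validate_items":       (4, ["etl_items"]),
    "load":                 (12, ["validate_suppliers", "validate_items"]),
    "post_load_validation": (6, ["load"]),
}

if __name__ == "__main__":
    finish = earliest_finish(TASKS)
    window_hours = 48  # hypothetical allotted window
    total = max(finish.values())
    print(f"Plan needs {total} hours; fits window: {total <= window_hours}")
```

A dress rehearsal is, in effect, the test of whether the real durations match the ones written into such a plan.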

My biggest worry is, “What did we collectively miss?” Dress rehearsal is the last time to correct the complex web of activities; my mindset during go-live cutover is that something unexpected will happen. A smooth and successful final test cycle builds confidence in the entire implementation process. When we iron out the wrinkles during dress rehearsal, we prepare ourselves for the inevitable curveball during go-live. Instead of fretting about the schedule and cutover plan, the team already knows that we have excelled under the exact same expectations; it allows us to focus on solutions instead of timelines.

How does this help us approach cutover with confidence?

Once a dress rehearsal is executed successfully, my mind is much more at ease entering go-live cutover. We are one united team, everyone with a clear understanding of expectations. We have the confidence knowing that if (when?) something unexpected happens during go-live, we will rise to the occasion and find solutions. A successful dress rehearsal is an excellent indication that the entire project team can deliver a successful go-live cutover when it matters most.

Phased ERP Go-Live for a Multi-System Healthcare Provider

Background

One of the world’s largest medical centers made strategic plans to upgrade their entire data architecture. This transformation required moving their Enterprise Resource Planning (ERP) system from Lawson to Oracle Cloud and their Supplier Relationship Management (SRM) system to Ivalua. This organization faced serious data conversion issues with their initial implementation in London; consequently, they enlisted Definian to handle their second implementation: performing data migration for their main US campus. Definian addressed data complexities with a methodical and thoroughly validated approach, allowing the client to use the new system with full confidence in the underlying data. As a result of the second effective implementation, the client re-enlisted Definian for their third wave to handle the integration of recently acquired hospitals onto their new data platform. All of these newly acquired medical centers needed to have their data converted to match the specifications of the already live data in Oracle Cloud and Ivalua.

Due to the intricacies of the third wave implementation (large number of conversion objects, high data volume, dependent integrations), the project plan was engineered to include two separate go-live windows. The first go-live (aka the “Winter Release”) would reflect the master data objects in Oracle Cloud, the integration of loaded Oracle master data into Ivalua, and loading all additional source-to-pay data objects into Ivalua. The second go-live would reflect the transactional data in Oracle Cloud, building upon the already loaded master data. This case study will focus on the “Winter Release” go-live: the load of Oracle Cloud Supply Chain conversion objects, the subsequent integration of this data into the Ivalua procurement system, and the load of all Ivalua conversion objects for procurement purposes.

Project Requirements

The “Winter Release” go-live involved six Oracle Cloud data objects (Items, Manufacturer Part Numbers, Supplier Part Numbers, Inventory Org Items, Intraclass UOM, Suppliers) and three Ivalua data objects (Suppliers, Contracts, Pricing). With these data loads, the client wanted to ensure minimal disruption to business users; as such, it was a priority to load the data in an expeditious and creative fashion. The “Winter Release” project plan stated that between Sunday night and Friday morning the team would complete the following: obtain a legacy data refresh, perform conversion runs, preliminarily validate converted data to be loaded into Oracle, load data into Oracle, and validate loaded data. From there, the Ivalua integration would run on Friday to include the recently loaded Oracle Cloud data. All other Ivalua data objects were to be loaded over the weekend so that end users could perform their day-to-day tasks the following Monday.

Key Activities

The data migration team fully leveraged Definian’s EPACTL framework and Applaud® data migration software, performing the following activities:

We began by extracting legacy data to be used for Oracle and Ivalua data conversions. Through multiple test runs, we were able to reduce the time it took to extract the legacy data by updating table set-up and removing unneeded columns. At the beginning of the project, we profiled and analyzed source data to determine any legacy data quirks and understand how the data should exist in the end-state Oracle and Ivalua systems. We then performed data cleansing which entailed updating legacy data inaccuracies, supplier deduplication, and address standardization. Next, we performed the necessary data transformations specified by the client to be reflected in the Oracle Cloud and Ivalua production environments. In the process, we automated our data conversions to limit manual work. We also used our proprietary Oracle Cloud Critical-to-Quality (CTQ) pre-load validation checks to identify load errors prior to executing data loads. These checks led to targeted error resolution before it was too late, thereby enabling efficient and highly effective data loads within a tight timeframe. Additionally, we updated Oracle load settings to use multiple threads; this slight tweak significantly reduced load times. We performed the necessary boundary system integrations to ensure that the client’s Oracle Cloud data would be reflected in Ivalua and completed all necessary Ivalua data loads.
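Supplier deduplication usually depends on comparing standardized values rather than raw text. The following is a minimal sketch, not Definian's actual cleansing logic. It assumes a tiny hypothetical abbreviation map, a hypothetical list of legal-entity suffixes to ignore, and US ZIP codes truncated to five digits. It then groups records whose normalized name, address, and postal code match. Production-grade address standardization generally relies on a postal reference standard and fuzzy matching, which this sketch omits.

```python
import re
from collections import defaultdict

# Hypothetical standardization tables (a real project would use a postal reference standard)
ABBREVIATIONS = {"street": "st", "avenue": "ave", "road": "rd", "suite": "ste", "boulevard": "blvd"}
NAME_SUFFIXES = {"inc", "llc", "corp", "co", "ltd"}


def _tokens(text: str) -> list[str]:
    """Lower-case, replace punctuation with spaces, and split into words."""
    return re.sub(r"[^\w\s]", " ", text.lower()).split()


def normalize_address(line: str) -> str:
    return " ".join(ABBREVIATIONS.get(t, t) for t in _tokens(line))


def normalize_name(name: str) -> str:
    return " ".join(t for t in _tokens(name) if t not in NAME_SUFFIXES)


def duplicate_groups(suppliers: list[dict[str, str]]) -> list[list[str]]:
    """IDs of supplier records sharing a normalized name, address, and 5-digit ZIP."""
    groups: dict[tuple[str, str, str], list[str]] = defaultdict(list)
    for s in suppliers:
        key = (normalize_name(s["name"]), normalize_address(s["address"]), s["postal_code"].strip()[:5])
        groups[key].append(s["id"])
    return [ids for ids in groups.values() if len(ids) > 1]


if __name__ == "__main__":
    sample = [
        {"id": "V100", "name": "Acme Medical Supply, Inc.", "address": "12 Main Street, Suite 4", "postal_code": "60601"},
        {"id": "V205", "name": "ACME MEDICAL SUPPLY", "address": "12 Main St. Ste 4", "postal_code": "60601-1234"},
        {"id": "V310", "name": "Northside Linen LLC", "address": "9 Lake Avenue", "postal_code": "60614"},
    ]
    print(duplicate_groups(sample))
```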

Our EPACTL process is enhanced by our detailed project management strategies and communicative consultants. Our detailed project plan outlined all necessary steps from data extraction to post-load validation as well as the expected time to complete each step. Additionally, our team was in constant communication with client leads to execute changes and answer questions during the validation process; this allowed us to address issues in real time.

Client Risks and Mitigation Strategies

COVID-19-Induced Challenges

The “Winter Release” go-live was executed later than originally anticipated due to the COVID-19 pandemic. Eight months after the start of the project, project activities were paused; the client’s leadership team determined a new path forward due to COVID-related interruptions.

- Resource changes occurred as a result of the pandemic. Additionally, client SME availability was oftentimes scarce.

- Due to COVID restrictions, the “Winter Release” go-live was executed remotely.

- The production Oracle Cloud environment was already live with data from previous project waves; this data was regularly being accessed by users around the globe.

- The hospital system had a large volume of data including 4.5 million inventory organization item records.

Our solutions

- Definian was a constant across the project, providing support to the client at the start of the project and after the project pause. As a result, Definian consultants had a deep understanding of the legacy data and the objectives of the implementation, which allowed the Definian team to assist with/help drive data validation.

- Definian consultants set up Microsoft Teams channels to increase real-time communication and address any problems expeditiously.

- Definian remained extra vigilant to ensure duplicate records would not be loaded for already existing data.

- We maintained consistent cross-functional communication to guarantee that all necessary processes were turned on/off as needed for successful data loads.

- Definian’s Applaud® software is able to ingest, process, and output large data volumes. We worked with the client to ensure that the data would be exported in a validation-friendly manner.
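When production already holds data from earlier waves, guarding against duplicates can be framed as a key comparison before each load. This is a hedged sketch. It assumes each object has a natural key (the field names `item_number` and `org` are hypothetical) and normalizes that key by trimming and upper-casing. It then holds back any converted record whose key already exists in production or repeats within the same file.

```python
def make_key(record: dict, key_fields: tuple[str, ...]) -> tuple[str, ...]:
    """Normalized natural key: trimmed, upper-cased values of the chosen fields."""
    return tuple(str(record[f]).strip().upper() for f in key_fields)


def split_against_production(converted: list[dict], existing_keys: set[tuple[str, ...]],
                             key_fields: tuple[str, ...]) -> tuple[list[dict], list[dict]]:
    """Separate converted records into those safe to load and those to hold back.

    A record is held back if its key already exists in production or appeared
    earlier in the same load file.
    """
    to_load: list[dict] = []
    held_back: list[dict] = []
    seen: set[tuple[str, ...]] = set()
    for record in converted:
        key = make_key(record, key_fields)
        if key in existing_keys or key in seen:
            held_back.append(record)
        else:
            seen.add(key)
            to_load.append(record)
    return to_load, held_back


if __name__ == "__main__":
    fields = ("item_number", "org")
    production_extract = [{"item_number": "ITM-001", "org": "MAIN"}]
    existing = {make_key(r, fields) for r in production_extract}
    converted = [
        {"item_number": "itm-001 ", "org": "main"},  # already in production
        {"item_number": "ITM-002", "org": "MAIN"},
        {"item_number": "ITM-002", "org": "MAIN"},   # repeated within the file
    ]
    ok, held = split_against_production(converted, existing, fields)
    print(len(ok), "to load;", len(held), "held back for review")
```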

Data Transformation and Requirements

Challenges

- Incomplete testing and data validation in prior mock cycles led to inconsistencies in financial reports that were not discovered until later in the project. This impacted the SCM conversions (Items and Suppliers), as the legacy selection logic needed to be updated to support the client’s financial requirements.

- The client made late changes to data conversion logic (the week before data was slated to be loaded into Production). These mapping updates added extra risk as the new conversion logic was not tested in a development environment.

- Different supplier types being converted, including 1099, international, and "confidential" suppliers, each required specific consideration.

- Multiple legacy records were combined into one Oracle Cloud record for Suppliers and Inventory Organizations, adding an extra layer of complexity.

- The Ivalua implementation and integration were not part of the initial project scope. Adding Ivalua mid-project required the team to act quickly, executing mapping sessions and conversion builds with fewer mock cycles to test the conversion logic.

- The client had a tight timeline to execute the Oracle Cloud data loads due to the Ivalua integration. The Oracle Cloud loads had to be finished by Friday morning in order to carry out the Ivalua integration Saturday morning and subsequently load the Ivalua data objects over the weekend.

- The project timeline was written with the “Winter Release” go-live overlapping with the UAT test cycle for the April transactional go-live. This overlap added extra constraint on client resources.

- The dual maintenance period was extended due to the phased go-live (the “Winter Release” and later the April transactional go-live). This two-month lag period required that the client carefully track changes in both Oracle/Ivalua as well as their legacy system.
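Combining several legacy records into one target record, as with Suppliers and Inventory Organizations, generally requires two things: a survivorship rule, and a cross-reference that maps every legacy ID to its new ID. This sketch makes several assumptions. It uses a hypothetical consolidation key (a tax ID here) and a "most recently updated record wins" rule, with blanks filled from older records. It also uses a made-up `SUP` numbering scheme. The actual consolidation rules used on the project are not described here.

```python
from collections import defaultdict


def consolidate(legacy_records: list[dict], key_field: str,
                target_prefix: str = "SUP") -> tuple[list[dict], dict[str, str]]:
    """Merge legacy records that share `key_field` into one target record each.

    Survivorship (assumed): the most recently updated record wins, and its blank
    fields are filled from the other records in the group, newest first.
    Returns the merged target records and a legacy_id -> target_id crosswalk.
    """
    groups: dict[str, list[dict]] = defaultdict(list)
    for record in legacy_records:
        groups[record[key_field]].append(record)

    targets: list[dict] = []
    crosswalk: dict[str, str] = {}
    for n, key in enumerate(sorted(groups), start=1):
        # Newest first; ISO-8601 date strings sort chronologically
        members = sorted(groups[key], key=lambda r: r["last_updated"], reverse=True)
        merged = dict(members[0])
        for other in members[1:]:
            for field, value in other.items():
                if merged.get(field) in (None, "") and value not in (None, ""):
                    merged[field] = value
        target_id = f"{target_prefix}{n:06d}"
        merged["target_id"] = target_id
        targets.append(merged)
        for record in members:
            crosswalk[record["legacy_id"]] = target_id
    return targets, crosswalk


if __name__ == "__main__":
    legacy = [
        {"legacy_id": "L1", "tax_id": "36-1234567", "name": "Acme", "phone": "", "last_updated": "2019-05-01"},
        {"legacy_id": "L2", "tax_id": "36-1234567", "name": "Acme Medical", "phone": "312-555-0100", "last_updated": "2018-02-10"},
        {"legacy_id": "L3", "tax_id": "13-7654321", "name": "Northside", "phone": "773-555-0199", "last_updated": "2020-01-15"},
    ]
    targets, xwalk = consolidate(legacy, "tax_id")
    print(xwalk)
```

Keeping the crosswalk alongside the merged records is what lets post-load validation trace each target record back to every legacy record that fed it.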

Our solutions

- Definian scheduled meetings to discuss modifications to selection logic and tested the impacted conversions to ensure that the associated code changes resulted in the correct output.

- Definian consultants validated the conversion changes and ensured that the output appeared as anticipated prior to releasing the new set of files to the client.

- Definian provided reports to assist with pre-load validation and answered specific client questions/concerns as they arose.

- Leveraging Definian’s decades of data migration experience, the Definian team was prepared to execute additional data conversions in an efficient, effective manner.

- Our ethos (The Definian Way) involves rising to the opportunity to meet our clients’ needs. We understood the importance of executing the loads on schedule and ensured that the data would be ready for end users come Monday morning.

- Again, Definian rose to the opportunity to meet project deadlines and worked with client SMEs’ schedules to address concerns as they arose.

- Definian supported the client through their dual maintenance period and reported any unexpected data cases on conversion-specific error logs prior to loads.
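Pre-load checks that write to a conversion-specific error log can be sketched as a small rules table applied to every record. The rules, field names, length limit, and allowed units of measure below are illustrative assumptions. They are not Definian's proprietary CTQ checks or Oracle Cloud's actual field limits.

```python
import csv
import io

# Illustrative rules for a hypothetical "Items" conversion; real rules come from
# the target system's load specifications and the client's requirements.
RULES: dict[str, dict] = {
    "item_number": {"required": True},
    "description": {"required": True, "max_len": 240},
    "uom": {"required": True, "allowed": {"EA", "BX", "CS"}},
}


def validate(records: list[dict], rules: dict[str, dict],
             id_field: str = "item_number") -> list[tuple[str, str, str]]:
    """Return (record, field, issue) tuples for every rule violation."""
    errors: list[tuple[str, str, str]] = []
    for row, record in enumerate(records, start=1):
        record_id = record.get(id_field) or f"row {row}"
        for field, rule in rules.items():
            value = str(record.get(field) or "").strip()
            if not value:
                if rule.get("required"):
                    errors.append((record_id, field, "missing required value"))
                continue
            if "max_len" in rule and len(value) > rule["max_len"]:
                errors.append((record_id, field, f"longer than {rule['max_len']} characters"))
            if "allowed" in rule and value not in rule["allowed"]:
                errors.append((record_id, field, f"unexpected value {value!r}"))
    return errors


def error_log_csv(errors: list[tuple[str, str, str]]) -> str:
    """Render the errors as CSV text for a conversion-specific error log."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["record", "field", "issue"])
    writer.writerows(errors)
    return buffer.getvalue()


if __name__ == "__main__":
    items = [
        {"item_number": "ITM-1", "description": "Gauze pad", "uom": "EA"},
        {"item_number": "ITM-2", "description": "", "uom": "PK"},
    ]
    print(error_log_csv(validate(items, RULES)))
```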

Results

The large data volume, late requirements changes, as well as shifting resources/timelines added risk to the overall success of the project. However, with a tight project plan, several mock cycles, automated processes, and Definian’s years of data migration experience, the entire go-live process went smoothly from start to finish. Using our Applaud® software, Definian consultants quickly adjusted to changing requirements and supported data validation efforts to propel this successful implementation. All Oracle Cloud data objects loaded successfully and were swiftly validated; these efforts allowed the Ivalua integration and data loads to take place on schedule. The “Winter Release” go-live was an important milestone as it instilled confidence in the project team and laid the foundation for the official project go-live thereafter. Our client’s data is more streamlined than ever and they are able to operate efficiently across campuses and countries!

Data Governance Kick-Off Meeting

Kicking off your organization’s data governance implementation with an introductory meeting is an effective way to ensure stakeholder buy-in and set expectations for things to come. With the proper focus, this meeting can quickly lay the groundwork for the journey ahead. That said, avoid the temptation to cover everything during this meeting and instead limit it to the following sections:

- Introduction

- Primer on data governance

- Thoughts from the group

- Establish meeting cadence

- High level overview of plan + deliverables (time permitting)

Introduction:

At the start of the meeting, take a few minutes to get each person’s thoughts about what data governance is and the impact they anticipate it will have on the organization. People have different ideas about what data governance is. Some will think of it as a technical project, others a business process, others something that is contained within an application, and others will think it is only going to be a pain in the butt. The first step to getting people aligned is learning where everyone is starting out.

Primer on data governance:

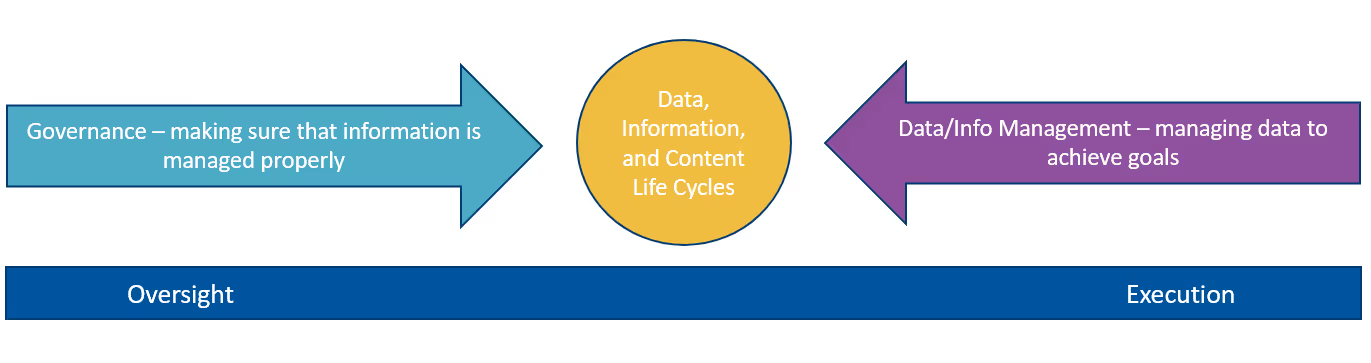

Once we learn the team’s initial perspective of data governance, it is time to put down a central definition and relate everyone’s individual definitions to that definition. DAMA shares a concise arrow diagram that defines and shows how governance fits into the data management landscape.

This diagram is a fantastic tool for pulling everyone's definitions into something the entire group can coalesce around. From here, we can take the definition a half step deeper into data governance.

I have found it helpful to go through what data governance is and is not: its purpose is to enable business outcomes, not to limit activities, and it is a process, not a project, that will require long-term commitment from the organization to be successful. It takes a bit of dialogue, but if the group begins to recognize that data governance is more about organizational change than just another thing to do, the meeting will have been a success.

Depending on the group and discussion, this might be all there is time for, and it would be fine to begin closing by establishing next steps and a meeting cadence (more on this below) so that you can maintain momentum without getting stuck at the scheduling step later.

Thoughts from the group:

If there is time left and the group is in good spirits, take a few minutes to get additional thoughts from the group about what they are looking to get out of data governance at their organization. Capture these initial thoughts and tie them to the data governance implementation roadmap. Being able to tie back to these ideas later in the implementation will significantly boost engagement and help your team see the impact of their efforts.

Your team may be optimistic and share ideas around positive changes like better data quality and faster reporting. Call out and celebrate this! But your discussion will also include thoughts from those who are more resistant to change. Some folks might even share a negative outlook or express skepticism about the implementation having any impact on their day-to-day role. Even this “negative” idea is important. It will eventually be turned into a positive, as it sets up further discussions on how to measure the impact governance is having on the organization.

High level overview of plan + deliverables (time permitting):

As the meeting ends, take a few minutes to tie the group's thoughts to the next activity or two. Tying thoughts to activities builds urgency, shows that the work will generate positive results for the organization, and keeps people engaged for the next discussion.

Establish a meeting time + cadence:

Before the meeting is over, take 10 minutes to establish a suitable time and frequency for the group to meet. Schedule management could be the most difficult aspect of any project. Getting a commitment from group members to meet at a certain frequency while you have their attention is much more effective than doing the calendar hunt to find an open time. Once the time is set, send the meeting invite immediately.

Share a meeting follow up:

Sending a meeting summary is always good practice, and sending one after the initial kickoff is of utmost importance. The summary will reinforce the importance, impact, and learnings that came out of your collaborative meeting. The summary should include:

- Materials that were covered

- The team’s thoughts

- Upcoming project schedule

- Agreed upon meeting cadence

- Issues resolved/outstanding

- Next actions

- Main topic for the next meeting - Identifying gaps/defining best practices

Client Success Story – Enterprise Data Management at Salesforce

Definian partnered with the Salesforce team to create an enterprise tool for all Salesforce internal users that incorporates multiple data sources, styles, and cadences and allows users to browse and interact with essential resources such as data cataloging tools, data governance and risk management guidelines, and data quality best practices, to name a few. The teams worked extensively to build a unified view that serves as a data marketplace, with all analytical solutions in one place. This makes existing data APIs discoverable company-wide and replaces a previously fragmented experience with a Unified Smart Catalog. The metadata app, knowledge base, and corporate updates can now be accessed with a single click, and users can receive self-serve analytics based on usage patterns and trends.

A unified experience for the Salesforce team means an easy-to-use, one stop shop to explore KPIs, key metrics, and top data assets across the company. The data discovery process is easier than ever, adding traceability to monitor usage of data assets, and using modern practices for data orchestration, transformation, storage, warehousing, interactive query, and visualization. The intuitive design of the solution makes the framework scalable, which will serve the company’s ongoing and future projects as well.

The teams from Definian and Salesforce worked together to plan, develop, and test the centralized user experience that now delivers great value through the following features:

- A single user interface (UI) for Data Catalog Capabilities including Data Discovery, Data Stewardship, and a Business Glossary

- A single view of compliance audits and risk assessments through various reports and dashboards

- A single view of data quality reports and dashboards by business unit

- An intuitive data health view with a quality score

- A newly-enabled knowledge base

- Custom dashboarding capabilities

This project signifies a great partnership with the Salesforce team to work effectively and efficiently with data from myriad sources.

Data Holds Organizations Back

Data is a critical asset for every organization, especially in the modern era. Despite its recognized importance, many organizations fail to properly manage data as a value-driving asset. According to McKinsey, organizations spend 30% of their time on non-value-added tasks because of poor data management; compounding the problem, 70% of employees have access to data that they should not be able to see.

Definian focuses on the foundational aspects that strengthen data management at your organization. Our process is geared to render data compliant, clean, consistent, credible, and current. With these measures adequately addressed, you will be confident that your data is secure, increasing in value, and powering insights across the enterprise.

Why Data Impacts Performance

DEGRADATION

Depending on the entity, data degrade at a rate of 4-20% a year. Without active mitigation and monitoring, data will constantly decrease in usefulness.
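To make the degradation range concrete, assume a constant share of the still-accurate records goes stale each year and nothing is corrected. Under that assumption, accuracy compounds downward. This is a simplification, since real decay varies by entity and attribute, but it shows the scale of the effect:

```python
def share_still_accurate(annual_decay_rate: float, years: int) -> float:
    """Fraction of records still accurate after `years`.

    Assumes a constant annual decay rate applied to the records that are still
    accurate, and no corrective maintenance in between.
    """
    return (1 - annual_decay_rate) ** years


if __name__ == "__main__":
    for rate in (0.04, 0.20):  # the 4-20% range cited above
        print(f"{rate:.0%} per year -> {share_still_accurate(rate, 3):.1%} accurate after 3 years")
```

Under this model, three years without maintenance leaves roughly 88% of records accurate at a 4% annual rate but only about 51% at 20%.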

QUALITY

An insufficient data quality strategy plus growing data volumes means that inaccurate data are affecting your analytics and are causing a manual data cleanup burden on your team.

INCOMPLETE METRICS

The principle that what is not measured is not managed applies to data, too. Organizations that do not track important data-related metrics cannot see the impact of ongoing data management gaps.
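Field completeness is one of the simplest data-related metrics to start tracking. This is a minimal sketch with hypothetical field names. Which metrics matter most (completeness, validity, timeliness, and so on) depends on the organization.

```python
def completeness(records: list[dict], fields: list[str]) -> dict[str, float]:
    """Share of records with a non-blank value for each field."""
    total = len(records)
    result: dict[str, float] = {}
    for field in fields:
        filled = 0
        for record in records:
            value = record.get(field)
            # None, missing keys, and whitespace-only strings count as blank; 0 or False count as filled
            if value is not None and str(value).strip() != "":
                filled += 1
        result[field] = filled / total if total else 0.0
    return result


if __name__ == "__main__":
    suppliers = [
        {"email": "ap@example.com", "phone": ""},
        {"email": None, "phone": "312-555-0100"},
        {"email": "billing@example.com"},
    ]
    print(completeness(suppliers, ["email", "phone"]))
```

Tracked over time, a number like this turns "our supplier data is getting worse" into a trend that can be measured and managed.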

SECURITY

Without role-based security policies that meet regulatory, contractual, and ethical requirements, organizations put themselves at significant risk.

COMPLEXITY

As data velocity accelerates and unstructured data is continuously entered into the system, data lakes start to turn into data swamps.

LACK OF STANDARDS

Data entered and maintained by different people, departments, and applications can slow operations and pollute analytics.

SILOS

Data silos that persist between departments slow business operations, increase integrity issues and prevent the data access that is needed.

LINEAGE

When data moves across the landscape, its requirements change. The smallest error or update can have significant ripple effects.

CULTURE

An organization that is not prepared for technological change will be ill-equipped to effectively manage their data in the future.

Modernizing Data Landscapes Since 1985

Prepare Your Organization

- Assess your current data landscape, processes, and governance policies

- Develop a tailored data improvement roadmap

- Help prepare for the organizational change to spur adoption of the new policies and processes

Execute Improvement Roadmap

- Ensure the data vision aligns with business objectives

- Facilitate organizational change

- Implement technical components for data security, quality, interoperability, etc.

Secure data across the organization

- Instill confidence that the team has access to quality data when they need it

- Ensure that your data are compliant with regulatory, contractual, and ethical obligations

- Promote a culture that uses data to improve operations, increase sales, and reach better decisions across the enterprise

Mastering the Item Master: A Tour de Force Wrangling Unconventional Data

A dramatic redesign of the Item Master proposed

Global supply chains have changed dramatically over the last few decades, and constant innovation is required to keep the American steel industry competitive. Strategic investments in Enterprise Resource Planning (ERP) software are one way that our client, a Fortune 500 steel manufacturer, is extracting more value from the same raw materials. As part of a global rollout of Dynamics 365, the Item Master for a particular cold finishing mill required a dramatic redesign.

One of the main objectives of this initiative was to build a true item master across the entire line of business to improve reporting. An item was defined by a collection of 21 individual attributes, including shape, size, chemical composition, and length. Any distinct combination of values for these 21 attributes represented a unique item.

One piece of the puzzle

This cold finishing mill is only one of dozens of divisions implementing Dynamics 365. The steel company is vertically integrated: steel cast at one mill may ultimately find its way into this cold finishing mill, where it can be turned, ground, rolled, chamfered, or annealed. A goal of this global implementation is to integrate the ERP systems across divisions to streamline steel production and finishing. As a result, implementing Dynamics 365 for this cold finishing mill required meeting the requirements and matching the conventions set by divisions already live in Dynamics 365. This mill was just one piece of the puzzle.

The sites that had already migrated to D365 all followed a make-to-stock model and had migrated off a common legacy system, which made the process efficient and repeatable. This division, however, practiced a make-to-order business strategy, meaning all material was made to specifications defined by the customer. Its functional business requirements would therefore differ vastly from those of the divisions already live and would need to be flexible enough to accommodate a wider range of customer demands.

Overcoming documentation gaps

Decades ago, the mill purchased mainframe servers and leveraged in-house knowledge to build its supply chain management system from scratch using COBOL. This truly one-of-a-kind solution helped the mill maintain operational excellence and profitability through turbulent years for the American steel industry. Today, however, the hardware is at risk of failing, the software cannot scale, and the talented people who built the legacy system are nearing retirement. Worse still, the documentation for this complex system is sometimes buried between lines of COBOL code, relegated to tribal knowledge, or missing entirely.

The robust Dynamics operations solution requires more detailed data on the production of the finished products, namely formulas and routes. However, this data did not exist in a structured fashion in the legacy system. This required a much deeper dive by Definian and the technical team to understand how this data could be extracted and migrated to the new system.

Reverse engineering: How we used transactional data to define items

The unique operating model of this steel mill meant that there was no true item master in the legacy system. Deriving an item master and having it integrate seamlessly with the other divisions became a colossal task in data analysis, cleansing, and conversion.

The process began with a review of all required transactional data. Definian analyzed nearly one hundred thousand sales order lines to build the finished goods, hundreds of thousands of production scheduling lines to create the WIP (work-in-process) parts, and years of purchase history to derive the raw materials.
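The core idea, that each distinct combination of item attributes is one item, can be sketched as grouping transaction lines by an attribute tuple. This is a simplified illustration, not the project's actual logic: it uses a hypothetical four-attribute subset of the 21 attributes, made-up values, and a hypothetical `ITM00001` numbering scheme. It also records which transaction sources each derived item appeared in.

```python
# Hypothetical subset of the 21 item-defining attributes; the real list
# (shape, size, chemical composition, length, and others) is longer.
ITEM_ATTRIBUTES = ("shape", "size", "chemistry", "length")

def item_key(line: dict) -> tuple:
    """The distinct combination of attribute values that identifies an item."""
    return tuple(line[attr] for attr in ITEM_ATTRIBUTES)

def derive_items(sources: dict[str, list[dict]]) -> dict[tuple, dict]:
    """Build an item master from transaction lines, one item per distinct key.

    `sources` maps a source name (e.g. sales orders, production schedule,
    purchase history) to its transaction lines.
    """
    items: dict[tuple, dict] = {}
    for source, lines in sources.items():
        for line in lines:
            key = item_key(line)
            if key not in items:
                # First time this combination is seen: assign a new item ID.
                items[key] = {"item_id": f"ITM{len(items) + 1:05d}", "sources": set()}
            items[key]["sources"].add(source)
    return items

# Hypothetical transaction lines.
round_bar = {"shape": "ROUND", "size": "1.000", "chemistry": "1045", "length": "144"}
hex_bar = {"shape": "HEX", "size": "0.750", "chemistry": "12L14", "length": "144"}
items = derive_items({
    "sales_orders": [round_bar, round_bar, hex_bar],
    "production_schedule": [round_bar],
})
```

Repeated lines collapse into a single item, and an item that shows up in several sources is still created only once.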

However, the redesign did not end with the Item Master. With the item master complete, the formula and route data was also needed. The team faced similar challenges here: true master data for formulas and routes did not exist in the legacy system. Adding to this complexity were customer specifications for highly regulated industries and critical applications. We cover how we solved these challenges in separate articles.

Success Today and for Years to Come

Since go-live, the client has been able to identify the specific products it buys and sells while still delivering the experience customers have been accustomed to for years. In addition, the facility now has a true accounting of its production processes, something that was previously confined to the minds of a few planners. Finally, with such a drastic modernization of its technology, the location can now report at the enterprise level rather than from within the silo it operated in for decades.

If you have questions about data management, data quality, or data migration, I'd like to learn about them and work with you to figure out solutions.

Assessment of a Multi-Application Implementation at a Multi-National Company

Experiencing significant issues ahead of User Acceptance Testing (UAT), a multinational company brought Definian in to assess the data migration for a complex multi-application implementation. The project was in danger: there was no confidence in the data migration process or in the data itself. The process took too long to execute, the data quality was poor, real data had yet to make it completely through the process, and something needed to be done to get the project back on track. Our goal was to figure out what that something was and what could be implemented before UAT.

The assessment lasted about 3 days, but during that time we were able to host 22 hours of interviews with 23 different team members, call out project strengths, and identify the major issues that were affecting the project.

During the initial interviews, it was clear that the team's greatest strength was its technical know-how. The team was savvy and whip-smart on every individual technical aspect needed to bring the system to life. The major issues stemmed from the organization's culture and would not be easy to fix.

The primary problem was cultural: everything was someone else's problem. Individuals were confident their own slice was working perfectly, but data always belonged to someone else. Project leadership handed off leadership and management accountabilities to others, who pointed to others, who pointed back to the first group we talked with. Whenever we discussed a problem, a process, or even general status, someone else was being set up to be the fall guy. While this level of avoidance was unusually high, there were valid reasons for the team to skirt responsibility. The organization was constantly looking to cut costs and lay people off. During the assessment, we learned that the company had laid off a chunk of the workforce, including some subject matter experts, to pay for the implementation. This understandably fed the team's fear and poisoned many aspects of the organization.

Stemming from the "it's not my problem" culture, communication between the implementation's components and disparate applications was siloed. Requirements needed by the third hop were never communicated back upstream to the data source. The group on the third hop was raising flags, but without clear coordination across teams, those flags went unseen. On top of the siloed work streams, several disparate, independently managed project plans had been created. This siloed approach kept the different parts of the project from coming together at the appropriate times and made it impossible to track the critical path across the program. If one track had an issue, a spec change, or just an upcoming vacation, the program had no way to calculate the impact.
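Calculating that impact becomes straightforward once all tracks are in one dependency graph. The sketch below uses the standard critical-path idea: each task starts when its latest-finishing prerequisite ends. It reports the program finish and the chain of tasks driving it. The task names, durations (in days), and dependencies are hypothetical.

```python
from graphlib import TopologicalSorter
from typing import Optional

def program_finish(durations: dict[str, int],
                   depends_on: dict[str, set[str]]) -> tuple[int, list[str]]:
    """Return the earliest program finish and the critical path of tasks."""
    graph = {task: depends_on.get(task, set()) for task in durations}
    finish: dict[str, int] = {}
    driver: dict[str, Optional[str]] = {}
    for task in TopologicalSorter(graph).static_order():
        preds = sorted(graph[task])
        # A task starts when its latest-finishing prerequisite is done.
        driver[task] = max(preds, key=lambda p: finish[p]) if preds else None
        start = finish[driver[task]] if preds else 0
        finish[task] = start + durations[task]
    last = max(finish, key=finish.get)
    path, node = [], last
    while node is not None:
        path.append(node)
        node = driver[node]
    return finish[last], path[::-1]

# Hypothetical tracks that previously lived in separate project plans.
durations = {"extract_legacy": 10, "build_mappings": 15, "configure_target": 20,
             "convert_data": 12, "uat": 10}
depends_on = {"convert_data": {"extract_legacy", "build_mappings", "configure_target"},
              "uat": {"convert_data"}}
baseline = program_finish(durations, depends_on)
delayed = program_finish({**durations, "build_mappings": 23}, depends_on)
```

Here the mapping track has 5 days of slack, so an 8-day slip moves UAT out by 3 days (42 to 45). The program can only see this when every track sits in the same plan.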

The third major issue, which is also a byproduct of culture, was that the data governance council was dissolved prior to the start of the implementation. This fed into the “not my problem” culture. Without clear data accountabilities, everyone ran away from data, since everyone knew it would be one of the most difficult aspects of the project to get right. Without the governance rules of the road and oversight, there wasn’t a global data vision, unified standards, comprehensive policies, lineage tracking, quality metrics, etc. During the assessment, the data governance council was in the process of being restarted, but culturally it would be difficult to make the council effective due to a fear of accountability.

While the client was looking for an "easy button" solution that could get the project back on track within a couple of weeks, that wasn't possible in that time frame. Before the team could uncover any remaining technical gaps, the cultural, project management, and data governance issues needed to be addressed. I wish there were a successful turnaround story for this project, but shortly after the assessment, the organization went through another round of significant layoffs and the project was cancelled.

Please take these lessons from another company and apply them to your own organization. If these issues can happen at a globally recognizable company, they can happen anywhere. Technically, this group was incredibly talented and had all the pieces needed to succeed. It was the fearful culture, siloed management, and lack of governance that drove their issues. In many failures, the non-technical pieces are the most difficult to get right.

If you have questions about data management, data migration, data governance, building data cultures, or you just love data, let’s get together. My email is steve.novak@definian.com.

7 Barriers to Successful Data Migration

Successful completion of a data transformation and ERP (Enterprise Resource Planning) implementation will provide huge advantages to your organization. These include optimized business processes, better reporting capabilities, robust security, and more. But before you can realize these benefits, your organization must rise to the challenges ahead.

Below, we share 7 noteworthy obstacles to successful data migration, culled from our decades of experience converting data to Workday, Oracle Cloud ERP, Oracle EBS, Infor, MS Dynamics, and other technologies.

1. Difficult Legacy Data Extraction

Logically, one of the first steps in data migration is determining which legacy data sources need to be brought into the new system. Once this scope is determined, it is crucial to decide how that information will be extracted. Extraction may be straightforward for some data sources but onerous for others, including sources hosted by third-party vendors or data stored on older mainframe servers.

To facilitate legacy data extraction, ask yourself and your team the following questions:

- How well do we know our existing data system?

- Which members of our organization have intimate knowledge of our system’s nuances and current usage?

Definian uses our central data repository, Applaud®, to house data from each of your distinct legacy data sources. We can also connect directly to ODBC data sources, seamlessly translate EBCDIC data, build utilities to pull data from many cloud data sources, and quickly incorporate new data sources as they emerge from your shadow IT (Information Technology) infrastructure.
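To illustrate what translating EBCDIC data involves, here is a generic Python sketch (not Applaud®). It decodes a mainframe record with Python's built-in `cp037` codec, which covers the US/Canada EBCDIC code page, and unpacks a COBOL packed-decimal (COMP-3) amount. The record layout is hypothetical, and other EBCDIC code pages exist. The sign handling assumes the common convention of C or F for positive and D for negative.

```python
from decimal import Decimal

def decode_ebcdic_text(raw: bytes) -> str:
    """Decode an EBCDIC (code page 037) text field and trim trailing pad spaces."""
    return raw.decode("cp037").rstrip()

def unpack_comp3(raw: bytes, scale: int = 0) -> Decimal:
    """Decode a COBOL packed-decimal (COMP-3) field with `scale` implied decimals."""
    nibbles = []
    for byte in raw:
        nibbles.extend((byte >> 4, byte & 0x0F))
    *digits, sign = nibbles  # the last nibble holds the sign
    if sign not in (0x0C, 0x0D, 0x0F) or any(d > 9 for d in digits):
        raise ValueError(f"not a valid packed-decimal field: {raw.hex()}")
    value = int("".join(str(d) for d in digits))
    if sign == 0x0D:
        value = -value
    return Decimal(value).scaleb(-scale)

# Hypothetical 12-byte record: PIC X(8) customer code, then PIC S9(5)V99 COMP-3.
record = bytes.fromhex("C3E4E2E3F0F0F140" + "0012345C")
customer = decode_ebcdic_text(record[:8])
amount = unpack_comp3(record[8:12], scale=2)
```

In practice, the field offsets and scales come from the COBOL copybook for each file.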

2. Decision Making and Mapping Specification Challenges

To make your company’s existing data compatible with your new ERP solution, hundreds of tables and fields will need to be mapped and transformed from your “legacy” system to your “target” system. It is important to consider what data you want to appear in your target system. Do you need to bring over certain historical data? Do you want terminated employees or closed purchase orders to exist on your new platform? These are examples of questions that you should ask (and answer) during requirements gathering. These decisions require input from both the technical team and subject matter experts (SMEs) to ensure overall accuracy in conversion and functionality. Note that these specifications can evolve throughout test cycles or as new requirements are understood and progressively elaborated.

Additionally, legacy-to-target crosswalks are necessary to map existing values into their accepted equivalents in the new system. This can span everything from simple translations for country codes and pay terms to an entire revamp of your company’s Chart of Accounts. These crosswalks also change as the project progresses and the final solution begins to take form.
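A simple crosswalk can be sketched as a lookup table plus a record of every legacy value it could not map. The unmapped list is what goes back to the business for a decision. The crosswalk entries and legacy field names below are hypothetical.

```python
# Hypothetical crosswalks; real entries come from the mapping specification.
COUNTRY_XWALK = {"USA": "US", "U.S.": "US", "CAN": "CA", "UK": "GB"}
PAY_TERM_XWALK = {"N30": "NET30", "NET 30": "NET30", "N45": "NET45"}

def translate(value, crosswalk: dict[str, str], field: str,
              unmapped: set[tuple[str, str]]):
    """Map a legacy value to its target equivalent, recording any gaps."""
    key = (value or "").strip().upper()
    if key in crosswalk:
        return crosswalk[key]
    unmapped.add((field, key))  # surfaced for review instead of silently dropped
    return None

def convert_supplier(legacy: dict, unmapped: set[tuple[str, str]]) -> dict:
    return {
        "supplier_id": legacy["id"],
        "country": translate(legacy["country"], COUNTRY_XWALK, "country", unmapped),
        "pay_term": translate(legacy["terms"], PAY_TERM_XWALK, "pay_term", unmapped),
    }

unmapped: set[tuple[str, str]] = set()
ok = convert_supplier({"id": "V1", "country": "usa ", "terms": "net 30"}, unmapped)
gap = convert_supplier({"id": "V2", "country": "MEX", "terms": "N30"}, unmapped)
```

Keeping the crosswalk as data instead of code makes it easy to update as the target design evolves between test cycles.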

Definian helps facilitate decision-making with decades of data migration experience. We document field-by-field transformations in our Mapping Specification documents; these mappings are archived, updated, and saved as requirements change throughout the project.

3. Complexity of Selection and Transformation Logic

Complex conversion logic is often necessary to get the desired outcome in the new data system. For example, selection logic for Suppliers, Purchase Orders, and Accounts Payable Invoices is closely interrelated and may need to address outliers like prospective suppliers, invoices on hold, and unreconciled checks. Failure to handle every data situation could leave open transactions orphaned in the legacy system and cause difficulties passing audits. Additionally, fields required in the target system might not exist in the legacy system and could require a complex derivation from several related fields. To document the mapping specification effectively, your organization’s technical and functional teams both need to provide insight into what is needed from each converted record.
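The interrelated selection logic described above can be sketched as follows. The rule is hypothetical: convert active suppliers, plus any supplier still referenced by an open PO or an open or on-hold invoice, so that no open transaction is stranded. Real rules come from the mapping specification. The sketch also reports open transactions that point at suppliers missing from the supplier file.

```python
from dataclasses import dataclass

@dataclass
class Supplier:
    supplier_id: str
    status: str  # hypothetical codes: "ACTIVE", "INACTIVE", "PROSPECT"

def select_suppliers(suppliers: list[Supplier],
                     open_po_supplier_ids: set[str],
                     open_invoice_supplier_ids: set[str]) -> tuple[list[str], list[str]]:
    """Return (supplier IDs to convert, referenced IDs with no supplier record)."""
    referenced = set(open_po_supplier_ids) | set(open_invoice_supplier_ids)
    # Inactive suppliers and prospects are kept only if open transactions need them.
    selected = [s.supplier_id for s in suppliers
                if s.status == "ACTIVE" or s.supplier_id in referenced]
    known = {s.supplier_id for s in suppliers}
    orphans = sorted(referenced - known)
    return selected, orphans

suppliers = [Supplier("S1", "ACTIVE"), Supplier("S2", "INACTIVE"),
             Supplier("S3", "PROSPECT"), Supplier("S4", "INACTIVE")]
selected, orphans = select_suppliers(suppliers, {"S2"}, {"S9"})
```

S2 is inactive but still converted because it has an open PO. S9 appears only on an invoice, so it is flagged for investigation before the invoice can be converted.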

Definian’s Applaud® software is tried and true for effective coding of data transformation logic. We systematically process data on a record-by-record basis using predetermined requirements and mappings. The output data is in a ready-to-load format compatible with your new data solution.

4. Communication Gaps

Communication between your organization’s functional and technical resources, the data conversion team, and the System Integrator can be challenging. It is especially important for data migration projects to ensure that all team members are aligned for the highest level of data accuracy in the new system. Miscommunications across the project team can lead to data errors and project delays. It is crucial that all team members know the current status of each conversion within the data conversion domain. Cross-functional issues related to mapping decisions, acceptable values for fields, and functionality within the new data system arise all the time. Communication within cross-functional meetings is constant and is necessary to ensure that each component of your data conversion team is on the same page.

Definian stays on top of all data conversion tasks to ensure that each party knows its current status and pending decisions. We actively track each data conversion object to ensure that all necessary meetings are scheduled, and follow-up items are addressed. No matter the medium, we pursue issues until we achieve resolution and document pertinent decisions made in the process.

5. Difficulty Validating Converted Data

After data has been prepared according to the conversion requirements, there needs to be a way to validate that it was handled accurately (according to the requirements) and is fit for its purpose (fills business needs). This process can be obstructed by the limited availability of resources across your organization and by uncertainty about what to look for and approve. It can also be difficult to compare legacy source data with the corresponding converted data, because selection logic and mapping logic can be difficult to fully replicate.

Definian produces error reports for each conversion detailing missing or unmapped fields, unacceptable values, and more. We also produce validation reports that use a 3-way match to compare each record between the legacy data, target table, and extract provided by the System Integrator. These files aid our clients in identifying what fields need to be cleaned up or modified to have a successful load.
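A 3-way match can be sketched as a keyed comparison across the three datasets. This minimal version assumes the legacy side has already been run through the same selection and mapping rules, so its values are "expected" target values. The datasets and field names are hypothetical.

```python
def three_way_match(legacy_expected: dict[str, dict], target: dict[str, dict],
                    si_extract: dict[str, dict], fields: list[str]) -> list[str]:
    """Compare each record by key across legacy, target table, and SI extract."""
    issues: list[str] = []
    for key in sorted(set(legacy_expected) | set(target) | set(si_extract)):
        sources = {"legacy": legacy_expected.get(key),
                   "target": target.get(key),
                   "si_extract": si_extract.get(key)}
        missing = [name for name, rec in sources.items() if rec is None]
        if missing:
            issues.append(f"{key}: missing from {', '.join(missing)}")
            continue
        for field in fields:
            values = {name: rec.get(field) for name, rec in sources.items()}
            if len(set(values.values())) > 1:  # any disagreement among the three
                issues.append(f"{key}.{field}: {values}")
    return issues

legacy = {"C1": {"name": "Acme", "country": "US"},
          "C2": {"name": "Beta", "country": "CA"},
          "C3": {"name": "Gamma", "country": "US"}}
target = {"C1": {"name": "Acme", "country": "US"},
          "C2": {"name": "Beta", "country": "US"}}
si = {"C1": {"name": "Acme", "country": "US"},
      "C2": {"name": "Beta", "country": "CA"}}
issues = three_way_match(legacy, target, si, ["name", "country"])
```

Each issue pinpoints both the record and the field, so reviewers can go straight to the rule or source value that needs attention.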

6. Limited Knowledge of Target System

Clients usually do not have upfront, advanced knowledge of or experience with the data system they are implementing. Your organization may often be unfamiliar with the data migration process as well as certain nuances of your new data solution. Each data system has its own requirements for a successful data load and its own front-end functionality. The project team needs to understand both to map, test, and validate the converted data.

Definian draws on system-by-system validations built over years of successful project implementations. Our consultants have years of experience with a given target system, guiding our clients toward an understanding of the nuances of their new data solution and helping them achieve their overall project objectives.

7. Project Delays

Many factors can contribute to project delays, including a lack of available resources, difficulty understanding data requirements, or an unreasonable or compressed timeline. Once delays occur, they often increase costs for your organization and can disrupt the overall rollout of your new data solution. Some implementations never fully recover from delays and the associated costs, leaving them ill-prepared to move to their new data system (if they move at all).

Definian is a Client First organization. Our skilled consultants go above and beyond to make sure our data migration tasks are completed on time and with great accuracy.

Celebrating Success

If you can overcome these obstacles (and potentially others), you will be on your way to a successful data migration project. You and your team will expend effort, time, and resources throughout the implementation process, so it is important to reap the benefits of your new data system after go-live! Make sure you celebrate your success and remember the challenges you had to overcome to get here. Congratulations!

Smart Meters Implementation using Informatica

Smart meters track the energy consumption of the system to which they are connected. They record the power consumed and send real-time data that can be used to generate insights on energy optimization. They also help manage the power consumed by each customer and can be controlled remotely. Data governance around smart meters using Informatica involves implementing processes and policies to ensure the quality, security, and compliance of the data that smart meters generate.

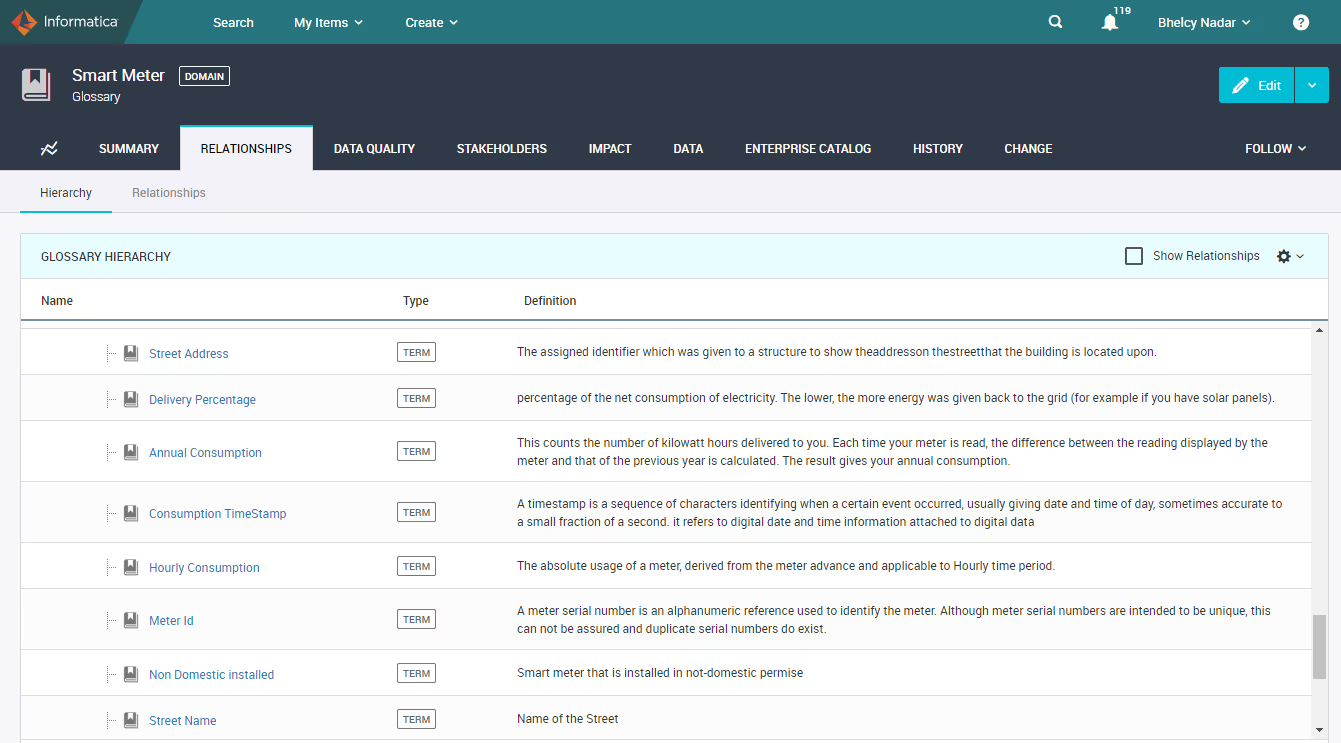

Using Informatica tools, we can define the business glossaries related to smart meters in Informatica Axon, associate those terms with technical metadata (tables, columns) from Informatica Enterprise Data Catalog, and view lineage showing how smart meter data moves across source and target platforms such as file systems and databases. Figure 1 shows the glossary taxonomy created in Informatica Axon.

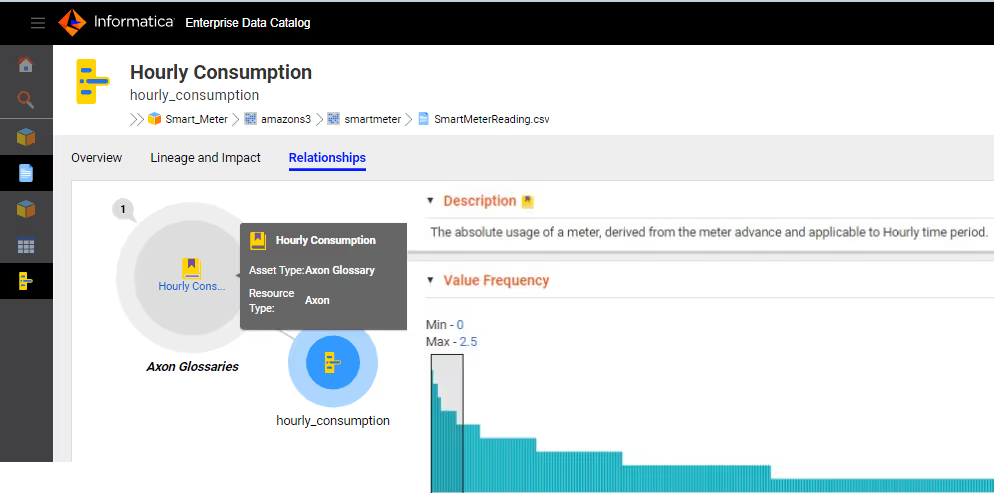

Figure 2 shows the Axon business term Hourly Consumption associated, as the business title, with the corresponding technical field in Informatica Enterprise Data Catalog. This association can be made manually or by enabling business glossary association. The figure also shows the description populated from Axon and the value frequency of the data.

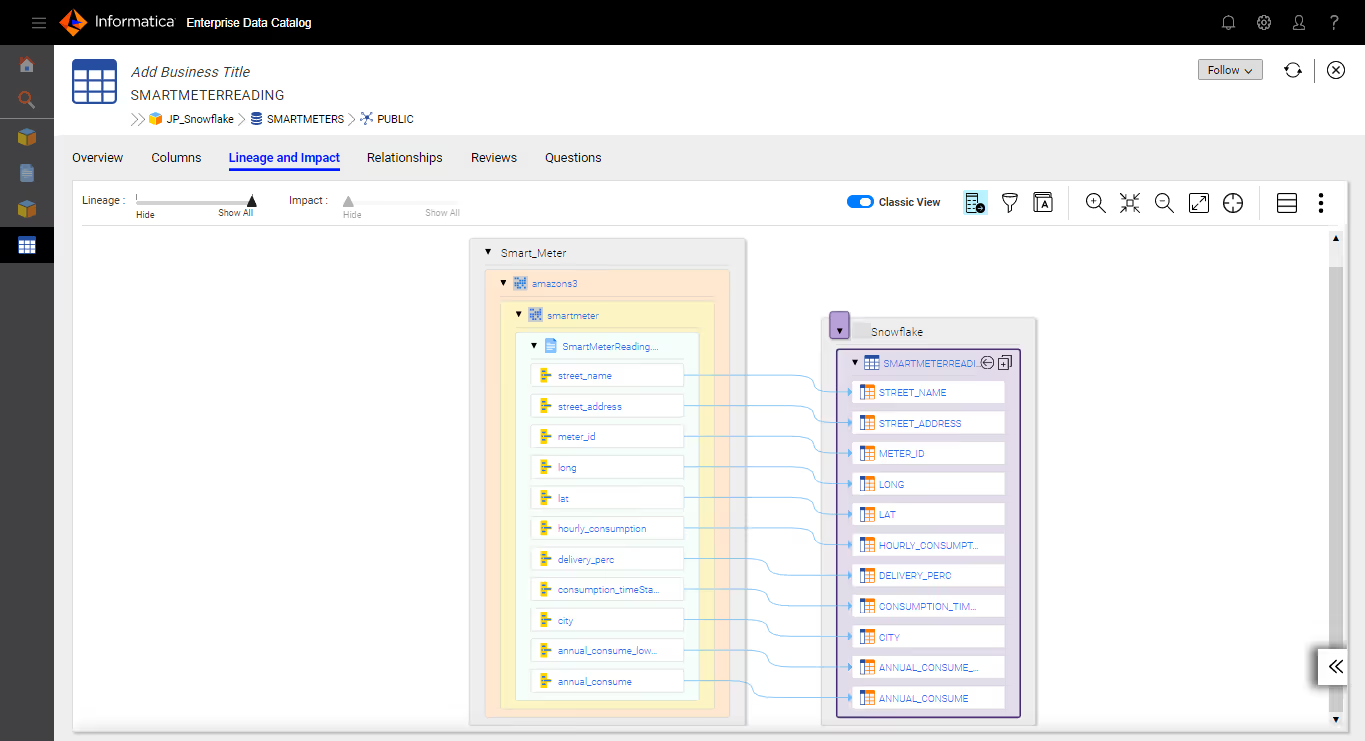

Figure 3 shows lineage and impact analysis between the AWS S3 file system and Snowflake in Informatica Enterprise Data Catalog.

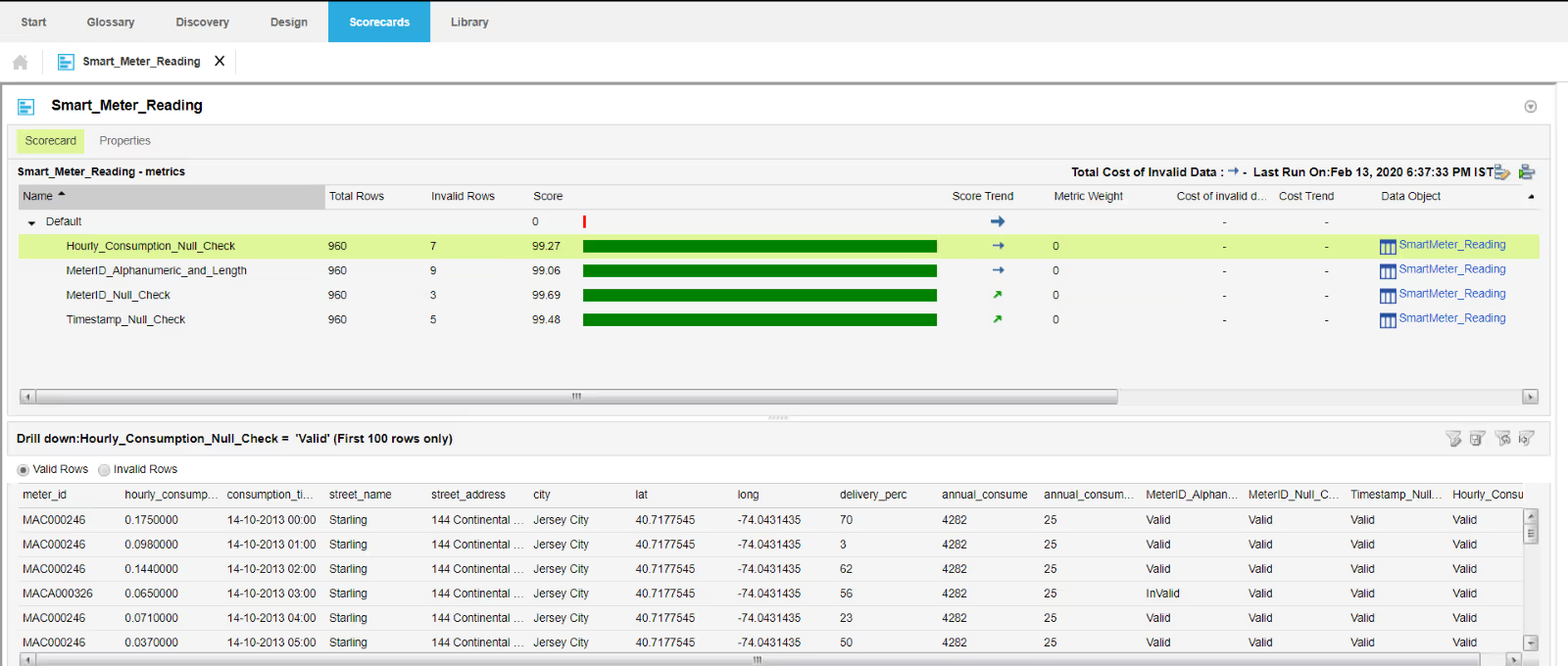
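Conceptually, impact analysis is a downstream traversal of the lineage graph. The sketch below performs a breadth-first walk over a hand-written edge list. It does not call any Informatica API. The S3 path and Snowflake object names are hypothetical; in practice, the edges would come from the catalog.

```python
from collections import deque

# Hypothetical lineage edges: each asset maps to the assets fed by it.
LINEAGE = {
    "s3://meter-landing/hourly_reads.csv": ["snowflake.RAW.HOURLY_READS"],
    "snowflake.RAW.HOURLY_READS": ["snowflake.CURATED.DAILY_USAGE",
                                   "snowflake.CURATED.OUTAGE_EVENTS"],
    "snowflake.CURATED.DAILY_USAGE": ["snowflake.MART.BILLING_SUMMARY"],
}

def downstream_impact(asset: str, lineage: dict[str, list[str]]) -> list[str]:
    """Every asset reachable downstream of `asset`, nearest first."""
    seen: set[str] = set()
    order: list[str] = []
    queue = deque([asset])
    while queue:
        node = queue.popleft()
        for child in lineage.get(node, []):
            if child not in seen:
                seen.add(child)
                order.append(child)
                queue.append(child)
    return order

impacted = downstream_impact("s3://meter-landing/hourly_reads.csv", LINEAGE)
```

A change to the landing file's layout would then flag the raw table, both curated tables, and the billing mart for review.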

One of the most important functions of smart meters is recording each consumer's hourly consumption. This data is essential because it allows the electricity supply to be monitored. Using Informatica Big Data Quality, we can profile the dataset and execute technical data quality rules to monitor and identify exceptions within the data sets. The technical data quality rule defined in Informatica Data Quality uses an expression to validate the data. This rule is linked to a local data quality rule created in Informatica Axon, whose results are updated on a schedule. If there is an outage in the supply, the data quality rule recognizes the exception, and the result is updated in the local data quality rule in Informatica Axon. The change in result triggers a workflow that notifies the data steward of the exception. Figure 4 shows the exceptions generated in Informatica's Data Quality platform.

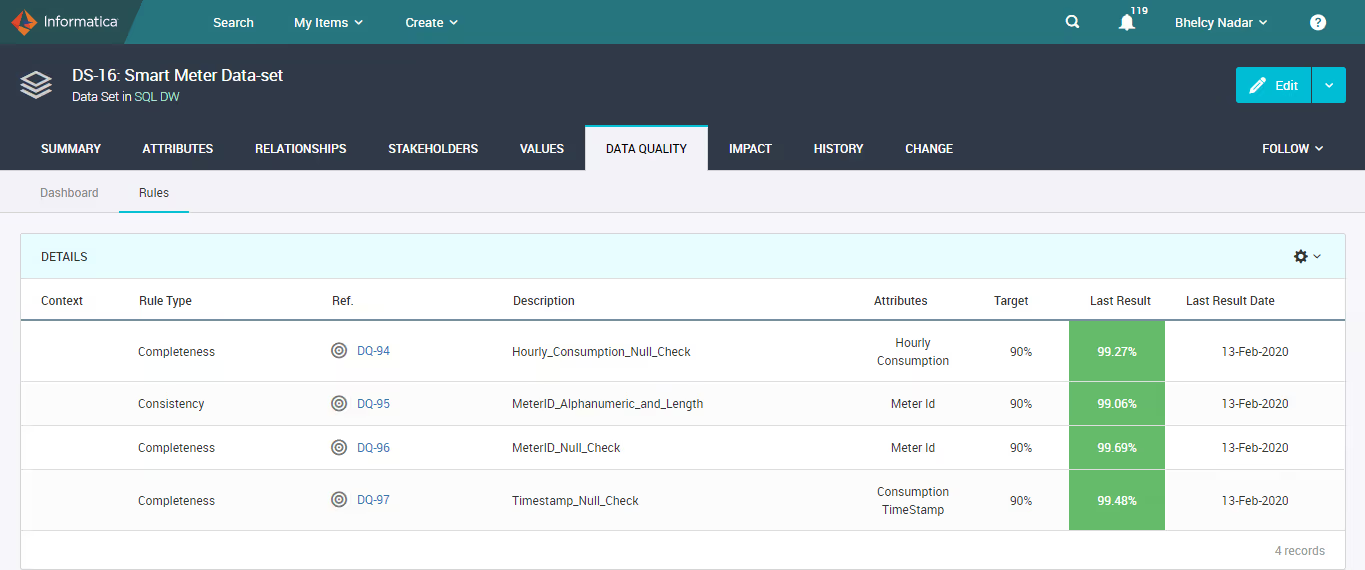

Figure 5 shows the results updated in the Axon local data quality rule.

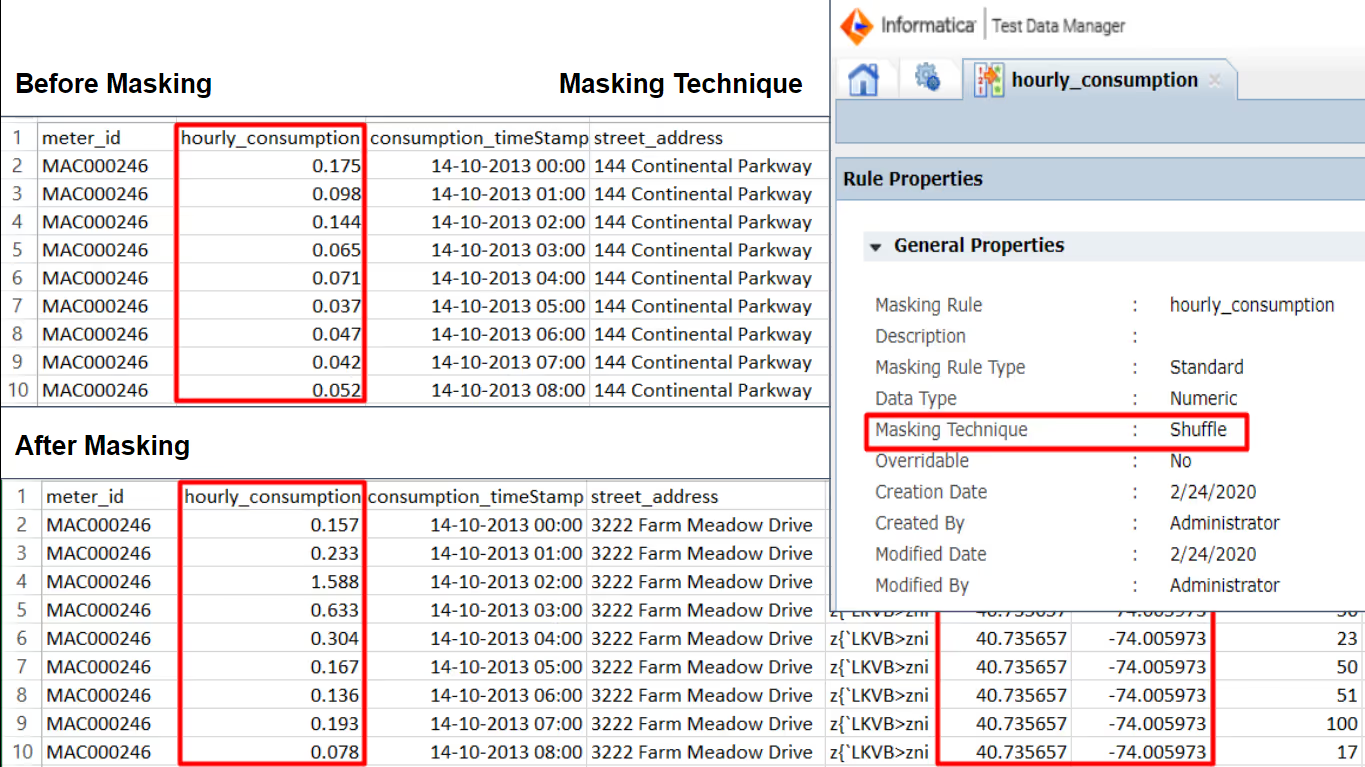
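For readers outside the Informatica toolset, the logic of such a rule can be approximated in plain Python. This is not Informatica rule syntax. It is a sketch that assumes one reading per meter per hour, treats a missing hour as a possible outage, and treats a negative reading as invalid. The meter ID and readings are hypothetical.

```python
from datetime import datetime, timedelta

def hourly_exceptions(meter_id: str, readings: dict[datetime, float],
                      day_start: datetime) -> list[str]:
    """Check one meter's 24 hourly readings; return one message per exception."""
    exceptions: list[str] = []
    for hour in range(24):
        ts = day_start + timedelta(hours=hour)
        kwh = readings.get(ts)
        if kwh is None:
            # A gap in hourly consumption may indicate a supply outage.
            exceptions.append(f"{meter_id} {ts:%Y-%m-%d %H:%M}: missing reading")
        elif kwh < 0:
            exceptions.append(f"{meter_id} {ts:%Y-%m-%d %H:%M}: negative consumption {kwh}")
    return exceptions

# Hypothetical day: 03:00 and 04:00 are missing, 10:00 is negative.
day = datetime(2023, 1, 1)
readings = {day + timedelta(hours=h): 1.2 for h in range(24) if h not in (3, 4)}
readings[day + timedelta(hours=10)] = -0.5
found = hourly_exceptions("MTR-0001", readings, day)
```

Each message corresponds to one exception record that a stewardship workflow could route for follow-up.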

Smart meter data includes consumer information, such as the meter ID and the subscriber’s usage patterns, which is highly sensitive. Using Informatica Data Privacy Management, we can scan the data and flag this information as sensitive. This helps identify where the sensitive data resides and supports masking it with extensions created for Informatica Test Data Management. Figure 6 shows the masking technique and the data before and after masking.
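One common masking technique for identifiers is deterministic pseudonymization with a keyed hash. It is not necessarily the technique shown in Figure 6. The sketch below uses Python's standard `hmac` module with SHA-256. The `MTR-` prefix, the 12-character truncation, and the record layout are hypothetical choices.

```python
import hashlib
import hmac

def mask_meter_id(meter_id: str, secret_key: bytes) -> str:
    """Replace a meter ID with a keyed-hash pseudonym.

    The same ID and key always produce the same output, so masked tables can
    still be joined on the masked ID. Unlike a plain hash, the mapping cannot
    be rebuilt by hashing a list of known meter IDs unless the key is known.
    """
    digest = hmac.new(secret_key, meter_id.encode("utf-8"), hashlib.sha256).hexdigest()
    # Truncated for readability; keep more characters if collisions are a concern.
    return "MTR-" + digest[:12].upper()

def mask_record(record: dict, secret_key: bytes) -> dict:
    """Copy a reading record with only the meter ID masked."""
    masked = dict(record)
    masked["meter_id"] = mask_meter_id(record["meter_id"], secret_key)
    return masked

key = b"demo-key-store-securely"
masked = mask_record({"meter_id": "M-001", "kwh": 1.2}, key)
```

Consumption values stay intact for testing, while the identifier no longer reveals which subscriber the readings belong to.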

Behind the Scenes: 7 Things We Do Before a Successful Test Cycle

Making a first data conversion test cycle a success requires good planning and proactive work. Here, we take you behind the scenes of 7 key steps we take at Definian to help make your test cycle a success.

1. Identify similarities

Serving as a strategic partner for clients with multi-year, multi-division ERP rollouts often means we encounter similar requirements across different parts of the business. To minimize cost and accelerate development, we review existing documents and conversion code and leverage as much prior art as possible when building something new for the client. It helps that our main competitive differentiator, Applaud®, is a rapid application development environment that enables our team to centralize development and quickly identify and reuse relevant code.

Business Challenge: Definian converted Customer Master data for multiple sites of a single client over several years. Each site’s Customer Master came from the same legacy system, and most of the conversion requirements and programming were reusable from site to site. Then a new set of sites came into scope whose Customer Master was entirely different from that of the earlier sites. At the same time, drivers related to the load application were updated, and these updates broke the existing process for loading data into the target database, forcing a switch to a new load application. The client faced the challenge of maintaining its aggressive go-live schedule while addressing the complexities of the newly introduced legacy system and the technical changes to the load process. Definian's ability to test load processes ahead of time prevented unexpected issues from emerging during the first test cycle for the new sites.

2. Generate “pre-load” conversion error reports

Another competitive differentiator for Definian is our repository of “critical-to-quality” (CTQ) checks that identify and report data issues. Our CTQ tool validates that data is structurally valid and fits the target configuration. As a basic example, it validates that customer site-level information correctly ties to the customer header and that the customer's pay term is configured. These CTQ checks identify data issues before we even push data to the target system, and they are carried forward from project to project to decrease development time and improve results for our clients. As a result, we can quickly identify numerous issues that need to be addressed. By contrast, standard load programs typically identify only one issue at a time and must be rerun after each successive issue is solved.
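The two example checks can be sketched generically. This is not Definian's CTQ tool. The sketch checks that every site ties to a customer header and that each header's pay term exists in a hypothetical set of configured values. Unlike a typical load program, it collects every issue in one pass instead of stopping at the first.

```python
# Hypothetical pay terms configured in the target system.
CONFIGURED_PAY_TERMS = {"NET30", "NET45"}

def ctq_checks(headers: list[dict], sites: list[dict]) -> list[str]:
    """Run all checks and return every issue found, not just the first."""
    issues: list[str] = []
    header_ids = {h["customer_id"] for h in headers}
    for h in headers:
        if h.get("pay_term") not in CONFIGURED_PAY_TERMS:
            issues.append(f"customer {h['customer_id']}: pay term "
                          f"{h.get('pay_term')!r} not configured")
    for s in sites:
        if s["customer_id"] not in header_ids:
            issues.append(f"site {s['site_id']}: no matching customer header "
                          f"{s['customer_id']}")
    return issues

headers = [{"customer_id": "C1", "pay_term": "NET30"},
           {"customer_id": "C2", "pay_term": "N60"}]
sites = [{"site_id": "S1", "customer_id": "C1"},
         {"site_id": "S2", "customer_id": "C9"}]
report = ctq_checks(headers, sites)
```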

3. Spearhead data cleanup activities

To maximize the success of your test cycle, you first need to improve the quality of the data. The legacy system is rife with issues, which is often one of the reasons for implementing a new ERP solution. Definian’s best-practices approach to data migration integrates data profiling, analysis, and cleansing with the data conversion itself. Many data cleanup activities can be fully automated in the conversion code, so the cleansed data appears in the target system. Automated cleansing could include translating atypical code values to the desired target value or merging duplicate customer or supplier records. Other cleanup activities can be reported before and during the test cycle for manual cleanup in the legacy system, which often drives business value. Examples of manual cleanup activities include closing old orders or invoices that were left open and filling in required fields.
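Merging duplicates can be sketched as grouping records on a normalized match key and keeping one survivor per group. The match key here (name without punctuation or common legal suffixes, plus postal code) and the survivor rule (lowest legacy ID wins) are hypothetical simplifications. Real matching rules are usually agreed with the business. The sketch also returns a legacy-to-survivor crosswalk, so transactions that reference a merged record can be repointed.

```python
import re
from collections import defaultdict

def match_key(record: dict) -> tuple[str, str]:
    """Normalized name plus postal code as a simple duplicate-match key."""
    name = re.sub(r"[^A-Z0-9 ]", "", record["name"].upper())
    name = re.sub(r"\b(INC|LLC|CORP|CO)\b", "", name)  # drop common legal suffixes
    return " ".join(name.split()), record["postal_code"].replace(" ", "")

def merge_duplicates(records: list[dict]) -> tuple[list[dict], dict[str, str]]:
    """Return surviving records and a legacy-ID-to-survivor-ID crosswalk."""
    groups: dict[tuple[str, str], list[dict]] = defaultdict(list)
    for record in records:
        groups[match_key(record)].append(record)
    survivors: list[dict] = []
    crosswalk: dict[str, str] = {}
    for group in groups.values():
        survivor = min(group, key=lambda r: r["id"])  # hypothetical survivor rule
        survivors.append(survivor)
        for record in group:
            crosswalk[record["id"]] = survivor["id"]
    return survivors, crosswalk

vendors = [{"id": "V100", "name": "Acme Steel, Inc.", "postal_code": "60601"},
           {"id": "V205", "name": "ACME STEEL INC", "postal_code": "60601"},
           {"id": "V300", "name": "Beta Metals LLC", "postal_code": "44101"}]
survivors, vendor_xwalk = merge_duplicates(vendors)
```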

4. Mockup records for test loads before development is complete

While conversions are still being developed and legacy data is being cleaned up, another key activity is mocking up sample data to test the load process. This step reveals any additional dependencies or configurations that have not yet been identified. Dependencies are critical to an implementation because any delay to master data (or to any object with downstream dependencies) will delay later conversions. For instance, Sales Orders cannot be loaded until Customers are successfully loaded.
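Load dependencies like "Customers before Sales Orders" form a graph, and Python's standard `graphlib` module can group conversion objects into load waves. Each wave can run once the earlier waves have loaded successfully. The object names and dependencies below are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical conversion objects mapped to the objects they depend on.
DEPENDENCIES = {
    "customers": {"pay_terms"},
    "customer_sites": {"customers"},
    "items": set(),
    "sales_orders": {"customers", "customer_sites", "items"},
    "ar_invoices": {"customers", "customer_sites"},
}

def load_waves(deps: dict[str, set[str]]) -> list[list[str]]:
    """Group objects into waves; every object's prerequisites load in earlier waves."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # also raises CycleError if the dependencies are circular
    waves: list[list[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves

waves = load_waves(DEPENDENCIES)
```

The result also shows why a delay in a master data object such as customers pushes back every later wave.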

Business Challenge: Load requirements are not always clear. In one example, a client used alphanumeric identifiers for its Accounts Receivable (AR) transactions. The alpha characters were an important feature of the legacy identifier because they classified the transactions and drove business processes, and the division considered the letter a required attribute. The target transaction identifier was also defined as a character field, so it seemed there would be no issue with retaining the original alphanumeric values. However, Definian’s preemptive load tests discovered that the load program pushed the transaction identifier into a second field, a document number, which was defined as numeric. This meant that document “numbers” containing letters would fail to load. Testing the load program before the test cycle gave us the opportunity to address this issue and work out a solution for the client. Had the load program not been tested until the integration test cycle, AR would not have been ready for the integration test.
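Once a mock load exposes this kind of behavior, it can be encoded as a pre-load check so that every record is screened before the real test cycle. The field definitions and the copy rule below are hypothetical stand-ins for what the load program was observed to do.

```python
# Hypothetical target field definitions learned from a mock load.
TARGET_FIELDS = {
    "transaction_id": {"type": "char", "max_len": 20},
    "document_number": {"type": "numeric", "max_len": 15},
}
# Observed load-program behavior: transaction_id is also written to document_number.
FIELD_COPIES = {"transaction_id": ["document_number"]}

def field_errors(value: str, spec: dict) -> list[str]:
    errors: list[str] = []
    if len(value) > spec["max_len"]:
        errors.append(f"longer than {spec['max_len']}")
    if spec["type"] == "numeric" and not value.isdigit():
        errors.append("not numeric")
    return errors

def check_mock_record(record: dict) -> list[str]:
    """Expand the record as the load program would, then validate every field."""
    expanded = dict(record)
    for source, targets in FIELD_COPIES.items():
        for target in targets:
            expanded[target] = record[source]
    return [f"{name}={value!r}: {err}"
            for name, value in expanded.items()
            for err in field_errors(value, TARGET_FIELDS[name])]
```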

5. Report and escalate issues and new questions arising from test loads

Documenting findings, noting configuration gaps, and identifying necessary scope changes are all part of the progressive elaboration of a project. This part of the process will vary based on what tools are available, but no matter the method, it is important to track issues and decisions to help with successive test runs. Inevitably, questions will arise later in the project about why something is being done the way it is, and a test management tool (e.g., Jira, HPQC, or DevOps) will have the answer.

6. Facilitate client review of data before it is loaded

When it comes to full test cycles, it is critical for the business team to review the data to make sure that what is being loaded matches expectations. Inadequate testing is one of the primary sources of project failure, which is why we serve as our client’s partner throughout testing and review. Definian speeds this process by providing a series of reports that connect the dots between the legacy and target systems. These usually include an extract of the data being loaded, in Excel format, with key legacy fields and descriptions for key coded fields, along with a record count breakdown and error reporting. Ultimately, this approach demonstrates that converted data fits the requirements, reconciles back to the legacy data source, and satisfies auditors.
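A record count breakdown can be sketched as assigning every legacy record exactly one outcome, so the categories always add back up to the legacy total. Any record without an outcome is surfaced as "unaccounted" rather than silently dropped. The outcome labels and IDs are hypothetical.

```python
from collections import Counter

def count_breakdown(legacy_ids: list[str], outcomes: dict[str, str]) -> dict[str, int]:
    """Account for every legacy record; anything without an outcome is flagged."""
    breakdown = Counter(outcomes.get(record_id, "unaccounted") for record_id in legacy_ids)
    breakdown["legacy_total"] = len(legacy_ids)
    return dict(breakdown)

# Hypothetical outcomes recorded by the conversion for each legacy record ID.
summary = count_breakdown(["A", "B", "C", "D"],
                          {"A": "loaded", "B": "loaded", "C": "excluded"})
```

A nonzero "unaccounted" count is exactly the kind of gap an auditor will ask about, so it is worth resolving before the data is loaded.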

7. Optimize runtime performance

Even in the earliest phases of the project, our team is already thinking about cutover and go-live. To accommodate aggressive schedules and minimize business disruption, we optimize the conversion process to run as quickly and seamlessly as possible. This often requires parallelizing high-volume portions of the data extraction from the legacy system and of the data load to the target system. Sometimes system configuration affects load throughput, and fine-tuning batch sizes can save precious hours in the cutover schedule. Whatever it takes, we understand the importance of a timely go-live and strive to keep the business shutdown as brief as we can safely accommodate.
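Parallelizing a high-volume load usually means splitting records into batches and sending several batches at once. The sketch below uses the standard `concurrent.futures` thread pool, which suits I/O-bound calls to a load interface. `load_batch` is a hypothetical placeholder for the real target-system call. The batch size and worker count are the tuning knobs the paragraph mentions; the target system may limit how many concurrent loads it accepts.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import islice
from typing import Iterable, Iterator

def batches(records: Iterable, size: int) -> Iterator[list]:
    """Yield consecutive lists of at most `size` records."""
    it = iter(records)
    while chunk := list(islice(it, size)):
        yield chunk

def load_batch(batch: list) -> int:
    """Hypothetical stand-in for the target system's load call; returns rows loaded."""
    return len(batch)

def parallel_load(records: Iterable, batch_size: int = 500, workers: int = 4) -> int:
    """Load batches concurrently and return the total number of rows loaded."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(load_batch, batches(records, batch_size)))

total_loaded = parallel_load(range(1234), batch_size=500)
```

Timing a few combinations of `batch_size` and `workers` during early test cycles gives the data needed to plan the cutover window.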

Enter your test cycle with confidence and focus

By carrying out the preparatory steps listed above, Definian minimizes data risk in your transformation project. This allows the business to focus on learning and optimizing the new ERP system instead of chasing data issues. Fewer distractions for the wider project teams mean that more time can be spent on functionality, configuration, training, and the future of your enterprise.