Articles & case studies

Developing a Data Strategy and Data Observability Capabilities to Address Data Risk Factors for a Leading Airline

Background

After resolving an unauthorized data incident that was reported on the nightly news, our Client, the CISO of an airline, needed to prevent future breaches by getting their arms around data risk across the enterprise.

To identify PII and confidential data distributed across many sources in a dynamic data landscape, the airline needed to understand where those critical and sensitive data assets reside and develop capabilities that identify and monitor data access gaps as they arise.

Four objectives needed to be met to complete this initiative.

- Catalog sensitive data across structured and unstructured data throughout the organization.

- Identify and resolve any gaps in the data access controls within Azure.

- Create a process that constantly monitors data risk and pushes out alerts when a critical gap is detected.

- Create an observability portal that shows an at-a-glance data risk score, the current gaps, the severity of each gap, and a mechanism for correcting each gap.

Why Definian

Our Client chose Definian as their partner for this sensitive work because of our expertise in data strategy for major financial institutions and our deep technical knowledge in building underlying connectors for leading data platforms.

The Work

To meet the first objective, BigID was deployed to scan, discover, and catalog the sensitive data. BigID is the leading product in automated data discovery and classification and was the standout choice for uncovering and monitoring unstructured and structured data throughout the organization.

With the sensitive data catalog in hand, we reviewed the access controls within Azure and immediately began to address any data sensitivity access gaps. As we resolved the gaps in the technical controls, we worked with our Client to address the data governance processes that led to the gaps.

With the current gaps resolved, we developed a process that would provide a proactive backstop to address future issues. There are two components to the backstop. For the first component, Definian integrated Azure data access controls and BigID's ongoing scan results. This integration connects and analyzes the metadata from each solution and pushes out real-time alerts when a critical gap is detected. The second component was to utilize the integration to power a risk observability portal.
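As a rough illustration of the first component, the sketch below joins a sensitivity catalog with access grants and flags any grant that exceeds a principal's clearance. It is a minimal sketch under stated assumptions, not the integration that was built: the data shapes, the `SENSITIVITY_RANK` ordering, and the names `find_access_gaps` and `critical_gaps` are all hypothetical, and no BigID or Azure API is called.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessGap:
    asset: str
    principal: str
    sensitivity: str


# Hypothetical ordering of sensitivity labels: a higher number is more sensitive.
SENSITIVITY_RANK = {"public": 0, "internal": 1, "confidential": 2, "pii": 3}


def find_access_gaps(catalog, grants, clearances):
    """Return a gap for every grant that exceeds the principal's clearance.

    catalog:    asset name -> sensitivity label (stand-in for scan results)
    grants:     iterable of (principal, asset) pairs (stand-in for access-control metadata)
    clearances: principal -> highest sensitivity label that principal may read
    """
    gaps = []
    for principal, asset in grants:
        sensitivity = catalog.get(asset)
        if sensitivity is None:
            continue  # asset not classified yet; ignored in this sketch
        allowed = clearances.get(principal, "public")
        if SENSITIVITY_RANK[sensitivity] > SENSITIVITY_RANK[allowed]:
            gaps.append(AccessGap(asset, principal, sensitivity))
    return gaps


def critical_gaps(gaps, threshold="pii"):
    """Keep only gaps at or above the alerting threshold (assumed to be PII)."""
    floor = SENSITIVITY_RANK[threshold]
    return [g for g in gaps if SENSITIVITY_RANK[g.sensitivity] >= floor]
```

In a real deployment the two inputs would be refreshed from each platform's metadata, and the output of `critical_gaps` would feed whatever alerting channel the organization uses.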

The risk observability portal enabled an at-a-glance assessment of the current data risk levels across the organization. The observability portal has two main features. The first is a risk score calculated by analyzing the various types and numbers of gaps. This score instantly communicates current risk levels and trends. The second feature displays the details behind the gaps and a mechanism to correct them within that single screen.
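One way to picture a score built from the types and numbers of gaps is a capped, severity-weighted sum. The sketch below is an assumption for illustration only; the weights, the cap of 100, and the function names `risk_score` and `trend` are hypothetical and do not describe the portal's actual formula.

```python
# Hypothetical severity weights; real weights would be agreed with the risk owners.
SEVERITY_WEIGHTS = {"low": 1, "medium": 3, "high": 7, "critical": 15}


def risk_score(gap_counts, cap=100):
    """Weighted sum of open gaps by severity, capped so the score stays on a 0-100 scale."""
    raw = sum(SEVERITY_WEIGHTS[severity] * count for severity, count in gap_counts.items())
    return min(raw, cap)


def trend(previous, current):
    """Describe the direction of travel between two consecutive scores."""
    if current > previous:
        return "rising"
    if current < previous:
        return "falling"
    return "flat"
```

For example, two critical gaps and five low-severity gaps give 2 × 15 + 5 × 1 = 35, and closing one critical gap drops the score to 20, which `trend(35, 20)` reports as "falling".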

Results

With this additional observability capability, our Client is confident in always knowing their overall risk exposure. They now have a comprehensive process that tracks the unstructured and structured data across their dynamic landscape and a mechanism for quickly addressing gaps.

Data Governance and Data Quality Program for Retail Holding Company

Background

A rapidly growing Dubai-based retail-oriented holding company faced increased data regulations as it expanded its business interests in the Middle East region. It needed to establish a more robust and proactive Data Governance, Quality, and Privacy program to accelerate compliance with these increased data regulations.

The main objective of this initiative was to establish a Data program across 15 Operating companies with business interests in over 20 countries.

The Challenge

Beyond the organizational complexity of coordinating across the 15 Operating companies, our Client faced a rapidly evolving regulatory landscape around consumer data and privacy. Each operating company had a low data quality maturity that limited the ability to control and prevent known compliance issues. Lastly, the organization needed an in-house capability to set up and operate a complex program and gain support.

Why Definian

Our Client selected Definian to partner with on this initiative because of our experience building and facilitating complex data governance and quality programs. That expertise provided the confidence that Definian could build a flexible, democratized program to keep the organization ahead of their regulation requirements.

Primary Deliverable

As we engaged with our Client, we assessed current maturity levels and cataloged the data governance, privacy, and security requirements. Using the assessment results, we built a hub-and-spoke Data Governance model supporting all business units. This model enabled each business unit to operate independently while meeting common standards established at each hub.

As part of the data governance program's setup, we guided our Client through a data governance and quality platform selection process. Together, we evaluated several alternatives to ensure that the platform aligned with the Client's evolving needs and future vision. Using the platform, Definian connected, scanned, and collected metadata for 109 systems. Through those scans and data governance operations, 600 Critical Data Elements were identified.

With the governance operations and platform operational, the project's next step was to address the significant data quality issues. With limited Client resources, our Client turned to Definian to set up and operate a Data Quality as a Service program. Combining automated tools and Definian's consulting team, we quickly started the data quality improvement process while collaborating on formal organization-specific requirements. Additionally, we rolled out DQ dashboards tailored for each operating company.

Next steps

While much work remains, the organization has significantly increased its data maturity. It's equipped to more readily do business in more countries and adapt to changing regulations. Our Client now also has the data confidence to take advantage of more advanced ML/AI capabilities, which will accelerate their organizational growth.

Premier International (now Definian) Shines in Built In Awards 2024

We’ve got some amazing news to share. 🎉 Premier International (now Definian) has been recognized by Built In, who just announced their 2024 Best Places to Work Awards! And guess what? We didn’t just make the list once—we’ve been recognized in multiple categories, showcasing our commitment to fostering an exceptional work environment and employee-centric culture.

The company has secured coveted positions in the following Built In Awards:

- Chicago's Best Midsize Places to Work (#5)

- Chicago's Best Places to Work (#14)

- US Best Midsize Places to Work (#31)

- US Best Places to Work (#59)

This is truly a testament to the incredible values-driven environment we’ve built together—a place where everyone feels valued, supported, and excited to come to work every day.

What got us here? Well, Built In’s criteria aren’t just about the perks (though our snack game is pretty strong). They look at everything—from how we compensate and support our team to the flexibility we offer and our commitment to diversity, equity, and inclusion.

Built In determines the winners of Best Places to Work based on an algorithm, using company data about compensation and benefits. To reflect the benefits candidates are searching for more frequently on Built In, the program also weighs criteria like remote and flexible work opportunities, programs for DEI and other people-first cultural offerings.

We believe that by attracting and retaining top talent, we are in the best position to serve the clients who rely on us to help with their mission critical data requirements. We are so proud of Built In’s validation of what we have created.

We are always looking for top talent to join our team. If you’re interested in exploring our current openings, please visit our careers page.

From 18 Disparate Systems to a Unified Workday Platform: Digital Transformation for a $3.5B Healthcare Provider

The Challenge

A leading $3.5B healthcare provider in the New England area was operating with 18 fragmented systems across its hospitals and administrative units. Each system managed employee, supply chain, and finance data independently. This led to duplicated records, inconsistent data, and limited visibility into critical information.

Many of these systems were vendor-managed or required manual data extraction, which introduced discrepancies in file structures and frequent delays. To complicate matters further, the organization was preparing for an open enrollment cycle with a new benefits provider. This created an additional layer of risk, with little room for error or delay.

The organization needed to migrate all Human Capital Management (HCM), Payroll (PAY), Supply Chain (SCM), and Finance (FIN) data into a single Workday tenant without disrupting operations, compromising data quality, or overwhelming internal teams.

The Solution

Definian was brought in to lead the full data migration effort, including extraction, cleansing, transformation, and validation. Our team managed data across 18 legacy systems, covering over 16,500 employees, 3,500 contingent workers, and 5,800 suppliers.

We used structured automation, reconciliation reporting, and a clear governance model to guide every phase of the migration. Core steps included:

- Profiling and cleansing legacy data, including addresses and duplicate records

- Automating transformation logic to standardize inconsistent formats

- Running parallel conversion cycles for HCM, PAY, SCM, and FIN to accelerate testing

- Implementing strict file review workflows with vendors and third-party administrators

- Delivering custom validation reports to reconcile legacy and Workday data sets
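The reconciliation step in the list above can be sketched as a keyed comparison between a legacy extract and the loaded target data. This is a minimal sketch, assuming both sides can be reduced to dictionaries keyed by a shared identifier; the function name `reconcile` and the record layout are hypothetical, and it is not the validation reporting used on the project.

```python
def reconcile(legacy, target, fields):
    """Compare two keyed record sets and summarize the differences.

    legacy, target: dict mapping a record key to a dict of field values
    fields:         field names to compare on records present in both sets
    """
    legacy_keys, target_keys = set(legacy), set(target)
    mismatched = {}
    for key in sorted(legacy_keys & target_keys):
        # Record (legacy value, target value) for every field that differs.
        diffs = {
            field: (legacy[key].get(field), target[key].get(field))
            for field in fields
            if legacy[key].get(field) != target[key].get(field)
        }
        if diffs:
            mismatched[key] = diffs
    return {
        "missing_in_target": sorted(legacy_keys - target_keys),
        "unexpected_in_target": sorted(target_keys - legacy_keys),
        "mismatched": mismatched,
    }
```

A report built this way separates records that never loaded from records that loaded with altered values, which tend to need different fixes.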

We also adjusted sequencing to align with the benefits enrollment timeline, running additional test cycles to ensure accuracy without delaying go-live.

The Results

The migration was delivered on time, within budget, and with minimal disruption to internal teams.

Key outcomes included:

- 100% data load success for Core HCM

- 98%+ data load accuracy across all objects

- Successful migration of over 27,000 records, including employees, suppliers, and items

- 1,179 of 1,192 data defects identified during testing were resolved

- Zero disruption to open enrollment activities

- Client teams were able to focus on validation while Definian handled the technical heavy lifting

By working closely with the client’s HR, finance, and supply chain leaders, and collaborating with the global system integrator, Definian ensured the migration aligned with Workday’s delivery methodology and the client's operational goals.

The Impact

The healthcare provider now operates on a unified Workday platform that improves accuracy, transparency, and decision-making across functions. With consistent, high-quality data in place, the organization is better positioned to scale, comply with regulatory standards, and deliver better outcomes for employees and leadership alike.

Client Feedback

- “Couldn’t have asked for a better team on this project.” – SCM Functional Lead

- “Your responsiveness and precision made a huge difference.” – Sourcing Executive

- “Definian’s patience and partnership helped us navigate complex data challenges.” – Assistant Director, HR

Reducing Integration and Analytics Costs for a Fortune 500 Cloud Solutions Provider

Background

A Fortune 500 CRM (Customer Relationship Management) and cloud solutions provider faced the complex challenge of exponential growth in integration, compute, and data warehousing costs caused by the rapid acceleration of data volumes across its data landscape. The organization needed to move its data and analytics from Snowflake to a custom-built Hive solution on the Amazon Web Services (AWS) platform to reduce costs and improve performance.

Why Definian

While the Client has significant in-house data analytics and cloud infrastructure skills, they needed data engineering expertise to complete this initiative in the desired timeframe. The Client had previously worked with Definian on data governance and integration projects and, through that experience, knew Definian had the necessary skills, accelerators, and methods to carry out this project efficiently and effectively. This was validated by Definian's three decades of experience building complex data engineering solutions.

The Project: A Joint Effort Across Four Milestones

Like many large transformative initiatives, this project simultaneously posed significant risk and value. To reduce project risk and maximize value along the way, the initiative was split into four distinct milestones. This modular approach enabled the Client to realize value throughout the initiative without disrupting current processes. It also enabled the Client and Definian to focus their energy on their respective strengths.

Being a pioneer in cloud applications and data modeling, the Client owned the design and development of the new analytics platform. The Client leveraged Definian’s data engineering solutions to minimize development time and maximize data pipeline throughput. While Definian upgraded the data pipelines that fed their analytics platforms, the Client focused on data models and cloud architectures.

Milestone 1: Migrate Jitterbit Integrations to AWS Glue

The project's first milestone focused on replacing approximately 300 Jitterbit integrations that connected the Client's operational data to their primary analytics data stores in Snowflake and Redshift. To help keep this milestone on track, Definian used its integration design frameworks and reference library to reverse engineer the poorly documented legacy Jitterbit integrations and replicate them in AWS Glue.

Milestone 2: Design and Build the Pipelines for the Future State Data and Analytics Platform

While the Client focused on designing and developing the Hive database in AWS infrastructure, Definian designed and built the future state integration framework and process. Collaborating closely with the Client, Definian enhanced the integrations from Milestone 1 to easily re-point to the new analytics warehouse during cut-over. Additionally, Definian and the Client found opportunities to rationalize and improve the performance of existing integrations. As part of the improvements, Definian increased pipeline efficiency by transitioning/mirroring the ETLs from AWS Glue to Apache Airflow.
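One common way to make integrations easy to re-point is to have each pipeline write to a logical target that is resolved through configuration at run time, so cut-over becomes a configuration change rather than a code change. The sketch below illustrates that idea in plain Python. It is an assumption about the general pattern, not a description of the framework Definian built, and every name in it (`Pipeline`, `TARGETS`, `run`) is hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, List

Row = Dict[str, object]


@dataclass
class Pipeline:
    name: str
    extract: Callable[[], Iterable[Row]]
    target: str  # logical target name, not a physical platform


# Logical target -> physical platform. Changing this mapping re-points every pipeline.
TARGETS: Dict[str, str] = {"analytics": "snowflake"}


def run(pipeline: Pipeline, sinks: Dict[str, Callable[[List[Row]], None]]) -> int:
    """Extract rows and hand them to whichever sink the logical target resolves to."""
    rows = list(pipeline.extract())
    platform = TARGETS[pipeline.target]
    sinks[platform](rows)
    return len(rows)
```

With this shape, switching `TARGETS["analytics"]` from `"snowflake"` to `"hive"` at cut-over redirects every pipeline without touching the extraction logic.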

Milestone 3: Migrate from Snowflake to Hive

With the new analytics platform operational, it was time to migrate the data and shut down Snowflake. Definian built a Snowflake to Hive pipeline to execute the migration using PySpark in Apache Airflow. This approach maximized throughput and minimized development time. To reduce downtime during the cut-over, Definian and the Client collaborated on a tight cut-over plan. The execution of the plan exceeded expectations, resulting in no downtime and an on-time go-live.

Milestone 4: Consolidate Data Silos

After the new analytics platform went live, the last step was consolidating and decommissioning additional data silos into the new analytics platform. Definian designed the pipelines and processes for this last step to enable the Client to self-execute the plan when ready. When the Client was ready to migrate, Definian provided as-needed back-up to the Client.

Impact: Improved Data Pipelines, Improved Data Analytics, Lower Costs

This complex initiative enabled long-term sustainable analytics capabilities for the Client. They have a pathway for more intelligent AI, sharper analytics, and data-driven decisions. The new data pipelines in Apache Airflow run at a lower cost and greater efficiency than the prior Jitterbit framework.

Building the Data Integration Capabilities for Leading Data Privacy Platform

Introduction

A quickly growing data security, privacy, compliance, and governance software provider found itself facing a significant hurdle: their cutting-edge data discovery algorithm couldn't easily work with the systems their customers already used. To keep growing and attracting new clients, they needed a swift solution to improve how their software could integrate with a variety of other metadata management technologies.

Choosing Definian for the Solution

The reason they turned to Definian was clear: Definian had a strong track record of engineering seamless integrations for Fortune 500 organizations and other leading data platform solutions. With three decades of expertise in making legacy and modern technologies work together, Definian was the standout partner for this initiative.

Setting Project Goals

The goal for this initiative was twofold. The project's main aim was to create bi-directional integrations with Alation and Informatica EDC. These two initial integrations would enable the Client’s data discovery algorithm to create a unified data catalog that includes sensitive and PII information in common customer environments. The secondary aspect of the project would set the foundation for rapidly incorporating additional integrations into the core product, enabling the Client’s data engineering team to continue focusing on improving their core product's data discovery capabilities.

Outcome: A Game Changer

Since the initial engagement, the partnership has continued to expand the product’s integration capabilities to accelerate the implementation process and serve their customers’ increasingly complex data privacy, security, and governance requirements. The results helped propel the Client to prominence in the marketplace:

- It earned a spot as a Gartner Magic Quadrant leader.

- Household names like American Airlines, Discover, and Dell came on board as clients, drawn by how well the software could connect with tools they already use.

- The foundation is set for an exponential increase in integration capabilities through a soon-to-be-released marketplace.

Celebrating Principal Mike Mulhern's 25th Anniversary

Today, we celebrate the 25th anniversary of one of our Principals, Mike Mulhern. In addition to supporting dozens of end clients over his tenure, Mike bridged the gap between our consulting and software departments and guided the development of Applaud over the years. In our latest Premier International (now Definian) ProFiles feature, Mike shares his experiences and insights.

Q: Mike, what brought you to Premier International (now Definian)?

A: This was my first real job out of college. I graduated from the University of Illinois at Urbana-Champaign in 1998 with a degree in Civil Engineering … but that wasn’t my passion. I had no idea what I wanted to do with myself when I grew up and suddenly, I was out of time to figure it out. Computers and programming, and optimization of anything were always hobbies. I love building things.

After moving to Chicago upon graduation, I picked up a newspaper and sent out resumes to ten companies. Premier International (now Definian) responded! I didn’t make the connection until recently, but this is kind of prophetic. I had a summer internship at the Illinois Department of Nuclear Safety during which I assisted one of the engineers in analyzing some data from a radiation detector. We parsed the data with QBasic and then fed it into a program called PSI Plot. PSI Plot was outrageously slow, so I moved the installation to a RAM drive making the analysis possible. Little did I know I’d spend 20 years parsing, reformatting, and analyzing data in addition to figuring out how to make things go faster.

Q: How has your role evolved over time since you’ve been here?

A: When I started, I was your typical entry level Associate Consultant learning Applaud wondering what I was getting into. My first projects involved updating systems for Y2K, then it was maintenance and development of HR benefits systems followed by a full blown data migration.

The first 2-3 years carried a steep learning curve, but soon I was leading projects. By about 2007 I was delivering data migration projects for clients with no one in the office really even aware of what I was doing day-to-day. The autonomy was great, and I got to work with and learn from some really fantastic consultants with very different backgrounds outside of Premier International (now Definian). I was also very interested in the Applaud software and knowing exactly what it was doing. That led to greater involvement with the software team and some great collaborations on features. Eventually, I even got the privilege of managing the software team, which was one of the more rewarding things I’ve done here.

In parallel to all of that was IT. When I started, there was really no one doing anything IT related, so I picked it up as a side task. We had 2 servers and a bunch of desktop machines. About 19 years in, it became more than a full-time job managing IT policies, infrastructure, services, inventory, and information security. Shout outs to Don Brown and Jonathan Garcia for helping to keep me sane.

Q: What are your goals for the next few months/years?

A: Premier International (now Definian) is primed for expansion right now and looking to expand our international footprint. I need to elevate our IT support structure to accommodate that growth and ensure compliance. We’re also targeting some automations to eliminate some of our manual processes allowing us to better scale. Beyond that, there’s some “continual improvement” goals on a number of fronts, including security and information security awareness.

Q: What is your favorite part of working at Premier International (now Definian)?

A: I really enjoy the variety of things I get to do here, the continual influx of problems to solve and the freedom to approach them in my own way. I’m not a person who could do the same thing every day or follow someone else’s script. I need to be mentally engaged, figuring something out, and producing something that I feel is valued by others.

One day I’m writing a script to interact with a web service. The next day I’m troubleshooting a software problem. After that I’m developing security training and writing an IT policy. The next day I’m collaborating on a new software feature or teaching a consultant how to do something that I did 10-15 years ago. I’m always eager to learn something new, to figure out how to make something work, or to create something. The variety keeps it interesting.

Q: What piece of advice would you give to your younger self?

A: Buy and hold Nvidia, Google, Apple, and Microsoft. Seriously, there are 2 things that immediately come to mind.

The first would be to “Ask more questions”. In general, everyone is afraid to look dumb by asking the wrong question, but if you don’t ask, you won’t ever know. If you ask a lot of questions and strive to understand a client’s problem, you can often produce a better solution than what you were originally asked for and deliver it faster with less re-work. Everyone wins!

The second would be to “Underreact” to adversity. Adversity in this case being either seemingly ridiculous/misguided requests or attacks from others. This is something I learned from our founder Jim Hempleman, that I’m admittedly not always good at. It’s usually better to listen, express concerns about something you disagree with, and then later come back with a well thought out response than it is to come out swinging or walk back a knee jerk reaction. While there are times when you need to speak out loudly, that person who hated you may end up your friend once you prove that you have the same interests at heart.

Q: If you could have any superpower, what would it be?

A: I would like to be a human lie detector. Life would be a lot more fun if you knew who was being honest.

Q: What’s the last song you listened to?

A: “Other Side of the Rainbow” by Extreme. Extreme was popular in the late 80s/early 90s and kind of fell off my radar after that. Last year, they put out an album called “Six” which is really good. The songs are great but cross some genre boundaries. “Other Side of the Rainbow” is a very catchy ballad that will stick in your head.

Q: What, if anything, are you currently binge-watching? Or reading?

A: Over the last few years, I have binge re-watched all the different Star Trek series, Babylon 5, the Stargates, Firefly, and everything Star Wars related. I’m a sci-fi junkie. I’m currently watching “The Witcher” on Netflix which is a departure from the theme but very good!

Thanks for chatting with us Mike, and congratulations on 25 years at Premier International (now Definian). We are so glad you are part of our team!

Shifting Perspective: Your Data is Not Garbage

Data is not garbage. Could it be better? Could you get more insights from it? Absolutely. That is the opportunity lurking within the data. As data leaders, we should promote the idea of unleashing the potential within data rather than disparaging it. This mindset shift makes it possible to see past the immediate imperfections and toward the potential for refinement and discovery.

A real-world example of the impact of the different approaches is two separate clients that had significant duplicate and disjointed supplier data. One client framed the issue as having crap data that needed to be cleaned up and the other framed the issue as the opportunity to gain a 15% reduction in their raw material cost. The difference in how this situation was framed directly impacted the engagement, the energy at every interaction, and effectiveness of the initiatives. Nobody wants to work in crap, but everyone wants to be part of something that will have a significant impact.

Understanding the Value in All Data

The term “garbage data” inherently suggests that certain datasets are, from the outset, of no value. This is a misconception. All data, when approached with the right tools and mindset, holds potential insights. The challenge often lies not in the data itself but in our methods and perspectives towards analyzing it.

When we label data as “garbage,” we risk overlooking opportunities for learning and growth. Instead, viewing all data as a resource waiting to be properly tapped encourages a culture of innovation and problem-solving.

The Growth Mindset and Data Analysis

Carol Dweck’s concept of the “growth mindset” – the belief that our basic qualities are things we can cultivate through our efforts – applies perfectly here. Viewing “garbage” data through the lens of a growth mindset enables data professionals to see past the immediate imperfections and towards the potential for refinement and discovery.

Here are a few strategies to reframe how we approach less-than-perfect datasets:

- Align with Strategic Objectives: Focus on what can be achieved to drive engagement and funding. Executives don’t want to fund consolidating supplier data, but they do want to fund ways to reduce raw material spend.

- Recognize Quality Requirements Differ: Data that is fit for purpose for one aspect of the business might not be fit for another. The operational side of the house has different data requirements than the analytics group.

- Identify Opportunities for Improvement: What can “garbage” data teach us about our data collection processes, and how can we improve?

- Foster a Collaborative Approach: Engage with your team in brainstorming sessions on how to tackle challenging datasets and the potential that is contained within.

- Data Is Ongoing: Data is a product that can always be improved. Adopting a Plan, Do, Check, Act process fosters a growth mindset.

Concluding Thoughts

Let’s retire the term “garbage data” from our professional vocabulary. Let’s view every dataset as a stepping stone toward deeper insights and knowledge. By adopting a growth mindset towards data, we empower ourselves and our organizations to explore, innovate, and achieve our goals.

By reconsidering how we refer to and think about our data, we open up new avenues of opportunity and learning. The next time you’re tempted to label data as “garbage”, pause and reconsider. What additional opportunity might you find with a change in perspective?

Premier International (now Definian) Acquires Information Asset

CHICAGO, July 22, 2023, Premier International (now Definian), a technology consulting firm specializing in solutions that reduce the risk associated with complex data challenges, today announced the strategic acquisition of Information Asset (“the Company”), one of the nation’s top data governance and risk management firms. Premier International (now Definian) is a portfolio company of Renovus Capital Partners.

Founded in 2012, Information Asset primarily focuses on data risk, privacy, governance and monetization. Its solutions enable enterprises to evolve data governance from a conceptual idea to practical implementation, which helps foster more accurate, faster and overall improved decision-making for their clients. Information Asset serves a roster of well-known Fortune 1000 customers across various industries, including financial services and healthcare, among others. The Company also has deep relationships and partnerships with leading software platforms such as Informatica, Alation, BigID and Collibra.

Definian offers innovative technology and consulting services through its team of business consultants, software developers, subject matter experts, and its proprietary software tool Applaud®. The combined capabilities of Definian and Information Asset will enable customers of both organizations to access enhanced offerings, as well as streamlined efficiency from having their data needs handled by a single partner.

“The addition of Information Asset to Definian significantly expands the depth and breadth of our capabilities, enabling organizations to unlock the full potential of their data,” said Craig Wood, CEO of Definian. “Our combined expertise and software solutions in data migration, data risk management, and data governance will reshape norms by delivering comprehensive solutions that boost value while minimizing risks. We are thrilled to welcome Sanjeev and the Information Asset team to Definian.”

Sanjeev Varma, CEO of Information Asset, added, “We are very excited to combine forces with the Definian team, allowing us to provide enhanced solutions for our customers across the entire data value chain. Together, we will further the value we provide customers each day by helping them drive business growth, innovation, and competitive advantage. Our team is enthusiastic about what’s next and what’s possible as part of Definian.”

As a platform portfolio company for Renovus, Definian has been actively identifying acquisitions that will help expand its current product and service offerings. Manan Shah, a Partner at Renovus Capital Partners, noted, “We are excited about Definian's strategic acquisition of Information Asset. Adding a trusted and experienced offshore delivery team has been a major goal for Definian, and the acquisition brings complementary, high-value capabilities by providing customers with new offerings across the data lifecycle.”

Terms of the transaction were not disclosed.

About Definian

Definian is a Chicago-based technology consulting firm offering solutions that reduce the risk associated with complex data challenges through innovative technology and consulting services. The company's innovative services and Applaud® software reduce the overall risk in a technology transformation and ensure projects remain on track in even the most complex environments. Founded in 1985 and with over three decades of successful execution, Definian's solutions have a proven track record across various industries and applications. For more information, please visit Definian.com.

About Renovus Capital Partners

Founded in 2010, Renovus Capital Partners is a lower middle market private equity firm specializing in the Knowledge and Talent industries. From its base in the Philadelphia area, Renovus manages over $1 billion across its three sector-focused funds and other strategies. The firm’s current portfolio includes over 25 U.S.-based businesses specializing in education and training, healthcare services, technology services and professional services. Renovus typically partners with founder-led businesses, leveraging its experience within the industry and access to debt and equity capital to make operational improvements, recruit top talent, pursue add-on acquisitions and oversee strategic growth initiatives. For more information, please visit renovuscapital.com.

Data Migration Checklist for D365 Implementations

Assess the Landscape

Start planning the data effort by assessing what you have today and where you are going tomorrow. While frequently glossed over, spending time assessing the landscape provides three prime benefits to the program. First, it gets the business thinking about what can be achieved with its data by outlining what data exists today and what data it would like to have in the future. Second, the exercise gets the organization engaged on the importance of data and instills an ongoing master data management and data governance mindset. Third, it enables the team to start addressing early the major issues that programs encounter when the assessment is skipped.

- Create legacy and future D365 data diagrams that capture data sources, business owners, functions, and high-level data lineages.

- Outline datasets that don’t exist today that would be beneficial to have in D365.

- Ensure the future D365 landscape aligns with data governance vision and roadmap.

- Reconcile legacy and future state data landscapes to ensure there are no major unexpected gaps.

- Perform initial evaluation of what can be left behind/archived by data area and legacy source.

- Perform initial evaluation of what needs to be brought into the new D365-based landscape and the reason for its inclusion.

- Profile the legacy data landscape to identify values, gaps, duplicates, and high-level data quality issues.

- Interview business and technical owners to uncover additional issues and unauthorized data sources.
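
As a rough illustration of the profiling step above, the following Python sketch summarizes one column of a legacy extract: how many values are blank, how many are distinct, and which values repeat. The `legacy_vendors` records, the column names, and the `profile_column` helper are hypothetical and exist only for this example. A real profiling effort would cover every field in scope.

```python
from collections import Counter


def profile_column(rows, column):
    """Summarize one column: row count, blanks, distinct values, repeated values."""
    # Treat None and whitespace-only values as blank.
    values = [(row.get(column) or "").strip() for row in rows]
    populated = [v for v in values if v]
    counts = Counter(populated)
    return {
        "rows": len(values),
        "blank": len(values) - len(populated),
        "distinct": len(counts),
        "duplicates": sorted(v for v, n in counts.items() if n > 1),
    }


# Hypothetical legacy vendor extract; real data would come from the source system.
legacy_vendors = [
    {"vendor_id": "V001", "tax_id": "12-3456789"},
    {"vendor_id": "V002", "tax_id": ""},
    {"vendor_id": "V003", "tax_id": "12-3456789"},
]

print(profile_column(legacy_vendors, "tax_id"))
```

Even a summary this small tends to start useful conversations with business owners, such as why two vendors share a tax ID.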

Create the Communication Process

Communication is a critical component of every implementation. Breaking down the silos enables every team member to understand what the issues are, the impact of specification changes, and data readiness.

- Create migration readiness scorecard/status reporting templates that outline both overall and module specific data readiness.

- Create data quality strategy that captures each data issue, criticality, owner, number of issues, clean up rate, and mechanism for cleanup.

- Establish data quality meeting cadence.

- Define detailed specification approval, change approval process, and management procedures.

- Collect the data mapping templates that will be used to document the conversion rules from legacy to target values.

- Define high-level data requirements of what should be loaded for each instance. We recommend getting as much data loaded as early as possible, even if bare bones at first.

- Define status communication and issue escalation processes.

- Define process for managing RAID log.

- Define data/business continuity process to handle vacation, sick time, competing priorities, etc.

- Host meeting that outlines the process, team roles, expectations, and schedule.
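
One possible shape for the data quality strategy and readiness scorecard described above is sketched below in Python. Each issue records its module, criticality, owner, open record count, and cleanup rate, and the scorecard rolls those up per module. The `DataIssue` class, its field names, and the sample values are assumptions made for illustration, not a prescribed template.

```python
import math
from dataclasses import dataclass


@dataclass
class DataIssue:
    module: str
    description: str
    criticality: str       # "high", "medium", or "low"
    owner: str
    open_count: int        # records still affected
    cleanup_per_week: int  # observed cleanup rate

    def weeks_to_clear(self):
        """Projected weeks until the issue is resolved at the current rate."""
        if self.open_count == 0:
            return 0
        if self.cleanup_per_week <= 0:
            return None  # no progress yet, so no projection is possible
        return math.ceil(self.open_count / self.cleanup_per_week)


def scorecard(issues):
    """Roll issues up to one summary entry per module."""
    summary = {}
    for issue in issues:
        entry = summary.setdefault(issue.module, {"open_records": 0, "high_open": 0})
        entry["open_records"] += issue.open_count
        if issue.criticality == "high" and issue.open_count > 0:
            entry["high_open"] += 1
    return summary


# Hypothetical issues, for illustration only.
issues = [
    DataIssue("Vendors", "Missing tax ID", "high", "AP lead", 120, 50),
    DataIssue("Customers", "Duplicate accounts", "medium", "Sales ops", 30, 0),
]
print(scorecard(issues))
```

A projection of `None` is a useful escalation signal: the issue has an owner but no cleanup is happening.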

Capture the Detailed Requirements

Everyone realizes the importance of up-to-date and comprehensive documentation, but many hate maintaining it. Documentation can make or break a project. It heads off unnecessary rehash meetings and brings clarity to what should occur versus what is occurring.

- Confirm the legal entity structure and the planned usage of data sharing functionality. This will have the greatest impact on how the data needs to be prepared.

- Collect the full list of data entities that need to be populated for each legal entity in D365. Ensure the correct version of each entity is carefully considered.

- Customers V2 and Customers V3 are not the same.

- Document detailed legacy to D365 data mapping specifications for each data entity and incorporate additional cleansing and enrichment areas into data quality strategy.

- Incorporate the timing of Microsoft's quarterly releases for each instance into the project schedule so that any updates to data entities are identified and reflected in the mapping specs, conversion programs, etc. At least two releases must be accepted every year.

- Be cautious when scheduling test cycles or go-live around accepted quarterly releases.

- Document system retirement/legacy data archival plan and historical data reporting requirements.
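
A mapping specification can also be kept in a machine-readable form so that the same rules drive both the documentation and the conversion programs. The Python sketch below is one minimal way to do that. The legacy column names, the target field names, and the crosswalk values are placeholders chosen for illustration and are not taken from the actual D365 entity definitions.

```python
# Each rule: target field -> (legacy field, optional crosswalk of legacy to target values).
# All names and values here are hypothetical.
MAPPING_SPEC = {
    "CustomerAccount": ("CUST_NO", None),
    "CustomerGroupId": ("CUST_TYPE", {"R": "RETAIL", "W": "WHOLESALE"}),
}


def apply_mapping(legacy_row, spec):
    """Return (converted_row, errors) for one legacy record."""
    converted, errors = {}, []
    for target, (source, crosswalk) in spec.items():
        value = legacy_row.get(source, "")
        if crosswalk is not None:
            if value not in crosswalk:
                # Unmapped values are reported rather than silently passed through.
                errors.append(f"{source}={value!r} has no mapping to {target}")
                continue
            value = crosswalk[value]
        converted[target] = value
    return converted, errors


row, problems = apply_mapping({"CUST_NO": "1001", "CUST_TYPE": "X"}, MAPPING_SPEC)
print(row, problems)
```

Values that fail the crosswalk feed naturally back into the data quality strategy as new cleansing items.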

Build the Quality, Transformation and Validation Processes

With the initial version of the requirements in hand, it's time for the team to build the components that will perform the transformation, automate cleansing, create quality analysis reporting, and validate and reconcile converted data. To reduce risk on these components, it's helpful to have a centralized team and a dedicated data repository that all data processes and features can access.

- Create data analysis reporting process that assists with the resolution of data quality issues.

- Build data conversion programs that will put the data into the D365 data entity format.

- If necessary, review D365 documentation for any unexpected findings when working with data management delivered imports.

- Incorporate validation of the conversion against D365 configuration from within the transformation programs.

- Enable pre-validation reporting process that captures and tracks issues outside of the data management imports.

- Define data reconciliation and data validation requirements and corresponding reports.

- Create exports in data management to extract migrated data out of D365 to streamline validation and reconciliation process.

- Verify data quality issues are incorporated into the data quality strategy.

- Confirm any delta or catch-up data requirements are accommodated within the transformation programs.

Execute the Transformation and Quality Strategy

While often treated separately, data quality and the transformation execution really go hand-in-hand. The transformation can't occur if the data quality is bad, and additional quality issues are identified during the transformation.

- Ensure that communication plans are being carried out.

- Capture all data related activities to create your conversion runbook/cutover plan while processes are being built/executed.

- Create and obtain approval on each D365 load file.

- Run converted data through the D365 import process.

Validate and Reconcile the Data

In addition to validating D365 functionality and workflows, the business needs to spend a portion of time validating and reconciling the converted data to make sure that it is both technically correct and fit for purpose. The extra attention spent validating the data and confirming solution functionality could mean the difference between a successful go-live and an implementation failure or costly operational issues down the road.

- Execute data validation and reconciliation process.

- Execute specification change approval process per validation/testing results.

- Obtain sign-off on each converted data set.
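
To make the reconciliation step more concrete, here is a small Python sketch that compares a legacy dataset with data extracted back out of the target system. It reports records missing from the target, unexpected records, amount mismatches, and control totals. The `reconcile` function and the `invoice` and `amount` field names are hypothetical, and real reconciliation reports would normally cover many more fields.

```python
from decimal import Decimal


def reconcile(legacy_rows, target_rows, key, amount):
    """Compare two datasets by key and report differences and control totals."""
    # Decimal avoids floating point rounding noise in monetary comparisons.
    legacy = {r[key]: Decimal(r[amount]) for r in legacy_rows}
    target = {r[key]: Decimal(r[amount]) for r in target_rows}
    shared = legacy.keys() & target.keys()
    return {
        "missing_in_target": sorted(legacy.keys() - target.keys()),
        "unexpected_in_target": sorted(target.keys() - legacy.keys()),
        "amount_mismatch": sorted(k for k in shared if legacy[k] != target[k]),
        "legacy_total": sum(legacy.values(), Decimal("0")),
        "target_total": sum(target.values(), Decimal("0")),
    }


# Hypothetical open invoice balances before and after conversion.
legacy = [
    {"invoice": "A1", "amount": "100.00"},
    {"invoice": "A2", "amount": "50.25"},
    {"invoice": "A3", "amount": "10.00"},
]
target = [
    {"invoice": "A1", "amount": "100.00"},
    {"invoice": "A2", "amount": "50.20"},
]
print(reconcile(legacy, target, key="invoice", amount="amount"))
```

Records that were intentionally left behind would need to be excluded from the legacy side before comparing, so that only unexplained differences remain for sign-off.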

Retire the Legacy Data Sources

Depending on industry and regulatory requirements, system retirement could be of vital importance. Just because it is last on this checklist doesn't mean system retirement should be an afterthought or be addressed only at the end. Building on the high-level requirements captured during the assessment, the retirement plan should be fleshed out and implemented throughout the course of the project.

- Create the necessary system retirement processes and reports.

- Execute the system retirement plan.

Following this checklist can minimize your chance of failure or rescue your at-risk D365 implementation. While this list seems daunting, rest assured that what you get out of your D365 implementation will mirror what you put into it. Time, effort, resources, and – most of all – quality data will enable your strategic investment in D365 to live up to its promises.

Formalizing Data Governance As Part of Your Workday Implementation

Modernizing to the Workday platform enables organizations to increase efficiency and gain insights that were not feasible with fragmented legacy systems. However, implementing the Workday platform is not a silver bullet for the data management challenges organizations face in today’s data-driven climate. Adapting to the increasing speed, scale, and variety of data, along with growing data privacy regulations, presents obstacles that every organization is working to address. These challenges are further compounded when frustrated data consumers have to spend more time collecting and cleansing data than using it. While Workday’s modern platform can help tackle these challenges, a data governance discipline is required to formalize the data standards and policies that not only guide the implementation of the platform but also provide the accountability structure to uphold them across the organization. When planning for a Workday implementation, it is essential to evaluate your data governance strategy, or to establish one if none exists. Launching your data governance journey with a Workday implementation presents a golden opportunity to shift your data culture forward while working through the critical data and process requirements of the business.

Data Governance with a Workday Implementation

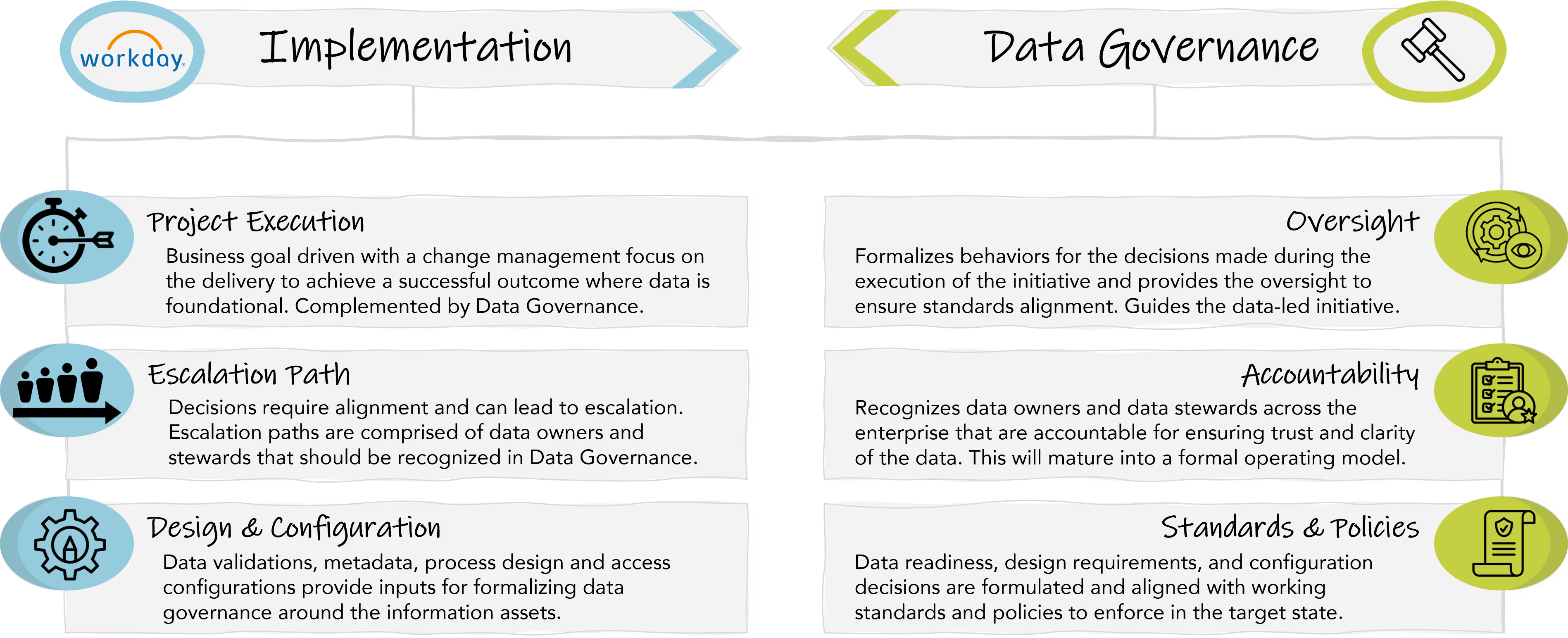

As an official Workday partner, Definian has deep experience supporting organizations with data migrations from complex legacy systems to the Workday platform. Our methodology includes data readiness activities that expose data quality issues that require remediation to migrate data successfully to the target environment. While this exercise helps ensure that clean data is migrated, it puts no methods in place to certify that the data will remain clean in the future. For this reason, we strongly advise establishing data governance to ensure post-implementation success.

Starting data governance as part of a Workday implementation may cause concern when viewed as a constraint. We firmly believe data governance can work in parallel and should never pose any blockers to the execution. Instead, outputs from the data migration and implementation activities complement data governance by serving as inputs and use-cases. While data governance provides oversight, the data readiness, design, configuration, and decisioning aspects of a Workday implementation inform data governance with the accompanying data standards, processes, policies, and accountability structures that need to be formalized.

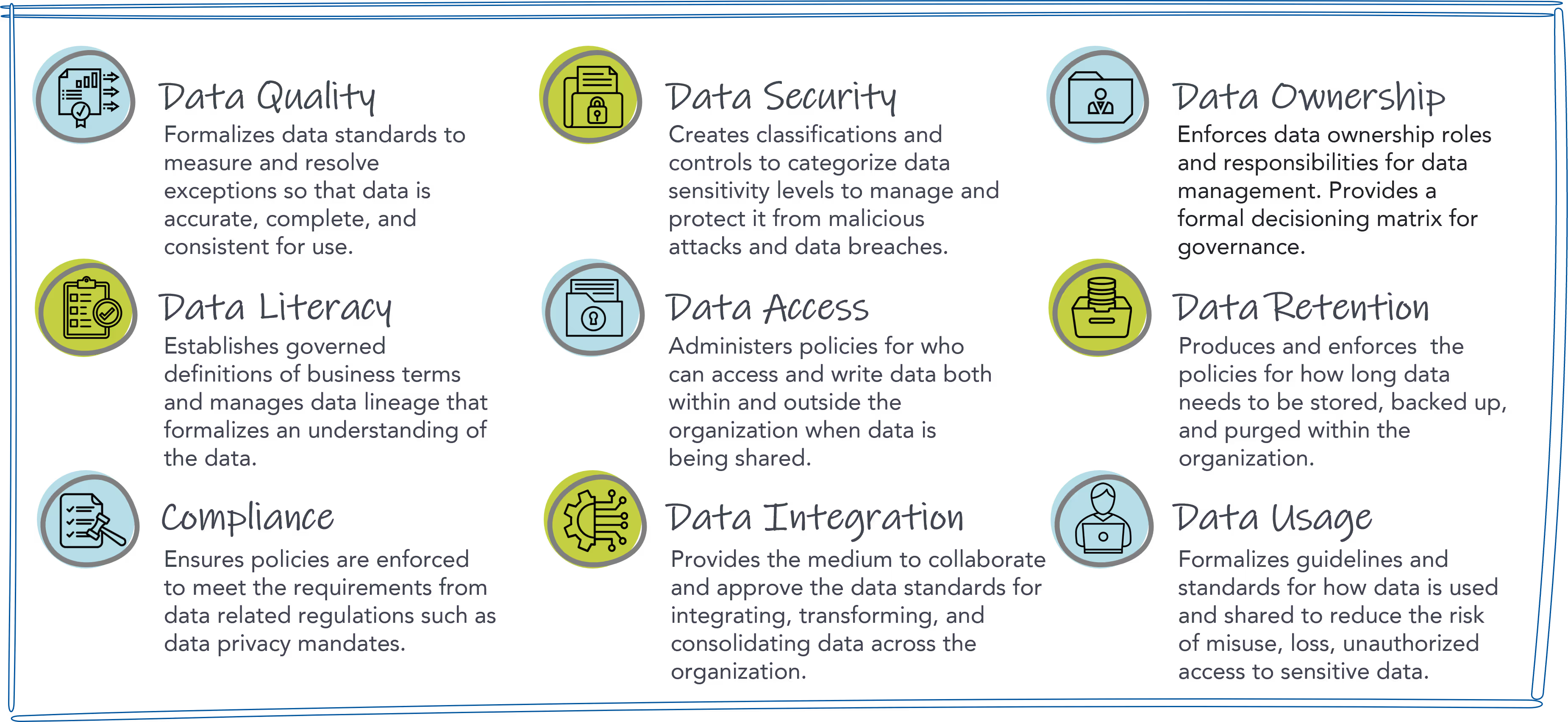

Making the Case for Data Governance

Data governance is a fundamental data management discipline that provides immediate value in addressing the needs for data quality, data literacy, data security and compliance. The simple goal of data governance is to establish clarity and trust in the data that drives the business. This is accomplished by formalizing working definitions, data standards and policies that are enforced through an accountability and stewardship model. With a data governance capability established, organizations can effectively manage their data across the key functions of data management.

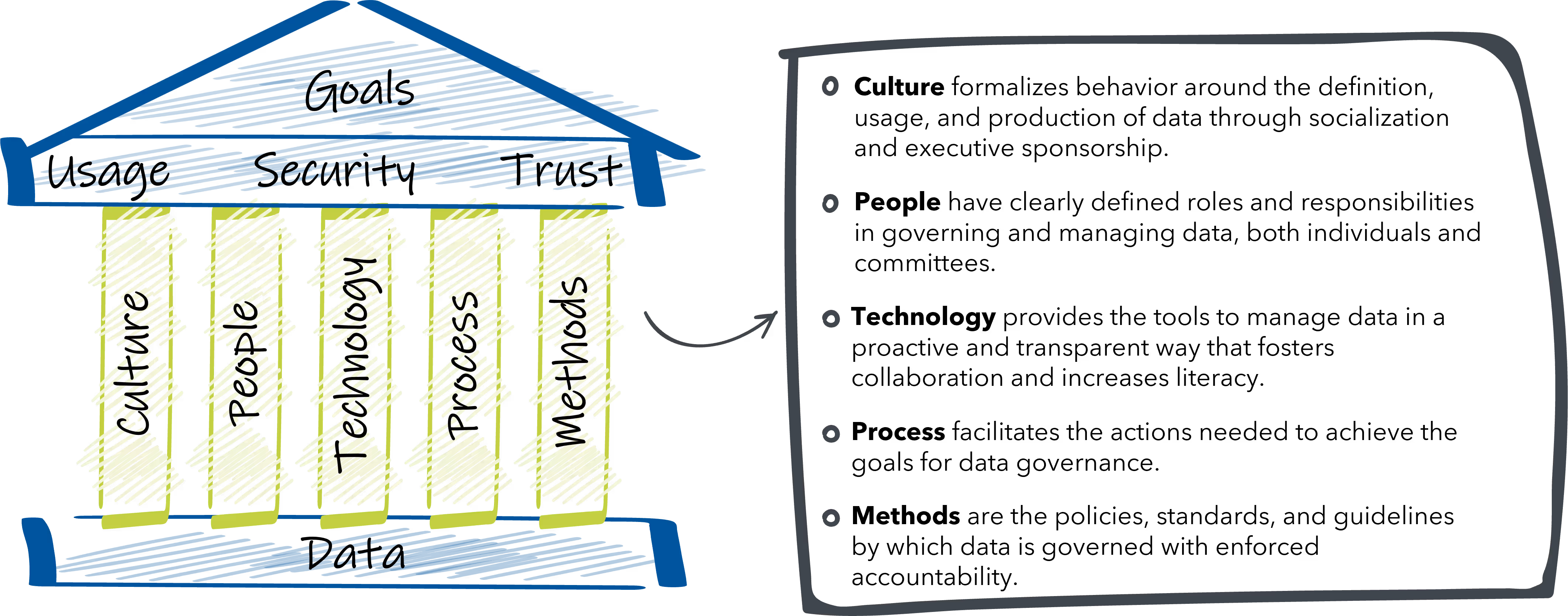

Our Data Governance Framework

The traditional application of data governance has been met with more failure than success. It is characterized as a centralized program that focuses on data protection using command-and-control methods that dictate how data is accessed and used throughout the organization. This approach often failed because it resulted in bureaucratic processes that made data governance a bottleneck to producing any value. A key lesson learned was that data governance is not a one-size-fits-all approach and needs to be flexible enough to be tailored to an organization’s specific needs.

We take a modern approach to data governance by using our agile framework that is centered on the goals of promoting data usage by establishing trust while enforcing adherence to data security policies. With this framework we apply a use-case driven methodology to launch data governance and iteratively scale it to a desired state with an intent to provide immediate business value. The key pillars of the framework ensure that both culture and technology support the people, process, and methods to establish data governance.

Using our framework, clients quickly realize that many aspects of data management are already being governed informally in operational silos or systems. Our framework recognizes the need to formalize those behaviors by establishing a data governance discipline. By raising awareness and fostering collaboration around the working standards, policies, and guidelines for using and managing data, data governance serves as a success enablement factor for any business initiative that hinges on data.

100% Workday Load Success for State Government

Massive Scope

The project was to perform data analysis and migration of Human Capital Management (HCM) and Payroll data from PeopleSoft, learning data from Taleo, and Benefits data from a custom benefits administration system into Workday for a state government with 34,000 active employees and 120 agencies.

“Thank you for putting in all this work [to extract data from Taleo]. In the end, you’ll be saving the state and the project a ton of money and headache,” Director of Workday Operations for the State Government

Important Goals

The state embarked on a transformation initiative to consolidate their processes and data into a single instance of Workday and to eliminate their dependence on outdated technology. This project launched amid the COVID-19 pandemic. These circumstances accelerated a variety of work-life changes and underscored the importance of project goals. The goal of the Workday implementation was to modernize the client’s HCM system and keep individuals connected while shifting to a hybrid work environment. Workday HCM created a user-friendly hub of employee resources and workforce data that will allow the client to strengthen themselves as an employer. Looking ahead, employees will also enjoy an enhanced career experience with the new capabilities available to them.

The Risks

The client had several concerns regarding the migration of their data and understood the risk. Definian worked with the state government and Workday teams to address these concerns, ensuring a successful and on-time Workday implementation.

Every data migration and implementation project has inherent risk factors. This client was most exposed to the following risks:

- Limited knowledge on data dependencies across different legacy sources

- Lack of experience with Workday target system requirements

- Employee staffing and overall resource constraints

- Reviewing transformed data in a timely manner prior to Workday loading

- Mapping PeopleSoft payroll codes to Workday reference IDs

Mitigating Risks

Definian mitigated the client’s high-risk areas by employing the following practices:

- Created detailed reports to help the client better understand their legacy data

- Worked alongside the client to develop a strategy to address legacy data issues

- Implemented programmatic data cleansing processes such as address standardization, reformatting of employee names to have proper casing, and reformatting of position IDs to be unique

- Configured data sets shared with the client to draw a connection between an employee’s data across multiple legacy sources
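
The programmatic cleansing mentioned above can be pictured with a short Python sketch. It shows two simple rules: proper casing of names and making repeated position IDs unique by adding a suffix. The source does not describe how these rules were actually implemented, so the `proper_case` and `make_unique` helpers, the suffix format, and the sample values are all assumptions for illustration.

```python
from collections import Counter


def proper_case(name):
    """Collapse extra whitespace and apply simple title casing.

    str.title() is a blunt tool: names such as "McDonald" or "de la Cruz"
    would need an exception list in a real cleansing rule.
    """
    return " ".join(name.split()).title()


def make_unique(position_ids):
    """Append a numeric suffix to repeated IDs, keeping the first occurrence as-is.

    Assumes the suffixed form does not already exist as a legacy ID.
    """
    seen = Counter()
    result = []
    for pid in position_ids:
        seen[pid] += 1
        result.append(pid if seen[pid] == 1 else f"{pid}-{seen[pid]}")
    return result


print(proper_case("  JANE   DOE "))
print(make_unique(["P100", "P200", "P100"]))
```

Whatever the rule, keeping it in code rather than in manual spreadsheets means it can be rerun unchanged in every test cycle.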

Definian executed the following actions to combat the risks associated with the client’s lack of knowledge of the Workday target system:

- Created validation reports tailored to Workday’s requirements for each individual data piece, allowing the client to address invalid data or missing Workday configuration prior to loading the data.

- Developed conversion error reports which allowed the client to identify legacy system issues, such as invalid, missing, or duplicate data. This enabled the client to address these issues early, a benefit as there is little ability to back data out of Workday once loaded.

- Built specific workbooks designated as client-facing validation files that were tailored for clear presentation and easy understanding of the data

“It has been great working with you all. This was definitely the best government implementation I have worked on,” Senior Associate for the System Integrator

Definian provided the following resources to help with staffing constraints:

- Took ownership of the data conversion process to allow client resources to focus on pre-load and post-load validation

- Led mapping specification sessions with the System Integrator and client to bridge the gap between the legacy and target data

- Facilitated discussions to identify resources and document Responsible, Accountable, Consulted, and Informed (RACI) parties

- Met with the client team to guide the pre-load validation process

To mitigate the risk of missed client data review deadlines, Definian:

- Thoroughly laid out build schedules and review expectations

- Identified client resources for each specific conversion item, including back up resources where needed

- Met with client resource individuals to aid them in their data review

Also, to address the delays caused by mapping legacy payroll codes to Workday reference IDs, Definian:

- Coordinated the SI and the client team to determine pay code conversion logic

- Generated detailed reports highlighting any present payroll codes that did not have configuration in the Workday tenant

- Updated the build schedule to include additional time for new pay code mapping to be completed prior to each data load
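
A report of pay codes that lack configuration can be as simple as a set comparison. The Python sketch below counts how often each legacy pay code is used and lists the ones not found in the configured set. The `pay_code` field name, the sample codes, and the `unconfigured_pay_codes` helper are hypothetical. In practice the configured list would come from an extract of the Workday tenant.

```python
from collections import Counter


def unconfigured_pay_codes(payroll_rows, configured_codes):
    """Return {pay_code: usage_count} for legacy codes missing from the configuration."""
    usage = Counter(row["pay_code"] for row in payroll_rows)
    return {
        code: count
        for code, count in sorted(usage.items())
        if code not in configured_codes
    }


# Hypothetical legacy payroll lines and configured codes.
payroll_rows = [
    {"employee_id": "E1", "pay_code": "REG"},
    {"employee_id": "E1", "pay_code": "OT1"},
    {"employee_id": "E2", "pay_code": "OT1"},
    {"employee_id": "E2", "pay_code": "BON"},
]
configured = {"REG", "BON"}

print(unconfigured_pay_codes(payroll_rows, configured))
```

Including the usage count helps the team prioritize which mappings must be settled before the next load.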

Overview of Key Activities

- The team used the Applaud software in a deep analysis of the client data, using integrated reporting tools to identify project risks.

- Transformation and migration processes were developed for all required data from multiple legacy sources.

- Throughout each test cycle, data quality issues and load errors were identified and proactively corrected to alleviate stress leading up to the go-live window.

- The team was able to adjust to updated timelines and changes to the project scope.

- Data defect logs and build cycle trackers were maintained throughout the project to provide clear visibility on requirements and deadlines.

- Built a payroll reconciliation tool to aid in the validation of the payroll results in the Workday tenant.

- Developed a system to run payroll reports to proactively configure new earning and deduction codes ahead of each build window.

Successful Workday Go-Live

Definian played a major role in this successful Workday implementation. Achieving 100% load success on critical-path files paved the way for a smooth transition as the client began running HR business processes in Workday. In addition, the payroll reconciliation, which provided a line-by-line comparison and analysis of every single paycheck, led to a successful payroll data conversion, allowing the client to confidently begin payroll processing in Workday immediately.
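
For readers curious what a line-by-line paycheck comparison might look like, the Python sketch below compares legacy and Workday results for each paycheck and lists every field that differs. It is not the reconciliation tool built for this project. The paycheck key of employee ID and pay date, the `gross`, `taxes`, and `net` fields, and the `compare_paychecks` function are assumptions made for the example.

```python
from decimal import Decimal

FIELDS = ("gross", "taxes", "net")  # hypothetical amount fields


def compare_paychecks(legacy, workday):
    """Return a list of (paycheck_key, field, legacy_value, workday_value) differences.

    Both inputs map a paycheck key, here (employee_id, pay_date), to a dict of amounts.
    """
    diffs = []
    for key in sorted(legacy.keys() | workday.keys()):
        old, new = legacy.get(key), workday.get(key)
        if old is None or new is None:
            # The paycheck exists on only one side.
            diffs.append((
                key,
                "paycheck",
                "present" if old is not None else "missing",
                "present" if new is not None else "missing",
            ))
            continue
        for field in FIELDS:
            if Decimal(old[field]) != Decimal(new[field]):
                diffs.append((key, field, old[field], new[field]))
    return diffs


legacy = {("E1", "2021-01-15"): {"gross": "1000.00", "taxes": "200.00", "net": "800.00"}}
workday = {("E1", "2021-01-15"): {"gross": "1000.00", "taxes": "201.00", "net": "799.00"}}
for diff in compare_paychecks(legacy, workday):
    print(diff)
```

An empty result for a pay period is the kind of evidence that supports starting payroll processing in the new system with confidence.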

The Applaud data migration services allowed for a diligent data review process in the initial test cycles of the project, which provided key insight that was later pivotal to the on-time delivery of the Workday tenant. Detailed reporting and documentation maintained the integrity of the data as it was transformed and combined across multiple legacy sources.