Articles & case studies

Why Business Intelligence Is Important

We are living in an age of rapid technological progress. Digital advancements have revolutionized our everyday lives, and one of the largest impacts has been felt in the business world. Companies now have access to data-driven tools and strategies that allow them to learn more about their customers and themselves than ever before, but not everyone is taking advantage of them. Today we’re going to break down Business Intelligence and why it’s crucial to the success and longevity of your organization.

What Is Business Intelligence?

Before we jump into its importance, we must first understand Business Intelligence and how it applies to your company’s strategic initiatives. The term Business Intelligence (BI) refers to the technologies, applications, strategies, and practices used to collect, integrate, analyze, and present pertinent business information. The purpose of Business Intelligence is to support and facilitate better business decisions. BI gives organizations access to information that is critical to the success of many areas, including sales, finance, marketing, and other departments. Effectively leveraging BI will give your business more actionable data, provide greater insight into industry trends, and facilitate a more strategic decision-making model.

To illustrate BI in action, here are a few department-specific examples of the insights and benefits that can come from its adoption and application:

- Human Resources: HR can benefit tremendously from Business Intelligence through employee productivity analysis, compensation and payroll tracking, and insights into employee satisfaction.

- Finance: Business Intelligence can help finance departments by providing invaluable and in-depth insights into financial data. The application of BI can also help to track quarterly and annual budgets, identify potential problem areas before they cause any negative impacts, and improve the overall organizational business health and financial stability.

- Sales: Business Intelligence can assist your company’s sales force by providing visualizations of the sales cycle, in-depth conversion-rate analytics, and total revenue analysis. BI can help your sales team identify what’s working as well as points of failure, which can result in dramatically improved sales performance.

- Marketing: BI provides the marketing department with a convenient way to view all current and past campaigns, the performance and trends of those campaigns, a breakdown of the cost per lead and the return on investment, site traffic analytics, as well as a multitude of other actionable pieces of information.

- Executive Leadership: Plain and simple, Business Intelligence allows organizations to reduce costs by improving efficiency and productivity, improving sales, and revealing opportunities for continuous improvement. Business Intelligence allows members of Executive Leadership to more easily measure the organization’s pulse by removing gray areas and eliminating the need to play the guessing game on how the company is doing.

Why Is Business Intelligence Important?

Now you know what Business Intelligence is and what it’s capable of, but the question remains: why is Business Intelligence so important to modern-day organizations? The main reasons to invest in a solid BI strategy and system are:

- Gain New Customer Insights: One of the primary reasons companies are investing their time, money, and effort in Business Intelligence is that it gives them a greater ability to observe and analyze current customer buying trends. Once you use BI to understand what your customers are buying and why, you can use this information to create products and product improvements that meet their expectations and needs and, as a result, improve your organization’s bottom line.

- Improved Visibility: Organizations that use Business Intelligence have better control over their processes and standard operating procedures because a BI system improves the visibility of these functions. The days of skimming through hundreds of pages of annual reports to assess performance are long gone. Business Intelligence illuminates all areas of your organization, helps you readily identify areas for improvement, and allows you to be proactive instead of reactive.

- Actionable Information: An effective Business Intelligence system serves as a means to identify key organizational patterns and trends. A BI system also allows you to understand the implications of various organizational processes and changes, allowing you to make informed decisions and act accordingly.

- Efficiency Improvements: BI systems help improve organizational efficiency, which in turn increases productivity and can potentially increase revenue. Business Intelligence systems allow businesses to share vital information across departments with ease, saving time on reporting, data extraction, and data interpretation. Making the sharing of information easier and more efficient lets organizations eliminate redundant roles and duties, allowing employees to focus on their work instead of on processing data.

- Sales Insight: Sales and marketing teams alike want to keep track of their customers, and most use a Customer Relationship Management (CRM) application to do so. CRMs are designed to handle all interactions with customers. Because they house all customer communications and interactions, they hold a wealth of data that can be interpreted and used to inform strategic initiatives. BI systems help organizations with everything from identifying new customers to tracking and retaining existing ones and providing post-sale services.

- Real-Time Data: When executives and decision-makers have to wait for reports to be compiled by various departments, the data is prone to human error and is at risk of being outdated before it’s even submitted for review. BI systems provide users with access to data in real time through various means, including spreadsheets, visual dashboards, and scheduled emails. Large amounts of data can be assimilated, interpreted, and distributed quickly and accurately when leveraging Business Intelligence tools.

- Competitive Advantage: In addition to all of these great benefits, Business Intelligence can help you gain insight into what your competitors are doing, allowing your organization to make educated decisions and plan for future endeavors.

Conclusion

In summary, BI makes it possible to combine data from multiple sources, distill the information into a digestible format, and then disseminate it to relevant stakeholders. This allows companies to see the big picture and make smart business decisions. There are always inherent risks when it comes to making any business decision, but those risks are less prominent and less worrisome with an effective and reliable BI solution in place. Organizations that embrace Business Intelligence can move forward in an increasingly data-driven climate with confidence, knowing they are better prepared for the challenges that arise.

The Definian team is here to improve your organization’s efficiency by leveraging your existing data. We will provide you with the tools your business needs to transform complex, unorganized, and confusing data into clear and actionable insights. This helps to speed your decision-making processes and ensures that all your business decisions are educated and backed with reliable data, and lots of it! Get in touch with the Definian team today to see how we can improve your business!

5 Key Takeaways from Workday Rising 2024

Last week, some of our team hit the road and headed to Vegas for Workday Rising. This year’s conference offered a deep dive into the challenges and opportunities facing organizations in a fast-moving digital landscape, where there is more technology and data available than ever before. Didn’t make it to the show? Here are the five key takeaways we brought back from the event:

1. Data Quality Remains a Priority (and a Challenge)

Organizations continue to struggle with data quality, highlighting the difficulty in accessing the data they need. A recurring pain point at the conference was the issue of handling historical data—how to store, access, and analyze it without burdening production systems. The sheer volume of data accumulated over time makes it hard to maintain quality while ensuring that critical information is accessible when needed. Companies need better strategies to tackle these data challenges, especially as they continue to rely on data-driven decisions.

2. Change Management Doesn't End After Go-Live

A crucial reminder from the event was that change management extends far beyond the go-live date of any new system implementation. Many attendees noted that using new systems posed unforeseen challenges, reinforcing the need for continuous support and training. Adapting to a new way of working requires time, and organizations must be proactive in managing change post-implementation to drive user adoption and system optimization.

3. Analytics: A Tool for Better Decision-Making

Another major focus at the conference was the importance of analytics in driving better-informed decisions. Organizations that can effectively leverage their data with the right analytical tools are able to make smarter decisions faster. Workday's commitment to improving analytics was evident throughout the conference, with discussions on how these tools can empower teams across finance, HR, and operations. As businesses evolve, those who excel at using analytics will gain a competitive edge.

4. Don’t Underestimate Data Migration

A key discussion repeated throughout the conference was the difficulty of data migration. Many organizations admitted that they were not fully prepared for the complexities involved in moving large amounts of data into new systems. Migrating data—especially when it spans multiple systems, formats, and decades—requires more than just technical know-how; it demands comprehensive planning and strategy. Workday Rising attendees shared stories of underestimating this process and the lessons learned from the experience.

5. Workday’s “Forever Forward” Vision: The Future of AI and Innovation

The overarching theme of the conference, “Forever Forward”, was perfectly aligned with Workday’s mission to continuously innovate. One of the most exciting announcements was Workday Illuminate™, a next-generation AI product designed to accelerate decision-making and problem-solving in HR and finance. In a world moving at lightning speed, Workday’s new AI tools, combined with Orchestrate and Extend, are unlocking new possibilities for businesses to grow and innovate. This forward-thinking approach signals a future where AI is central to business operations, and organizations that leverage these technologies can achieve exponential growth.

As Workday continues to push the boundaries of what’s possible, companies have an opportunity to follow suit, embracing new technologies and strategies to stay ahead in a fast-paced world. If your organization is looking to tackle any of these key areas, the Definian team would love to help. As an official Workday partner, we have helped many companies successfully migrate to Workday and unlock the full potential of their data beyond implementation.

Premier International (now Definian) Appears on Prestigious Inc. 5000 List for Second Time

Definian Makes the 2024 Inc. 5000, Through Continued Growth and Success

NEW YORK, August 13, 2024 – Inc. revealed today that Premier International (now Definian) ranks No. 3604 on the 2024 Inc. 5000, its annual list of the fastest-growing private companies in America. The prestigious ranking provides a data-driven look at the most successful companies within the economy’s most dynamic segment—its independent, entrepreneurial businesses. Microsoft, Meta, Chobani, Under Armour, Timberland, Oracle, Patagonia, and many other household-name brands gained their first national exposure as honorees on the Inc. 5000.

“Making the Inc. 5000 list for the second time is a testament to the value we deliver to our clients and the dedication and expertise of our team at Definian,” states Craig Wood, CEO of Definian. “This achievement is exceptionally rare for a 39-year-old company and underscores our unwavering commitment to providing exceptional data services across the entire data value chain that drive success for our clients. We are honored to be recognized among such esteemed companies and will continue to prioritize excellence and innovation, ensuring our clients achieve their goals with the support of our talented team.”

The Inc. 5000 class of 2024 represents companies that have driven rapid revenue growth while navigating inflationary pressure, the rising costs of capital, and seemingly intractable hiring challenges. Among this year’s top 500 companies, the average median three-year revenue growth rate is 1,637 percent. In all, this year’s Inc. 5000 companies have added 874,458 jobs to the economy over the past three years.

For complete results of the Inc. 5000, including company profiles and an interactive database that can be sorted by industry, location, and other criteria, go to www.inc.com/inc5000.

“One of the greatest joys of my job is going through the Inc. 5000 list,” says Mike Hofman, who recently joined Inc. as editor-in-chief. “To see all of the intriguing and surprising ways that companies are transforming sectors, from health care and AI to apparel and pet food, is fascinating for me as a journalist and storyteller. Congratulations to this year’s honorees, as well, for growing their businesses fast despite the economic disruption we all faced over the past three years, from supply chain woes to inflation to changes in the workforce.”

About Definian

Definian is a data services company that helps organizations unleash the full potential of their data. The company delivers services across the entire data value chain, including data strategy, data value realization and the critical areas of data fundamentals that are the backbone of maximizing data value. Driven by our values which we refer to as “The Definian Way”, we are relentlessly focused on our clients and their success, and we have a great time working together to deliver on our promises. We believe that recruiting and retaining top talent are key to serving our clients and are proud to have our culture recognized by others as well. In addition to the Inc. 5000 list of America’s Fastest-Growing Private Companies, Definian has been named one of The Best and Brightest Companies to Work For® in Chicago, one of Crain’s top 100 Best Places to Work in Chicago, and one of the Built In Best Places to Work, both in the US and Chicago.

More about Inc. and the Inc. 5000

Methodology

Companies on the 2024 Inc. 5000 are ranked according to percentage revenue growth from 2020 to 2023. To qualify, companies must have been founded and generating revenue by March 31, 2020. They must be U.S.-based, privately held, for-profit, and independent—not subsidiaries or divisions of other companies—as of December 31, 2023. (Since then, some on the list may have gone public or been acquired.) The minimum revenue required for 2020 is $100,000; the minimum for 2023 is $2 million. As always, Inc. reserves the right to decline applicants for subjective reasons. Growth rates used to determine company rankings were calculated to four decimal places.
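To make the arithmetic concrete, here is a minimal sketch of a percentage-growth calculation with the stated revenue minimums. It assumes the standard percent-change formula; Inc.'s exact computation is not described here, and the function names and revenue figures are hypothetical.

```python
# Illustrative sketch only: assumes the standard percent-change formula.
# Function names and sample revenue figures are hypothetical.

MIN_REVENUE_2020 = 100_000      # minimum 2020 revenue stated in the methodology
MIN_REVENUE_2023 = 2_000_000    # minimum 2023 revenue stated in the methodology


def revenue_growth_pct(revenue_2020: float, revenue_2023: float) -> float:
    """Return percentage revenue growth from 2020 to 2023, to four decimal places."""
    if revenue_2020 <= 0:
        raise ValueError("2020 revenue must be positive")
    return round((revenue_2023 - revenue_2020) / revenue_2020 * 100, 4)


def meets_revenue_minimums(revenue_2020: float, revenue_2023: float) -> bool:
    """Check only the two revenue thresholds, not the other eligibility rules."""
    return revenue_2020 >= MIN_REVENUE_2020 and revenue_2023 >= MIN_REVENUE_2023


# Hypothetical company: $500,000 in 2020 growing to $2,500,000 in 2023.
growth = revenue_growth_pct(500_000, 2_500_000)
eligible = meets_revenue_minimums(500_000, 2_500_000)
print(f"growth: {growth}%  meets minimums: {eligible}")
```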

About Inc.

Inc. Business Media is the leading multimedia brand for entrepreneurs. Through its journalism, Inc. aims to inform, educate, and elevate the profile of our community: the risk-takers, the innovators, and the ultra-driven go-getters who are creating our future. Inc.’s award-winning work achieves a monthly brand footprint of more than 40 million across a variety of channels, including events, print, digital, video, podcasts, newsletters, and social media. Its proprietary Inc. 5000 list, produced every year since its launch as the Inc. 100 in 1982, analyzes company data to rank the fastest-growing privately held businesses in the United States. The recognition that comes with inclusion on this and other prestigious Inc. lists, such as Female Founders and Power Partners, gives the founders of top businesses the opportunity to engage with an exclusive community of their peers, and credibility that helps them drive sales and recruit talent. For more information, visit www.inc.com.

For more information on the Inc. 5000 Conference & Gala, to be held from October 16 to 18 in Palm Desert, California, please visit http://conference.inc.com/.

Mastering Data Migration for Configured Products in Steel and Process Manufacturing

In industries like steel manufacturing, where configured products and intricate process manufacturing intersect, data migration becomes a critical yet challenging endeavor. These environments demand a meticulous approach to ensure that systems not only capture the nuances of the manufacturing process but also provide the agility to adapt to evolving product requirements.

The Complexity of Configured Products

Configured products in manufacturing often arise from dynamic and highly customized processes. For example, steel manufacturing might involve rolling a particular grade of steel with specific thickness, hardness, and other service quality requirements. When a new product variation emerges, it requires recalibrating existing configurations to meet unique demands.

Migrating data in such scenarios involves more than transferring static datasets. Systems like Manufacturing Execution Systems (MES) must dynamically extrapolate routing steps, testing requirements, and metallurgical constraints to accommodate new product specifications. This adaptability requires data migration to account for:

- Overlapping Specifications: Industry standards (e.g., ASTM), customer-specific requirements, and in-house metallurgical expertise often result in overlapping specifications. Data systems must reconcile these layers without compromising quality or compliance.

- Dynamic Configuration Rules: New product variations demand that the system derive routing steps and process parameters on the fly. Legacy systems may lack the sophistication to handle such real-time adaptations during migration.

- Expert Knowledge Integration: In-house trade knowledge must be encoded into the system during migration to ensure operational continuity. To cite highly specific examples, this might include insights into how manganese levels influence hardness and machinability, or limitations of specific machines on certain steel gauges.

Process Manufacturing: A Data Challenge

Process manufacturing often involves intricate recipes, variable inputs, and precise control over chemical or physical transformations. This approach demands robust systems to track materials, monitor quality, and adjust production parameters in real-time to account for fluctuations in raw materials, equipment performance, and environmental conditions.

For example, achieving the desired hardness in steel might require keeping alloying-metal levels within strict tolerances during the smelting process, using precise rolling speed and pressure in the cold rolling process, and performing special testing to guarantee the quality of the final product. Such precision demands accurate and interoperable data systems. However, migrating data for process manufacturing often uncovers pain points such as:

- Data Normalization: Legacy systems might store data inconsistently, making it difficult to normalize during migration.

- Validation Gaps: Older systems often lack robust validation mechanisms, leading to the proliferation of duplicates and errors in migrated data.

- Interconnected Dependencies: Process parameters are tightly interlinked, meaning a change in one parameter can ripple through multiple stages. Migrating such data requires preserving these dependencies.
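As a rough illustration of the normalization and duplicate problems listed above, the sketch below cleans a few legacy records before load. The field names (`grade`, `thickness`, `unit`), the sample records, and the choice of millimeters as the target unit are all assumptions made for the example, not a description of any particular system.

```python
from dataclasses import dataclass

MM_PER_INCH = 25.4


@dataclass(frozen=True)
class CoilSpec:
    """Normalized target record (hypothetical shape)."""
    grade: str
    thickness_mm: float


def normalize(record: dict) -> CoilSpec:
    """Standardize the grade code and convert thickness to millimeters."""
    grade = record["grade"].strip().upper().replace(" ", "")
    value = float(record["thickness"])
    unit = record.get("unit", "mm").strip().lower()
    if unit in ("in", "inch"):
        value *= MM_PER_INCH
    elif unit != "mm":
        raise ValueError(f"unknown unit: {unit!r}")
    return CoilSpec(grade, round(value, 3))


def normalize_and_dedupe(records: list) -> list:
    """Normalize every record and keep the first occurrence of each spec."""
    seen = set()
    result = []
    for record in records:
        spec = normalize(record)
        if spec not in seen:
            seen.add(spec)
            result.append(spec)
    return result


# Hypothetical legacy rows: the first two describe the same product
# but were keyed differently in the source system.
legacy = [
    {"grade": "a36 ", "thickness": "0.25", "unit": "in"},
    {"grade": "A 36", "thickness": "6.35", "unit": "mm"},
    {"grade": "A572", "thickness": "10", "unit": "MM"},
]
clean = normalize_and_dedupe(legacy)
for spec in clean:
    print(spec)
```

Duplicates only become visible after normalization, which is why the sketch normalizes first and deduplicates second.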

Bridging Legacy Systems with Modern Solutions

Steel companies often transition from legacy systems to modern platforms like Oracle Cloud ERP or MES solutions to address these complexities. However, successful migration hinges on aligning the unique demands of process manufacturing with the capabilities of the target system. Key strategies include:

- Data Profiling and Cleansing: Before migration, it’s crucial to conduct a thorough data audit to identify gaps, redundancies, and inconsistencies. For example, reconciling varying manganese specifications across overlapping datasets ensures uniformity.

- Metadata Management: Leveraging metadata can help capture relationships and rules, such as specific hardness-to-thickness mappings or grade-specific constraints. This ensures the target system can replicate complex configurations seamlessly.

- Automated Validation Frameworks: Incorporating automated validation rules during migration can prevent the introduction of errors. For instance, systems can flag anomalies where customer specifications diverge from in-house metallurgical constraints.

- Collaboration Between Experts and Technologists: Bridging the gap between metallurgical experts and IT teams is essential. For instance, translating expert knowledge into system rules can preserve critical insights during migration.
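The automated validation idea above can be sketched as a simple rule that flags customer specifications reaching outside in-house metallurgical limits. This is a minimal illustration, not a production framework; the `Range` type, the product IDs, and the numeric limits are hypothetical.

```python
from typing import List, NamedTuple


class Range(NamedTuple):
    """Inclusive percentage range for one element, e.g. manganese."""
    low: float
    high: float


def validate_customer_range(product_id: str, customer: Range, in_house: Range) -> List[str]:
    """Return human-readable issues; an empty list means the record passes."""
    issues = []
    if customer.low > customer.high:
        issues.append(f"{product_id}: customer range is inverted")
    if customer.low < in_house.low or customer.high > in_house.high:
        issues.append(
            f"{product_id}: customer range {customer.low}-{customer.high} "
            f"extends beyond in-house limits {in_house.low}-{in_house.high}"
        )
    return issues


# Hypothetical in-house manganese limits and two customer specs.
in_house_mn = Range(0.9, 1.1)
flagged = validate_customer_range("P-100", Range(0.8, 1.2), in_house_mn)
passed = validate_customer_range("P-200", Range(0.95, 1.05), in_house_mn)

for issue in flagged:
    print(issue)
```

Returning a list of issues instead of raising on the first failure lets a migration run report every anomaly in a batch for expert review.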

Lessons Learned from Real-World Scenarios

A recurring theme in data migration projects for configured product and process manufacturing is the integration of expert knowledge with system capabilities. For example, metallurgists often impose stricter constraints than industry standards, such as narrower manganese ranges, to optimize machine performance. Migrating this implicit knowledge into explicit system rules is essential but requires collaboration across disciplines.

Additionally, migrating overlapping specifications highlights the need for robust reconciliation processes. Consider a scenario where ASTM standards specify a manganese range of 0.8-1.2%, but customer requirements and in-house expertise demand narrower ranges for certain products. Migration efforts must encode these nuances to prevent downstream production issues.
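One simple way to encode this reconciliation is to take the intersection of all applicable ranges, so the effective limit is the tightest one that satisfies every source. In the sketch below, the ASTM range comes from the scenario above, while the customer and in-house values and the function name are hypothetical.

```python
from typing import Dict, Tuple

Bounds = Tuple[float, float]  # (low, high), inclusive, in percent


def reconcile(ranges: Dict[str, Bounds]) -> Bounds:
    """Intersect all ranges; fail loudly if the sources cannot all be met."""
    low = max(bounds[0] for bounds in ranges.values())
    high = min(bounds[1] for bounds in ranges.values())
    if low > high:
        raise ValueError(f"specifications do not overlap: {ranges}")
    return (low, high)


manganese_specs = {
    "astm": (0.8, 1.2),       # range from the scenario above
    "customer": (0.9, 1.2),   # hypothetical customer requirement
    "in_house": (0.85, 1.1),  # hypothetical metallurgist constraint
}
effective = reconcile(manganese_specs)
print(f"effective manganese range: {effective[0]}-{effective[1]}%")
```

Raising an error when the ranges do not overlap surfaces conflicting requirements during migration instead of on the production floor.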

Enabling Future-Ready Manufacturing

Beyond solving immediate challenges, data migration for configured products and process manufacturing must lay the groundwork for future scalability and innovation. This includes:

- Integration with Advanced Analytics: Modern systems equipped with advanced analytics can optimize production by identifying trends and anomalies in real-time.

- Support for Sustainability Goals: Migrated data should enable manufacturers to track and reduce environmental impact, aligning with broader industry sustainability initiatives.

- Agile Configuration Management: Systems must allow for rapid reconfiguration to address evolving customer demands or market conditions.

Conclusion

Data migration for configured product and process manufacturing, particularly in the steel industry, is a multidimensional challenge. By addressing overlapping specifications, dynamic configuration rules, and expert knowledge integration, manufacturers can ensure a seamless transition to modern systems. This not only resolves current complexities but also sets the stage for a more agile and efficient manufacturing future.

Success hinges on a collaborative approach, where technical solutions are informed by deep industry expertise. As manufacturers continue to adopt cutting-edge platforms, the lessons learned from these migrations will serve as a blueprint for navigating the intricate relationship between data, processes, and innovation. To explore how your organization can overcome these challenges and leverage best practices in data migration, connect with our team of experts today and take the first step toward a smarter, more resilient future.

The Definian Story: A Legacy of Data Leadership

Change is inevitable, especially in technology.

For 40 years, we operated as Premier International, helping organizations solve their most complex data challenges. Since our founding in 1985, our work has always been about more than just moving information from one system to another. It has been about transforming data into clarity and outcomes that matter. We were pioneers in this space, and over time both the industry and our role within it have evolved.

Our new brand, Definian, reflects that evolution.

Defining What’s Next

Definian captures the idea of helping organizations define what comes next in their data journey. Data is now the heartbeat of every modern enterprise, and our role is to help leaders harness it with precision and purpose. We guide companies through every stage so they can unlock opportunities, spark innovation, and achieve results that matter.

What We Do

We are an AI-enabled company, built on the belief that strong data foundations are the key to unlocking the power of technology. AI is both how we work and what we deliver. It shapes our approach across strategy, modernization, and insights, and it also defines a dedicated area of focus where advanced methods like machine learning, automation, and predictive analytics open new possibilities.

That journey is shaped by what we call the Definian Data Value Chain, organized into four areas of expertise:

- Strategy: Defining AI strategies, establishing data-driven approaches, and designing future-state architectures that provide clarity and direction.

- Modernization: Delivering governance, quality and observability, master data management, migration, engineering, and archiving to create trusted, future-ready systems.

- Insights: Turning data into decision-making power through visualization, reporting, analytics, and modeling.

- AI & Automation: Applying data science, machine learning, predictive analytics, and workflow automation to open entirely new possibilities.

This combination gives organizations the clarity, infrastructure, and insights they need to use AI responsibly and effectively and to deliver measurable business value.

The Story Behind the Name

The name Definian was chosen with care. It comes from the word define, signaling clarity, direction, and purpose. Just as we help leaders define strategies and outcomes, Definian itself embodies precision and intentionality. The name also carries a sense of forward movement, not only defining where organizations are today, but guiding them toward what comes next.

Definian is more than a name. It is a statement of our promise: to create definition in a world of complexity, and to turn data into possibility.

The Design and Colors

As we step into our future as Definian, our logo and visual identity tell a story that is both deeply rooted and forward-looking.

The logotype itself is modern and intentional, with lowercase letters that convey confidence without pretense. The open corners of the “d” and “n” suggest progress and momentum, while the two dotted “i”s in Definian green represent the dual nature of our work: technical precision and creative possibility.

The bold blue is a deliberate evolution of Premier’s signature color, honoring our 40-year legacy while signaling a stronger, more confident vision. Complementing it is a fresh green, chosen to introduce warmth and approachability. Together, the palette reflects both precision and partnership, the pillars that define how we serve our clients.

Every element was designed with purpose, reflecting who we are, what we value, and how we guide our clients toward the future.

Most Importantly, What Has Not Changed

What has not changed is our foundation. We continue to leverage our deep expertise across the full data lifecycle, delivering decades of meaningful results. Our trusted partnership model continues to put clients first, and our focus remains on turning data into outcomes that fuel innovation, including AI-enabled solutions.

Definian represents more than a new brand. It is our renewed commitment to helping organizations see the path ahead and navigate it with confidence. When data is harnessed with purpose, and when it is ready to power AI, it becomes more than information. It becomes possibility.

The future is here. And the path is clear.

We are Definian.

———

(Note: Our fully updated website will be coming in Fall 2025. For more information on Definian, please read the press release that accompanied our brand launch.)

Data Governance: Taming the Wild Family Circus for Indian Companies

Picture this: Your company’s data is like a big, chaotic family household. You’ve got teenagers hoarding secrets in their rooms, toddlers finger-painting on important documents, and that one uncle who keeps bringing home random objects he found on the street. Sound familiar? Well, welcome to the world of data governance — it’s time to bring some order to this madhouse!

What is Data Governance?

Data governance is like being the superhero parent in this family circus. It involves:

- Knowing where everyone is and what they’re up to (tracking your data)

- Setting house rules that everyone (mostly) follows (creating data policies)

- Making sure little Timmy doesn’t flush the family jewels down the toilet (protecting valuable data)

- Keeping the nosy neighbors (hackers) from peeking through your windows (data security)

Why Should Indian Companies Invest in Data Governance?

- Avoiding Family Feuds (and Fines): Remember when your kids broke that priceless vase playing indoor cricket? Poor data practices can lead to similarly expensive disasters. With the new Digital Personal Data Protection Act (DPDPA) coming, you don’t want the government playing the role of an angry grandmother!

- Winning “Family of the Year” Award: When your family behaves well in public, everyone adores you. Similarly, good data governance makes customers and partners trust you more. It’s like winning the “Most Responsible Data Family” award!

- Finding the TV Remote (and Insights) Faster: Ever spent hours searching for the remote only to find it under the sofa? Good data governance helps you find the right data quickly, leading to better decisions. No more data treasure hunts!

- Saving on Grocery Bills (and Operational Costs): Proper data management is like meal planning for a family of 12. It saves money, reduces waste, and prevents buying that fifth bottle of ketchup you didn’t need.

- Being Ready for Surprise Visits: Whether it’s unexpected in-laws or a data audit, good governance helps you quickly tidy up and put on a good show.

- Impressing the International Relatives: When your family home is well-organized and welcoming, relatives from around the world are eager to visit and stay connected. Strong data practices make your company attractive to global partners.

How Data Governance Improves India’s Global Standing

- Becoming the Favorite Cousin: When Indian companies nail data governance, suddenly India becomes the cousin everyone wants to hang out with at the global family reunion.

- Speaking the Same Language: No more awkward family gatherings where no one understands each other. Aligning with global data standards is like everyone finally agreeing on which cricket team to support.

- Winning the Family Talent Show: India can show off its innovative data practices like a kid showing off a new dance routine — impressing everyone and maybe winning a few competitions.

- Attracting the Rich Uncles: Just as a well-behaved family attracts generous relatives, good data governance can make India more appealing to foreign investors. “Look how responsible they are with their data! Let’s give them some money to play with.”

- Having a Say in Family Decisions: With great data powers comes great responsibility — and influence. India can help shape global data policies like that one charismatic cousin who somehow always decides where the next family vacation will be.

Why Invest Now?

The DPDPA is like a strict new babysitter coming to town. Before they arrive, you’d better:

- Clean up the mess under the bed (assess your current data practices)

- Invest in some child-proofing equipment (get the right data tools and training)

- Establish family rules (develop data governance strategies)

- Teach everyone to pick up their own socks (create a culture of data responsibility)

Conclusion

Data governance isn’t just about following rules; it’s about turning your data family from the chaotic bunch that everyone gossips about into the enviable crew that wins “Best in Show” at the global data dog show. By investing in data governance now, Indian companies aren’t just preparing for a new babysitter (DPDPA); they’re setting themselves up to be the family that every other country wants their kids to have playdates with.

Remember, a well-governed data family doesn’t just avoid time-outs — it thrives, succeeds, and might even score an invitation to the fancy international family reunions. So, put on your superhero cape, gather your unruly data children, and let’s show the world that Indian companies know how to run a tight ship… or at least a ship where not everything is on fire all the time!

What Separates a Data Laggard from a Data Leader?

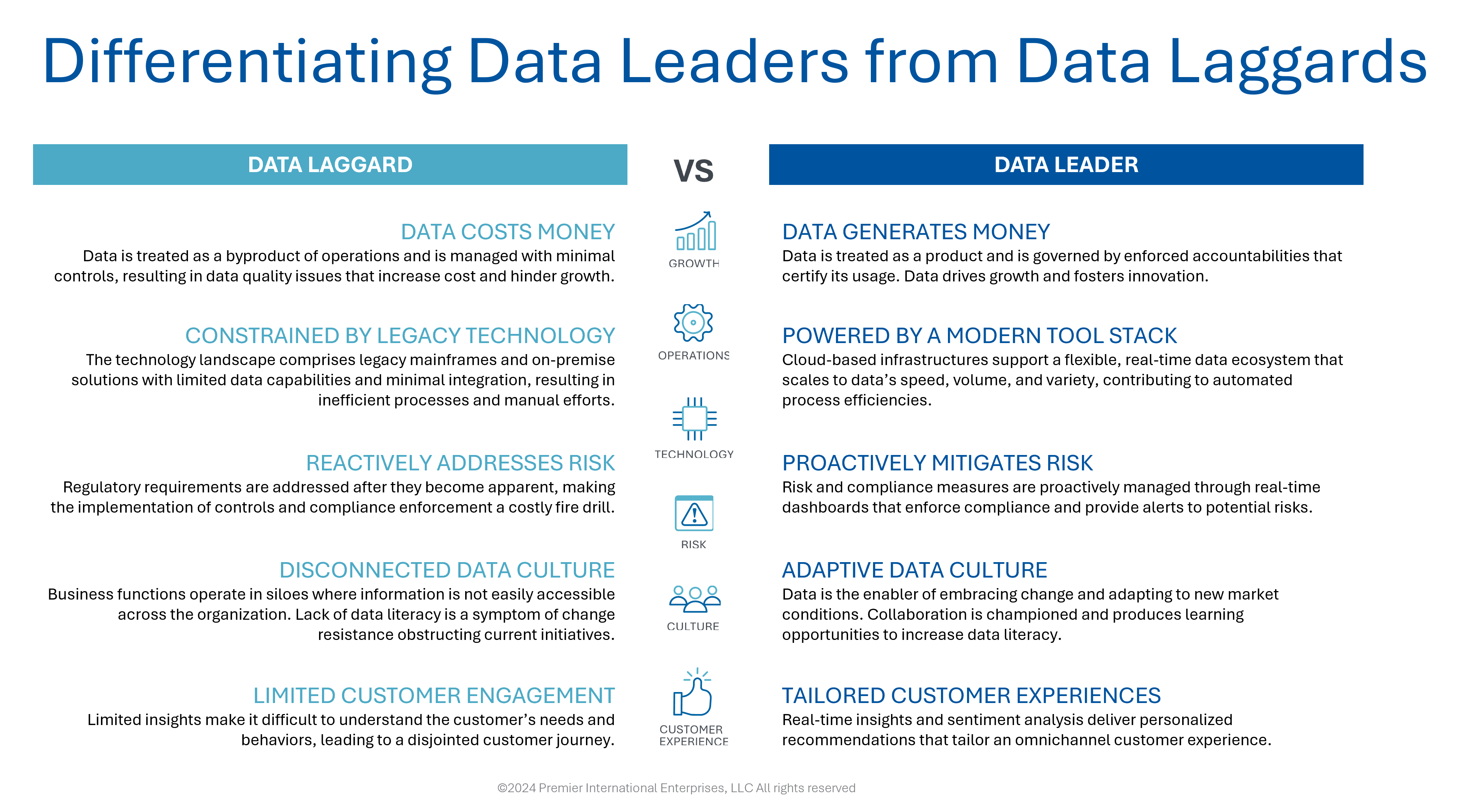

The ability to leverage data effectively can be the deciding factor between business success and obsolescence. Organizations that excel in harnessing data are often referred to as "data leaders," while those that struggle with data utilization are labeled "data laggards." Understanding the distinctions between these two categories can provide valuable insights for businesses aiming to ascend the data maturity curve.

1. Data Culture: Disconnected vs. Adaptive

Data Laggards often operate with a disconnected data culture. In these organizations, business functions are siloed, making information difficult to access across departments. This fragmentation is exacerbated by a lack of data literacy and resistance to change, which obstructs current initiatives and hampers overall efficiency.

Data Leaders, in contrast, foster an adaptive data culture. Here, data is viewed as a catalyst for embracing change and adapting to new market conditions. Collaboration is encouraged, creating learning opportunities that enhance data literacy across the organization. This culture of adaptability ensures that data is not just collected but also understood and utilized effectively.

2. Technology Infrastructure: Legacy Systems vs. Modern Data Stack

Data Laggards are often constrained by legacy technology. These organizations rely on outdated mainframes and on-premise solutions that offer limited data capabilities and minimal integration. This reliance on legacy systems results in inefficient processes and a high degree of manual effort, stifling innovation and responsiveness.

Data Leaders leverage a modern data stack. They utilize cloud-based infrastructures that support flexible, real-time data ecosystems. These systems can scale to accommodate the speed, volume, and variety of data, enabling automated processes and improving overall efficiency. This modern approach not only streamlines operations but also provides a robust foundation for future growth.

3. Risk Management: Reactive vs. Proactive

Data Laggards tend to address risk in a reactive manner. Regulatory requirements are often met only after issues arise, resulting in costly and inefficient compliance efforts. This reactive stance leaves organizations vulnerable to unforeseen risks and regulatory challenges.

Data Leaders adopt a proactive approach to risk management. They utilize real-time dashboards to enforce compliance and provide alerts to potential risks before they become critical issues. This proactive stance not only minimizes risk but also ensures that compliance measures are integrated seamlessly into daily operations, reducing costs and enhancing security.

4. Customer Engagement: Limited vs. Tailored

Data Laggards suffer from limited customer engagement. Due to insufficient insights, these organizations struggle to understand customer needs and behaviors, leading to a fragmented and disjointed customer journey. This lack of engagement can result in lower customer satisfaction and loyalty.

Data Leaders excel in creating tailored customer experiences. They use real-time insights and sentiment analysis to deliver personalized recommendations, crafting an omnichannel experience that resonates with individual customers. This tailored approach enhances customer satisfaction, loyalty, and overall business growth.

5. Data Value: Costly Byproduct vs. Growth Driver

For Data Laggards, data is often seen as a costly byproduct of operations. Managed with minimal controls, data quality issues abound, leading to increased costs and hindered growth. This perspective prevents organizations from recognizing the full potential of their data assets.

Data Leaders treat data as a valuable product. They enforce robust governance and accountability measures to ensure data quality and certify its usage. By managing data as a critical asset, these organizations drive growth and foster innovation, turning data into a powerful engine for business success.

The journey from being a data laggard to becoming a data leader requires a fundamental shift in culture, technology, risk management, customer engagement, and data valuation. Organizations that embrace these changes can unlock the full potential of their data, transforming it from a byproduct into a strategic asset that drives growth, innovation, and competitive advantage. By understanding and implementing the strategies of data leaders, businesses can position themselves at the forefront of the digital economy, ready to navigate the complexities of the modern market with agility and confidence.

Contact Definian today to engage with one of our data experts who can help you start applying data-first strategies to make the most of your information, gain a competitive edge by analyzing insights that inform decisions, and create value for your organization.

Premier International Rebrands as Definian to Help Organizations Define What’s Next in the Age of AI

FOR IMMEDIATE RELEASE

CHICAGO, August 25, 2025 – After four decades as Premier International, the company today announced its new name and identity: Definian.

For more than 40 years, the firm has partnered with global enterprises to solve complex data challenges, from modernizing systems to advancing analytics. With its rebrand, Definian signals both continuity and change: the trusted partner organizations have relied on since 1985, now with a renewed focus on preparing leaders for the opportunities and demands of AI.

“Definian marks our evolution into an AI-enabled company,” said Craig Wood, CEO. “We’ve built on decades of experience helping organizations achieve clarity with their data, but our focus is squarely on what’s next: giving leaders the strategy, modernization, and insights they need to accelerate their AI journeys.”

The name Definian is inspired by the idea of defining what’s next. Data is no longer a back-office function; it is the foundation of every modern enterprise and the starting point for AI. Definian exists to help organizations make their data reliable, ready, and actionable, equipping leaders to move forward with confidence.

“What hasn’t changed is how we work,” said Mikel Naples, President and COO. “Our clients know us as a trusted partner who delivers measurable results. Definian is about sharpening that promise and bringing precision and purpose to every engagement so our clients can achieve outcomes that matter.”

Definian continues to build on what has always set the firm apart: four decades of experience across the data lifecycle, a proven record of results, and a partnership model that puts clients first. The company now brings those strengths into an AI era, combining deep data expertise with modern capabilities to help organizations prepare, adapt, and accelerate responsibly.

About Definian

Definian helps organizations define what’s next by unlocking the full value of their data. With expertise spanning data strategy, modernization, advanced analytics, and AI, Definian equips leaders to harness data with clarity and precision throughout their digital evolution.

Founded in 1985 as Premier International and rebranded in 2025, Definian has guided thousands of successful initiatives for global enterprises, technology providers, and system integrators. Combining four decades of data expertise with an AI-enhanced approach, Definian ensures organizations achieve measurable outcomes and sustained advantage in a world defined by data.

Understanding the Importance of Data Governance

Today, more than ever, organizations generate and rely on vast amounts of data to drive decision-making, innovation, and competitive advantage. Unfortunately, not everyone in an organization understands the importance of a formal data governance discipline and the risks associated without it. Effective data governance ensures data quality, security, and compliance, while poor data governance can lead to significant risks and increased costs. This article explores the critical role of data governance, the risks of neglecting it, and the opportunities it presents for forward-thinking organizations.

What is Data Governance?

Data governance encompasses the policies, procedures, and standards that manage the availability, usability, integrity, and security of data within an organization. Effective data governance aims to guide behaviors on how data is defined, produced, and used across the organization. It ensures that data is consistent, trustworthy, and used responsibly by:

- Establishing Data Ownership: Clearly defining who owns and is responsible for data across the organization.

- Data Quality Management: Ensuring data accuracy, completeness, and reliability.

- Data Security: Protecting data from unauthorized access and breaches.

- Compliance: Adhering to regulatory requirements and industry standards.

- Data Lifecycle Management: Managing data from creation through disposal.

The Risks of Poor Data Governance

Neglecting data governance can lead to numerous risks, including:

- Data Breaches and Security Issues: Without stringent data governance, sensitive information can be exposed to cyberattacks, resulting in data breaches. These incidents can lead to financial losses, reputational damage, and legal penalties. For example, the 2017 Equifax data breach exposed the personal data of over 147 million people, highlighting the catastrophic consequences of inadequate data governance and doing lasting damage to the company’s brand.

Conversely, effective data governance presents opportunities, including:

- Improved Regulatory Compliance: Clear data standards and policies help organizations adhere to regulatory requirements and industry standards, reducing exposure to the legal penalties noted above.

- Increased Operational Efficiency: Streamlined data governance processes eliminate redundancies and improve data accessibility, leading to greater operational efficiency. For instance, a global steel manufacturer reduced costs by hundreds of millions of dollars by streamlining its supply chain data, integrating internal and external data flows, and using the data to optimize its operations.

- Better Risk Management: Proactive data governance helps organizations identify and mitigate risks related to data security and integrity. This enables them to respond swiftly to potential threats and minimize their impact.

Actionable Recommendations for Implementing Data Governance

To harness the benefits of data governance, organizations should consider the following actionable steps:

- Craft a Strategic Roadmap: Identify the current data governance needs and capabilities among business and technology stakeholders to develop a business case. Establish a vision for data governance that aligns with business objectives and craft a strategic roadmap outlining key initiatives over a multi-year period. This will formalize an agile data governance function that will iteratively scale across the entire organization.

- Formalize an Operating Model: Design an operating model that aligns with the organizational culture to minimize the impacts of change when formalizing a data governance discipline. Clearly define and communicate the roles and responsibilities for members participating in the data governance program. Acknowledge data producers as contributors to defining and complying with data standards and policies. Establish a Data Governance Council to charter a formal data governance program sponsored by executive leadership.

- Compile an Inventory of Critical Information Standards and Policies: Profile data objects and break down business processes to develop a catalog of critical data elements needed for operations, analytics, financial reporting, and regulatory compliance. Rationalize each element and collaborate to establish a working definition, expected standards, and policies for how the data is created and used across the organization. Socialize the catalog outputs so that they are easily discoverable by data producers and consumers.

- Implement a Data Quality Framework: Deploy a framework that enables data quality to be measured against the standards defined in the critical information catalog. Socialize results to stakeholders and data stewards for review. Develop tactical plans to remediate exceptions and improve data quality to meet governed expectations. Monitor data quality throughout its lifecycle to identify improvement opportunities from a people, process, technology, and data perspective.

- Educate and Train Employees: Conduct regular training sessions to educate and empower employees about data governance policies, their roles, and the importance of data integrity and security. Use this as a chance to reinforce the value proposition for data governance to mitigate any change resistance.

- Continuously Monitor, Improve, and Scale: Regularly review and update your data governance policies and practices to keep up with changing business needs and regulations. Use feedback and performance metrics to continuously improve the program while measuring efficacy. Maintain a running backlog of data governance use cases and prioritize them based on their value and alignment with business goals. Scale the operating model as you work through various data governance use cases.
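The data quality framework step above can be illustrated with a minimal Python sketch. It assumes a completeness check is one of the governed standards. The `Standard` class, the field names, the thresholds, and the sample records are all hypothetical and only show how a measurement against cataloged expectations might look.

```python
from dataclasses import dataclass

@dataclass
class Standard:
    """A governed expectation for one critical data element (hypothetical)."""
    field: str
    min_completeness: float  # fraction of records that must be populated

def completeness(records, field):
    """Fraction of records where the field is present and not blank."""
    if not records:
        return 0.0
    populated = 0
    for record in records:
        value = record.get(field)
        if value is not None and str(value).strip():
            populated += 1
    return populated / len(records)

def measure(records, standards):
    """Return one result row per standard: field, score, target, pass/fail."""
    results = []
    for standard in standards:
        score = completeness(records, standard.field)
        results.append({
            "field": standard.field,
            "score": score,
            "target": standard.min_completeness,
            "passed": score >= standard.min_completeness,
        })
    return results

# Illustrative sample data, not taken from any real system.
records = [
    {"customer_id": "C1", "email": "a@example.com"},
    {"customer_id": "C2", "email": ""},
    {"customer_id": "C3", "email": None},
    {"customer_id": "C4", "email": "d@example.com"},
]
standards = [Standard("customer_id", 1.0), Standard("email", 0.9)]
report = measure(records, standards)
```

Rows in `report` where `passed` is false are the exceptions that data stewards would review and plan to remediate.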

For senior executives and leaders overseeing data, implementing robust data governance is not just a best practice but a necessity. Effective data governance mitigates risks, ensures compliance, and unlocks significant opportunities for operational efficiency, informed decision-making, and enhanced customer trust. By prioritizing data governance, organizations can turn their data into a strategic asset, driving innovation and long-term success. By understanding and acting on these principles, executives can steer their organizations towards a future where data is not just managed but leveraged to its fullest potential.

Sources

- Equifax Breach 2017: https://www.ftc.gov/enforcement/refunds/equifax-data-breach-settlement

- Google GDPR Fine 2019: https://www.digitalguardian.com/blog/google-fined-57m-data-protection-watchdog-over-gdpr-violations

- PwC Consumer Trust Survey: https://www.pwc.com/us/en/library/trust-in-business-survey.html

- Good Data Starts with Great Governance: https://www.bcg.com/publications/2019/good-data-starts-with-great-governance

- The Impact of Data Governance: https://www.techtimes.com/articles/304151/20230121/the-impact-of-data-governance-in-multi-cloud-environments-ensuring-security-compliance-and-efficiency.htm

- Risk Management and Data Governance: https://www.microsoft.com/en-us/security/business/security-101/what-is-data-governance-for-enterprise

Overcoming the Hurdles: Getting past the blockers CDOs face to operationalize Data Governance

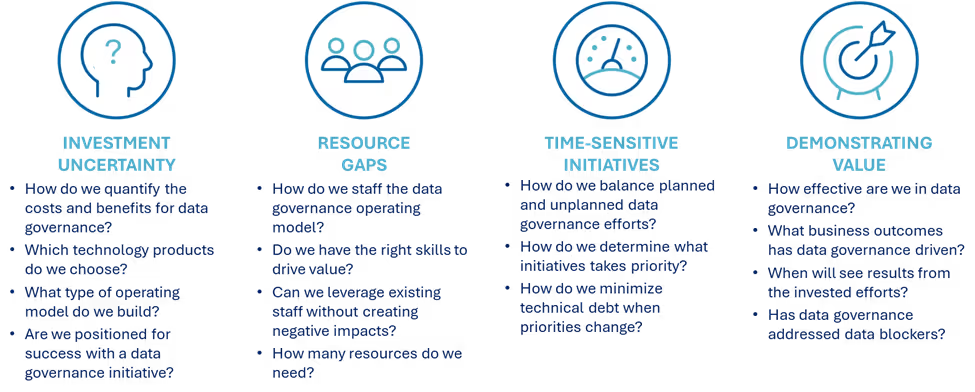

In today’s vast information landscape, the Chief Data Officer (CDO) role is critical for unlocking an organization's data potential. Despite their strategic importance, CDOs face unexpected challenges that hinder their ability to deliver tangible business value, contributing to an average tenure of less than two years. In this article, we explore four key areas that impede a CDO's progress and methods that can help build momentum in achieving data governance objectives.

Investment Uncertainty

Data governance initiatives require investments in resources, technology, and personnel. However, securing buy-in and funding from stakeholders can be daunting, especially when the return on investment (ROI) is not immediately apparent. CDOs must navigate the complexities of justifying the long-term benefits of data governance, which may not yield immediate financial gains. This investment uncertainty can lead to hesitation and resistance from decision-makers, hindering CDOs' ability to execute their strategy.

To mitigate these challenges, CDOs must proactively communicate the strategic value of data governance and its potential to drive operational efficiencies, risk mitigation, and competitive advantages. A simple method CDOs can use to quantify value is to assess long-term impacts through the lens of the cost of doing nothing. They should also prioritize high-impact, low-cost initiatives and seek to align data governance efforts with the organization's immediate business objectives, delivering quick wins that provide incremental business value.

People Gaps

Effective data governance relies heavily on the expertise and collaboration of cross-functional teams. However, organizations often face a shortage of skilled data professionals, including data analysts, data engineers, and data stewards. These gaps are often filled by assigning existing staff additional responsibilities that they lack the time or skills to fulfill. This is further compounded by change resistance, which hinders the CDO's ability to build a data culture. This people gap can create bottlenecks in the implementation of data governance initiatives as CDOs struggle to find the right talent to drive their vision forward.

To address the people gaps, CDOs must build a strong data culture, provide comprehensive training and awareness programs, establish clear roles and responsibilities, and foster cross-functional collaboration. Additionally, they should work closely with their Human Resources department to attract and retain top data talent and engage with executive leadership to secure buy-in and support for data governance initiatives.

Time-Sensitive Initiatives

In a fast-paced business environment, CDOs are often responsible for executing urgent projects that demand immediate attention. However, these time-sensitive initiatives can create conflicts in prioritization, leading to a diversion of resources and focus from long-term data governance initiatives. As a result, operationalizing data governance will lack consistency and continuity.

CDOs must effectively balance addressing short-term demands with maintaining a strong commitment to their overarching data governance roadmap, which is no easy task. Rushing through the implementation of data governance can lead to oversights and errors, resulting in technical debt, data quality issues, and increased complexity in maintaining a unified data governance framework.

To overcome these challenges, CDOs must proactively communicate the strategic value of data governance and its long-term benefits to the business. They should collaborate closely with business leaders to align time-sensitive initiatives with data governance objectives. By prioritizing business needs against the data governance roadmap, the CDO can ensure the right capabilities are developed to generate business value incrementally.

Inability to Demonstrate Value

One of the key challenges that CDOs face is the difficulty in effectively communicating and demonstrating the tangible value of data governance initiatives to stakeholders. Data governance is often seen as an abstract concept, making it hard to quantify its impact on business outcomes.

CDOs must create compelling narratives and metrics that resonate with decision-makers, demonstrating how data governance can enhance operational efficiencies, reduce risks, and generate new revenue streams. Failing to articulate this value proposition may result in a lack of support and buy-in from key stakeholders. Without this buy-in, obtaining the necessary resources, budget, and organizational commitment to implement and sustain data governance initiatives becomes challenging. If data governance efforts are not seen as providing tangible benefits, the CDO's role and authority may be weakened, hindering their ability to deliver their data governance strategy.

To address this, CDOs should proactively communicate the strategic value of data governance through clear metrics, success stories, and quantifiable business outcomes. When data governance performs well, organizations should experience improved data quality and optimized business processes. Socializing these results is essential to demonstrating the value and outcomes realized with the investment in data governance. By tackling these challenges directly, CDOs can position themselves as strategic leaders, driving a data-driven transformation and unlocking the full potential of their organization's data assets.

At Definian, we assist our partners in understanding the fundamental importance of implementing data governance and emphasize the value proposition by educating leaders about the impacts of inaction. CDOs can tackle these common blockers by employing an agile, multi-faceted approach that aligns data governance initiatives with business objectives and secures the required sponsorship and support from the top down while establishing stewardship accountabilities from the bottom up. Once empowered to overcome these challenges, CDOs can showcase the value of data governance and drive sustainable progress within their organizations. Our objective is to ensure that data governance initiatives receive the necessary sponsorship, support, and resources to unlock the full potential of data as a strategic asset.

Navigating Compliance and Talent Management Through Effective HCM Data Governance

For a 30,000-employee industrials company growing rapidly through acquisitions, maintaining accurate HR data was a constant challenge. Each new acquisition brought an influx of disparate data, inconsistent structures, and compliance risks, particularly when meeting federal reporting requirements like Affirmative Action Plans (AAP), Equal Employment Opportunity (EEO), and Fair Labor Standards Act (FLSA) regulations. These gaps in HCM data fundamentals not only strained resources but also delayed strategic decision-making about talent across the organization.

When the company engaged us, their HR team faced a tangled web of challenges. Data preparation for newly acquired companies took up to six weeks, consuming hours of manual effort. Inconsistent job classifications, mismatched AAP codes, and errors in hierarchical reporting were just a few of the recurring issues. Over the course of our partnership, we implemented a series of targeted interventions to clean up their data, automate quality checks, and establish sustainable HCM data governance processes that transformed their HR function.

Diagnosing and Resolving Systemic HR Data Errors

The first step in addressing the company’s HR data issues was to thoroughly diagnose the root causes of their errors. Our diagnostics uncovered a range of problems, from blank or missing fields to misaligned reporting structures. For instance, many employee records were missing critical information, such as job or position data, making it nearly impossible to maintain compliance with federal reporting requirements. These gaps highlighted the need for better HCM data best practices around record-keeping and validation.

We also encountered complex issues related to hierarchical relationships within the data. In some cases, managers and subordinates were incorrectly assigned, creating circular reporting structures that led to confusion and undermined organizational clarity. Other inconsistencies included discrepancies between positions and their corresponding job records, as well as mismatches between organizational units assigned to employees and their roles.

To resolve these challenges, we implemented:

- Rigorous data validation processes that flagged errors and inconsistencies.

- Systematic cleanup of reporting structures, ensuring clarity and alignment.

- Enhanced reporting templates that captured the necessary data fields to meet compliance requirements.

These efforts not only reduced immediate errors but also laid the groundwork for more robust HCM data governance practices moving forward.
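One way a validation process could flag the circular reporting structures described above is to walk each employee's manager chain and report any loop. The Python sketch below illustrates that idea under assumed inputs. The `manager_of` mapping and the employee IDs are hypothetical, and this is not a description of the tooling used in the engagement.

```python
def find_reporting_cycles(manager_of):
    """Return a list of cycles; each cycle is a list of employee IDs.

    manager_of maps employee ID -> manager ID (None at the top of the
    hierarchy). A manager ID with no record of its own is treated as a top.
    """
    cycles = []
    cleared = set()  # IDs already walked: they reach a top or a reported cycle
    for start in manager_of:
        path = []       # IDs visited on this walk, in order
        position = {}   # ID -> index in path, to slice out the loop
        current = start
        while current is not None and current not in cleared:
            if current in position:
                # We came back to an ID on the current walk: a cycle.
                cycles.append(path[position[current]:])
                break
            position[current] = len(path)
            path.append(current)
            current = manager_of.get(current)
        cleared.update(path)
    return cycles

# Illustrative data: E3 -> E4 -> E5 -> E3 forms a loop; E6 reports into it.
example = {
    "E1": None,
    "E2": "E1",
    "E3": "E4",
    "E4": "E5",
    "E5": "E3",
    "E6": "E3",
}
cycles = find_reporting_cycles(example)
```

Each loop is reported once, and employees who merely report into a loop (such as `E6` here) are not listed as part of it, which keeps the cleanup list focused on the assignments that need correcting.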

Establishing Standardized Validation and Configuration

After addressing the initial wave of data errors, we shifted our focus to preventing future inconsistencies. Standardizing HR data configurations across the parent company and its acquired entities was essential. Disparate systems and manual processes in the acquired companies frequently led to issues such as mismatches between Affirmative Action Plan (AAP) codes and Equal Employment Opportunity (EEO) categories. Similarly, pay scales and personnel subgroups were often misaligned, leading to significant compliance risks and reporting inaccuracies.

We developed standardized templates and automated validation rules that ensured alignment across all entities. For example, we predefined valid combinations of AAP and EEO codes, allowing the system to flag invalid entries before they propagated. We also implemented rules to align job classifications with pay scales, addressing common issues where managerial roles were not correctly tied to corresponding compensation structures.

By creating and deploying these templates and rules, we gave the HR team tools to validate data at the point of entry. This significantly reduced the volume of errors and manual corrections, enabling the team to focus on more strategic tasks. These efforts reinforced the importance of HCM data best practices in ensuring consistent and reliable data.
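The idea of predefining valid code combinations and checking records at the point of entry can be sketched in a few lines of Python. The codes below are placeholders invented for illustration, not real AAP or EEO values, and the field names `aap_code` and `eeo_category` are assumptions.

```python
# Hypothetical whitelist of (AAP code, EEO category) pairs.
# These are placeholder values, not real regulatory codes.
VALID_AAP_EEO = {
    ("AAP-1A", "EEO-1"),
    ("AAP-1B", "EEO-1"),
    ("AAP-2A", "EEO-2"),
}

def validate_record(record):
    """Return a list of error messages for one employee record (empty if valid)."""
    errors = []
    aap = record.get("aap_code")
    eeo = record.get("eeo_category")
    if not aap or not eeo:
        errors.append("missing AAP code or EEO category")
    elif (aap, eeo) not in VALID_AAP_EEO:
        errors.append(f"invalid AAP/EEO combination: {aap} / {eeo}")
    return errors

ok = validate_record({"aap_code": "AAP-1A", "eeo_category": "EEO-1"})
bad = validate_record({"aap_code": "AAP-2A", "eeo_category": "EEO-1"})
blank = validate_record({"aap_code": "", "eeo_category": "EEO-1"})
```

Keeping the valid pairs in a single lookup table means a new combination is approved in one place, and the same table can drive both entry-time checks and later audits.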

Automating Data Audits and Reporting

Data quality is not a one-time fix; it requires continuous monitoring. Recognizing this, we partnered with the HR team to automate their data quality audits. Previously, audits were conducted sporadically and often relied on labor-intensive processes. We introduced automated tools created in Applaud to generate monthly diagnostics, a cornerstone of effective HCM data governance.

These automated reports provided a clear picture of data health across the organization. For example, they identified discrepancies where employees’ organizational units did not align with their assigned positions, or where Fair Labor Standards Act (FLSA) classifications were mismatched. By detecting these errors close to real time, the HR team could address them proactively.

We also tailored the reports to meet the needs of different stakeholders, offering division-level breakdowns as well as consolidated organizational summaries. This allowed the company to prioritize corrections efficiently and maintain high levels of data integrity while adhering to HCM data best practices.
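As a rough illustration of such a diagnostic, the Python sketch below flags employees whose organizational unit differs from the unit their position belongs to, then produces a division-level breakdown and a consolidated total. It is not the Applaud implementation. The record layout, IDs, and division names are hypothetical.

```python
from collections import Counter

def find_org_unit_mismatches(employees, position_org_unit):
    """Return employees whose org unit differs from their position's org unit.

    employees: list of dicts with "id", "division", "position", "org_unit".
    position_org_unit: dict mapping position ID -> expected org unit.
    Employees whose position is unknown are flagged as well.
    """
    flagged = []
    for emp in employees:
        expected = position_org_unit.get(emp["position"])
        if expected is None or emp["org_unit"] != expected:
            flagged.append({**emp, "expected_org_unit": expected})
    return flagged

def summarize_by_division(flagged):
    """Count flagged records per division for a division-level breakdown."""
    return dict(Counter(emp["division"] for emp in flagged))

# Illustrative data only.
employees = [
    {"id": "E1", "division": "North", "position": "P1", "org_unit": "OU-10"},
    {"id": "E2", "division": "North", "position": "P2", "org_unit": "OU-10"},
    {"id": "E3", "division": "South", "position": "P3", "org_unit": "OU-30"},
]
position_org_unit = {"P1": "OU-10", "P2": "OU-20", "P3": "OU-30"}

flagged = find_org_unit_mismatches(employees, position_org_unit)
division_summary = summarize_by_division(flagged)
consolidated_total = len(flagged)
```

The same flagged list feeds both views, so division owners and central HR see consistent numbers.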

Building a Scalable Data Governance Framework

To ensure long-term success, we worked with the company to establish a robust HCM data governance framework. This involved defining clear ownership of HR data fields and creating processes for maintaining data accuracy. We documented standard operating procedures for data validation, error escalation, and corrective actions, ensuring that the HR team could sustain high data quality even as the organization continued to grow.

An integral part of this effort was updating training materials for HR administrators. These materials covered everything from field definitions and job classification standards to the proper configuration of manager assignments. By empowering the HR team with the knowledge and tools they needed, we helped build a culture of accountability and precision—essential for maintaining HCM data fundamentals across a rapidly expanding organization.

Optimizing Processes for Acquired Companies

One of the most significant outcomes of our engagement was the transformation of the data onboarding process for newly acquired companies. Previously, it could take up to six weeks to prepare and integrate HR data from a new acquisition—a process fraught with errors and inefficiencies. By adding field derivations, automated validity checks, and simplified handoffs, we reduced this timeline to just one week of part-time effort.

This optimization not only accelerated integrations but also ensured that acquired companies were aligned with the parent company’s standards from day one. Pre-configured templates and automated validation reduced the burden on both the parent company and the acquired entities, creating a seamless transition. These efforts showcased the practical application of HCM data best practices in real-world scenarios.

The Business Impact: From Chaos to Confidence

By the end of our engagement, the company’s HR function had undergone a dramatic transformation. Key outcomes included:

- Regulatory Compliance: The company achieved consistent compliance with federal AAP, EEO, and FLSA requirements, significantly reducing regulatory risks.

- Enhanced Data Quality: Automated diagnostics and continuous monitoring ensured that errors were caught and corrected before they could impact reporting or decision-making.

- Operational Efficiency: Manual data preparation efforts were reduced by 80%, freeing up valuable time for the HR team to focus on strategic initiatives.

- Scalable Processes: A robust HCM data governance framework positioned the company to manage HR data effectively across future acquisitions.

This transformation highlights the power of mastering HCM data fundamentals. Through strategic interventions in HCM data governance, quality, and migration, we helped this company turn its HR data challenges into strengths, providing a solid foundation for compliance and talent management. As a result, they are now better equipped to understand and leverage their workforce, ensuring their continued growth and success.

By emphasizing HCM data best practices, robust HCM data governance, and sound HCM data fundamentals, organizations can build sustainable frameworks that not only meet compliance standards but also enable strategic workforce management. Let this story inspire your journey to smarter, more reliable HR data.

Equipping a Workday Implementation with Data Governance

Background

Our client, one of the largest US ports, was preparing for a vital multi-year ERP transformation journey with Workday at its core. Acknowledging the pivotal role of data and recognizing gaps in their existing data management capabilities, the CIO sought to ground this transformation initiative with a formal data governance function – imperative for aligning business objectives with data requirements and enabling the adoption of Workday through data fidelity and trust.

Choosing Definian

Definian stood out with a unique value proposition that demonstrated expertise not only in understanding the intricate data requirements of a Workday implementation but also in presenting a strategic vision for data enablement that would drive implementation efforts to success. This included foundational data management disciplines for data governance and data quality, which not only support the ERP go-live but are also required to maintain data integrity afterward. This, coupled with a proven track record in navigating organizations through complex data transformation initiatives, instilled confidence in Port leadership, affirming our suitability as the ideal partner.

The Process

Definian delivered a multi-phased tactical plan to develop and execute a strategy to formalize a data governance discipline at the Port. The approach tailored and launched a data governance framework aligned with the business operating model. Recognizing that data governance was a novel concept at the Port, an educational component was incorporated to facilitate change adoption and operationalize data governance concepts into an ongoing data management discipline. This was accomplished by working through use cases with the council centered on four targeted end-to-end business processes. Following the launch, Definian transitioned data governance facilitation to the Port’s appointed data governance leader and provided them guidance to sustain the momentum required to operationalize the council.

The objectives of each phase were:

Phase 1: Strategic Assessment

· Obtain a current-state understanding through stakeholder engagement

· Envision a desired state for data governance tailored to the Port’s operating model

· Translate gaps into prioritized capability-building initiatives

· Define a strategic roadmap of initiatives to implement data governance

Phase 2: Data Governance Launch

· Charter the launch of an Interim Data Governance Council

· Teach the concepts behind data governance and data quality frameworks

· Develop data governance rigor by working through targeted use cases

Phase 3: Operationalize Data Governance

· Sustain data governance momentum from the initial launch

· Transition facilitation to the Port’s data governance leader

· Compile formal data governance standards and policies for critical information

Results

The initiative was delivered in less than six months, and the outcomes were promising as the Port approached its Workday implementation.

- Provided a shared understanding of the Port’s current data management capabilities and desired vision for data governance

- Launched a centralized, business-driven and IT-supported data governance operating model comprising data owners and subject matter experts

- Established a cross-functional Data Governance Council promoting collaboration in formalizing data requirements, standards, and policies across the Port’s applications, processes, and reports

- Put data governance and data quality concepts into practice through the execution of targeted use cases that generated data governance artifacts

- Achieved consensus with an initial inventory of critical data elements and their supporting definitions, standards, and stewardship accountabilities, providing clarity and eliminating redundant efforts from future Workday data migration and requirements tasks

In conclusion, through strategic implementation of data governance, our client not only embraced the intrinsic value of their data but also secured a resilient foundation for their Workday transformation, ensuring a more rapid and cohesive transition tailored to their long-term goals.