Articles & case studies

Data Governance in the Lake

Data Lakes have been with us for some time now. They were originally named by James Dixon, founder and former CTO of Pentaho, who used the analogy of comparing a cleansed, packaged bottle of water to represent a data mart, as opposed to a large body of water in a more natural state with more water streaming in from various sources to describe the Data Lake. Looking at data repositories in these terms, the Data Lake would contain data in all forms: structured, semi-structured, unstructured and raw, cleansed and standardized and enriched and summarized.

Many of the early Data Lakes were deployed in-house on Hadoop infrastructure, and were largely used by data scientists and advanced analytics practitioners who would take advantage of the vast pool of information to be able to analyze and mine data in ways that had been virtually impossible before. Due to the unpredictable nature of the data, and the fact that it was virtually always accessed as part of a large dataset rather than an individual record, the thought was that the Lake did not lend itself to Data Governance in the same way that a Data Warehouse might. Most Data Lakes went largely ungoverned in the early stages.

More recently, however, Data Lakes have begun to migrate to the Cloud to take advantage of the scalability of processing and storage, and the reduction in infrastructure costs. As they become more cloud-based, companies are beginning to take advantage of the added flexibility and reduced costs to move some of their more traditional structured data to the Data Lake. The intention is to make the data more visible and accessible to people who have a need to use it, but have had difficulty in accessing it due to the siloed nature of existing data repositories, and lack of any index of what is available. Some companies are beginning to move their entire Business Intelligence capability over to the Lake.

The addition of the other BI and data accessibility use cases to the Data Scientist one has had two distinct effects on many Data Lakes, one physical and one not:

- The Data Lake has physically changed by deploying multiple ‘zones’ of data that add the capability to cleanse, summarize and enrich the data to make it available for multiple uses

- The democratization of data and the need for multiple use cases that require that the data be fit for purpose and protected from misuse have meant that Data Governance now must play a much greater role in Data Lakes

Data Lake Structure

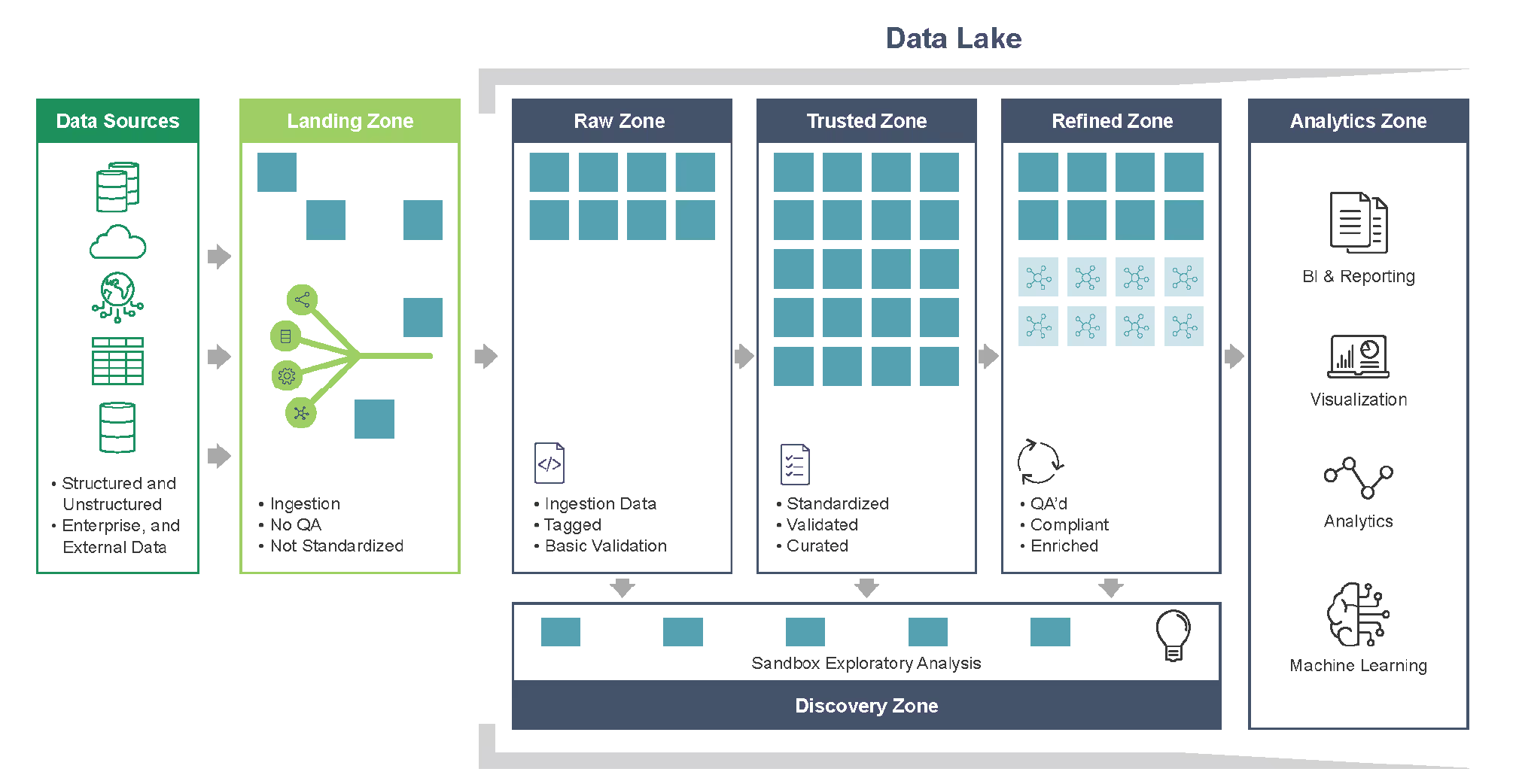

While this paper is not intended to be a deep discussion of the architecture of Data Lakes, it is necessary to look at how they are being deployed in order to talk about the various aspects of Data Governance that may be applicable. This section provides a high-level reference architecture of a Data Lake to facilitate the Data Governance discussion.

The depiction above is by no means a universal model. But it does illustrate the types of zones and the types of data that are being deployed in today’s Data Lakes.

- The Sources are both internal and external and consist of structured, semi-structured and unstructured data.

- While not universally deployed, the Landing zone provides a location for data to be staged prior to ingestion into the Lake. This data is not persisted; it remains in this zone only while it is needed.

- The Raw zone is the first true Data Lake zone. It consists of all the data that is ingested into the Data Lake in its native format. It is stored as-is, and provides lineage and exploration capability. Data in this zone is still largely used by data scientists for advanced analytics and data mining.

- The Trusted zone is where the data is cleansed and standardized. Data Quality is measured and monitored in this zone. The data is secured for authorized access and may be certified as being fit for purpose.

- The Refined zone sees data being enriched, transformed and staged for specific business uses such as reports, models, etc.

- The Discovery zone receives data from all of the zones for sandboxes and exploratory analysis traditionally done by data scientists.

- Finally, the Analytics zone allows the data to be used. It consists of reports, dashboards, ad hoc access capabilities, etc. It may also be used to provide data to internal or external users in forms such as flat files, spreadsheets, etc.
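As a rough illustration, the zones listed above could be recorded as a small configuration that governance tooling reads. The `Zone` class and its `quality_monitored` flag are assumptions made for this sketch; they are not part of any reference architecture or product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Zone:
    name: str
    purpose: str
    quality_monitored: bool = False  # True only where the text says so


# Zone names and purposes follow the list above; the structure is illustrative.
ZONES = [
    Zone("Landing", "stage data prior to ingestion (not persisted)"),
    Zone("Raw", "hold all ingested data as-is, in native format"),
    Zone("Trusted", "cleanse, standardize, secure and certify data",
         quality_monitored=True),
    Zone("Refined", "enrich and transform data for specific business uses"),
    Zone("Discovery", "sandboxes and exploratory analysis"),
    Zone("Analytics", "reports, dashboards, ad hoc access and extracts"),
]


def zones_with_quality_monitoring(zones):
    """Return the names of zones where Data Quality is measured."""
    return [zone.name for zone in zones if zone.quality_monitored]


print(zones_with_quality_monitoring(ZONES))  # ['Trusted']
```

Keeping the zone definitions in one place like this makes it easier to attach governance rules (metadata standards, access checks) to a zone rather than to individual datasets.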

When looking at this structure using the same analogy as James Dixon, the entire data supply chain in all its forms from the natural state through cleansing and filtering to storage both on a large and small scale would now be in the Lake.

Data Democratization

Locating data in the Lake, free of the artificial restrictions that are inherent in siloed data repositories, provides companies many potential benefits in enabling access to data that is useful to them in their daily jobs. But there are some steps that must be taken in order to be able to take full, allowable advantage of these benefits:

- The data must be properly tagged, described, cataloged and certified so that potential users are able to easily find and understand the data.

- Legitimate restrictions such as company classifications, Government regulations (e.g. HIPAA, GDPR, CCPA), ethical considerations and contractual terms must be followed. There is a risk that making the data available to those who need it may also make it available to those who should not have it.

Both of these considerations can be addressed by developing a Data Governance in the Lake (DGITL) program.

Data Governance

Many companies have tried to address Data Governance over the years, with varying degrees of success. Lack of understanding and commitment, unwillingness to deal with the rigors of Data Governance and unwillingness to invest the amount of money that it would require are amongst the most commonly cited reasons for lack of success. But with the rise of the Data Lake, there is another opportunity to govern at least some of the data that a company owns without going through the rigors of governing it all. And if for no other reason than the ability to adhere to regulations and contractual obligations, there is a business imperative to govern the data. So the question arises: can a Data Governance program maximize the usage and efficiency of the Lake without necessarily incurring the cost and effort of a full enterprise program? If companies are willing to adopt a program aimed specifically at the Lake, we believe the answer is yes. Note that this paper assumes that a full program does not exist across the company, although Data Governance may have been adopted for some subset of the data (e.g. Master Data). But even in cases where a robust enterprise program exists, it is still worthwhile looking at the Lake and its data usage patterns and ensuring that the concepts in the remainder of this paper are covered.

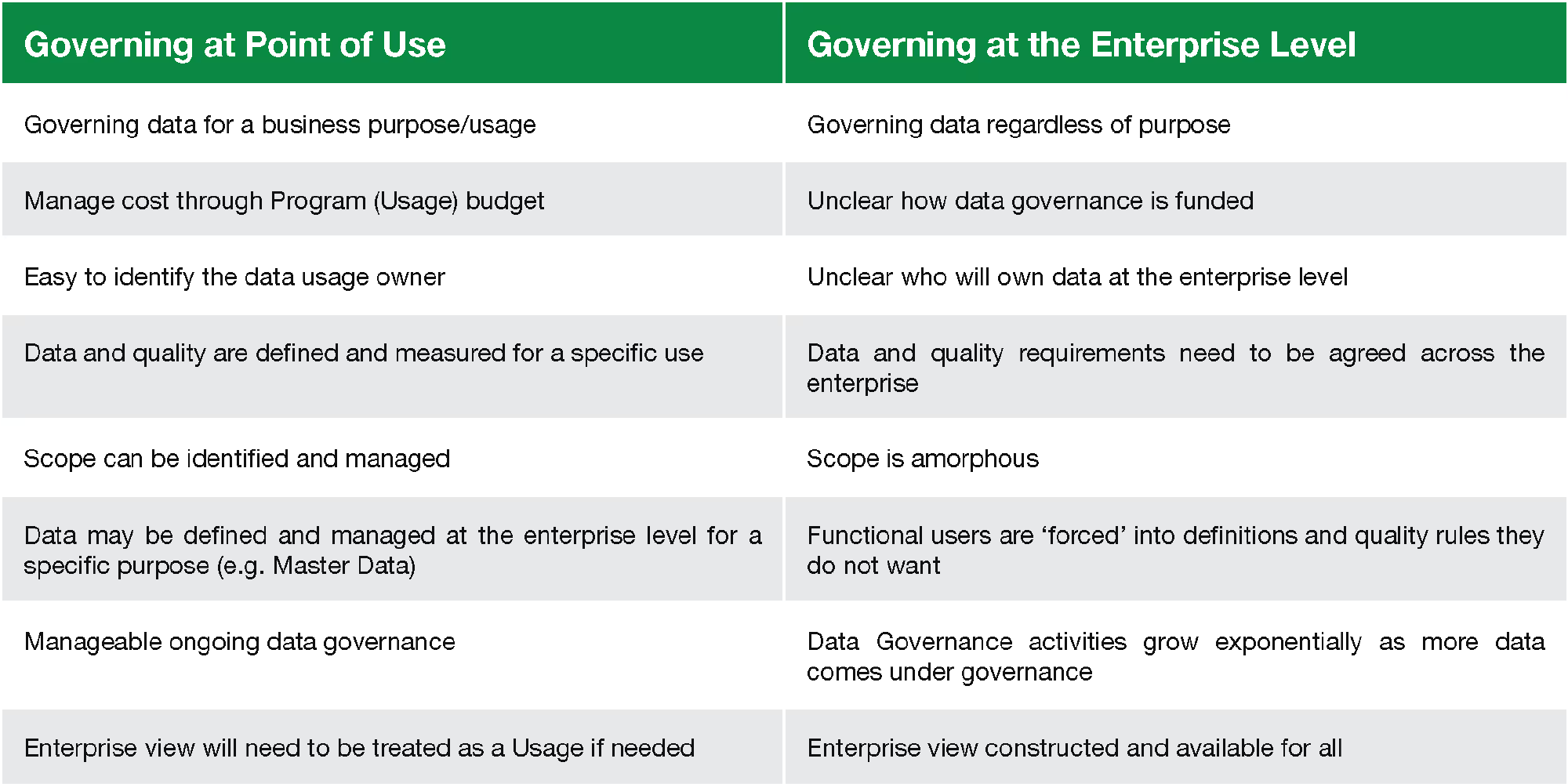

When we look at the way that typical Data Lakes are being used today, we see a difference not only in the types of data that are in the Lake when compared to an enterprise Data Warehouse, but also in the typical usage patterns. Data Warehouses were intended to contain the authoritative source of data for the enterprise, while in many cases Data Lakes are being used to contain ‘slices’ of data intended for different uses. Of course, the Raw Zone discussed above is intended to hold ‘all’ the data, but as the data is moving into other zones, there is a desire to make it fit for different usages. This provides us an opportunity to govern data at point of use rather than for the enterprise. The main advantage is that when governing data at point of use, you only need the agreement of the provider of the data and the user of the data on what the data means and when it should be supplied, while if governing at the enterprise level, you need the agreement of everyone with a stake in the data. Other advantages (and one disadvantage) of governing at point of use are outlined in the accompanying table.

It is important to note the last row in the table. While governing data at the Usage level makes the size, scale and investment more manageable, it does not produce an enterprise view of data, nor does it assign Ownership to a single person. This makes other aspects of Data Governance, such as cataloging the data and its meaning and usage rules, all the more important so that the data is locatable and understandable.

The need for cataloging is reinforced by another aspect of the Data Lake. The imperative to make the data easy to find and use for those who need it must be balanced by the equally important imperative that it must not be available to those who should not have it, or used for purposes for which it should not be used. This has been relatively easy in the past since datasets were typically built for systems to access, and the data was not available to those who did not have access to the systems. Data Lakes will still have security tools that prevent people from accessing the Lake or specific zones of the Lake if they do not have the credentials, but having credentials to access the Lake does not mean that all data can be accessed. For example, external data may have rules that limit its use:

- Purchased prescription data may only be allowed to be used for medical research

- Data from suppliers such as LexisNexis may only be allowed to be used for specific purposes

Managing these types of restrictions becomes much more difficult when the data is in a multi-purpose dataset like the Data Lake. Setting minimum standards for metadata cataloging becomes important to ensure that any company, regulatory or legal restrictions on the data are known at all points where the data is deployed.
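A minimum metadata standard of this kind can be checked mechanically. The sketch below is only an illustration: the required field names (`description`, `usage_owner`, `classification`, `restrictions`) and the sample entry are assumptions, not a prescribed standard or a real catalog's schema.

```python
# Hypothetical minimum standard: every catalog entry must carry these fields.
REQUIRED_FIELDS = ("description", "usage_owner", "classification", "restrictions")


def missing_metadata(entry):
    """Return the required fields that are absent or blank in a catalog entry.

    An empty restrictions list is allowed: it records that the data has been
    reviewed and carries no restrictions.
    """
    missing = []
    for field_name in REQUIRED_FIELDS:
        if field_name not in entry or entry[field_name] in (None, ""):
            missing.append(field_name)
    return missing


# Illustrative entry for the purchased prescription data example.
prescriptions = {
    "description": "Purchased prescription data",
    "usage_owner": "Head of Medical Research",
    "classification": "Confidential",
    "restrictions": ["medical research only"],
}

print(missing_metadata(prescriptions))           # []
print(missing_metadata({"description": "x"}))    # the three fields not supplied
```

A check like this could run whenever data is registered in a zone, so that a dataset without its restrictions recorded never becomes discoverable.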

But simply knowing what the restrictions are is not enough. There needs to be a facility to ensure that the restrictions are adhered to at all points along the data journey in the Lake. This can be managed by assigning accountability for data at every stop and for every usage, and mandating that as data gets passed from one usage to another, the accountability will also be passed. This requires two constructs:

- We need to assign accountability for the data at each place of rest. This may be accomplished by identifying the person responsible for the usage of the data and assigning them the accountability. We often refer to this role as the Usage Owner. This is not the same role as the Data Owner in enterprise Governance programs. This person is only accountable for the data that they use. Most often, this person is responsible for a process or function that will use the data.

- A Data Sharing Agreement is then needed between the Usage Owner that is supplying the data and the Usage Owner that is requesting it. This agreement may exist at any point in the Data Lake, including as the data is being ingested. This Data Sharing Agreement establishes three things:

- The Source Usage Owner understands the requirements for use of the Requesting Usage Owner and agrees that the request is for an acceptable use

- The Requesting Usage Owner understands any restrictions on the Usage of the data and agrees to be accountable for their implementation

- The Requesting Usage Owner agrees to cascade the restrictions and requirements to any subsequent request for that data

This last point on the cascading requirements is important since it prevents all approval of any usage of the data from being referred to the original usage owner, and it provides the capability for Usage Owners at any point to enrich the data and still be able to supply it to others who might need it.

An added extension in the Data Sharing agreement is that it may bring into play other stakeholders around the restrictions such as the Privacy, Legal and Regulatory departments, and even Audit. Once agreed, this Data Sharing Agreement becomes part of the metadata that is stored with the data it covers.
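The cascading behaviour of the Data Sharing Agreement can be sketched as a small data structure. Everything named here (`DataSharingAgreement`, `cascade`, the role titles and restriction strings) is hypothetical and chosen only to illustrate the three points the agreement establishes.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class DataSharingAgreement:
    dataset: str
    source_owner: str        # Usage Owner supplying the data
    requesting_owner: str    # Usage Owner requesting the data
    approved_use: str        # the use the source owner agreed is acceptable
    restrictions: frozenset = field(default_factory=frozenset)


def cascade(parent, new_requester, approved_use, extra_restrictions=()):
    """Create a downstream agreement that inherits every parent restriction.

    The previous requester becomes the source owner, so approval does not
    have to go back to the original Usage Owner.
    """
    return DataSharingAgreement(
        dataset=parent.dataset,
        source_owner=parent.requesting_owner,
        requesting_owner=new_requester,
        approved_use=approved_use,
        restrictions=parent.restrictions | frozenset(extra_restrictions),
    )


ingest = DataSharingAgreement(
    dataset="prescriptions",
    source_owner="Vendor Data Manager",
    requesting_owner="Research Data Lead",
    approved_use="medical research",
    restrictions=frozenset({"medical research only"}),
)
downstream = cascade(ingest, "Clinical Analytics Lead",
                     "trial cohort analysis", ["no re-identification"])
print(sorted(downstream.restrictions))
```

Because restrictions are only ever added on the way down, a Usage Owner can enrich the data and pass it on while the original terms stay attached to it.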

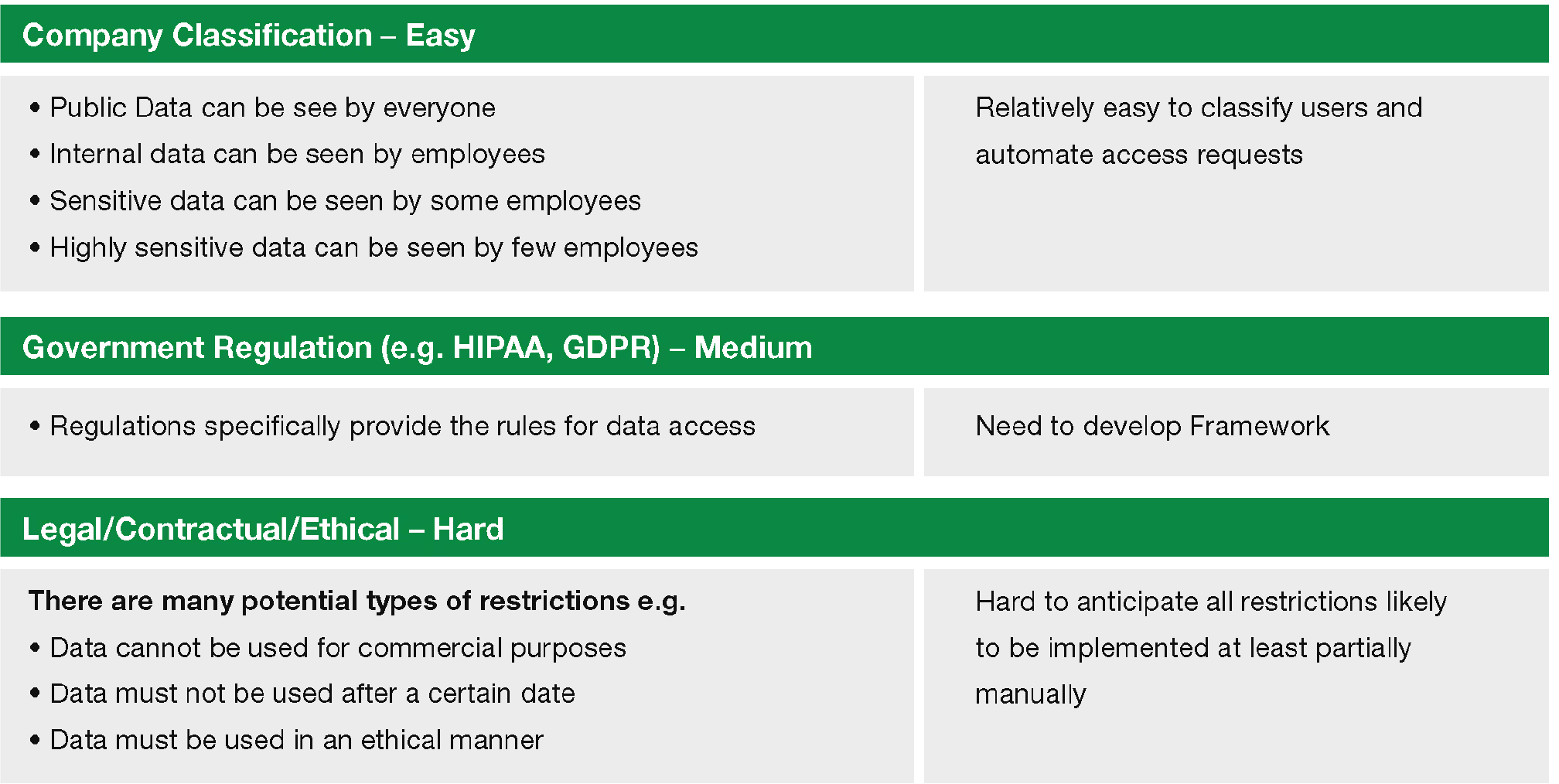

When that is achieved, the only thing that remains is to manage the access to the data according to the restrictions. While managing access to data may not be thought of as strictly a Data Governance process, it does lean heavily on some of the controls put in place by the Data Governance program we have discussed. Ideally, there would be a technical solution to managing access but some types of restrictions are harder to manage than others, as shown in the table below:

As can be seen, it is likely to be much easier to provide automated access management to data such as typical company classification levels than for other restrictions such as contractual terms, where there may be many different types. For this reason, the initial management of access may be manual, at least for some data. Management of use, in our scenario, will be the responsibility of the Usage Owner of the dataset that is being requested. Because of the Data Sharing agreement, this role has agreed to be accountable for the usage of the data at this point – another good reason not to have the data owned by a single person. In many cases, Access Management is being managed by a workflow engine, ensuring that all requests are being addressed.
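One way to picture this split between automated and manual access management is a routing function. This is a minimal sketch under stated assumptions: the four classification levels and the three outcome strings are invented for illustration and would differ from company to company.

```python
# Assumed company classification levels, ordered from least to most sensitive.
CLASSIFICATION_RANK = {"Public": 0, "Internal": 1, "Confidential": 2, "Restricted": 3}


def decide_access(user_clearance, dataset_classification, contractual_terms):
    """Automate the classification check; route contractual terms to a person."""
    if CLASSIFICATION_RANK[user_clearance] < CLASSIFICATION_RANK[dataset_classification]:
        return "deny"
    if contractual_terms:
        # Contractual terms vary too much to automate here, so the
        # Usage Owner of the requested dataset reviews the request.
        return "manual review by Usage Owner"
    return "grant"


print(decide_access("Internal", "Confidential", []))
print(decide_access("Confidential", "Internal", ["medical research only"]))
print(decide_access("Confidential", "Internal", []))
```

In a workflow engine, the "manual review" outcome would open a task for the Usage Owner rather than return a string, so that every request is tracked to a decision.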

In fact, this entire process can be enabled by developing a series of workflows to identify and ingest data, move it through the Lake and manage access against it. These flows can be administered manually or through a workflow engine. These high level workflows are depicted below.

This paper has focused on the accountability, ownership, documentation (via metadata) and ability to manage access against restricted data issues that the Data Lake is introducing. There are many other aspects of Data Governance that we have not focused on that remain important to the Data Lake. For example:

- Data Quality may be affected by data being ingested into the Lake. Apart from the fact that Data Quality will not be applied uniformly throughout the Lake (e.g. it will not be applied in the Raw zone), it may be different for different usages in the Lake. Some usages (regulatory reporting) may have much more strict requirements than others (analytics), and this may be reflected in the data that is being used for the usage. If there is an Enterprise usage defined, that is likely where enterprise data quality measuring and monitoring would take place.

- Likewise, there may be different requirements for metadata across zones and usages. Even if this is the case, however, it will be necessary to establish a minimum standard for metadata so that the ownership, meaning and restrictions can be applied.

- Lineage is another Data Governance aspect that may have different requirements for different usages. The collection of Lineage information should be enabled by the transportation into and through the Data Lake, but some usages may need it from its entry into the enterprise ecosystem, and that is not under the control of the Data Lake processes. Under these circumstances, extra effort will need to be expended to capture the full lineage.

These aspects, and others, should be taken into account as the Data Lake is being established and built out.

In summary, we have seen that the movement of data to the Lake for Business Intelligence use cases is helping companies address efficiency and cost challenges and is making data much easier to find and understand for business users. But with that move comes the need to ensure that the data is being used for legitimate business purposes, and is not being made available to unauthorized users. The Data Governance capability that we have discussed here can help ensure appropriate access. Due to its focus on Usage of data rather than all data, this program may be appropriate for companies that have not established enterprise data governance programs. For those who have been successful with enterprise data governance, this program may be used as a guide to enhance that program and to avoid overloading enterprise Data Owners with requests for access to the data for which they are responsible. Either way, the business reasons for moving data to the Lake will mean that Data Lakes will continue to be deployed, and the issues with managing data in a more democratized environment will have to be addressed.

Migrating Data for a Century-Old Furniture Manufacturer

After decades of customizations to their ERP system to support changing business models and incorporate major acquisitions, the client was ready to get back to basics and ‘run simple’. When they started their transformation, they quickly realized the custom solutions they had spent 30 years building and adapting did not easily translate to a modern-day ERP.

Definian’s initial two-day data assessment quickly identified and quantified the challenges ahead. Thousands of Customers and Vendors were duplicated across various business units. Data was split across multiple systems and was often inconsistent or invalid. None of it was in the right format for SAP. Leveraging facts discovered during the assessment, a plan was developed to address the complex data issues and take data migration off the critical path.

“I was absolutely blown away... Outstanding demonstration of velocity and completeness!”

– IT Director

Client Challenges

There were many obstacles standing in the way of a successful data migration. One hundred years of business growth and inconsistent data governance had to be unraveled and streamlined.

- Separate legacy sold-to, ship-to, invoicing, ordering, and supplier addresses needed to be harmonized, consolidated, cleansed and re-structured to fit the SAP Business Partner and Partner Function structure.

- Customer and Vendor information needed to be combined and reconciled across multiple legacy systems. Discrepancies between systems needed to be identified and resolved.

- Thousands of Customers and Vendors needed to be harmonized between business units, with unexpected variances highlighted and resolved.

- Duplicated Customers and Vendors needed to be identified, combined and restructured to simplify and streamline the data prior to loading the data to SAP.

- All harmonization and restructuring activities needed to be highly automated while still allowing the business to drive key decisions.

- Harmonization decisions needed to flow through downstream conversions and allow traceability back to the separate legacy Customers and Vendors.

- USPS address standardization needed to be applied to surviving Customer and Vendor street addresses.

- Contact information, which was broken across multiple legacy systems, needed to be tied together and data integrity issues addressed. Contact information and site usage (ship-to, sold-to, remit-to, etc.) had to be intelligently merged across duplicate addresses.

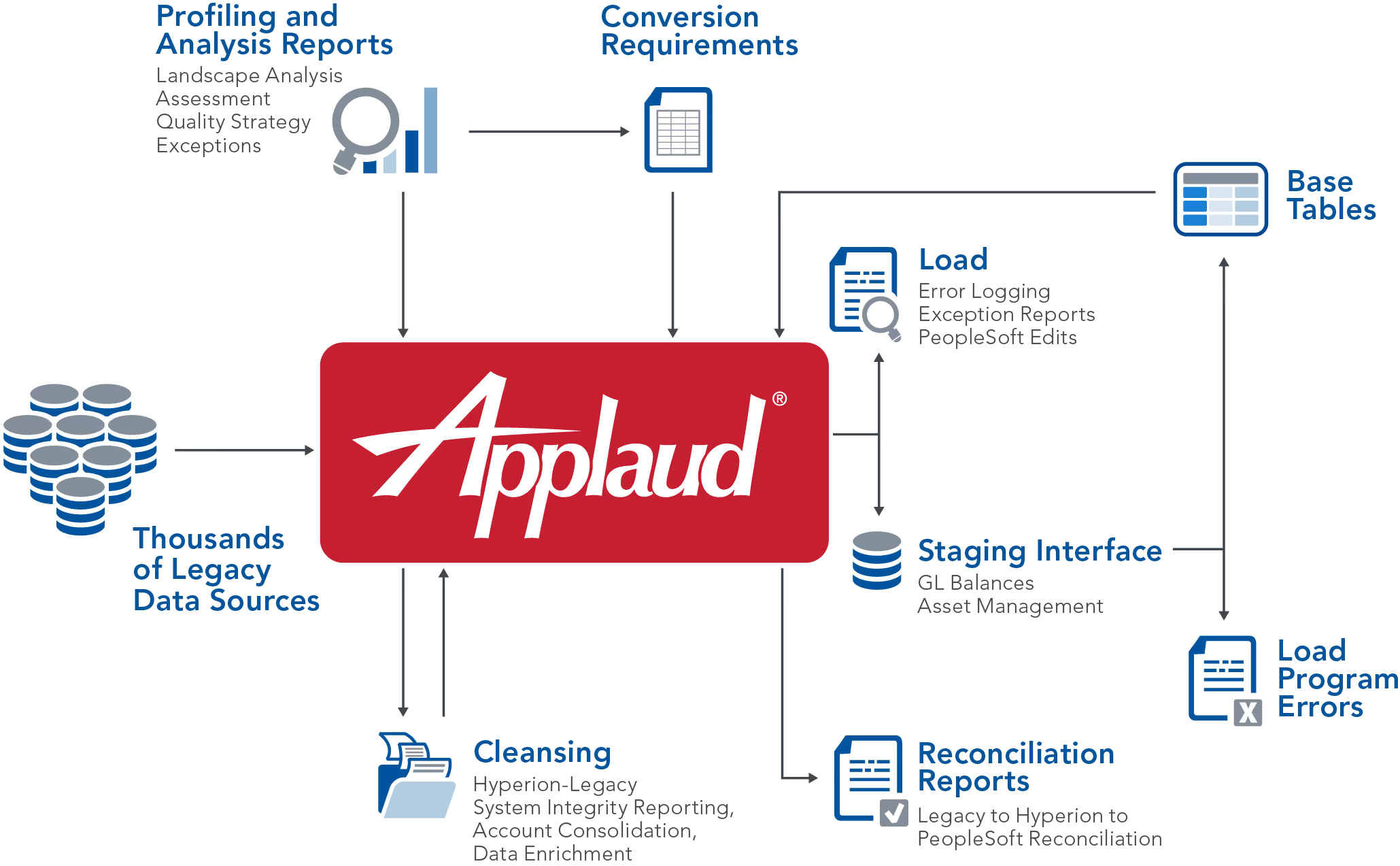

The Applaud® Advantage

Three key components of Definian’s Applaud solution helped the client navigate their data migration:

- Definian’s data migration consultants: Definian’s services group averages more than six years of experience working with Applaud, exclusively on data migration projects.

- Definian’s methodology: Definian’s EPACTL approach to data migration projects is different than traditional ETL approaches and helps ensure the project stays on track. This methodology decreases overall implementation time and reduces the risk of the migration.

- Definian’s data migration software, Applaud®: Applaud was built from the ground up to address the challenges that occur on data migration projects, allowing the team to accomplish all data needs using one integrated product.

The Applaud® Solution

Definian's team leveraged the Applaud® software to build a sophisticated reporting process to identify duplicate candidates for client review. After being reviewed by the client, this same document was fed back into the conversion process to drive the de-duplication process.

Definian’s solution identified and corrected for a 20% Customer Address redundancy rate and a 70% Vendor Address redundancy rate. Contact information, shipping instructions, and credit notes were successfully merged underneath the surviving Business Partner as part of the migration to SAP.

As part of consolidation, survivorship rules were applied to the relationships between legacy addresses, ensuring each Business Partner created for SAP inherited the Function Assignments from the non-surviving legacy addresses. For instance, when a legacy ordering address survived over an invoicing address, the single Business Partner migrated to SAP was assigned both invoicing and ordering roles.
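The survivorship idea can be illustrated with a short sketch. This is not how Applaud implements it; the record layout (`id`, `name`, `functions`) and the `consolidate` helper are assumptions made purely to show the surviving record inheriting the roles of the records merged into it.

```python
def consolidate(addresses, survivor_id):
    """Merge duplicate legacy addresses into a single Business Partner record.

    The survivor keeps its own attributes, inherits the function assignments
    of every non-surviving address, and records which legacy ids were merged
    so the result can be traced back to the source data.
    """
    survivor = next(a for a in addresses if a["id"] == survivor_id)
    roles = set()
    for address in addresses:
        roles |= set(address["functions"])
    return {
        "partner_id": survivor["id"],
        "name": survivor["name"],
        "functions": sorted(roles),
        "merged_from": sorted(a["id"] for a in addresses if a["id"] != survivor_id),
    }


# Hypothetical duplicate pair: the ordering address survives the invoicing one.
legacy = [
    {"id": "L-100", "name": "Acme Supply", "functions": ["ordering"]},
    {"id": "L-200", "name": "ACME SUPPLY CO", "functions": ["invoicing"]},
]
partner = consolidate(legacy, survivor_id="L-100")
print(partner["functions"])    # ['invoicing', 'ordering']
print(partner["merged_from"])  # ['L-200']
```

Keeping `merged_from` alongside the merged record is one simple way to preserve traceability back to the separate legacy Customers and Vendors.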

Definian successfully trimmed a complex web of Customer and Vendor relationships across multiple systems into a cleaner, simpler Business Partner and Function Assignment structure. The conversion process identified a 14% redundancy rate within legacy Ship-To assignments and an additional 18% redundancy rate across vendor invoicing addresses. This meant that the function assignments converted into SAP were cleaner, fewer, and simpler than originally thought, reducing risk from the load.

Since the Applaud® tool uses EPACTL best practices (Extract, Profile, Analyze, Cleanse, Transform, and Load), it was possible to reconcile this dramatic transformation back to the legacy systems.

Definian reported how every legacy address function tied to a converted SAP partner function, illustrating how survivorship was applied and showing where data was merged. This reconciliation identified a small number of legacy data exceptions that did not follow standard conventions. This exercise helped the client to tighten their conversion requirements and ultimately automate virtually all their partner data conversions – while still allowing the business to drive those key decisions.

The Bottom Line

As part of their SAP S/4HANA implementation, the client achieved their goals:

- Optimized the Customer and Vendor management experience

- Improved customer relations

- Streamlined the shipping process

- Capitalized on material ordering and pricing by better understanding their spend

These goals all hinged on getting to a single source of truth, removing redundancy within the data, and trusting that nothing was lost during the migration to SAP. Definian and Applaud helped the organization understand the reality of their current data landscape and make informed decisions around how best to achieve these goals, with the business involved every step of the way. By having the foresight to invest in the data as part of the process, the client was able to quickly start capitalizing on their implementation.

Legacy Systems

- Infor XA, Homegrown ERP

Target System

- SAP S/4HANA

Key Challenges

- Business Partner Structuring

- Business Function Harmonization

- Address Standardization

Key Results

Common Techniques to Employ in the Identification of Duplicate Data

Duplicate master data is a common problem across every organization. Identifying and resolving duplicate information on customers, suppliers, items, employees, etc. is an important activity. Solving the duplicate issue can yield increased buying power, more accurate marketing campaigns, reduced maintenance costs, improved inventory management, and better forecasting: improvements across any aspect of the business.

In terms of a new implementation, if the duplicate data is not resolved before or during the migration, it will either load silently into the new ERP application and cause problems when the application is put to use, or error out during the load and cause problems because the downstream transaction conversions won’t be able to load. As part of an ongoing data management process, it is important to prevent duplicates by defining the criteria and keeping scorecard metrics that ensure the organization is preventing duplicates from adding to overall data degradation.

The basic concept for identifying a duplicate candidate is straightforward. Standardize fields that identify a unique entity (e.g. a single customer, vendor, item, etc.) and compare. In practice, depending on the type and cleanliness of the data, the standardization process can be complex.

There are several techniques that are commonly used to identify duplicates within master data.

- Noise Word Removal – The process of removing words that don’t add significance to the data or are often incorrect. Common examples of noise words are “the”, “of”, and “inc”.

- Word Substitution – The process of replacing an existing word or phrase with another word or phrase. It is common to substitute names and abbreviations when identifying duplicates. For example, Tim would be replaced with Timothy, OZ would be replaced with Ounce, a single quote might be replaced with foot, and a double space might be replaced with a single space. Additionally, context of the words in the current field or other fields could affect the substitution that needs to take place.

- Case Standardization – The process of making everything the same case.

- Punctuation Removal – The process of removing all non-alphanumeric characters that don’t add any significance to the field value.

- Phonetic Encoding – The process of encoding words based on how they sound. For example, “donut” and “doughnut” would be phonetically encoded to the same value. There are several types of phonetic encoding methods that are commonly used.

- Address Standardization – The process of standardizing all of the components of an address prior to comparing values. Usually the process that checks for duplicates utilizes address validation techniques, software, or services to make sure the address is valid and to ensure that all of the pieces of the address are formatted uniformly throughout the data.

- Attribute Standardization – Attribute data can be used to determine a distinct entity, but inconsistencies could make entities look different at first blush. For example, units of measure could be in pounds and ounces, but the values could be the same once the conversion factor is applied. Or there could be two Assemblies that have the same Bill of Material parts list attached to them. This attribute data can be used in the identification of duplicates.
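Several of the techniques above can be chained into a single match key. The sketch below is a minimal illustration: the noise word and substitution lists reuse the examples from the text, the function names are hypothetical, and the regular expression assumes plain ASCII names.

```python
import re
from collections import defaultdict

# Small illustrative lists; real projects maintain much larger ones.
NOISE_WORDS = {"the", "of", "inc"}
SUBSTITUTIONS = {"tim": "timothy", "oz": "ounce"}


def match_key(value):
    """Standardize a name so that equivalent spellings compare equal."""
    text = value.lower()                                      # case standardization
    text = re.sub(r"[^a-z0-9\s]", " ", text)                  # punctuation removal
    words = [SUBSTITUTIONS.get(w, w) for w in text.split()]   # word substitution
    words = [w for w in words if w not in NOISE_WORDS]        # noise word removal
    return " ".join(words)


def duplicate_candidates(records):
    """Group record ids that share a match key; keep groups of two or more."""
    groups = defaultdict(list)
    for record_id, name in records:
        groups[match_key(name)].append(record_id)
    return [ids for ids in groups.values() if len(ids) > 1]


customers = [(1, "The Acme Co., Inc."), (2, "ACME CO"), (3, "Apex Ltd")]
print(match_key("The Acme Co., Inc."))    # acme co
print(duplicate_candidates(customers))    # [[1, 2]]
```

The output is a list of candidates for review, not a list of confirmed duplicates; the order in which the steps run affects the key, which is one reason rule sets need several iterations.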

Outside of these techniques there are others that might be used, such as ignoring all one- or two-character words, matching on the first X characters, alphabetizing the words in a field or across fields, executing rules in a variety of orders, not requiring a field if it is not populated, merging the results of different match criteria, and many more. There are seemingly endless combinations of rules that can be applied for identifying duplicate candidates. It usually takes several iterations of rules and combinations to determine which rule set(s) are appropriate.
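A few of these additional rules, and the merging of their results, might look like the following sketch. The function names, the three-character minimum and the ten-character prefix are arbitrary choices for illustration, not recommended settings.

```python
def sorted_words_key(value, min_len=3):
    """Ignore one- and two-character words, then alphabetize what remains."""
    words = [w for w in value.lower().split() if len(w) >= min_len]
    return " ".join(sorted(words))


def prefix_key(value, length=10):
    """Match on the first `length` characters, ignoring case and spaces."""
    return "".join(value.lower().split())[:length]


def merge_matches(records, key_funcs):
    """Union the candidate pairs produced by each match rule."""
    pairs = set()
    for key_func in key_funcs:
        seen = {}
        for record_id, value in records:
            key = key_func(value)
            for earlier_id in seen.get(key, []):
                pairs.add((earlier_id, record_id))
            seen.setdefault(key, []).append(record_id)
    return sorted(pairs)


vendors = [
    (1, "Smith John"),
    (2, "John Smith"),
    (3, "Johnson Supply"),
    (4, "Johnson Supplies"),
]
print(merge_matches(vendors, [sorted_words_key, prefix_key]))
# [(1, 2), (3, 4)]
```

Here the word-order rule finds the first pair and the prefix rule finds the second; neither rule alone finds both, which is why results from different match criteria are often merged.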

Additionally, if you have large enough datasets, machine learning algorithms coupled with some of the techniques above can further automate the identification and consolidation of duplicate data.

If you have questions on data management, governance, migration, or just love data, let's connect. My email is steve.novak@definian.com.

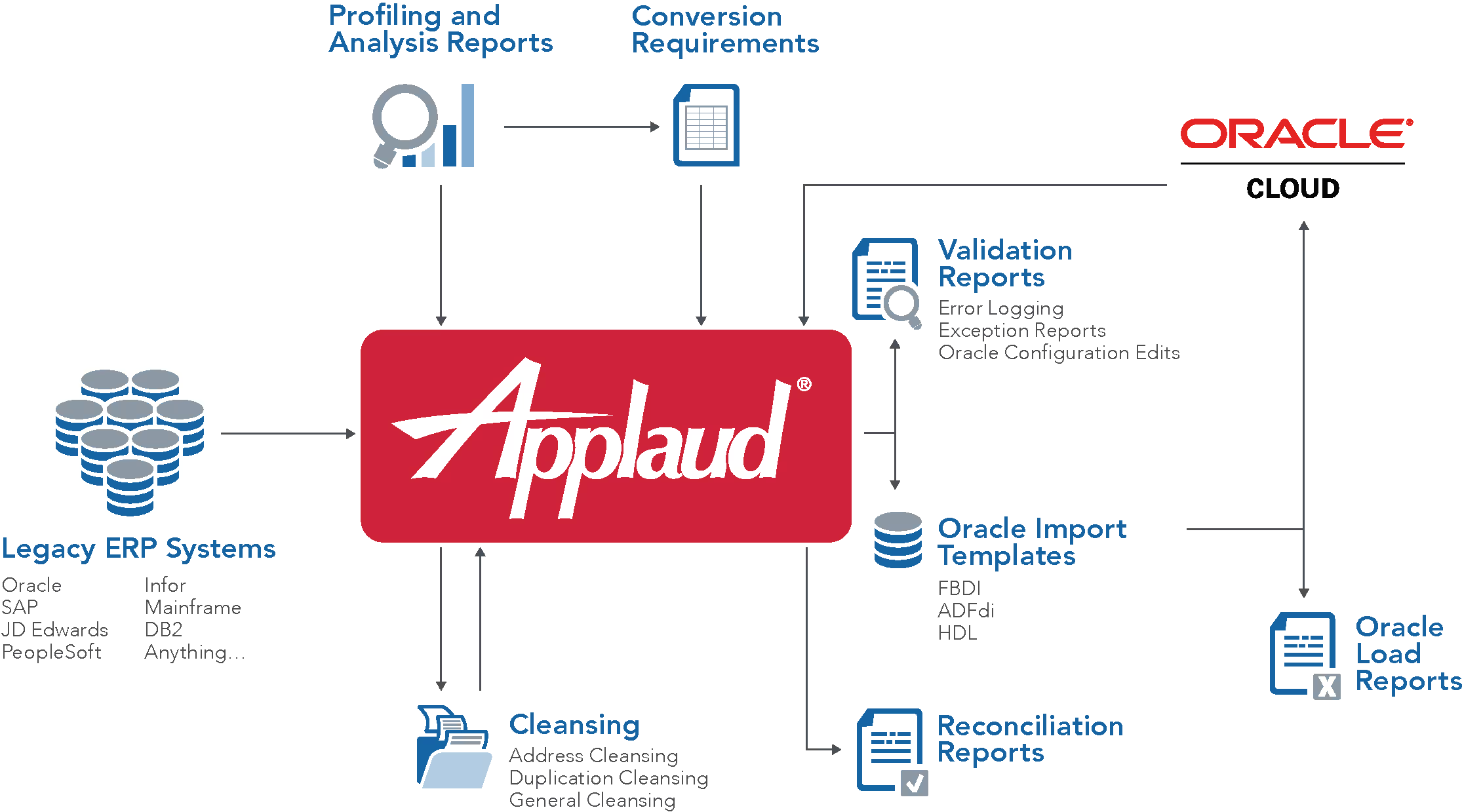

Data Migration Services For Oracle Cloud

We Take the Risk Out of Data Migration

Whether it is Human Capital Management (HCM), Enterprise Resource Planning (ERP) Finance, or Supply Chain Management (SCM), the implementation of any Oracle Cloud application is a major undertaking and data migration is a critical and risky component.

- Data from multiple legacy sources needs to be extracted, consolidated, cleansed, de-duplicated, and transformed before it can be loaded into the Cloud applications.

- The data migration process must be repeatable, predictable, and executable within a specific timeframe.

- Migrated data will require reconciliation against the legacy source data after loading to the Cloud.

- Issues with data migration will delay the entire implementation or Oracle Cloud will not function properly.

- If the converted data is not cleansed and standardized, the full functionality and potential Oracle has to offer will not be realized.

Data Migration Challenges

Many obstacles are encountered during an Oracle Cloud implementation, each of which increases the risk of project overrun and delay. Typical challenges include:

- High levels of data duplication across disparate legacy systems.

- Unsupported and/or misunderstood legacy systems, both in their functionality and underlying technologies.

- Legacy data quality issues – including missing, inconsistent, and invalid data – which must be identified and corrected prior to loading to Oracle Cloud.

- Legacy data structures and business requirements which differ drastically from Oracle’s products.

- Frequent specification changes which need to be accounted for within a short timeframe.

The Definian Difference

Definian’s Applaud® Data Migration Services help overcome these challenges. Three key components contribute to Definian’s success:

- People: Our services team focuses exclusively on data migration, honing their expertise over years of experience with Applaud and our solutions. They are client-focused and experts in the field.

- Software: Our data migration software, Applaud, has been optimized to address the challenges that occur on data migration projects, allowing one team using one integrated product to accomplish all data objectives.

- Approach: Our RapidTrak methodology helps ensure that the project stays on track. Definian’s approach to data migration differs from traditional approaches, decreasing implementation time and reducing the risk of the migration process.

Applaud Eliminates Data Migration Risk

Applaud’s features have been designed to save time and improve data quality at every step of an implementation project. Definian’s proven approach to data migration includes the following:

- Experienced data migration consultants identify, prevent and resolve issues before they become problems.

- Extraction capabilities can incorporate raw data from many disparate legacy systems, including mainframe, into Applaud’s data repository. New data sources can be quickly extracted as they are identified.

- Automated profiling on every column in every key legacy table assists with the creation of the data conversion requirements based on facts rather than assumptions.

- A powerful data matching engine quickly identifies duplicate information in any data area, including employee, dependent, customer, and supplier records.

- Integrated analytics/reporting tools allow deeper legacy data analysis to identify potential data anomalies before they become problems.

- Cleansing features identify and monitor data issues, allowing development and support of an overarching data quality strategy.

- Hundreds of out-of-the-box pre-load validations identify issues within the legacy data and its compliance with Oracle configuration prior to the upload into Oracle.

- Development accelerators facilitate the building of Oracle’s FBDI, ADFdi, and HDL load formats.

- A defined process ensures the data conversion is predictable, repeatable, and highly automated throughout the implementation, from the test pods through production.

- Stringent compliance with data security needs ensures PII remains secure.

- Our RapidTrak methodology provides a truly integrated approach that decreases the overall data migration effort and reduces project risk.

Celebrating a Successful Workday HCM Go-Live

WASHINGTON, DC: Definian is proud to announce the successful Workday HCM Go-Live for a $2.2B transportation management firm. Definian’s Data Migration services played a pivotal role in the project by ensuring that data was not only appropriately migrated, but also validated and fully reconciled in advance of Go-Live.

Our client was migrating from a hosted legacy solution. While the client had the functional knowledge, they had no technical understanding of their underlying data. Definian's legacy data assessment allowed the client to quickly understand their legacy data and relate it to their Workday requirements to devise a viable migration solution. In addition, Definian’s data migration solution identified data errors prior to the execution of the Workday load, allowing the team to proactively address issues well in advance. Our proactive approach led to extremely high load percentages early in the project. At Go Live, 100% of expected data was successfully loaded, ensuring the business hit the ground running from Day One.

Additionally, Definian produced custom gross-to-net payroll reconciliations between the legacy and Workday solutions. These detailed reports allowed the client to validate converted historical payroll data on their own terms, with payroll metrics tied-out to the penny at Go Live. Armed with a 100% successful payroll data conversion and a payroll reconciliation that compared every line of every paycheck and provided exhaustive variance reports, the client began processing payroll in Workday immediately, confident that their data was accurate.
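A line-by-line payroll reconciliation of the kind described above can be sketched as follows. This is a minimal illustration, not Definian's actual report logic: the key layout `(employee_id, check_id, line_code)`, the sign convention (deductions stored as negative amounts), and the sample data are all assumptions made for the example.

```python
from decimal import Decimal

def reconcile_paychecks(legacy, converted):
    """Compare every paycheck line and return the lines that differ.

    Both inputs map a hypothetical key (employee_id, check_id, line_code)
    to a Decimal amount. A line missing on one side counts as zero.
    """
    variances = []
    for key in sorted(set(legacy) | set(converted)):
        old = legacy.get(key, Decimal("0"))
        new = converted.get(key, Decimal("0"))
        if old != new:
            variances.append((key, old, new, new - old))
    return variances

def net_pay(lines, employee_id, check_id):
    """Gross-to-net: add earnings and (negative) deductions for one paycheck."""
    return sum(
        (amount for (emp, chk, _code), amount in lines.items()
         if emp == employee_id and chk == check_id),
        Decimal("0"),
    )

# Hypothetical sample data: one paycheck with three lines.
legacy = {
    ("E1", "C1", "GROSS"): Decimal("2500.00"),
    ("E1", "C1", "FED_TAX"): Decimal("-310.25"),
    ("E1", "C1", "MEDICAL"): Decimal("-85.10"),
}
converted = dict(legacy)
converted[("E1", "C1", "MEDICAL")] = Decimal("-85.01")  # simulated conversion error

variances = reconcile_paychecks(legacy, converted)
for key, old, new, diff in variances:
    print(key, old, new, diff)
```

Using `Decimal` rather than floating point is what makes a "to the penny" tie-out meaningful: a nine-cent variance shows up as exactly `0.09`, with no binary rounding noise.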

With this achievement, Definian looks forward to continuing our mission of removing data from the critical path on our client’s additional Workday deployments.

ERP System Consolidation Success

AKRON, OH: Definian is delighted to congratulate its partner, an $8.7B medical device manufacturer, on the successful consolidation of multiple ERP systems into a unified Oracle EBS R12 environment. Definian’s Data Migration services were a critical component in accelerating the project timeline.

During this project, Definian’s data migration specialists harmonized, cleansed, and consolidated four legacy ERP applications into an existing Oracle EBS environment. Based on previous experience, the client estimated that Definian was able to complete the work in 1/3 the time it would have taken internal resources. Without Definian’s expertise, the consolidation effort was expected to take 24 months. With Definian, the actual implementation time was 8 months. By reducing and optimizing their overall timeline, the organization was able to more fully focus on business improvement, and has been able to fast-track the next phases of the project.

Definian looks forward to continuing this relationship with the next phases of the initiative.

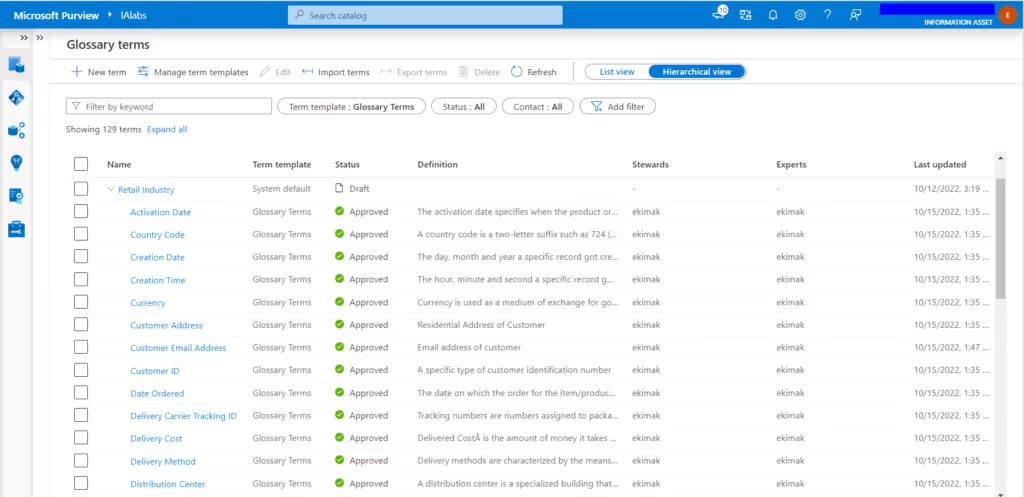

Data Governance Retail Industry Template And Metadata Exchange Solution Using Azure Purview

Executive Summary

An organization is entitled to identify, define, model, and standardize its data as part of a data management framework. However, defining industry-specific and standardized data dictionaries can be an excruciating task. Many organizations hesitate to establish a good data management program without a good business case and, in turn, lack support from leadership. A clean and standardized data template can jump-start a data management and governance program and lay the foundation for an enterprise data architecture.

Definian has assembled a retail industry-specific template with Microsoft Azure Purview. This template includes a standardized set of data definitions, regulations, and policies to kick-start a data governance program with Azure Purview. The retail Industry template can be enhanced and molded as per an organization’s requirements to build a solid foundation and is also easy to adapt, customize, and implement.

Along with the retail industry-specific template, a common theme we have heard from many of our customers is an interest in having Azure Purview co-exist with other data governance platforms. We have developed an integration layer for data management platforms (Meta-mesh), which harmonizes information across various platforms, including Azure Purview and Collibra Data Intelligence Cloud Platform. This solution is built on Azure and can integrate other enterprise-wide data sources.

Retail Industry Templates

Inventory of Retail Specific Business Terms

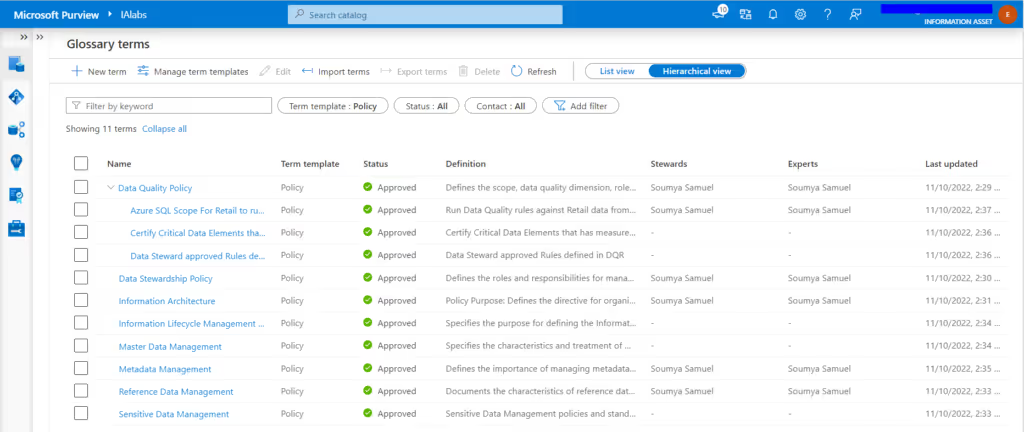

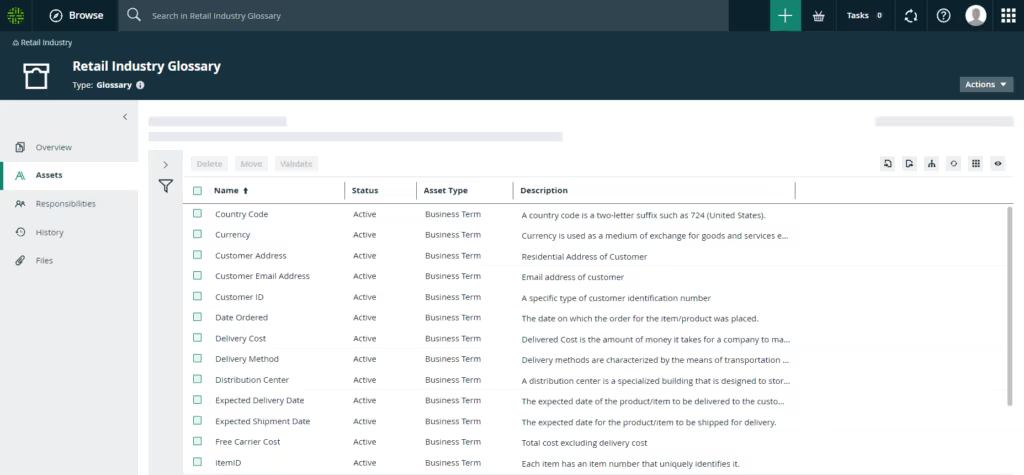

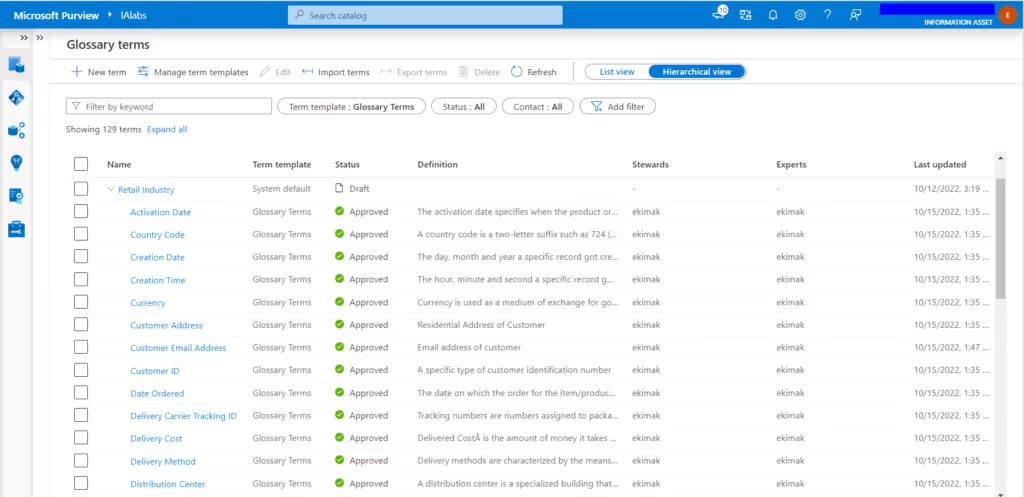

We have built an inventory of over 100 retail glossary terms that are listed, defined, and enriched with attributes to carry out data governance in Azure Purview. The glossary is described in business terms with a standard format for ease of understanding, whether the consumer of this data is from a business or technical background. Critical Data Elements (CDEs) and Personally Identifiable Information (PII) are identified and tagged accordingly within the glossary (see Figure 1).

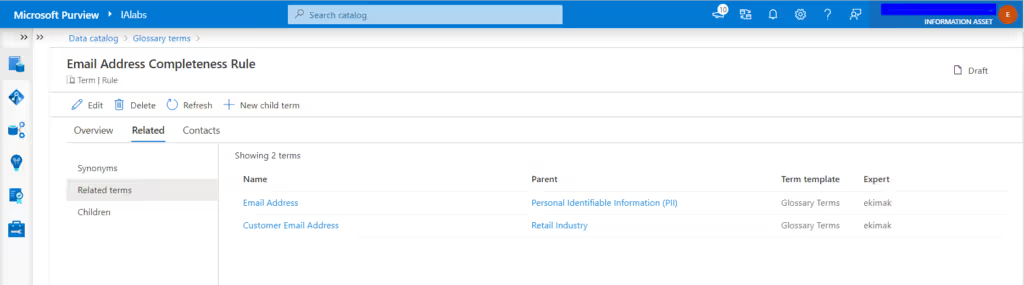

Data Quality Rules

This template includes a set of data quality rules for completeness and validity. You can extend this template to cover rules to measure accuracy, uniqueness, integrity, and consistency (see Figure 2).

Figure 3 illustrates how the data quality rule for checking completeness is associated with the business terms from the PII and Retail Industry Glossaries.
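To make the two rule types concrete, here is a small sketch of what a completeness rule and a validity rule measure. It is independent of Azure Purview: the record layout, the field names, and the US ZIP pattern are hypothetical choices for illustration, not part of the template.

```python
import re

def completeness(records, field):
    """Share of records where `field` is present and not blank."""
    if not records:
        return 1.0
    filled = sum(1 for r in records if str(r.get(field) or "").strip())
    return filled / len(records)

def validity(records, field, pattern):
    """Share of populated `field` values that fully match `pattern`."""
    values = [str(r[field]).strip() for r in records
              if str(r.get(field) or "").strip()]
    if not values:
        return 1.0
    return sum(1 for v in values if re.fullmatch(pattern, v)) / len(values)

# Hypothetical retail customer records.
customers = [
    {"customer_id": "C001", "postal_code": "60601"},
    {"customer_id": "C002", "postal_code": ""},
    {"customer_id": "C003", "postal_code": "6060A"},
    {"customer_id": "C004", "postal_code": "94105-1234"},
]
US_ZIP = r"\d{5}(-\d{4})?"  # assumed format rule for the example

print(completeness(customers, "postal_code"))      # 3 of 4 populated
print(validity(customers, "postal_code", US_ZIP))  # 2 of 3 populated values valid
```

Validity is computed only over populated values so that a blank field is counted once, as a completeness failure, rather than twice.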

Inventory of Governance Policies

This template includes an inventory of the policies as per the standard Data Governance framework. These policies are categorized based on specific domains like data quality and stewardship (see Figure 4).

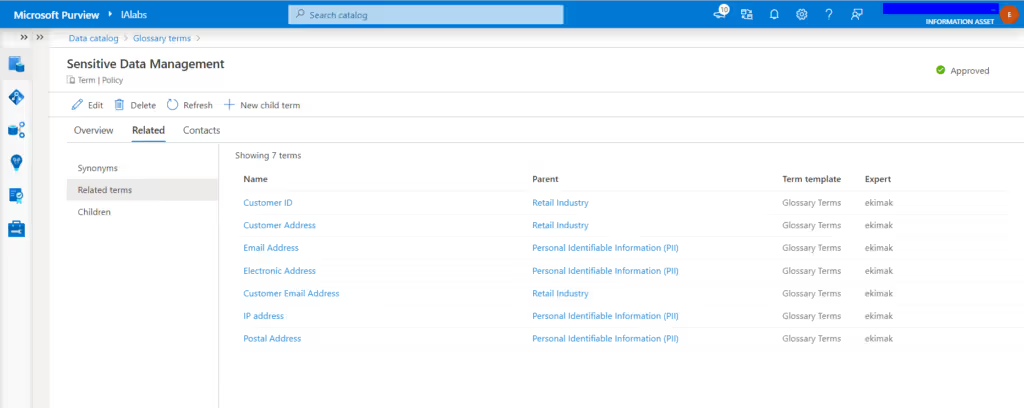

Figure 5 illustrates how a Data Governance Policy for 'Sensitive Data Management' governs the business terms from the respective parent glossaries.

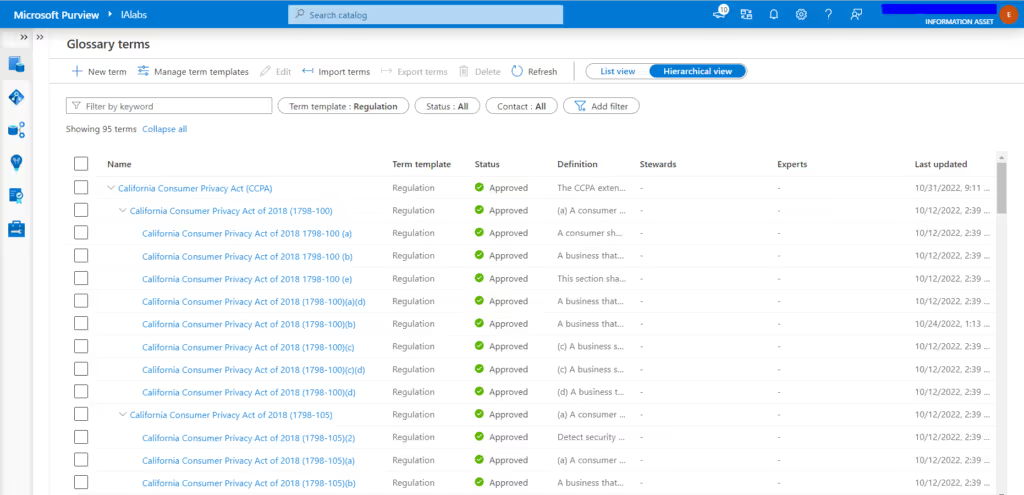

Inventory of California Consumer Privacy Act (CCPA) Regulation

The most critical aspect of data governance is to ensure compliance with relevant regulations. Retail industry templates have defined around 100 privacy clauses for the California Consumer Privacy Act (CCPA) (see Figure 6).

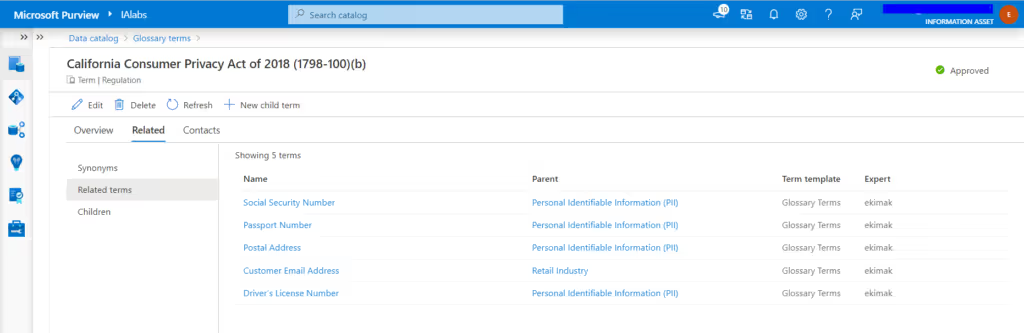

Figure 7 illustrates the glossary terms from the retail industry that are regulated by the CCPA regulation citation (1798-100)(b).

Metadata Exchange Platform (Meta-mesh)

Definian has built a Metadata Exchange Platform, an integration layer for data management platforms. This platform is built on Azure and uses Azure Event Hubs to seamlessly move metadata from one platform to another. This platform also integrates Azure Purview and Collibra Data Intelligence, providing a unidirectional flow of metadata such as a glossary, data quality rules, policies, and regulations from Collibra to Azure Purview. This solution is available as a single-app service in Azure. The Meta-mesh platform can also use a Kafka data pipeline should an organization choose. Near real-time visualization of data in these pipelines is achievable using Stream Analytics on Azure or by integrating third-party tools if an organization chooses to track and report the quality of metadata moving between data management platforms.

Figure 8 shows an inventory of approved business terms in Collibra Data Intelligence cloud platform.

Figure 9 shows the migration of the glossary terms from Collibra to Azure Purview using Meta-mesh. The platform provides the ability to map the out-of-the-box as well as the custom operating model (attributes and relationships) in Collibra to Purview.

Celebrating a Successful SAP Go-Live

ITHACA, NY: Definian is proud to announce the successful SAP Go-Live for an $8.3B auto parts manufacturer. Definian’s custom migration services filled gaps in the organization’s Data Migration process to ensure that the cleanest and most accurate data set was migrated at go live. The use of robust comparison reports, custom address cleansing, and customizable pre-validation reports allowed the organization to Go Live knowing their data was ready to support their business process.

This achievement is memorable due to the multitude of ways the Applaud® file comparison report was utilized, allowing the organization to quickly understand gaps and create impromptu delta load files at a moment’s notice. The ability of the comparison report to quickly be run for any set of files allowed the organization to report against legacy data to identify changes between mock runs, compare legacy data to load data to isolate unauthorized transformations, and compare load data to existing SAP data to preemptively address conflicts. Comparisons were regularly run for more than 75 data files across 13 conversion areas.
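The kind of keyed file comparison described above can be sketched in a few lines. This is not the Applaud® report itself, only an illustration of the idea; the CSV layout, the `vendor_id` key, and the sample rows are hypothetical.

```python
import csv
import io

def load_keyed(text, key_fields):
    """Read CSV text into {key tuple: row dict}."""
    rows = csv.DictReader(io.StringIO(text))
    return {tuple(row[k] for k in key_fields): row for row in rows}

def compare_files(before, after):
    """Classify keys as added, removed, or changed (with the differing fields)."""
    added = sorted(set(after) - set(before))
    removed = sorted(set(before) - set(after))
    changed = {}
    for key in sorted(set(before) & set(after)):
        fields = set(before[key]) | set(after[key])
        diffs = {f: (before[key].get(f), after[key].get(f))
                 for f in sorted(fields)
                 if before[key].get(f) != after[key].get(f)}
        if diffs:
            changed[key] = diffs
    return added, removed, changed

# Hypothetical extracts from two mock conversion runs.
mock1 = "vendor_id,name,city\nV1,Acme,Akron\nV2,Globex,Ithaca\n"
mock2 = "vendor_id,name,city\nV1,Acme,Toronto\nV3,Initech,Akron\n"

before = load_keyed(mock1, ["vendor_id"])
after = load_keyed(mock2, ["vendor_id"])
added, removed, changed = compare_files(before, after)

# A delta load file would carry only the new and changed rows.
delta_rows = [after[k] for k in added] + [after[k] for k in sorted(changed)]
print(added, removed, changed)
```

The same function covers all three uses mentioned above, since each one is a comparison of two keyed files: legacy against legacy between mock runs, legacy against load data, and load data against data already in the target system.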

With this achievement, Definian looks forward to continuing our mission of removing data from the critical path for the program’s next phases.

Successful Data Quality & Synchronization Deployment

TORONTO, ON, CA: Definian proudly announces the successful completion of a JDE E1 data quality and synchronization initiative for a $1B human resources services and technology company. During this project, Definian partnered with a long-term client to perform a data synchronization audit between the data of an acquired company, running on a custom ERP platform, and the organization’s global instance of JDE E1.

Premier International (now Definian) first partnered with this organization in 2012, performing more than five separate implementations and deployments for them over the years. After acquiring another company several years ago, the organization maintained an ongoing interface and manual processes to keep the acquired company’s custom ERP in sync with the global instance of JDE E1. Earlier this year, they determined that their interface procedure was not keeping the two systems fully synchronized.

Leveraging the powerful reporting capabilities of Applaud®, Definian quickly identified thousands of discrepancies which had been introduced over the years since the acquisition. Once identified, Definian proceeded to cleanse the impacted data, bringing the two systems back into sync and allowing the organization to move forward with the confidence that their data is accurate.

Definian looks forward to continuing to help companies ensure their data quality remains held to a high standard.

7 Reasons Data Migrations Fail

Industry experts agree that Data Migration poses the largest risk in any implementation. While there is a common misconception that Data Migration is simply moving data from Point A to Point B, the reality is almost always much more complicated.

Gartner has recognized the risk inherent to Data Migration. “Analysis of data migration projects over the years has shown that they meet with mixed results. While mission-critical to the success of the business initiatives they are meant to facilitate, lack of planning structure and attention to risks causes many data migration efforts to fail.” (Gartner, “Risks and Challenges in Data Migrations and Conversions,” February 2009, ID Number: G00165710)

What exactly causes Data Migration to be so risky? How can Definian (PI) help you avoid the pitfalls?

- Poorly understood / undocumented legacy systems: Every company’s data landscape is unique. It may encompass everything from decades old mainframes to homegrown one-off databases, each with its own level of support. Documentation may be non-existent, institutional knowledge may be limited, and key staff may be nearing retirement.

PI Solution: An up-front data assessment can help unravel data relationships across your landscape, providing comprehensive data facts. Our Applaud® software can seamlessly connect directly to many different database types, including mainframes, and quickly generate an overview of your data landscape.

- Incorrect / incomplete requirements that don’t reflect reality: Data Migration requirements are often developed based on assumptions around the data, rather than actual fact. Mappings and translations based on assumptions may miss key values. Duplication between or across legacy data sources may not be expected or accounted for. Data structure disparity between legacy data sources and the new target system may not be fully understood.

PI Solution: Comprehensive data facts provided by our data assessment help ensure requirements reflect reality. Analysis reports identify duplicated data for resolution, which can be automatically corrected by the migration. Error handling built into the migration programs calls out unexpected discrepancies between source and target data structures.

- Poor Data Quality / Incomplete Data: Your new system is only as good as the data underpinning it. Missing, invalid or inconsistent legacy data can cause ripple effects when it comes to the new system. While data may never be 100% clean, lack of attention to Data Quality can cripple even the most straightforward projects, leading to last-minute cleansing initiatives. How do you ensure that any data gaps are filled and that you are not migrating too little (or too much) data?

PI Solution: Cleansing is a key pillar of our Data Migration approach. Defining a comprehensive Data Quality strategy right from the start allows time to account for the most pressing issues, including violations of your business specific policies. Starting on day one, we identify data gaps and initiate a data enrichment project to address any missing or invalid data.

- Lack of Attention to Detail: It is very easy to overlook seemingly innocuous changes between source and target systems. Fields with the same name may mean different things, both within and across systems. A different field name may be used for the same purpose across systems or multiple values may have the same underlying meaning. Date and time formatting and field length differences can also easily be overlooked, with disastrous impact.

PI Solution: Our proprietary software, Applaud, automatically alerts us to differences in field length or field types. Our Profiling, Analysis and Deduplication tools are put to work from the start to sniff out field level overlap and disconnects.

- Constant Changes: Data Migration projects are all about the changes. Even the most thought through business requirements start to change once testing gets underway and the business sees how the new system performs. Changes can easily number in the hundreds and need to be applied and tested quickly to avoid putting the schedule at risk by delaying test cycles.

PI Solution: Applaud allows the team to quickly modify the Data Migration programs to reflect (and test) requested changes. Changes that might take days with traditional tools can often be turned around in hours, allowing the team to implement and test changes multiple times a day, if necessary. Our project management tools keep track of all outstanding requirements and requested changes so that everyone knows exactly what’s going on.

- Lack of Planning / Testing: Data Migration is a complex process, but comprehensive testing often takes a backseat to other concerns. Testing needs to be done throughout the project, and not just by the developers. The functional team and the business need to be engaged with testing to ensure that all requirements are properly met and that all rules are properly applied. Bypassing or delaying testing can allow corrupted data to sneak into the new system or result in data delivered at Go Live that does not meet the needs of the business. Just because a record loaded doesn’t mean it’s correct.

PI Solution: Testing is built in at every phase of the project, with full formal test cycles and shorter unit tests validating and confirming the Data Migrations well in advance of Go Live. Extensive error reporting highlights potential issues early on.

- Poor Communication: Data Migration is generally part of a larger project and must coordinate reams of changes to complex technical requirements while also engaging the business functionally. Without strong IT and cross-functional team communication, unintended results are bound to occur.

PI Solution: Our consultants act as the bridge between the functional and technical teams, questioning and challenging illogical requirements to ensure the Migrations meet both sides’ requirements. Constant communication keeps all parties informed.

When you keep these risk factors in mind, you can approach every Data Migration with a comprehensive plan to avoid falling behind before you even start, reducing the risk and helping to ensure that the overall project succeeds. At Definian, we help companies all over the world do this every day.
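As an illustration of the "Lack of Attention to Detail" risk above, the sketch below flags mapped fields whose type changes or whose source length exceeds the target length. It is a generic example, not how Applaud works internally; the schema representation (`field -> (type, length)`), the field names, and the mapping are hypothetical.

```python
def find_field_mismatches(source_schema, target_schema, field_map):
    """Report mapped fields whose type differs or whose source length
    exceeds the target length.

    Schemas map field name -> (type, max length or None).
    `field_map` maps source field name -> target field name.
    """
    issues = []
    for src_field, tgt_field in field_map.items():
        src_type, src_len = source_schema[src_field]
        tgt_type, tgt_len = target_schema[tgt_field]
        if src_type != tgt_type:
            issues.append((src_field, tgt_field,
                           f"type {src_type} -> {tgt_type}"))
        elif src_len is not None and tgt_len is not None and src_len > tgt_len:
            issues.append((src_field, tgt_field,
                           f"length {src_len} > {tgt_len}"))
    return issues

# Hypothetical legacy and target definitions.
source_schema = {
    "CUSTNAME": ("char", 60),
    "ORDDATE": ("char", 8),   # date stored as text, e.g. YYYYMMDD
    "CUSTNO": ("char", 10),
}
target_schema = {
    "customer_name": ("char", 40),
    "order_date": ("date", None),
    "customer_number": ("char", 10),
}
field_map = {
    "CUSTNAME": "customer_name",
    "ORDDATE": "order_date",
    "CUSTNO": "customer_number",
}

issues = find_field_mismatches(source_schema, target_schema, field_map)
for issue in issues:
    print(issue)
```

Running a check like this before any data moves turns silent truncation and date-format surprises into an explicit list of mapping decisions to resolve.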

The Impact of a Failed Data Migration

The cost of a Data Migration can be thought of from two angles: the Direct Cost (how much did the services cost) and the Indirect Cost (lost revenue due to decisions based on faulty data, downtime required to correct bad data, the public relations impact of a high-visibility failure, etc.).

What happens when a Data Migration goes wrong? What is the impact on your project, your business and yourself?

Project Impact

Budget: Data issues are often addressed by throwing resources at the problem as Go Live nears. While this may keep things on schedule, it causes the budget to explode and quality to suffer.

Schedule: When Data Migration goes wrong, there is a direct impact on your project. The most obvious impact may be to the project schedule, pushing back testing events or even Go Live dates, but the true impact is more widespread. Resources expected to roll onto other projects are unable to do so as they continue to support your new schedule. Timelines for these other initiatives may also need to be adjusted to work around your dates. The domino effect continues from there, hurting not just your project but your entire organization.

Inoperable Solution: No matter how much time and effort is spent configuring and customizing your new system to meet your requirements, none of it matters if the underlying data is not right. Incorrect data may cause the system to behave in unexpected ways—or even not work at all if certain constraints are violated.

Business Impact

Data Loss / Corruption: Untested Data Migrations can hide all manner of problems. Data may not migrate correctly, or at all. While risk can be mitigated with thorough testing and reconciliations, the impact of lost or transposed data cannot be overstated. In addition to potential revenue loss or legal impact, for customer-facing enterprises missing data can become a PR incident.

Flawed Decision Making: Reports and metrics are only as good as the data driving them. Inaccurate data leads to inaccurate forecasting and flawed decision making, which in turn impacts productivity and profitability. If inadequate attention is paid to ensuring that the data is complete, consistent, and accurate, your metrics may not be giving you a realistic picture.

Extended Downtime: Data issues identified after Go Live often need to be corrected and the corrections can cause costly downtime as they are applied. Downtime not only impacts profitability and productivity, but can also contribute to lack of trust in the new solution.

Lost Revenue: Data loss, flawed decision making and extended system downtime all contribute to lost revenue. If the system is not available to take orders or if products are not properly defined, the bottom line suffers.

Audit / Regulatory: Depending on the industry, there may be audit or regulatory statutes that dictate the depth of history that must be retained. Issues with the Data Migration may cause these requirements to be inadvertently violated.

Lack of Confidence: When the new system doesn’t act as expected or reports don’t reflect reality, they start to be seen as untrustworthy. The business will start to discount, distrust, or disregard the shiny new system and may even start agitating for a return to the old platforms or building their own workarounds.

Personal Impact

Staff Impact: Increased hours and stress caused by struggling to keep projects on schedule impacts morale, leading to disgruntled employees and higher turnover.

Leadership Impact: When incorrect data leads to flawed decision making or causes a PR disaster, the public only sees the end result—not what led to it in the first place. This reflection leads to lack of credibility, lack of confidence and personal risk for project sponsors, decision makers and stakeholders.

Mitigating Data Risk for a $2.8B International Higher Education Organization

Project Summary

A $2.8B for-profit higher education organization continually acquired universities as their network expanded over the years, necessitating data consolidation and migration to one solution.

Traditionally, each university had utilized separate financial and student management systems. Monthly financial reports were prepared in each of the different financial systems and provided to Hyperion Financial Management, leading to an extended financial close process each month as the results were compiled and reconciled.

To improve this process, the organization initiated a project to implement PeopleSoft as a centralized replacement for the disparate localized solutions, with the intent to consolidate data at the corporate level and streamline financial reporting and student management.

This case study explains Definian’s role in the successful financial ledger data migration to PeopleSoft for nearly twenty of the organization’s South American universities.

Requirements

Below are the four high-level data migration requirements imperative to the success of the implementation.

- The data from several distinct local financial systems needed to be extracted, cleansed, and transformed before it could be loaded into a single instance of PeopleSoft.

- The migrated data needed to be reconciled back to both the source data and to Hyperion after transformation.

- A standardized Chart of Accounts needed to be applied to each in-scope university, aligning with their existing Hyperion structure.

- The data migration process had to be standardized and repeatable, as new universities were added to the scope over the course of the project.

Client Challenges

There were many obstacles to making the data migration successful. Some of the most significant challenges were:

- The local financial systems and locations of the relevant data were not always known or well understood from an IT perspective, which obscured the legacy data landscape.

- Many of the legacy systems were homegrown and continuously in development and under revision, resulting in frequently changing requirements.

- Legacy systems contained poor data quality, such as missing, inconsistent and invalid data.

- Individual universities maintained their financial information across disparate data sources, increasing the difficulty of both conversion and reconciliation.

- The legacy financial data did not always balance with Hyperion’s reported financials, increasing the difficulty of balancing and reconciling all financial data.

- The data structures of the legacy systems were drastically different than the target PeopleSoft structure where accounts needed to be merged, split, and restructured.

- Frequent changes to the Chart of Account mappings, which translated local accounts to the consolidated PeopleSoft financial structure, needed to be applied and validated within short time frames, often several times in a single day.

- The changing target account strings coupled with the stringent audit requirements meant that any invalid data could fail to load to the target system. Bad data and unmapped accounts needed to be identified and corrected early in the process to ensure load success.
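The last two challenges, frequent mapping changes and the need to catch unmapped accounts before the load, can be illustrated with a short sketch. This is a simplified example under assumptions, not the project's actual programs: the ledger line layout, the university codes, and the account values are hypothetical.

```python
from decimal import Decimal

def apply_account_mapping(ledger_lines, mapping):
    """Translate local accounts to target accounts.

    `mapping` maps (university, local_account) -> target account.
    Lines without a mapping are returned separately so they can be
    corrected before the load is attempted.
    """
    translated, unmapped = [], []
    for line in ledger_lines:
        target = mapping.get((line["university"], line["local_account"]))
        if target is None:
            unmapped.append(line)
        else:
            translated.append({**line, "target_account": target})
    return translated, unmapped

def total(lines):
    """Sum of line amounts, used to confirm nothing was lost in translation."""
    return sum((line["amount"] for line in lines), Decimal("0"))

# Hypothetical ledger lines from two universities.
ledger = [
    {"university": "U01", "local_account": "1000", "amount": Decimal("150.00")},
    {"university": "U01", "local_account": "1010", "amount": Decimal("-150.00")},
    {"university": "U02", "local_account": "A-77", "amount": Decimal("42.50")},
]
# Two local accounts merge into one target account; U02's account is not mapped yet.
mapping = {("U01", "1000"): "110000", ("U01", "1010"): "110000"}

translated, unmapped = apply_account_mapping(ledger, mapping)
print(len(translated), "translated;", len(unmapped), "unmapped")
```

Because the mapping is plain data, a revised Chart of Accounts can be re-applied and re-validated as often as it changes, and the total of translated plus unmapped lines can be checked against the source total on every run.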

Key Activities

- The combination of Definian’s data migration experts and Applaud’s automated extraction and profiling abilities enabled a quick and thorough landscape analysis of the various legacy systems. This in turn enabled the team to identify the locations and types of financial data in each system.

- The integrated analytics and reporting functions made it possible to perform a deeper analysis on the legacy data, identify potential data issues and establish a data quality strategy.

- These tools were also used to identify and create unique combinations of entities, accounts and degree codes, enabling the creation and completeness of cross-reference mapping documents.

- Definian's experienced data migration consultants were able to identify, prevent, and resolve data issues before they became project issues.

- Applaud’s data transformation capabilities enabled the team to quickly build repeatable data migration programs and to swiftly react to every specification change.

- The transformation capabilities also made it possible to develop a template that could be easily adapted to support additional universities as they came in scope.

- The integrated reporting tools were helpful in developing full financial reconciliations between the legacy source data, reported monthly Hyperion balances, and the translated PeopleSoft load files.

- All the pieces were tied together by Definian’s RapidTrak methodology, which was essential to the team decreasing the overall data migration effort and reducing project risk.

The Bottom Line

The Applaud® Advantage

To help overcome the expected data migration challenges, the organization engaged Definian’s Applaud data migration services.

Three key components of Definian’s Applaud solution helped the client navigate their data migration:

- Definian’s data migration consultants: Definian’s services group averages more than six years of experience working with Applaud, exclusively on data migration projects.

- Definian’s methodology: Definian’s EPACTL approach to data migration projects is different than traditional ETL approaches and helps ensure the project stays on track. This methodology decreases overall implementation time and reduces the risk of the migration.

- Definian’s data migration software, Applaud: Applaud has been optimized to address the challenges that occur on data migration projects, allowing the team to accomplish all data needs using one integrated product.

The Results

The end result of using Applaud data migration services was a series of successful implementations as each subsequent country completed their adoption of the PeopleSoft solution. Without the Applaud team, it is unlikely the number of iterations required to address all mapping issues would have been possible in the required timeframe. Definian prioritized flexibility and standardization, two aspects that allowed the project to consistently adapt to timeline changes and project plan growth.

Over the course of the project, the Applaud process was rolled out to several of the client’s country projects and nearly twenty different universities. By building a repeatable process that could be rolled out to future sites with minimal development, Definian streamlined deployment of the Applaud process to additional countries and universities. The client team was able to focus on the functionality of their PeopleSoft solution going forward rather than the specific process of the data migration, and Definian was able to focus on bringing this project to a successful close.