Articles & case studies

Data Deliverables to Include in an ERP Implementation RFP

Data complexity and its impact on project success are routinely underestimated on large ERP implementations such as SAP, Oracle, Workday, and Infor. Over the past 35 years of working on complex data initiatives, we’ve seen our share of RFPs that omit the bulk of the data activities needed to drive project and long-term organizational success. This high-level list outlines the data activities that should precede the implementation, the data work to be performed during the implementation, and the ongoing data quality management practices to establish for post-implementation.

Including these deliverables in the proposal/scoping documents is the first step in transforming the data landscape from its current state to what it needs to be to drive the business for years to come. If the plan is to handle some of these activities internally, they should still be included in the RFP to sanity check the internal resourcing. The overall scope of the project can always be adjusted.

If you have additional questions about data deliverables or execution, Definian can help define the scope and/or take on the execution and management of the data components of your firm's data journey.

Pre-Implementation Assessment

- Confirm the in-scope datasets

- Identify the data sources where the data for each of those datasets resides

- Identify which data source is the system of record for each data element

- Identify and quantify major areas of concern and how they will be addressed

- Create a data dictionary/profile for each dataset that includes each element name, the type of data it contains, the frequency distribution of its values (if relevant), etc.

- Generate data quality reporting that identifies high level data quality issues at a summary and detail level

- Provide an interim storage strategy, including the method and location of where the cleaned data will live and how it will be maintained

- Work with the business to determine regulatory and non-regulatory archival/historical data requirements

- Document data validation/reconciliation requirements across each data area

- Recommend solutions for master data maintenance that preserve data integrity in legacy systems for the duration of the project

- Quantify the data effort for the remaining portion of the implementation
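As an illustration of the data dictionary/profile deliverable, the following minimal Python sketch assumes each legacy dataset has already been extracted as a list of dictionaries, one per record. The function name `profile_dataset` and its output fields are hypothetical; a real profile would usually add lengths, patterns, and cross-source checks.

```python
from collections import Counter


def profile_dataset(rows, max_distinct=10):
    """Hypothetical helper: build a simple profile entry for every element.

    rows: list of dicts, one per legacy record (assumed in-memory extract).
    Returns {element_name: {types, populated, missing, distinct, top_values}}.
    """
    columns = sorted({col for row in rows for col in row})
    profile = {}
    for col in columns:
        values = [row.get(col) for row in rows]
        # Treat None and empty strings as missing values.
        present = [v for v in values if v not in (None, "")]
        counts = Counter(present)
        profile[col] = {
            "types": sorted({type(v).__name__ for v in present}),
            "populated": len(present),
            "missing": len(values) - len(present),
            "distinct": len(counts),
            # Frequency distribution only for low-cardinality elements.
            "top_values": counts.most_common(max_distinct)
            if len(counts) <= max_distinct
            else None,
        }
    return profile
```

The `max_distinct` cutoff keeps the report readable: a status or state field gets a full frequency distribution, while a unique key simply shows its distinct count.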

Data Transformation

- Create and execute a data quality strategy that includes expected vs. actual burn-down rate, responsibility, criticality level, and resolution category (automated, legacy clean-up, or hybrid automated/manual process)

- Create and execute a data readiness reporting process

- Perform the following automated cleansing: address standardization and accuracy, removal of special characters, identification/resolution of duplicate records, and survivorship management

- Harmonize the overlap of master data between multiple source systems

- Extract data from each legacy data source

- Define and maintain the detailed data mapping specifications

- Utilize a pre-validation readiness process that checks data for the majority of errors without touching the new environment

- Transform the data into the target system load formats

- Load data into the new application

- Establish a validation/reconciliation reporting process that includes a data validation plan, scorecards, and supporting reports
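Duplicate identification and survivorship can be sketched in a few lines. This is a deliberately simple Python example under stated assumptions: records are dictionaries with hypothetical `id`, `name`, `postal_code`, and `last_updated` fields, likely duplicates share a normalized name and five-digit postal code, and the most recently updated record survives. Production matching typically layers in address standardization and fuzzy comparison.

```python
import re
from collections import defaultdict


def match_key(record):
    """Assumed matching rule: normalized name plus 5-digit postal code."""
    name = re.sub(r"[^A-Z0-9 ]", "", record["name"].upper())  # strip special characters
    name = re.sub(r"\b(INC|LLC|CO|CORP)\b", "", name)          # drop common legal suffixes
    name = " ".join(name.split())                              # collapse whitespace
    return (name, record["postal_code"][:5])


def dedupe(records):
    """Group likely duplicates and choose one survivor per group.

    Hypothetical survivorship rule: keep the most recently updated record
    (ISO date strings compare correctly as text). Also returns a cross
    reference from every record id to its survivor's id.
    """
    groups = defaultdict(list)
    for rec in records:
        groups[match_key(rec)].append(rec)
    survivors, xref = [], {}
    for members in groups.values():
        survivor = max(members, key=lambda r: r["last_updated"])
        survivors.append(survivor)
        for rec in members:
            xref[rec["id"]] = survivor["id"]
    return survivors, xref
```

The returned cross reference is what downstream conversions, such as sites or open orders, would use to repoint records that referenced a merged duplicate.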

Post Go-Live/Ongoing Master Data Management

- Manage the establishment of an ongoing data governance council through its second meeting

- Establish data quality scorecard metrics and processes

- Document relevant MDM policies, procedures, and data models

Depending on business requirements, much more can be added to the MDM activities; it could be a significant RFP in itself. These bullets lay the groundwork for keeping the organization’s data on the right foot.

Accelerating and Improving an In-Flight Implementation

Project Summary

As part of a business transformation initiative, a Fortune 500 steel company decided to migrate half of its business, 11 divisions, onto a common EBS 12.1 solution. Most data for the divisions resided in a common legacy solution; however, each division also used many additional, disparate systems for purchasing, Enterprise Asset Maintenance (EAM), and Manufacturing Execution System (MES) functions.


After bringing on a System Integrator (SI), the team spent a little over a year designing and configuring the new solution, developing data conversion programs, and running test cycles in preparation for the go-live of its first division. After a year and a half, the first division successfully went live, but it was a struggle. The following year, Definian met with one of the SI team members at Oracle OpenWorld and discussed the project at length. The organization planned to take the remaining 10 divisions live within 20 months, meaning it would need to scale from converting one division at a time to converting as many as 6 at a time! Having seen how much the internal data conversion team struggled with just one division, the SI knew they needed help. A few weeks later, an introduction was arranged to explore how Definian could provide value as a strategic partner in tackling the data migration challenges. After introductions, a few preliminary discussions, and an onsite meeting about current data concerns and Definian’s capabilities, Definian was engaged to conduct a proof of concept.

“Definian has done WONDERS for our data conversion process and really helped us work ahead and shorten the length of our data conversion.”

– Client Data Lead

Proof of Concept

Based on the preliminary meetings and conversations, Definian was under the impression that data could not be converted – at all. We assumed their test cycles had failed because they could not get master data loaded into EBS in early cycles, as we frequently see on other engagements. This was not exactly the case. Outside of some early mistakes, they had achieved a level of success much higher than we typically see with other clients. Customer and supplier conversions achieved >95% successful data loads, and they had a robust item master conversion process. Overall, the master data conversions required an immense amount of manpower and manual input, but they did work.

The main area of focus for the POC was customers. To showcase our abilities, Definian designed reports to bring attention to the vast amount of duplicate customer data already in EBS and show how it could impact the business. Reports were designed to show customers with excessively high credit limits due to having multiple accounts, inaccurate roll-ups of credit limits, and other data quality issues. The team was impressed by what Definian accomplished in only a few days and intrigued by the idea of Definian putting its talents on full display by taking over all data conversion responsibility. It was time to get to work.

Learning the Process

Definian quickly realized how much labor went into each of the master data conversions. To prevent further customer duplication in EBS, each customer data load required manual creation of cross references to existing accounts, cleanup of addresses on both the legacy and EBS sides, and consolidation of legacy site uses. Much like on the customer side, supplier data cleanup required a fully dedicated resource cleaning up data in both legacy and EBS. Both processes were long and drawn out, but both paled in comparison to the item master conversion, which required about three weeks of lead time. All conversion-eligible items had to be added to a spreadsheet so that subject matter experts could research, review, and populate the attributes before the conversion process. Each of these steps was completely manual; there was no way they could scale this to six concurrent divisions, as their project plan required.


All that said, the manual processes associated with data conversion were not their greatest pain point. The data validation process needed a complete overhaul. For each conversion cycle, data was loaded into two instances: the first was referred to as the conversion (lower) instance, and the second was the actual test (higher) instance. Our initial assumption was that the conversion instance was strictly a technical test instance and that the test instance was the only place the division would validate the data. This was not the case.

Each data set needed to be loaded in the lower instance, internally validated (by a deployment team resource), and division validated. Once that was complete, it would be loaded in the higher instance, internally validated, and division validated again. Downstream conversions were not even considered until the predecessor had been division validated in that instance. Getting through all data conversions leading up to a test cycle took well over a month, and even then, they still were not getting through all conversions. During the first cutover we witnessed (but were not involved with), the client was converting formulas, the first dependency after org item assignment, from Saturday night into Sunday morning.

Further complicating data validation was that the documentation of how data was extracted, mapped, and transformed as part of the conversion process was non-existent, out of date, or difficult to read. Each data conversion was assigned to a single developer. Therefore, that developer was the only person who knew exactly how data was being extracted, mapped, and transformed between the legacy and target system. The only way for someone at the division to know why data was converted a certain way was to get in touch with that developer. Data conversion was a black box.

In addition, because different developers worked on different conversions, downstream conversions were a struggle. Each developer used their own version of the legacy-to-EBS cross references: some updated them manually, some created live views, and some never updated them at all. This caused all sorts of disconnects and made it impossible to trace issues back to their source. Even after the first two cutovers, they were never able to complete the full stream of item conversions.

“I used to be up all night trying to solve the issues for my conversion on Go-Live weekend, now Definian just clicks a button.”

– Client Data Conversion Developer

Moreover, even if they cleared every hurdle leading up to cutover weekend, they still faced the daunting task of manually entering thousands of open purchase order and open sales order lines, because there was no automated conversion process in place. As a result, the division had to shift attention away from validating the oft-troubled item-related data sets to ensure all orders were entered by Monday morning.

How We Could Help Right Away

By the time Definian was fully engaged, the UAT data conversion for the first division was underway; therefore, it was too late to take on any of the data conversion responsibilities. Definian’s priority was designing reports to simplify and speed the post-load validation process, allowing them to free up some of their resources leading up to and on cutover weekend. The first way Definian did this was through comparison reports. These reports would allow the deployment team and the division to compare data between the lower and higher instance. If the data was the same between both instances, and they already validated in the lower instance, they could move on. If there were differences, they would just need to understand the differences, and confirm whether they were expected.
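A comparison report of this kind boils down to a keyed diff of the same data set extracted from both instances. The Python sketch below assumes each extract is a list of dictionaries sharing a hypothetical `id` key; `compare_instances` is an illustrative name, not the report Definian built. It lists records present on only one side plus field-level differences for the team to confirm as expected or not.

```python
def compare_instances(lower, higher, key="id"):
    """Hypothetical comparison report between lower and higher instance extracts.

    Returns records found in only one instance and (key, field, lower, higher)
    tuples for every field whose value differs between the two.
    """
    lo = {r[key]: r for r in lower}
    hi = {r[key]: r for r in higher}
    report = {
        "only_in_lower": sorted(lo.keys() - hi.keys()),
        "only_in_higher": sorted(hi.keys() - lo.keys()),
        "differences": [],
    }
    for k in sorted(lo.keys() & hi.keys()):
        # Check the union of fields so a column missing on one side is caught too.
        for field in sorted(lo[k].keys() | hi[k].keys()):
            if lo[k].get(field) != hi[k].get(field):
                report["differences"].append((k, field, lo[k].get(field), hi[k].get(field)))
    return report
```

An empty report means the higher instance matches data the division already validated in the lower instance, so reviewers only spend time on the rows listed.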

Post-conversion validation reporting instilled confidence where none existed before

Definian also developed several master data reconciliation reports to help track changes that needed to be made through the dual maintenance period. These reports compared the data loaded to EBS against the legacy production environment. These reports not only identified dual maintenance issues, but clearly outlined integrity issues across the existing conversion processes. The information gained from these reports allowed our client to resolve significant data issues before they turned into product manufacturing issues.

After the first go-live, the focus shifted to development of data conversion programs. By consolidating the data conversion activities within one cohesive team working in a single development environment, Definian could ensure consistency between interdependent data conversions. For example, rather than each resource creating their own unique mapping, Definian established a single cross reference, referenced by all conversions, to map legacy part codes to EBS items. By the time the third and fourth divisions were beginning data conversion, Definian had taken on most of the conversion responsibility. The client saw immediate improvement in the quality and efficiency of the data migration process. The next challenge was to automate the conversion of open purchase orders and open sales orders.

Definian was told time and time again by subject matter experts that automating the open purchase order and open sales order conversions would not be possible. They felt their situation was too unique and that it was lower risk to enter every open line in EBS over cutover weekend. However, Definian convinced the PMO that it was in their best interest to explore an automated solution, with the understanding that some fallout of orders would be expected.


Because the SI did not have the bandwidth for development and did not want to take on the risk, Definian worked directly with the client’s internal SOA team to build these programs. Initial design, data mapping, and, most importantly, functional process review with stakeholders at all levels of the organization took a few months. But after thorough testing through SIT and UAT, the automated transactional conversions were ready for the next cutover. Suddenly, open sales order conversion time went from 48 consecutive hours of stressful, error-prone manual entry by a team to about 30 minutes. It was a huge win for all parties.

Long Term

By the time the fourth wave of divisions started up, all data conversion activities were being executed in one common environment. Definian’s processes had been refined and streamlined for maximum efficiency.

Conversions within each process area flowed smoothly between Definian’s team members. Definian built robust, efficient processes to generate customer, supplier, and item cross references specific to any EBS instance that Definian was loading to. This prevented the issue of using the wrong cross reference and served as the backbone to all of Definian’s downstream conversions.
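One way to picture an instance-specific cross reference is a small lookup object that refuses conflicting mappings and raises on unknown codes rather than silently loading a blank. The `CrossReference` class, and the idea of building it from (legacy code, target ID) pairs queried from the target instance, are assumptions for illustration, not a description of Definian’s actual implementation.

```python
class CrossReference:
    """Hypothetical legacy-to-target key map scoped to one target instance.

    Building one shared map per instance, instead of one per developer,
    keeps every downstream conversion resolving legacy codes the same way.
    """

    def __init__(self, instance, pairs):
        self.instance = instance
        self._map = {}
        for legacy_code, target_id in pairs:
            existing = self._map.get(legacy_code)
            # A legacy code pointing at two different target records is a data issue.
            if existing is not None and existing != target_id:
                raise ValueError(f"{legacy_code} maps to both {existing} and {target_id}")
            self._map[legacy_code] = target_id

    def resolve(self, legacy_code):
        """Return the target id, or raise so the gap is reported instead of loaded."""
        try:
            return self._map[legacy_code]
        except KeyError:
            raise KeyError(f"No {self.instance} item for legacy code {legacy_code!r}") from None
```

Because each instance gets its own object, a conversion pointed at the test instance cannot accidentally pick up IDs that only exist in the conversion instance.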

Definian had refined pre-validation checks to the point where migration results across the conversions were known prior to the load into EBS. This helped the team avoid the practice of passing data to a source table, needing someone to execute the load program, and then waiting to learn the results before seeing any data quality issues. Additionally, the pre-validation process allowed the division and deployment teams to preview the data and address issues across entire conversion streams without having to wait for a predecessor to finish loading. This in turn led to dramatically increased data quality and decreased conversion run times.
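At its core, a pre-validation check compares each record against the setups that already exist in the target before anything is loaded. In this hypothetical Python sketch, `rules` maps a field to the set of values configured in the target (for example, units of measure), assumed to have been extracted ahead of time; records that pass go forward, and everything else is reported with its reasons.

```python
def pre_validate(records, rules):
    """Hypothetical pre-load check against target configuration.

    rules: {field: set of allowed values}, assumed extracted from the target.
    Returns (clean_records, errors) so results are known before the load.
    """
    clean, errors = [], []
    for rec in records:
        problems = []
        for field, allowed in rules.items():
            value = rec.get(field)
            if value in (None, ""):
                problems.append(f"{field} is missing")
            elif value not in allowed:
                problems.append(f"{field} value {value!r} not configured in target")
        if problems:
            errors.append((rec["id"], problems))
        else:
            clean.append(rec)
    return clean, errors
```

Because every failing record carries all of its reasons at once, a division can fix an entire stream of issues in one pass instead of discovering them one load at a time.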

The Results and Future Plans

Data migration results were known before each conversion cycle

When Definian was brought onto the project, the client had 10+ resources dedicated to data conversion for a single division. Within 9 months, Definian’s team of 4 was executing the data conversion work for 3 (and, at one point, 6) concurrent divisions in less time than it had previously taken to get 1 division ready. The implementation of pre-validation significantly improved data quality and reduced validation time. Automating the open purchase order and open sales order conversions completely changed cutover weekends.

After 10 successful go-lives, the second half of the client’s business is embarking on a four-year transformation initiative that will combine and migrate approximately 15 legacy ERP systems into Oracle ERP Cloud. With a full understanding of the importance of data and the risk it poses to the implementation, the client is going to take this opportunity to adopt and improve data migration practices that couldn’t previously be done under their old process.

In the planning stages of this initiative, the client placed its full trust in Definian. Definian was selected for the project before the System Integrator and was asked to present suggested process changes for this new line of business. Key changes included simplifying the data mapping process, synergizing work between the data, division, and functional teams, and streamlining the data validation process by moving it from nearly entirely post-load to nearly entirely pre-load. Each of Definian’s recommendations was recognized as valuable and adopted into the project plan. Through the strength of our partnership and additional process improvements, we anticipate continuing to accelerate data conversion timelines while improving data quality.

More Than a Party: Reflections from a Definian Tradition

I remember the very first Definian lake house party at Cottonwood Lodge. Only a few months earlier, I had been newly married and looking for a place to start my career. I was trying to unravel the technical complexities of my project, learning to manage my newfound finances, and moving into an apartment when the lake house party – at Jim and Dune Hempleman’s own home – was announced. I expected another round of interviews, carried out in Hawaiian shirts, with just enough beer to make me less articulate but not enough to make me more confident. I was scared.

When the swarms of bugs grew large and the tiki torches grew dim, we headed inside Jim and Dune’s lake house. Over the course of the day I had been able to shake off the nervousness I felt as Definian’s newest employee. Now, almost midnight, seeing everyone migrate indoors, I knew that I had passed the test and started to feel relief.

My relief was cut short as Jim dragged a 48-inch leather-wrapped footlocker between us. Kneeling down, he unlatched the case and revealed rhythm eggs, tambourines, triangles, cymbals, maracas, blocks, and even a rainstick. I am not certain what was said next, I do not know who distributed the instruments, and I do not know who struck that first beat. But I do know that by the third beat I was all-in. As my smile grew, I could see Jim’s grow even bigger. Seeing such joy from our founder gave me confidence. My apprehension and preconceptions about a corporate picnic vanished as I realized the adventure this place had in store for me. The only thing left for me to do was keep playing, knowing that the folks around me would be there to back me up.

Not every one of Jim and Dune’s lake house parties has drum circles. But they always include something that pushes us to do things we never did before. Have you ever sung karaoke? Have you ever raced a tricycle? Have you ever made beef tenderloin and your own Caesar salad dressing? If the answer is ‘No’, then great. If the answer is, ‘No, and I’m terrified of doing so at a company party,’ then even better!

Jim, Dune, and all the principals and managers at Definian pushed me to do things I did not realize I could do – face a tough client, learn a totally new scripting language, meet an aggressive deadline. At the lake house, sitting cross legged on the floor wildly shaking a tambourine, I learned that Definian is a place where I will be encouraged and pushed to succeed while having an entire company behind me, making sure to catch me when I fall.

This year Dune is welcoming not only me and my wife, but also my two sons into her home. The kids are eager for the tangibles – the boat ride, the bounce house, and the fireworks. But I am grateful they get to see the cultural center of a company like this, so that as they grow up they understand what a truly great company feels like.

Dune is the spiritual center of Definian and the driving force behind Definian’s culture. From the boundlessness of her hugs to the leadership she showed when Jim passed, Dune has guided us with dedication and complete selflessness. She also knew how to foster growth of the entire individual, asking me about career aspirations one minute and the next minute reminding me how lucky I am to have Tuyet as a wife.

Dune went out of her way to make sure she spent time with me during the first lake house party. I’ve seen Dune do the same thing with every new round of hires, getting to know who they are and what they aspire to and making them feel included. I’m looking forward to this year’s lake house party, where this warmth and encouragement will inspire new friends to do something beautiful and hilarious like race tricycles. Weeks from now, when they realize that this makes them not only better consultants but also better people, I’ll say, ‘I told you so.’

Then I’ll email Dune and thank her for creating a place where people have the freedom to do incredible things.

Data Migration Checklist For Workday Implementations

Billionaire investor Charlie Munger has said, “No wise pilot, no matter how great his talent and experience, fails to use his checklist.” We’ve followed Charlie’s advice and created a high-level checklist to kick-start your data migration and Workday implementation. We’ve paid special attention to the high-risk area of data conversion and built on our recommendations for a successful data assessment.

Assess the Landscape

Start planning the data effort by assessing what you have today and where you are going tomorrow. While frequently glossed over, assessing the landscape provides three prime benefits to the program. First, it gets the business thinking about what can be achieved with its data by outlining what data it has today and what data it would like to have in the future. Second, the exercise engages the organization on the importance of data and instills an ongoing master data management and data governance mindset. Third, it enables the team to start addressing, early and smoothly, the major issues that programs encounter when assessment is glossed over.

- Create legacy and future Workday data diagrams that capture data sources, business owners, functions, and high-level data lineages.

- Outline datasets that don’t exist today that would be beneficial to have in Workday.

- Ensure the future state Workday landscape aligns with data governance vision and roadmap.

- Reconcile legacy and future state data landscapes to ensure there are no major unexpected gaps.

- Perform initial evaluation of what can be left behind/archived by data area and legacy source.

- Perform initial evaluation of what needs to be brought into the new Workday landscape and reason for its inclusion.

- Profile the legacy data landscape to identify values, gaps, duplicates, high level data quality issues.

- Interview business and technical owners to uncover additional issues and unauthorized data sources.

Create the Communication Process

Communication is a critical component of every implementation. Breaking down silos enables every team member to understand the current issues, the impact of specification changes, and the state of data readiness.

- Create migration readiness scorecard/status reporting templates that outline both overall and module specific data readiness.

- Create a data quality strategy that captures each data issue, its criticality, owner, number of issues, clean-up rate, and mechanism for clean-up.

- Establish data quality meeting cadence.

- Define the detailed specification approval, change approval, and change management procedures.

- Collect the data mapping templates that will be used to document the conversion rules from legacy to target values.

- Define high-level requirements for what data should be loaded into each tenant. We recommend getting as much data loaded as early as possible, even if bare-bones at first.

- Define status communication and issue escalation processes.

- Define the process for managing the RAID (risks, assumptions, issues, dependencies) log.

- Define data/business continuity process to handle vacation, sick time, competing priorities, etc.

- Host a meeting that outlines the process, team roles, expectations, and schedule.
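To show how the data quality strategy fields fit together, here is a minimal Python sketch of one issue record with an actual burn-down rate. The `DataIssue` class and its field names are hypothetical, and the burn-down calculation assumes open-record counts are captured at regular snapshots (for example, weekly).

```python
from dataclasses import dataclass, field


@dataclass
class DataIssue:
    """Hypothetical row in a data quality strategy tracker."""
    name: str
    criticality: str   # e.g., "high", "medium", "low"
    owner: str
    mechanism: str     # e.g., "automated", "legacy clean-up", "hybrid"
    open_counts: list = field(default_factory=list)  # open records per snapshot

    def cleanup_rate(self):
        """Average records resolved per snapshot interval (actual burn-down)."""
        if len(self.open_counts) < 2:
            return 0.0
        resolved = self.open_counts[0] - self.open_counts[-1]
        return resolved / (len(self.open_counts) - 1)

    def on_track(self, expected_per_interval):
        """Compare the actual burn-down against the expected rate."""
        return self.cleanup_rate() >= expected_per_interval
```

For example, an issue that drops from 400 to 340 to 250 open records over three weekly snapshots is burning down at 75 records per week, which the data quality meeting can compare against the rate needed to finish before the next tenant build.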

Capture the Detailed Requirements

Everyone realizes the importance of up-to-date and comprehensive documentation, but many hate maintaining it. Documentation can make or break a project. It heads off unnecessary rehash meetings and brings clarity to what should occur versus what is occurring.

- Document detailed legacy to Workday data mapping specifications for each conversion and incorporate additional cleansing and enrichment areas into data quality strategy.

- Document system retirement/legacy data archival plan and historical data reporting requirements.

Build the Quality, Transformation and Validation Processes

With the initial version of the requirements in hand, it's time for the team to build the components that will perform the transformation, automate cleansing, create quality analysis reporting, and validate and reconcile converted data. To reduce risk on these components, it's helpful to have a centralized team and a dedicated data repository that all of these components can access.

- Create data analysis reporting process that assists with the resolution of data quality issues.

- Build data conversion programs that will put the data into the Workday-specific format (EIB, Advanced Load, etc.).

- Incorporate validation of the conversion against Workday configuration and FDM from within the transformation programs.

- Enable pre-validation reporting process that captures and tracks issues outside of the Workday load programs.

- Define data reconciliation and data validation requirements and corresponding reports.

- Verify data quality issues are getting incorporated into the data quality strategy.

- Confirm any delta or catch-up data requirements are accommodated within the transformation programs.
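The core of a conversion program is applying the mapping specification: each target column comes from a legacy column, optionally translated through a value map. The Python sketch below assumes a hypothetical spec layout and hypothetical column names, and it writes a generic CSV; it does not reproduce an actual Workday EIB template, whose columns are defined by Workday. Unmapped legacy values are collected for the data quality strategy rather than guessed.

```python
import csv
import io


def apply_mapping(rows, field_map, value_maps):
    """Hypothetical transformation driven by a mapping specification.

    field_map:  {target_column: legacy_column}
    value_maps: {target_column: {legacy_value: target_value}}
    Returns (target_rows, sorted list of unmapped (column, value) pairs).
    """
    out, unmapped = [], set()
    for row in rows:
        target = {}
        for tgt_col, src_col in field_map.items():
            value = row.get(src_col, "")
            if tgt_col in value_maps:
                if value not in value_maps[tgt_col]:
                    unmapped.add((tgt_col, value))  # report, don't guess
                value = value_maps[tgt_col].get(value, "")
            target[tgt_col] = value
        out.append(target)
    return out, sorted(unmapped)


def to_csv(rows, columns):
    """Write rows as a generic CSV load file (not a real Workday template)."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=columns, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()
```

Keeping the mapping in data rather than in code means a specification change approved through the change process becomes an update to `field_map` or `value_maps`, not a rewrite of the program.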

Execute the Transformation and Quality Strategy

While often treated separately, data quality and transformation execution really go hand in hand. The transformation can't succeed if data quality is poor, and additional quality issues are identified during the transformation itself.

- Ensure that communication plans are being carried out.

- Capture all data related activities to create your conversion runbook/cutover plan while processes are being built/executed.

- Create and obtain approval on each Workday load file.

- Run converted data through Workday data load process.

Validate and Reconcile the Data

In addition to validating Workday functionality and workflows, the business needs to spend a portion of its time validating and reconciling the converted data to make sure that it is both technically correct and fit for purpose. The extra attention spent validating the data and confirming solution functionality could mean the difference between a successful go-live and an implementation failure or costly operational issues down the road.

- Execute data validation and reconciliation process.

- Execute specification change approval process per validation/testing results.

- Obtain sign-off on each converted data set.

Retire the Legacy Data Sources

Depending on industry and regulatory requirements, system retirement could be of vital importance. Just because it is last on this checklist doesn't mean system retirement should be an afterthought or addressed only at the end. Building on the high-level requirements captured during the assessment, the retirement plan should be fleshed out and implemented throughout the course of the project.

- Create the necessary system retirement processes and reports.

- Execute the system retirement plan.

Following this checklist can minimize your chance of failure or rescue your at-risk Workday implementation. While this list seems daunting, rest assured that what you get out of your Workday implementation will mirror what you put into it. Time, effort, resources, and – most of all – quality data will enable your strategic investment in Workday to live up to its promises.

8 Benefits of a Central Data Repository

There are several techniques that Definian utilizes on every implementation to eliminate data migration risk. One technique critical to the success of Oracle, SAP, Workday, and other complex implementations is a central data repository. For many years, we have leveraged this technique, and optimized our software’s data migration repository, as one of the key tenets of our data migration process. The following 8 benefits highlight the importance of the repository and are routinely realized on our implementations.

1. One Location = Improved Analysis

One of the biggest benefits of having a central data repository is that it places the entire data landscape in one location. With the data in a single place, analysis reports that include information about each individual source, as well as across multiple sources, can be implemented more easily. These analysis reports provide a 360-degree view of the data landscape and identify conditions that cause issues such as duplicates, missing/invalid values, and other integrity problems. Without a data repository, cross-system analysis is difficult, leaving many issues undiscovered and resulting in bad, duplicate, or orphan data ending up in the target system.
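Orphan detection is a good example of analysis that depends on having every source side by side. In this hypothetical Python sketch, child records (say, supplier sites) from several systems are checked against the combined set of parent IDs from every system; a check run inside one system at a time cannot tell a genuine orphan from a child whose parent simply lives elsewhere. The source names and record layout are assumptions.

```python
def find_orphans(child_sources, parent_sources):
    """Hypothetical cross-system orphan check.

    child_sources:  {source_name: [{"id": ..., "parent": ...}, ...]}
    parent_sources: {source_name: [{"id": ...}, ...]}
    Returns (source_name, child_id, missing_parent_id) for every orphan.
    """
    # Combine parent keys from every system held in the repository.
    all_parents = set()
    for records in parent_sources.values():
        all_parents.update(r["id"] for r in records)

    orphans = []
    for source_name, records in child_sources.items():
        for rec in records:
            if rec["parent"] not in all_parents:
                orphans.append((source_name, rec["id"], rec["parent"]))
    return orphans
```

Here a site in one ERP that points to a supplier maintained in another ERP is correctly treated as valid, while a site whose supplier exists nowhere is flagged before it can reach the target system.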

2. No Impact to Production

The ability to create and run analysis reports across the entire landscape adds an incredible amount of value to the project. Without the data repository, analysis would need to be performed on test or production environments. If the analysis is done in test environments, the data will be out of date and out of sync, and if there is a data cleansing effort, monitoring the status of data quality issues becomes difficult. If the analysis is done on production, impact to ongoing processing is a concern, and activities must take place within limited windows. Because data can be extracted as needed, the repository sidesteps both issues, as extraction activities have minimal impact on production databases.

3. Predict Conversion Results

Predicting conversion results without actually populating the new application is critical for knowing the data is go-live ready before cutover. A centralized data repository facilitates this effort. With a repository, it is possible to import configuration data, table structures, and metadata from the target system and run mini conversion tests that ensure there are no missing cross-reference values, setups, or other conversion errors. This predictability becomes even more important in cloud implementations, where control of the environments is limited and restoring backups or backing out data is difficult or impossible. Since these mini test cycles occur frequently, issues are identified early and pulled forward in the project. By the time of an official test cycle or go-live, the project team has high confidence in the data, as it has already been run through the wringer.

4. Clean Independent of Production

Data quality is the number one complaint on nearly every project, and to meet data cleansing requirements, additional data sources and cleansing spreadsheets need to be incorporated into the process. Often these sources are filled out by the business to override data that needs to be cleansed. A data repository provides a way to validate and incorporate these additional cleansing sources into the data conversion/cleansing processes.

5. Consistently Transform and Enrich Across Multiple Data Sources

The legacy and target applications were developed by different companies, with different business requirements, in different eras of technology. A byproduct of this is that the new system will need data that doesn’t currently exist to function. To enhance the data to include this information, new data needs to come from somewhere. Sometimes this data can be calculated by the conversion programs. Perhaps county code is needed in the new system, but doesn’t exist in the legacy systems. Data conversion programs can calculate the county value during their transformations.

However, something like primary contact indicator might not exist but is something that the business wants to utilize going forward. The easiest way to take care of this request is for the business to fill out a spreadsheet that indicates who the primary contact should be for each supplier. This spreadsheet can then be imported into the data repository and utilized by the data conversion programs during the migration.
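A minimal sketch of validating and applying such a business-maintained spreadsheet follows, assuming it has been saved as CSV with hypothetical `supplier_id` and `primary_contact` columns. Rows that reference unknown suppliers, leave the contact blank, or repeat a supplier are reported back to the business rather than silently applied.

```python
import csv
import io
from typing import Dict, List, Set, Tuple


def load_primary_contacts(csv_text: str, known_suppliers: Set[str]) -> Tuple[Dict[str, str], List[str]]:
    """Read a business-maintained sheet; keep valid rows and report the rest."""
    contacts: Dict[str, str] = {}
    rejects: List[str] = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        # DictReader yields None for missing trailing fields, so normalize to "".
        supplier = (row["supplier_id"] or "").strip()
        contact = (row["primary_contact"] or "").strip()
        if supplier not in known_suppliers:
            rejects.append(f"unknown supplier {supplier!r}")
        elif not contact:
            rejects.append(f"blank contact for {supplier!r}")
        elif supplier in contacts:
            rejects.append(f"duplicate row for {supplier!r}")
        else:
            contacts[supplier] = contact
    return contacts, rejects


def enrich(suppliers: List[dict], contacts: Dict[str, str]) -> List[dict]:
    """Add the primary contact to each supplier; None where the sheet had no valid row."""
    return [{**s, "primary_contact": contacts.get(s["id"])} for s in suppliers]


sheet = """supplier_id,primary_contact
S001,Pat Lee
S002,
S009,Sam Ortiz
"""
suppliers = [{"id": "S001", "name": "Acme"}, {"id": "S002", "name": "Globex"}]
contacts, rejects = load_primary_contacts(sheet, {s["id"] for s in suppliers})
for row in enrich(suppliers, contacts):
    print(row)
print(rejects)
```

Keeping the rejects as a list makes it easy to send them back to the business as a follow-up cleansing worksheet.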

6. Streamline Data Migration Testing

Data is constantly changing, which makes it difficult to validate and test data conversion programs. Since data in the repository is captured at a point in time, enhancements can be reviewed and tested without worrying about timing issues. Without a data repository, validating record counts and tracking down data issues becomes an exercise in futility, as those metrics change from one minute to the next.

7. Simplify Post Conversion Reconciliation

A critical piece of all data conversion projects is the post conversion reconciliation. There are several ways to approach the reconciliation and the repository accelerates the effort. After the data is migrated, it can be extracted into the repository alongside the original source data. Since the repository has the data at the exact point in time of the conversion, it is possible to definitively prove out the migration process without having to worry about post conversion updates.
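As a rough illustration, the reconciliation can be sketched in Python, assuming the source snapshot has already been transformed into the values the target should hold and is keyed the same way as the data extracted back from the target. The `reconcile` function and the sample invoices are hypothetical; a control total on the amount field is shown alongside the record-level checks.

```python
from decimal import Decimal
from typing import Dict, List


def reconcile(expected: Dict[str, dict], loaded: Dict[str, dict], fields: List[str]) -> Dict[str, list]:
    """Compare expected post-conversion values with data extracted back from the target."""
    missing = sorted(expected.keys() - loaded.keys())      # should have loaded but did not
    unexpected = sorted(loaded.keys() - expected.keys())   # in the target but not in the snapshot
    mismatched = []
    for key in sorted(expected.keys() & loaded.keys()):
        for field in fields:
            if expected[key][field] != loaded[key][field]:
                mismatched.append((key, field, expected[key][field], loaded[key][field]))
    return {"missing": missing, "unexpected": unexpected, "mismatched": mismatched}


expected = {
    "INV-1": {"customer": "C1", "amount": Decimal("100.00")},
    "INV-2": {"customer": "C2", "amount": Decimal("250.00")},
    "INV-3": {"customer": "C3", "amount": Decimal("75.50")},
}
loaded = {
    "INV-1": {"customer": "C1", "amount": Decimal("100.00")},
    "INV-2": {"customer": "C2", "amount": Decimal("205.00")},
}
result = reconcile(expected, loaded, ["customer", "amount"])
print(result)

# Control totals: Decimal avoids floating-point noise in dollar amounts.
expected_total = sum(r["amount"] for r in expected.values())
loaded_total = sum(r["amount"] for r in loaded.values())
print(expected_total - loaded_total)
```

Because both sides come from the same point in time, any difference reported here reflects the migration itself rather than post-conversion updates.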

8. Zero In on Exceptions

A powerful validation technique is the identification of exceptions/differences that occur from test cycle to test cycle. A central data repository allows data to be captured at any point in time. Once a snapshot is in place, any piece of data that differs from another point in time can be identified. This focuses attention only on the differences, so no time needs to be spent re-validating or re-reviewing unchanged data. This exception-based reporting technique shortens validation time and spares the team the frustration of repeatedly checking the same data.
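Exception-based comparison of two snapshots can be sketched in a few lines of Python. This assumes each snapshot maps a record key to a tuple of its field values; the item data is made up for illustration.

```python
from typing import Dict, List, Tuple

# A snapshot maps a record key to the tuple of field values captured in one cycle.
Snapshot = Dict[str, Tuple]


def diff_snapshots(previous: Snapshot, current: Snapshot) -> Dict[str, List[str]]:
    """Report only what changed between two test-cycle snapshots."""
    added = sorted(current.keys() - previous.keys())
    removed = sorted(previous.keys() - current.keys())
    changed = sorted(k for k in previous.keys() & current.keys() if previous[k] != current[k])
    return {"added": added, "removed": removed, "changed": changed}


cycle_1 = {
    "ITEM-1": ("Widget", "EA", 10),
    "ITEM-2": ("Gadget", "EA", 5),
    "ITEM-3": ("Bolt", "BOX", 100),
}
cycle_2 = {
    "ITEM-1": ("Widget", "EA", 10),
    "ITEM-2": ("Gadget", "CS", 5),
    "ITEM-4": ("Nut", "BOX", 200),
}
print(diff_snapshots(cycle_1, cycle_2))
# {'added': ['ITEM-4'], 'removed': ['ITEM-3'], 'changed': ['ITEM-2']}
```

ITEM-1 never appears in the output, which is the point: unchanged records need no re-review. For very large tables, one option is to store a hash of each row instead of the full tuple and compare hashes.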

There are many other techniques and processes involved in the data migration process that further reduce risk on implementation, but the central data repository is an important one. If you have any questions regarding data migration processes, issues, and ways to reduce data migration risk, email me at steve.novak@definian.com or call me at 773.549.6945.

For more information about data migration repositories, check out this post by my colleague Rachel Kull.

The Making of a Culture

For many of you, today is just another day – August 1, 2019.

For those of us at Definian, the start of August always marks some important milestones:

- Today is the day we welcome in our new class of Associate Consultants, some of the “best of the best” who are starting or continuing their career with us.

- Today is also the first day of our new fiscal year, a chance for us to celebrate what’s new and what’s next for our company over the next 12 months.

- And today is the 34th anniversary of the founding of our company by Jim and Dune Hempleman, a day of intense pride as we celebrate our history and heritage.

We look forward to August 1 every year as it provides the perfect balance of where we have been and where we are going.

While we have evolved as a firm, the one constant over the last 34 years has been the culture of our firm. I believe it is the single most important element behind our longevity and success over the last three decades, and will be the reason we are going to experience unparalleled success and growth in the coming decades.

We all have heard that “Culture eats strategy for breakfast,” and there’s a ton of writing on company culture, from books to blog posts to Tweets and everything in between. There’s no dearth of resources to explore if you want to understand how to build and sustain your company culture.

And don’t kid yourself. Every company has a culture – whether they can articulate it or not. It’s the ethos of the firm, the DNA that makes your company unique. Culture happens over time. It is shaped by management and lived out by the team.

When I joined Definian last year, I spent time exploring and understanding the culture of the firm. There was something special about this company and I quickly realized that my job, as the new CEO, was NOT to change the culture but rather to leverage our unique DNA to help us become an even better version of ourselves, both individually and collectively.

My exploration led me to uncover four key elements of our culture that help make Definian who it is:

1. Grounded in ethics

Jim and Dune founded the company on a Code of Ethics that still guides our work today. The code is simple yet profound:

- Adhere to the highest ethical standards

- Place the client’s interest first

- Serve all clients with excellence

- Maintain a professional attitude

- Preserve the client’s confidence

These are not just words on paper to us. This is our foundation, the rock of our existence. We hire for these ethics. We expect 100% commitment to these ethics. We celebrate and reward them. If one were to list out our core values, ethics would be at the top of that list.

While the concept of being ethical might be considered table stakes to many, that’s unfortunately not the case at so many companies. We are proud of this commitment and stand firmly on the foundation of our Code of Ethics in all that we do.

2. Relentlessly client-focused

Our commitment to our clients and their success is second-to-none. We will do anything within our power to help a client succeed. Whatever it takes, whenever it’s needed – that’s our mantra. Sure, it’s led to late nights, weekends and even less revenue or profit at times. But it’s all in the spirit of serving our clients.

Perhaps the best example of our client focus is the Go-Live bell that hangs in our office. In other companies, a bell is rung when that company has a success (e.g., a new sale is made). At Definian, we ring the bell when our clients have success, namely in the form of a Go-Live on a technology implementation. We celebrate their success, not ours. It’s a very tangible reminder that when the client is successful, all the other pieces seem to fall into place.

3. Committed to impeccable delivery

We own a critical task in any system deployment – to migrate data from an old, legacy system into a new target system. It’s perhaps the riskiest part of any technology implementation: Gartner states that 83% of all data migration projects either fail outright or cause significant cost overruns.

If we don’t deliver on our commitments, tens of millions of dollars of investment can be wasted. The pressure is on, all the time, to deliver a successful migration with accuracy and timeliness.

And deliver we do! Every single one of our projects over the last 34 years has gone live – yep, 100% success rate. That’s a lot of bell ringing! More importantly, it means that our clients know they can count on us to deliver on our promises and commitments, eliminating that portion of project risk for them.

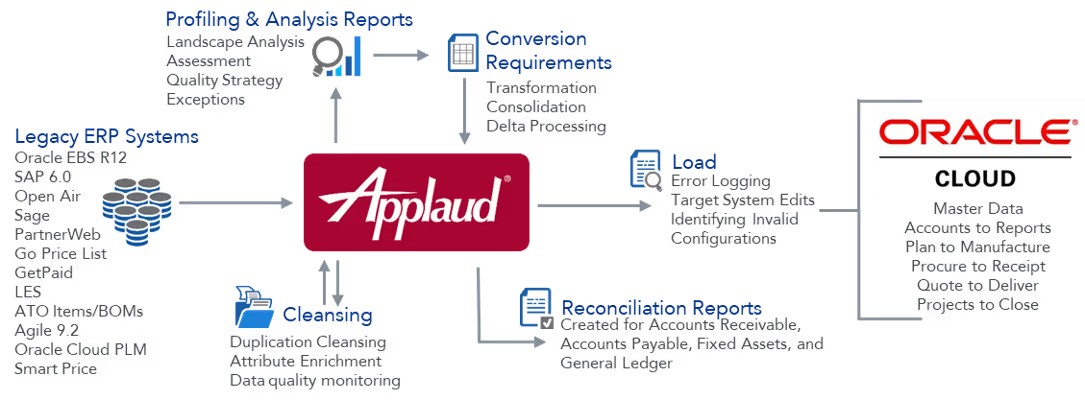

There are a number of reasons why we have this impressive track record – from our incredible team of consultants to Applaud®, our proprietary software that is optimized for this work, to our decades of experience in anticipating and solving complex data migration challenges.

Our culture is built upon delivering excellence and success in every project, every time.

4. Rooted in fun

There is a heavy dose of fun at Definian that I have not experienced other places that I’ve worked and led.

I am not talking about the artificial fun that may come with a foosball table or beer fridge (although both ideas have been mentioned here!). I am talking about genuinely enjoying being with each other and how that manifests itself on a daily basis.

From the regular lunch gathering in the kitchen, to the sudden appearance of a miniature Ed Grimley in a remote location, to our annual lake party, our team just enjoys being with each other, and it shows in their interaction and engagement.

The laughter, the challenges, the candor and the camaraderie. It’s hard to describe, yet palpable when you experience it. It’s the final piece that makes us unique.

Those four characteristics, when looked at individually, could apply to many companies and cultures in the business world. But the unique combination of all four of them, honed over the last 30+ years by Jim, Dune and the team, makes Definian who we are today … and who we will be tomorrow.

I mentioned that we don’t celebrate our own accomplishments or “ring our own bell” very often … or very well. That said, I am incredibly proud that our company was recently recognized as one of the “Best and Brightest Companies to Work For®” in Chicago this year. It’s a reflection of the culture described above and a significant validation of how special this place is.

Every once in a while, it’s okay to step back and celebrate what makes your company awesome. Today is that day for me.

Happy August 1, everyone.

#WeAreDefinian

5 Hacks to Accelerate Oracle Service Request Resolution

A Service Request (SR) is a tool within Oracle Support that allows customers and consultants to request fixes for issues encountered in Oracle software. Project teams on Oracle Cloud ERP (Enterprise Resource Planning) implementations rely on the timely support of Oracle engineers to work through new issues and use cases as cloud adoption continues to expand. Skillful use of Service Requests will ensure speedy resolution to issues, minimize project risk, and contribute to the software that will drive your business forward for decades to come. Continue reading for 5 tips guaranteed to accelerate Service Request resolution.

Gather Screenshots

Screenshots help Oracle engineers recreate the issue you faced. Collecting an image of each successive step in your process has a few benefits:

- This process helps you slow yourself down, which might make the issue obvious to you and could eliminate the need to log an SR

- There could be multiple ways to navigate to the same screen or execute the same process in Oracle - the issue you faced could be specific to the series of steps you followed

- Oracle engineers frequently request screenshots; gathering them early will avoid unnecessary back-and-forth

- Your screenshots might include key or ID data that is useful for the engineer's backend research (like a 'Request ID')

Gathering screenshots is easy. Windows, for example, lets you capture a screen clipping by pressing Windows + Shift + S. On a Mac, Shift + Command + 4 captures a selected area, and Shift + Command + 3 captures the entire screen.

Articulate the Business Impact

The business impact of your issue will help the Oracle engineer understand the importance and reasoning behind your SR. When thinking about business impact, consider the following:

- Mention if the issue impacts a test cycle or design sessions, or is on the critical path

- Numbers help - how many users are affected, is there a dollars/hours impact

- Explain how the issue impacts a critical business function

Additionally, by explaining the business impact, you give the Oracle engineer greater ability to find an alternate solution that achieves the desired result without encountering the reported issue.

Collect Files

Including files is another way that you can guarantee the engineer has enough information to begin resolving a problem immediately. Consider including the following:

- Any log files that are output by Oracle

- Sample data, especially if the issue impacts a data load

- Copies of any automated emails from Oracle (like those initiated by a workflow)

These files can be difficult to search for and collect after the fact. Depending on Oracle settings, sometimes the logs or reports disappear after a set number of days. One word of caution: avoid sharing sensitive data and realize that Oracle uses a global team - this could have GDPR (General Data Protection Regulation) implications if you were to share the data in a manner that violates their guidelines.

Understand the Severity

Without getting too granular, these are the most important severity levels for you to be aware of:

- Severity 1 - constitutes a complete outage, particularly for production or cutover issues.

- Severity 1, 24/7 - same as above, with 24-hour support expected from both Oracle Support and the requesting organization

- Severity 2 - severe loss of service without known workarounds

A word of caution: 24/7 support should only be requested if your organization is also willing to staff it 24/7. Additionally, understand that the ticket will need to be handed off from one engineer to another between shifts.

Request a Meeting

Complex technical issues can be difficult to explain in words. For issues like these, an Oracle Web Conference (OWC) can help by virtually bringing you shoulder to shoulder with an Oracle engineer. Be sure to mention that you are open to an online meeting.

- Preemptively share your availability to avoid a scheduling back-and-forth

- Be ready to share your screen so that the engineer can see how the issue is manifesting on your end

- Add a personal touch - give the engineer a chance to meet you and learn more about your organization

For me, Oracle Web Conference (OWC) has been one of the surprising joys of working on Oracle Cloud ERP implementations. Each meeting has brought me in touch with someone who cares deeply about Oracle products and their role in the customer's success. Best of luck to you and your organization as you continue your Oracle Cloud ERP implementation!

The Challenges of Supporting Cloud Migrations

Cloud computing is by no means a fledgling industry. Its roots go back as far as 2006, and some argue even further. Regardless, as an industry that is more than a decade old, it is not new. If cloud computing is still in its infancy, especially in the ERP space, that is less a statement about its age or the number of vendors with enterprise solutions, and more about its maturity and the number of challenges it presents. Truth be told, cloud computing is like a hormonal teenager that thinks it is ready to take on the world and make its own decisions without any parental guidance/supervision. This immaturity has spurred Definian to develop solutions that enable organizations to be successful while the Cloud solutions grow up.

One of my Senior Engineers, who is also one of the longest standing employees in the company, made a very interesting observation:

“Regarding the relative immaturity of cloud technology, and the multitude of very different methods of importing/exporting data, it reminds me of the heyday of mainframes. One reason that Applaud was written to support so many DB and Import file types, and so many data field formats, is the wide variation across various mainframes (and mini-computers) which evolved semi-haphazardly over so many decades”

Most providers of cloud-based services offer a robust feature set for their respective industries. That isn’t the real problem when it comes to maturity. The real problem comes from a data migration perspective and the requirement that adopters migrate their existing data into this new cloud-based solution.

Definian has been fortunate enough to be trusted by many clients looking to migrate their legacy data into several cloud-based solutions. While I can’t speak to the more intimate aspects of those projects, I can speak from a unique position. In each of these projects, it falls upon my team to develop custom solutions to many of the more challenging roadblocks. Through these experiences, we have gained first-hand knowledge of the fractured and clunky means of uploading and validating data within these solutions, and we have developed tools to address them.

So, what are some of the challenges?

Import/Upload

Possibly the most important challenge is importing/uploading legacy data into the fresh new instance of any cloud-based solution.

Looking at 5 different cloud-based solutions, chances are they all have different ways of importing/uploading data. One might be through web services. Another may provide an Excel template-based import. Or the vendor may require you to stage your data in files and allow them to load your data. Whatever their method, the lack of a standard across platforms, or even within a platform, presents the challenge of additional development to load the legacy data. Given the web-based nature of most of these import/upload interfaces, there are typically concerns over performance and internal limitations because cutover times need to be fast, and it's not acceptable for web-based interfaces to time-out while the data is being processed.

Take Oracle ERP Cloud applications as an example. They have multiple modules that represent various business operations. Some use Excel templates for importing. Some use a method called ADF (Application Development Framework). Others require the use of various web services, many of which are used in a record-by-record fashion or with some batch limitation. Another example is Workday, which has a method that takes an Excel spreadsheet and converts it into XML before it is ready for import.
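Where a web service only accepts a limited number of records per call, the loader has to split the data into batches and keep going when one batch fails. The Python sketch below shows that pattern under stated assumptions: the batch size and `fake_submit` are hypothetical stand-ins and do not reflect any specific Oracle ERP Cloud or Workday interface.

```python
from typing import Callable, Iterator, List, Sequence, TypeVar

T = TypeVar("T")


def chunked(items: Sequence[T], size: int) -> Iterator[Sequence[T]]:
    """Yield consecutive slices of at most `size` items."""
    if size <= 0:
        raise ValueError("size must be positive")
    for start in range(0, len(items), size):
        yield items[start:start + size]


def load_in_batches(records: Sequence[T], batch_size: int,
                    submit: Callable[[Sequence[T]], None]) -> List[str]:
    """Submit records batch by batch; collect failures instead of stopping the run."""
    failures: List[str] = []
    for batch_no, batch in enumerate(chunked(records, batch_size), start=1):
        try:
            submit(batch)
        except Exception as exc:  # a real loader would catch the vendor's specific errors
            failures.append(f"batch {batch_no} ({len(batch)} records): {exc}")
    return failures


# Hypothetical stand-in for a vendor web-service call with a batch limit.
submitted: List[int] = []


def fake_submit(batch: Sequence[int]) -> None:
    if 5 in batch:
        raise RuntimeError("record 5 rejected")
    submitted.extend(batch)


print(load_in_batches(list(range(10)), 4, fake_submit))
# ['batch 2 (4 records): record 5 rejected']
```

Collecting failures per batch keeps a cutover moving while still leaving a precise list of what needs to be resubmitted.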

This inconsistency within and across Cloud applications increases the difficulty for clients and represents one aspect of the immaturity of the solutions.

Data Validation/Verification

Given some of the complex rules necessary to transform the legacy source data into the expected format of the target system, robust data validation/verification tools are necessary for data migrations to be successful.

Blindly uploading the data without verifying it is foolhardy. Unfortunately, many of the solutions don’t have user-friendly ways of querying the data. This makes it difficult to develop validation reports that can be automated and plugged into the conversions so that problems can be caught early and dealt with. One example is how Oracle recommends querying and validating data within its applications.

BI Publisher is Oracle’s recommended tool for querying data within Oracle ERP Cloud, and it is very powerful and quite useful for one-off reporting. To leverage this tool for automated validation, my team developed a scriptable interface for BI Publisher. This enables us to execute targeted queries that isolate portions of the total dataset. With this interface, validation can be done more efficiently and integrated more easily into a conversion process that reports inconsistencies in the target data.

Bugs/Service Requests/SLAs

Bugs are the bane of every project and enterprises today tend to release solutions that are much less stable than they should be. This is generally due to some internal cost/benefit calculation that suggests polishing it off is more costly than rolling it out and working through the bugs as they come in. The problem with this model is that the service areas become inundated with requests and the developers find themselves buried in a mountain of backlog.

Cloud-based providers are no different. We have seen many projects that result in a backlog of bug reports and delays due to either:

- Faulty programming

- Poorly planned integration

- A general lack of knowledge/documentation of how a solution “actually works” as opposed to how it is “believed to work”

- Templates that don’t match the processes they belong to

Every project will encounter bugs; the question is merely how much padding needs to be added to the timeline to account for the resulting delays. Experience with each solution is the only way to guide that decision.

Cryptic Error Reporting

Along with the bug/stability challenges listed above are the unclear/short/non-descriptive/cryptic error messages that frequently accompany the bugs and crashes.

Good programming results in clear, user-friendly error messages that help the user understand what went wrong and what to do to rectify the problem, or that at least point to where the needed help can be found. Unfortunately, the model that has been standardized by the tech industry titans is not one of clear, descriptive, and informative error messages.

Today, cloud-based solutions continue to follow that model. Generic error messages like “An internal error occurred” or “A parsing error occurred” or “An error occurred contact your system administrator” can be seen throughout cloud-based solutions. Generic error messages like these leave no real course of action other than submitting a ticket into the queue, waiting, or moving on to something else. The tools that my team has built, together with Definian’s institutional knowledge, decipher many of these types of errors. However, these generic messages illustrate the level of maturity of some of the cloud solutions.

Bottom line, it is important to plan on being flexible with timelines and order of operations so that when these problems occur, the project isn’t crippled or faced with long delays.

Final Thought

There is no doubt that cloud solutions are powerful and will continue to be where the market heads. As various industries expand into this arena, it is important to keep the above challenges in mind. Until the maturity of these solutions catches up with the demands of the industries implementing them, one must be prepared to pivot when necessary and/or shift resources toward finding creative workarounds as roadblocks arise.

Pre-Validation Reports: The Secret to Alleviating Data Conversion Anxiety

It's time for go-live! As cut-over approaches, the "this is real" feeling sinks in. The project plan, quality of data, migration programs, and solution readiness are all examined under a microscope. With the weight of go-live, the team knows that despite months of hard work, the project success is ultimately determined by a few short days of carefully-coordinated activities. Nobody wants any surprises to pop up; everyone wants the new system to work as expected. Anxiety levels ramp up, and suddenly everyone second-guesses themselves.

Will my primary vendor convert? Will sales orders get shipped twice? How many open purchase orders are left in the system? Are we missing any salesperson configurations? Will general ledgers get hit twice when we age open invoices?

No matter how many test cycles have been completed or how well-prepared the team is, these questions will be asked. However, one tool preemptively answers these questions, calms nerves, and creates go-live confidence: the pre-validation report. Pre-validation reports identify data quality issues, show what the data will look like after conversion, and provide high-level metrics regarding go-live readiness.

Getting Ahead of Data Issues

One major benefit of pre-validation reporting is the identification of data issues and load errors before upload into the target application. By identifying data issues early, the team is afforded time to address and reconcile any records that might fall out during a load. Additionally, since pre-validation reports can be run at any stage of the project, consistent generation prevents surprises, ensuring that the project’s data quality strategy is properly executed, and the data is go-live ready.

What’s Reported

The reported data issues on pre-validation reports typically fall into three main categories: critical error, warning, and informational.

Critical errors are typically the highest priority issues that need to be resolved and indicate one of two severe consequences. The first is that the data will outright fail to load, either for a specific record or the entire data set. Alternately, the data will load, but introduce poor data quality situations that are technically allowed but decrease the efficiency of the new solution or prevent the data from being used at all. Examples of this include missing configurations in the target application, suppliers with invalid payment terms, salespersons missing in the target, taxpayer ID duplicated across multiple vendors, and non-purchasable items on a purchase order.

Warning messages flag situations that will not cause a data conversion failure, but should still be corrected. Warnings are typically data quality issues that are allowed into the new solution, but should be reviewed and addressed to increase the effectiveness of the solution and improve overall data quality. Examples of warnings include gaps in cross references where a default value needs to be used, when non-critical data needs to be dropped such as duplicate phone numbers or email addresses, and when address standardization cannot be utilized.

Informational messages indicate both situations that will occur during the conversion and metrics about the data that will be converted. These messages provide a solid sanity check and an opportunity to validate the data before it’s too late, that is, before the data has been loaded. Examples of informational messages include notices of record creation or consolidation, which help with record count auditing.
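The three categories map naturally onto a small rule engine. The Python sketch below is one possible shape, assuming each rule inspects a single record and returns at most one finding; the rules, the `VALID_TERMS` set, and the vendor data are hypothetical examples modeled on the issues listed above, not a description of Definian’s actual reports.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Iterable, List, Optional


class Severity(Enum):
    CRITICAL = "critical error"
    WARNING = "warning"
    INFO = "informational"


@dataclass
class Finding:
    record_id: str
    severity: Severity
    message: str


Rule = Callable[[dict], Optional[Finding]]

VALID_TERMS = {"Net 30", "Net 60"}  # hypothetical payment terms configured in the target


def invalid_payment_terms(rec: dict) -> Optional[Finding]:
    if rec["terms"] not in VALID_TERMS:
        return Finding(rec["id"], Severity.CRITICAL, f"invalid payment terms {rec['terms']!r}")
    return None


def duplicate_emails(rec: dict) -> Optional[Finding]:
    if len(rec["emails"]) != len(set(rec["emails"])):
        return Finding(rec["id"], Severity.WARNING, "duplicate email addresses will be dropped")
    return None


def consolidated(rec: dict) -> Optional[Finding]:
    if rec["merged_from"]:
        return Finding(rec["id"], Severity.INFO, "consolidated from " + ", ".join(rec["merged_from"]))
    return None


def run_prevalidation(records: Iterable[dict], rules: List[Rule]) -> List[Finding]:
    """Apply every rule to every record and collect the findings."""
    findings: List[Finding] = []
    for rec in records:
        for rule in rules:
            finding = rule(rec)
            if finding is not None:
                findings.append(finding)
    return findings


vendors = [
    {"id": "V1", "terms": "Net 30", "emails": ["a@example.com"], "merged_from": []},
    {"id": "V2", "terms": "Net 15", "emails": ["b@example.com", "b@example.com"], "merged_from": []},
    {"id": "V3", "terms": "Net 60", "emails": [], "merged_from": ["V7", "V8"]},
]
findings = run_prevalidation(vendors, [invalid_payment_terms, duplicate_emails, consolidated])
counts = Counter(f.severity for f in findings)
for severity in Severity:
    print(f"{severity.value}: {counts[severity]}")
for f in findings:
    print(f.record_id, f.severity.value, f.message)
```

Cross-record checks, such as a taxpayer ID shared by several vendors, need a grouping pass over the whole data set rather than a per-record rule like these.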

Know What the Data Will Look Like Post-Conversion

Go-live often entails a list of requests regarding how the data will look in the target application. Pre-validation reports are a helpful tool for showing the business previews of converted data, such as customers, sales orders, or items. These previews are even more critical in cloud applications, such as Oracle’s, SAP’s, or Microsoft’s SaaS solutions, where it is impossible to back data out and often difficult to correct incorrect datasets.

There are typically two types of preview reports: summary metrics and detailed data views. Summary metrics contain statistics about important fields and overall record auditing. They allow the business to see how many records exist in legacy, how many are excluded (and for what reason), how many are created to enrich the data, and the final expected load count. Examples of important data sets include distinct sales order types, on-hand quantities, open accounts receivable invoices (in quantity and amount), and general ledger balance totals.
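The record-auditing side of a summary report reduces to a simple identity: legacy count, minus exclusions, plus created records, equals the expected load count. A minimal Python sketch, assuming hypothetical exclusion rules for sales orders and a hypothetical count of records created during enrichment:

```python
from collections import Counter
from typing import Callable, Dict, List


def record_audit(legacy: List[dict], exclusions: Dict[str, Callable[[dict], bool]],
                 created: int) -> dict:
    """Summarize legacy, excluded (by reason), created, and expected load counts."""
    excluded: Counter = Counter()
    kept = 0
    for rec in legacy:
        # The first matching exclusion rule supplies the reason, so each record counts once.
        reason = next((name for name, rule in exclusions.items() if rule(rec)), None)
        if reason is None:
            kept += 1
        else:
            excluded[reason] += 1
    # Expected load = legacy - excluded + created.
    return {"legacy": len(legacy), "excluded": dict(excluded),
            "created": created, "expected_load": kept + created}


orders = [
    {"id": 1, "status": "OPEN", "open_qty": 5},
    {"id": 2, "status": "CLOSED", "open_qty": 0},
    {"id": 3, "status": "OPEN", "open_qty": 0},
    {"id": 4, "status": "OPEN", "open_qty": 2},
]
rules = {
    "closed": lambda r: r["status"] == "CLOSED",
    "fully shipped": lambda r: r["open_qty"] == 0,
}
print(record_audit(orders, rules, created=1))
# {'legacy': 4, 'excluded': {'closed': 1, 'fully shipped': 1}, 'created': 1, 'expected_load': 3}
```

Order 2 matches both rules but is counted only under "closed", because rules are checked in order; this keeps the exclusion totals summing to the true number of dropped records.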

Detailed data views allow the business to sort and find information on any record or field in question. Large datasets can be segmented or filtered to increase usability, and the views will also show the legacy data matched to its target. This type of information is key for complicated and business-critical “dollar amount” conversions such as general ledger balances and asset depreciation reconciliations.

Seeing the data at both summary and detail levels alleviates anxiety and provides crucial insight. The team can trace key data like on-hand, open orders, and customers through the data transformation and prove to the business that nothing will be lost.

Boost Project Leadership Confidence & Prevent Project Timeline Slippage

It is standard for the PMO to receive consistent feedback on the project status so that they can gauge go-live readiness for an implementation. The readily-available metrics within the pre-validation reports provide visibility to the data migration track, and allow the PMO to increase magnification on the data and make predictions on the success of the data conversions. Pre-validation reports provide management with the tools to see the summary statistics and conversion previews; anxiety can be instantly alleviated with positive reports, or if there are issues, resources can be assigned to focus on resolution before deadlines are missed and timelines slip.

In addition to boosting the team’s confidence in the data quality and conversion processes, a comprehensive pre-validation reporting process can dramatically reduce project implementation time and conversion cycle load times. Time (and budget!) is saved whenever issues can be preemptively identified. When Definian joins projects that are struggling with data, one of the most critical components we implement is a pre-validation reporting process. Once this process is in place, not only do projects stabilize, but we have seen cutover cycles drop by up to 40% and the amount of data and business units that can go live simultaneously increase.

If you have any questions regarding data quality, data conversions, and ways to reduce risk for large-scale business system implementations, email me at steve.novak@definian.com or call me at 773.549.6945. I have spent the past 20 years helping some of the world’s most recognized brands focus on what makes them great by removing the riskiest obstacle of their transformation and would like to do the same for you.

Accelerating the Journey from Mainframe to the Cloud

The Challenge

The nature of software implementation is transformation. For institutions, governments, and enterprises that rely heavily on mainframe systems, this transformation begins by confronting their own legacy data landscape, attempting to understand the technology that shaped it, and recognizing that enterprise software is only as good as the data it runs on. Bridging the vast gap between mainframes and the Cloud requires a complex and comprehensive data conversion and migration effort. Issues handling data will result in project delays, cost overruns, and resource burnout. If the migration from mainframe to the Cloud is incomplete or inaccurate, end users will lose confidence and fail to adopt the new system. Definian’s mission is to remove data migration risk, and our team has identified the following mainframe-specific technical considerations:

- Mainframe data must be converted from its native EBCDIC form to readable ASCII while correctly handling other complexities like packed-decimal formatting, binary fields, repeated segments, and Julian dates. Simply reading the data becomes its own conversion project before any transformation work can begin.

- COBOL programming is inherently slow due to its verbosity, lack of structure, and poor modularity. As such, it is ill-equipped to handle the frequent specification changes and necessary short turnaround time during an implementation.

- Poor data quality abounds on mainframe systems because historically, they have not included the built-in validations and edits common on modern systems. This results in missing, inconsistent, and invalid data.

- The difficulties of COBOL programming together with poor data quality result in a mysterious and problematic data landscape – one that is difficult to understand in any comprehensive way. Consequently, programmers are forced to rely on incorrect assumptions, instead of facts, when developing conversion code.
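To show what "simply reading the data" involves, here is a hand-coded Python sketch of three of the decodings mentioned above. It assumes the US/Canada EBCDIC code page (Python’s built-in `cp037` codec), COBOL COMP-3 packed-decimal fields whose implied decimal places come from the copybook, and a seven-digit YYYYDDD Julian date; real code pages and date layouts vary by installation, and this is an illustration of the problem, not how Applaud works.

```python
import datetime
from decimal import Decimal


def ebcdic_to_text(raw: bytes, codepage: str = "cp037") -> str:
    """Decode EBCDIC bytes with one of Python's built-in EBCDIC codecs."""
    return raw.decode(codepage)


def unpack_comp3(raw: bytes, scale: int = 0) -> Decimal:
    """Decode a packed-decimal (COMP-3) field: two digits per byte, sign in the last nibble."""
    if not raw:
        raise ValueError("empty packed-decimal field")
    nibbles = []
    for byte in raw:
        nibbles.extend((byte >> 4, byte & 0x0F))
    *digits, sign = nibbles
    if any(d > 9 for d in digits):
        raise ValueError(f"invalid packed-decimal digit in {raw.hex()}")
    value = int("".join(map(str, digits)))
    if sign in (0x0B, 0x0D):                      # B/D: negative
        value = -value
    elif sign not in (0x0A, 0x0C, 0x0E, 0x0F):    # A/C/E/F: positive or unsigned
        raise ValueError(f"invalid sign nibble {sign:X}")
    # The implied decimal point (e.g. PIC S9(3)V99 -> scale 2) comes from the copybook.
    return Decimal(value).scaleb(-scale)


def julian_to_date(yyyyddd: int) -> datetime.date:
    """Convert a YYYYDDD Julian date (year + day of year) to a calendar date."""
    year, day_of_year = divmod(yyyyddd, 1000)
    if not 1 <= day_of_year <= 366:
        raise ValueError(f"invalid day of year in {yyyyddd}")
    result = datetime.date(year, 1, 1) + datetime.timedelta(days=day_of_year - 1)
    if result.year != year:                       # day 366 in a non-leap year
        raise ValueError(f"invalid day of year in {yyyyddd}")
    return result


print(ebcdic_to_text(b"\xc1\xc3\xd4\xc5"))    # ACME
print(unpack_comp3(b"\x12\x34\x5c", scale=2))  # 123.45
print(unpack_comp3(b"\x00\x12\x3d"))           # -123
print(julian_to_date(2019213))                 # 2019-08-01
```

Every field in a record layout needs the right one of these treatments, which is why reading mainframe files by hand quickly turns into its own project.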

Before the new system can go-live, data in the legacy systems must be consolidated, cleansed, converted, and transformed. Without the proper tools and approach, IT staff is tasked with clunky COBOL or RPG programming, file manipulations, and spreadsheet workarounds to prepare data for the Cloud. Consequently, less time is committed to training on the new system and other value-add activities like data quality initiatives, report building, and integrations.

Definian In Action: One of the world’s largest retailers wanted to implement a modern solution for Fixed Asset accounting. However, a lack of understanding of their legacy mainframe solution was a major hurdle. Outside of COBOL copybooks, virtually no documentation existed, and no one was familiar with the technical workings of the system beyond running the monthly reports. Definian leveraged the Applaud® data migration platform to reverse engineer critical functionality, reveal how depreciation and appreciation rules were being applied, and develop a transformation program for 1 million assets.

The Tools

Definian’s approach is powered by our exclusive data migration software, Applaud. Applaud accelerates every step of the data conversion process using one team and one tool for data extraction, profiling, analysis, cleansing, transformation, and reconciliation. Leveraging our software and decades’ worth of experience solving the world’s toughest data challenges, we circumvent the key problems specific to mainframe data migrations.

- Built-in conversion tools give our consultants the ability to read EBCDIC data without having to write a single line of code. Immediate access to data saves time and money, allowing research and conversion development to ramp up sooner in the project.

- Applaud’s data repository quickly accommodates mainframe data sources making it possible to analyze mainframe files in relation to other sources in the data landscape, including ones that the mainframe system has never interfaced with. This makes formerly unsolvable problems – like duplicated master data across disparate systems – finally solvable.

- Comprehensive data profiling and analysis tools provide visibility into the mysteries buried in mainframe systems, leading to more focused requirements gathering efforts later in the project.

These tools have been optimized for the complex challenges of data migration. Naïve approaches rely on hand-coded extracts from individual mainframe files, conversion programs that are siloed by source system or functional area, and COBOL programmers who are struggling to support data migration on top of their primary duties and training for the new system. By contrast, Definian uses Applaud to eliminate error-prone and laborious hand-coding, provide a data repository with a holistic view of the data, and free up resources across the project.

DEFINIAN IN ACTION

A $22.5 billion industrial conglomerate needed to shut down a mainframe system that cost $200,000 a month to maintain. The last remaining mainframe resource was desperate to retire, but after weeks of attempts, issues converting binary and packed fields had left him and his entire team at an impasse. Definian took the lead and directly extracted, converted, and loaded the data into the target warehouse in half the expected time. This saved the company hundreds of thousands of dollars and allowed our counterpart to retire triumphantly.

The Results

Implementation is not just about getting to the Cloud. It’s about getting there and building a better enterprise in the process. Definian’s involvement allows the rest of the project team to focus on the future of their organization rather than being slowed down by data concerns and the difficulties of dealing with aging IT infrastructure and applications. By having an approach that immediately addresses the technical challenges inherent to mainframes, Definian can quickly graduate to impactful, value-adding activities as part of data conversion.

Every implementation is unique, but in each case, quality data makes the difference between failure and resounding success. Definian’s ability to directly extract and profile EBCDIC data without additional resources or mainframe programming accelerates all downstream efforts and provides a 360-degree view of the data landscape. Direct benefits include merging duplicate vendors so that better payment terms and volume discounts can be negotiated, identifying inaccurate payroll deductions and missing benefits so that employees are served more fairly, and automating address cleansing to stop wasting revenue on undeliverable mail. Definian’s proven approach and the Applaud platform deliver a predictable, repeatable, and highly automated process that ensures the data migration is delivered on time, on budget, and with the highest quality data.

Project Snapshot: Oracle ERP Cloud Migration for a $4.4B Data Center Equipment and Services Provider

Challenges

- Client needed to move from their decades-old on-premises solutions to the new paradigm of the Cloud.

- Constantly changing configurations and future state business requirements.

- Inconsistent and changing Oracle Cloud Security.

- Client’s lack of legacy technical resources due to cutbacks.

- Shifting timelines.

- Oracle Cloud planned and unplanned downtime.

- Missing data load functionality and bugs within Oracle ERP Cloud.

- Multiple concurrent projects (Oracle ERP Cloud, PLM Cloud, CPQ Cloud, HCM Cloud, Sales Cloud, and Microsoft Dynamics 365) with inter-dependencies.

- Significant numbers of duplicate Sales Cloud Prospects, Customers, and Suppliers within and across the legacy systems.

- The Oracle Trading Community Architecture (TCA) structure and new business requirements necessitated a complete restructuring of legacy customer data.

"I wanted to one more time say thanks for the professionalism, commitment to excellence, and extraordinarily hard work demonstrated by every member of the Definian team. Definian provided an exceptional value … and I personally enjoyed working with your team."

— ERP Program Manager

Key Solutions

- Created actionable reports to help the client better understand their legacy data landscape, define requirements, and identify legacy data issues.

- Worked directly with Oracle Engineers to jointly troubleshoot and help develop new load processes.

- Developed and maintained a detailed project plan that tracked dependencies for every step in the data conversion project cycle.

- Worked with the Global MDM team to clearly define matching criteria and auto-merge rules so that duplicate Customers, Suppliers, and Sales Cloud Prospects were merged automatically. This reduced the time spent reviewing the high volume of duplicate records while still securing the business’s sign-off on how duplicates were resolved.

- Leveraged Applaud’s ability to create customized reconciliation reports that tracked records from the legacy aging reports, through the conversion process, and into the final Cloud loads. This ensured that the client had a full financial reconciliation between the legacy and target systems in preparation for go-live.
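Matching criteria and auto-merge rules like those described above can be expressed as a small, ordered rule set. The Python sketch below is illustrative only: the `Party` record, the suffix list, the rule order, and the 0.92/0.80 similarity thresholds are all hypothetical, and `difflib.SequenceMatcher` stands in for whatever matching engine an MDM team actually uses.

```python
import re
from dataclasses import dataclass
from difflib import SequenceMatcher
from typing import Optional

# Hypothetical list of legal-entity suffixes ignored when comparing names.
LEGAL_SUFFIXES = {"INC", "LLC", "LTD", "CO", "CORP", "CORPORATION", "COMPANY"}

@dataclass
class Party:
    source: str                   # legacy system the record came from
    name: str
    postal_code: str
    tax_id: Optional[str] = None

def normalize_name(name: str) -> str:
    """Uppercase, replace punctuation with spaces, and drop legal suffixes."""
    tokens = re.sub(r"[^A-Z0-9 ]", " ", name.upper()).split()
    return " ".join(t for t in tokens if t not in LEGAL_SUFFIXES)

def match_decision(a: Party, b: Party,
                   auto_merge_at: float = 0.92, review_at: float = 0.80) -> str:
    """Classify a candidate pair as AUTO_MERGE, REVIEW, or DISTINCT."""
    # Rule 1: an identical tax ID is treated as a definitive match.
    if a.tax_id and a.tax_id == b.tax_id:
        return "AUTO_MERGE"
    score = SequenceMatcher(None, normalize_name(a.name), normalize_name(b.name)).ratio()
    same_zip5 = a.postal_code[:5] == b.postal_code[:5]
    # Rule 2: near-identical names at the same 5-digit ZIP merge automatically.
    if score >= auto_merge_at and same_zip5:
        return "AUTO_MERGE"
    # Rule 3: similar names that miss the auto-merge rule go to a business reviewer.
    if score >= review_at:
        return "REVIEW"
    return "DISTINCT"
```

With these rules, "Acme Corp." at ZIP 60601 and "ACME Corporation" at 60601-1234 merge automatically, while "Acme Inc" and "Acme LLC" at different ZIP codes are routed to review. Keeping a review band between the two thresholds is what lets high-confidence duplicates merge without manual effort while the business still decides the ambiguous cases.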

Mitigating Risk for a $2.2B Transportation Management Firm

Project Summary

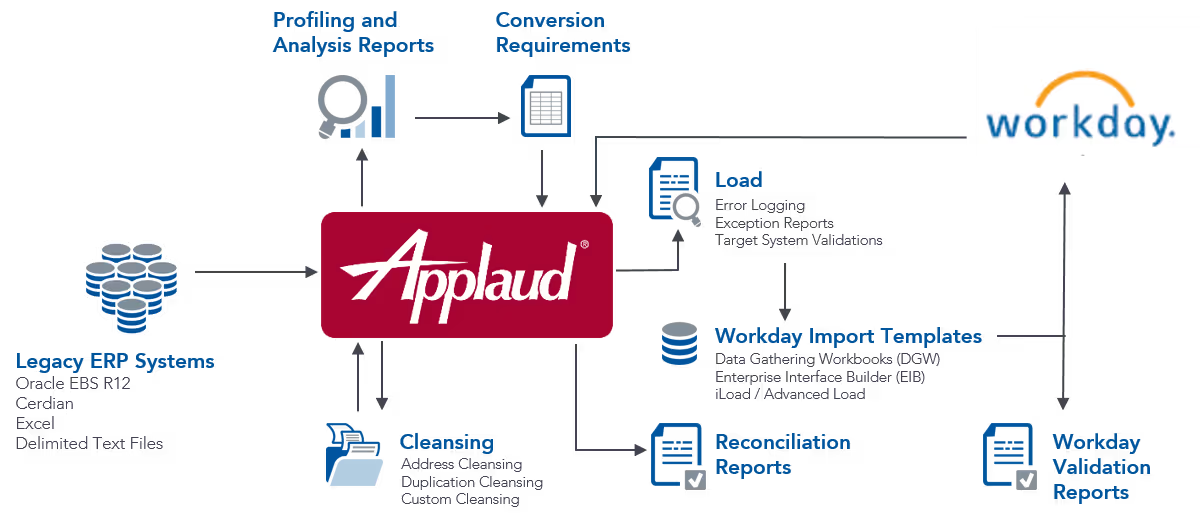

The project scope was to perform the data analysis and migration of all HCM data (HR and Payroll) from a Ceridian system and financial data from an Oracle EBS R12 system into Workday for a $2.2B airport/transportation management firm based in Arlington, VA.

The client implemented Workday to consolidate their HCM and Financial business operations, which were running in separate systems: Ceridian and Oracle EBS R12, respectively. A key limitation was that the legacy Ceridian system offered little transparency, versatility, or reporting capability to support business needs.

The client had a number of concerns regarding their data migration. Definian worked with the organization to address these concerns, ensuring a successful and on-time Workday implementation.

Project Risks

Every ERP implementation has critical risk factors. The areas this organization was most exposed to risk were:

- Lack of knowledge of the legacy Ceridian system and the quality of the data therein.

- Lack of experience with the target Workday system’s requirements.

- Payroll reconciliation between the Ceridian and Workday systems.

Mitigating Risks

Definian mitigated the client’s lack of knowledge around the legacy Ceridian system. To do this, Definian:

- Created meaningful reports to help the client better understand their legacy data and identify legacy data issues.

- Worked with the client to develop a data quality strategy to address legacy data issues, either by data cleanup or data translation rules applied during conversion.

- Implemented programmatic data cleansing, such as employee and dependent address standardization, to ensure incomplete or bad data was corrected prior to Workday go-live.
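Address standardization of the kind mentioned above usually combines normalization with completeness checks. The Python sketch below is a simplified illustration, not the rules used on this project: it uses only a handful of USPS Publication 28 abbreviations, assumes US addresses stored as a hypothetical `line1`/`city`/`state`/`zip` dictionary, and only flags (rather than fixes) missing or malformed values.

```python
import re

# A small subset of USPS Publication 28 suffix, unit, and directional
# abbreviations; a production table would be far larger.
ABBREVIATIONS = {
    "STREET": "ST", "AVENUE": "AVE", "BOULEVARD": "BLVD", "ROAD": "RD",
    "DRIVE": "DR", "LANE": "LN", "COURT": "CT", "SUITE": "STE",
    "APARTMENT": "APT", "NORTH": "N", "SOUTH": "S", "EAST": "E", "WEST": "W",
}
ZIP_RE = re.compile(r"^\d{5}(-\d{4})?$")

def standardize_line(line: str) -> str:
    """Uppercase, drop punctuation, collapse spaces, and abbreviate known words."""
    tokens = re.sub(r"[.,#]", " ", line.upper()).split()
    return " ".join(ABBREVIATIONS.get(t, t) for t in tokens)

def standardize_address(addr: dict) -> tuple[dict, list[str]]:
    """Return a standardized copy of `addr` plus a list of data-quality issues."""
    clean = {
        "line1": standardize_line(addr.get("line1") or ""),
        "city": " ".join((addr.get("city") or "").upper().split()),
        "state": (addr.get("state") or "").strip().upper(),
        "zip": (addr.get("zip") or "").strip(),
    }
    # Any empty component is reported so it can be corrected before go-live.
    issues = [f"missing {field}" for field, value in clean.items() if not value]
    if clean["zip"] and not ZIP_RE.match(clean["zip"]):
        issues.append(f"invalid zip {clean['zip']!r}")
    return clean, issues
```

For example, `standardize_line("123 North Main Street, Suite 4")` yields `"123 N MAIN ST STE 4"`. Returning the issues alongside the cleaned record lets the same run feed both the load file and a cleanup report for the business.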

Definian also needed to navigate the client’s lack of experience with the target Workday system. To do this, Definian:

- Created validation reports tailored to Workday’s requirements for each individual data piece, allowing the client to address invalid data or missing Workday configuration prior to loading the data.

- Developed conversion error reports that allowed the client to identify legacy system issues, such as invalid, missing, or duplicate data. This enabled the client to address these issues early, which was important because data is difficult to back out of Workday once loaded.

- Produced data load files in the exact format Workday required, including embedded XML encoding and any special field formatting rules.
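Pre-load validation and error reports like these boil down to checking each record against the target’s rules before anything is loaded. The Python sketch below is a hypothetical illustration: the required-field list, the `configured_locations` set, and the XML tag name are assumptions, not Workday’s actual load specification. It also shows why XML-bound values must be escaped.

```python
from collections import Counter
from xml.sax.saxutils import escape

# Hypothetical required fields for a worker load; the real list comes from
# the target system's load specification.
REQUIRED_FIELDS = ("employee_id", "last_name", "hire_date", "location")

def validate_workers(records: list[dict], configured_locations: set[str]) -> list[tuple[str, str]]:
    """Return (employee_id, issue) rows for a pre-load validation report."""
    id_counts = Counter(r.get("employee_id") for r in records)
    report = []
    for r in records:
        emp = r.get("employee_id") or "<missing id>"
        # Missing data the target will reject.
        for field in REQUIRED_FIELDS:
            if not r.get(field):
                report.append((emp, f"missing required field {field}"))
        # Values that reference configuration not yet set up in the target.
        location = r.get("location")
        if location and location not in configured_locations:
            report.append((emp, f"location {location!r} not configured in target"))
        # Duplicates that would be hard to back out after loading.
        if r.get("employee_id") and id_counts[r["employee_id"]] > 1:
            report.append((emp, "duplicate employee_id"))
    return report

def xml_field(tag: str, value: object) -> str:
    """Wrap a value in an XML element, escaping &, < and > in the text."""
    return f"<{tag}>{escape(str(value))}</{tag}>"
```

A name such as "Smith & Sons" would break an XML load file if written raw; `xml_field("Legal_Name", "Smith & Sons")` emits `<Legal_Name>Smith &amp; Sons</Legal_Name>` instead.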

Finally, Definian addressed the client’s concern regarding payroll reconciliation between Ceridian and Workday. To do this, Definian:

- Produced reporting tools that allowed the client to fully validate converted historical payroll data, with payroll metrics such as Gross Wages, Net Pay, etc., tied out to the penny.

- Built a complete gross-to-net payroll reconciliation tool that allowed the client to reconcile every line of every paycheck for every employee. This report went beyond Workday’s standard version because it was customized to the client’s exact needs, displaying Ceridian’s payroll data alongside Workday’s.

- Provided the client with complete visibility to potential payroll issues, helping them identify error patterns and correct issues at a systemic as well as employee level before go-live.
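A line-by-line, to-the-penny reconciliation like the one described above can be sketched as a keyed comparison. This Python example is a hypothetical simplification, not the client’s tool: it assumes each paycheck line has already been extracted from both systems and keyed by `(employee_id, check_date, pay_component)`, and it uses `Decimal` so currency amounts compare exactly rather than with floating-point error.

```python
from decimal import Decimal

# Hypothetical key: (employee_id, check_date, pay_component) -> amount.
PayLines = dict[tuple[str, str, str], Decimal]

def reconcile_paychecks(legacy: PayLines, target: PayLines,
                        tolerance: Decimal = Decimal("0.00")) -> list:
    """List every paycheck line whose amounts differ by more than `tolerance`.

    A line present in only one system is compared against zero, so missing
    earnings or deductions surface as variances too.
    """
    variances = []
    for key in sorted(legacy.keys() | target.keys()):
        old = legacy.get(key, Decimal("0"))
        new = target.get(key, Decimal("0"))
        if abs(new - old) > tolerance:
            variances.append((key, old, new, new - old))
    return variances

def totals_by_component(lines: PayLines) -> dict[str, Decimal]:
    """Sum amounts per pay component (e.g. gross wages, net pay) for tie-out."""
    totals: dict[str, Decimal] = {}
    for (_employee, _check_date, component), amount in lines.items():
        totals[component] = totals.get(component, Decimal("0")) + amount
    return totals
```

With a zero tolerance, a one-cent difference in net pay appears as its own variance row, and a deduction that exists in only one system is reported as well. Grouping the variance rows by pay component is one way to spot the systemic error patterns mentioned above.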

Key Activities

- Used Applaud’s extraction capabilities to extract the raw data from the legacy systems into Applaud’s data repository.

- Utilized Applaud’s automated profiling on each relevant column in every one of the legacy systems to assist with the creation of the data conversion requirements.

- Deployed Applaud’s integrated analytics/reporting tools to analyze the legacy data in depth, identifying numerous legacy data issues and running Workday-specific validations that flagged missing or invalid configuration before loads.

- Leveraged Applaud’s ability to develop custom payroll reconciliation reports, allowing the client to compare every line of every paycheck, represented to the business in a meaningful way.

- Leveraged Applaud’s flexibility to create import files in any format required by the target system, including XML files with embedded encoding.

- Used Applaud’s data transformation capabilities to quickly build easily repeatable data migration programs and react rapidly to every specification change.

- Employed experienced data migration consultants to identify, prevent, and resolve problems before they affected the project.
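Automated column profiling, as mentioned in the activities above, typically reports fill rate, cardinality, and the most common values for every column. The Python sketch below shows the idea on rows represented as dictionaries; it is a generic illustration, not Applaud’s profiler, and the statistics chosen are an assumption about what a basic profile includes.

```python
from collections import Counter

def profile_column(values: list, top_n: int = 5) -> dict:
    """Summarize one column: blanks, distinct values, top values, max length."""
    present = [v for v in values if v is not None and str(v).strip() != ""]
    counts = Counter(present)
    return {
        "rows": len(values),
        "blank": len(values) - len(present),
        "distinct": len(counts),
        "top_values": counts.most_common(top_n),
        "max_length": max((len(str(v)) for v in present), default=0),
    }

def profile_table(rows: list[dict]) -> dict[str, dict]:
    """Profile every column that appears in any row (missing keys count as blank)."""
    columns = sorted({column for row in rows for column in row})
    return {c: profile_column([row.get(c) for row in rows]) for c in columns}
```

Even this simple profile surfaces requirement questions early: a `state` column holding both "VA" and "va" shows up as extra distinct values, and a mostly blank `zip` column shows up in the blank count.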

The Bottom Line

The Applaud® Advantage

To help overcome the expected data migration challenges, the organization engaged Definian’s Applaud® data migration services.

Three key components of Definian’s Applaud solution helped the client navigate their data migration:

Definian’s data migration consultants: Definian’s services group averages more than eight years of experience working with Applaud, exclusively on data migration projects.

Definian’s methodology: Definian’s EPACTL approach to data migration projects differs from traditional ETL approaches and helps keep the project on track. This methodology decreases overall implementation time and reduces the risk of the migration.

Definian’s data migration software, Applaud®: Applaud was built from the ground up to address the challenges that occur on data migration projects, allowing the team to accomplish all data needs using one integrated product.

The combined aspects of the Applaud solution were leveraged to meet the challenges of this project.

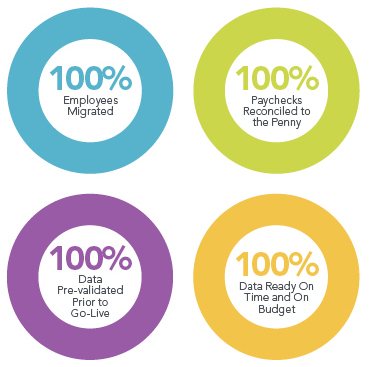

The Results

Bringing Definian into the project drastically reduced the risk of failure. The end result of using Applaud data migration services was a successful implementation. With 100% of converted records successfully loaded, the business could begin running HR business processes in Workday right away. In addition, with a 100% successful historical payroll data conversion and a payroll reconciliation that compared every line of every paycheck and provided exhaustive variance reports, the client began payroll processing in Workday immediately, confident that their data was accurate.