Data best practices for modern organizations

Definian explores practical methods, proven frameworks, and actionable guidance that help teams work smarter with data across the enterprise.

Navigating Compliance and Talent Management Through Effective HCM Data Governance

Best Practices

Data Governance

Case Study

Streamlining HR data for a 30K-employee industrial firm cut new-division onboarding from 6 weeks to 1 while ensuring compliance, accuracy, and scalability amid rapid acquisitions.

Shifting Perspective: Your Data is Not Garbage

Best Practices

Labeling data as garbage stifles potential. Reframing challenges as opportunities leads to refinement and discovery.

Formalizing Data Governance As Part of Your Workday Implementation

Workday

Best Practices

Definian's agile data governance framework accelerates Workday implementations and gives organizations the structure to maintain data clarity and trust long after go-live.

Including Data Governance as Part of your ERP Implementation

Best Practices

Service Offering

After working on dozens of implementations, we find that organizations frequently overlook the implementation as an opportunity to upgrade data governance and fuel long-term data performance.

3 Keys to Drive Your Payroll Validation Success

Best Practices

Digital transformations are a major investment in your organization’s future. We’ve identified 3 keys needed to accomplish an enterprise payroll validation.

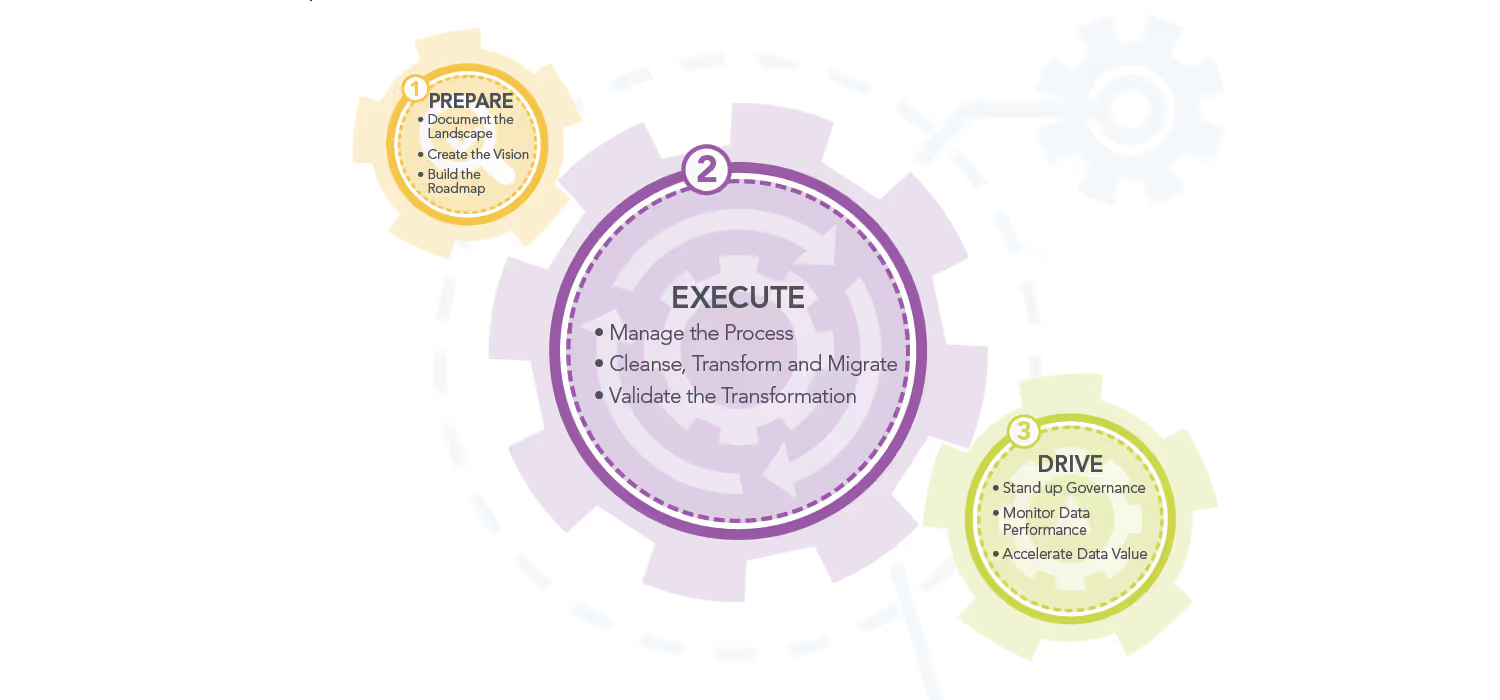

Best Practices Data Migration Services Explained

Best Practices

A comprehensive data migration approach covers every phase, from preparing the target vision through executing the transformation to driving long-term data performance.

What's the Difference Between Data Validation and Testing?

Best Practices

Data validation and testing are both crucial when migrating data to any system. Understanding the difference matters because the two are frequently mistaken for the same thing.

How a Go-Live Dress Rehearsal Ensures Cutover Success

Best Practices

The final test cycle before cutover deserves as much attention as cutover itself, if not more. A successful dress rehearsal is an excellent indication that the entire project team can deliver a successful go-live.

Data Governance Kick-Off Meeting

Best Practices

Kicking off your organization’s data governance implementation with an introductory meeting is an effective way to ensure stakeholder buy-in and set expectations for things to come.

Data Holds Organizations Back

Best Practices

Service Offering

According to McKinsey, organizations spend 30% of their time on non-value-added tasks because of poor data management. Read on to explore why data impacts performance and how Definian can work with you on these issues.

Mastering the Item Master: A Tour de Force Wrangling Unconventional Data

Dynamics

Best Practices

As part of a global rollout of Dynamics 365, the Item Master for a particular cold finishing mill required a dramatic redesign.

7 Barriers to Successful Data Migration

Best Practices

Successful completion of a data transformation and ERP implementation will provide huge advantages to your organization. But before you can realize these benefits, your organization must rise to the challenges ahead.